This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Azure Data Factory is Azure’s cloud go-to data integration service, with 90+ built-in connectors and data transformation capabilities through data flows. The ADF’s power does not lie only in its capacity to connect out of the box to a big number of data stores, but also in its capability to dynamically pass in parameters and by this, create metadata driven pipelines.

In this blog post, we will show exactly this – how to create metadata driven pipelines in Azure Data Factory.

Let’s assume this scenario – you are an ISV (Independent Software Vendors) that needs to integrate data from different end-customer data stores into a unified storage layer on top of which you want to build analytics for internal or external use. Instead of creating a pipeline (with datasets, linked services, activities) per customer with hardcoded values, you can create a generic pipeline that, at runtime, will retrieve the list of data stores to read from and write to.

This approach not only dramatically decreases the number of objects you need to build in your ADF, but also makes the maintainability of the solution much easier – whenever you have a new customer, you can just update your metadata tables without touching the ADF pipeline.

This is enabled by the dynamic content feature that allows you to parameterize attributes. These attributes are stored in a control/ metadata table or file.

In the rest of this blog, we will build the metadata and ADF pipeline and show how all this works end-to-end.

To follow along with the tutorial, you will need to meet these prerequisites:

- Have an existing ADF instance. If you don’t have one, follow the steps here to create it;

- Existing Azure SQL Database instances that will serve as sources;

- An existing ADLS Gen 2 account that will be the sink.

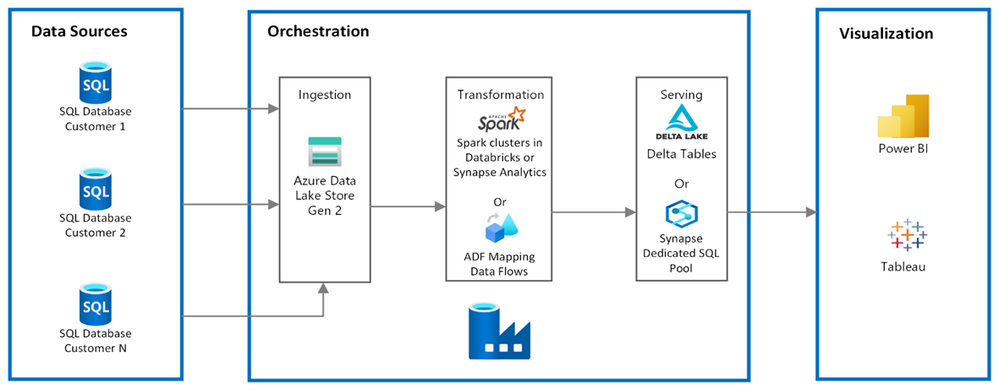

Here is an architecture diagram of the ETL.

In this tutorial, we will be performing the Ingestion part only. If you’re interested to see the entire process implemented in a metadata driven fashion with the help of ADF, please let me know in the comments section.

Dynamic Linked Services and Datasets

Let’s imagine you need to connect to 'N' Azure SQL Databases to copy data from. When hardcoding, it would mean creating N Linked Services, and N datasets only for your source data stores. By parameterizing the Server name and Database, we can use one Linked Service and one Dataset.

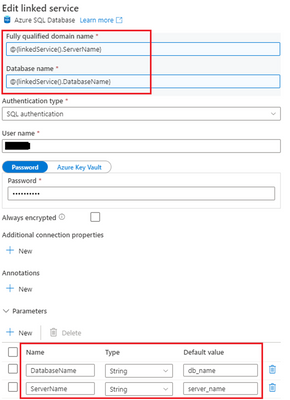

Let’s first create the Linked Service, under Manage -> Connections-> New -> Select the Azure SQL Database type:

Next, create new parameters for the Server Name and Database Name.

In the FQDN section, hover over it and click ‘Add dynamic connect’:

Inside the ‘Add dynamic content’ menu, click on the corresponding parameter you created earlier:

Your Linked Service should look like this:

Next, let’s create the dynamic Dataset:

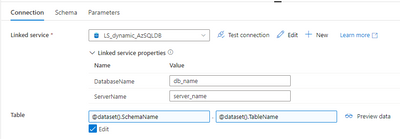

Give the Dataset a name and then, in the Connection tab, choose the Linked Service we created earlier:

Fill in the Linked Service parameters with the dynamic content using the newly created parameters. To use the explicit table names, click the Edit checkbox under the dropdown. Then click the textbox to reveal the Add dynamic content link.

Set it up as follows:

Now, let’s configure our sink datastore, the ADLS Gen 2. We will create, just as on the source side, one Linked Service and one dataset. We will use a directory per customer in the Landing container to ingest data from different customers.

Note: As this is a demo and the resources are short-lived, I provided the Account Key in the Linked service. In a production environment this is not recommended, and you should use Key Vault to securely store and retrieve secrets.

Then, create a dataset of Azure Data Lake Gen 2 type and Parquet file type by following the same steps as for the source. Choose the Linked Service created earlier.

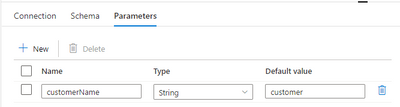

For the directory name, let’s create a parameter to pass this value in at runtime:

The Dataset will look like:

Metadata table

The Metadata table is at the heart of this configuration. In a nutshell, it is the table holding the metadata that needs to be passed into the ADF pipeline.

For this demo, I will store the metadata table in my Azure SQL DB. Depending on the scenario, you can store it in an Admin or Config DB.

For simplicity, I stored both my source and target metadata in the same table:

I wouldn’t recommend doing it in a production environment. You could pass in more attributes and could duplicate data unnecessarily.

Metadata driven pipeline

In this section, we will finally create the pipeline. It will have two main components:

- A Lookup activity – this will fetch the data from our configuration table;

- A ForEach activity – this will take in the values from the Lookup and loop through these to perform the predefined actions, in our case – ingest the data into our ADLS Landing container.

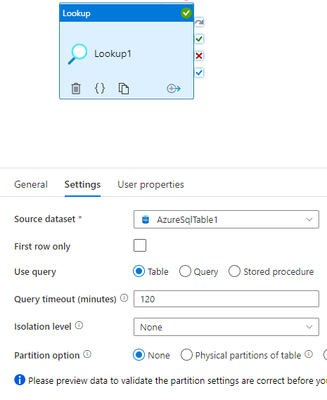

Create a new Pipeline and give it a name. Add a Lookup type activity to the canvas, and point it to your Metadata table. In my case, I am pointing it to my Azure SQL DB -> Config Database ->

Make sure to unselect the ‘First row only’. And Debug your activity to make sure the output looks as expected. In my case, I have my 2 values below:

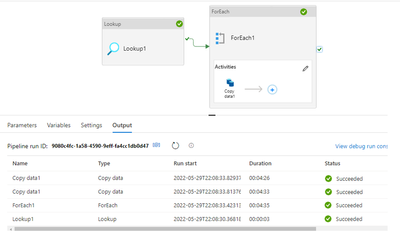

Now, let’s add the ForEach activity to the canvas and connect it on Success to our Lookup. In the Settings tab, click on ‘Add dynamic content’ for the Items field. This is where we will pass the array of values we retrieved in our Lookup activity. You can select this from the predefined options in the editor.

Next, you will need to add the activities that you want to execute within the loop. Click on the pen icon to do so:

In this example, I will add a Copy activity to the loop. You can add multiple activities to be executed for each item in the array. To avoid the 30 activities per pipeline limit, you might also want to group these activities into an Execute Pipeline activity.

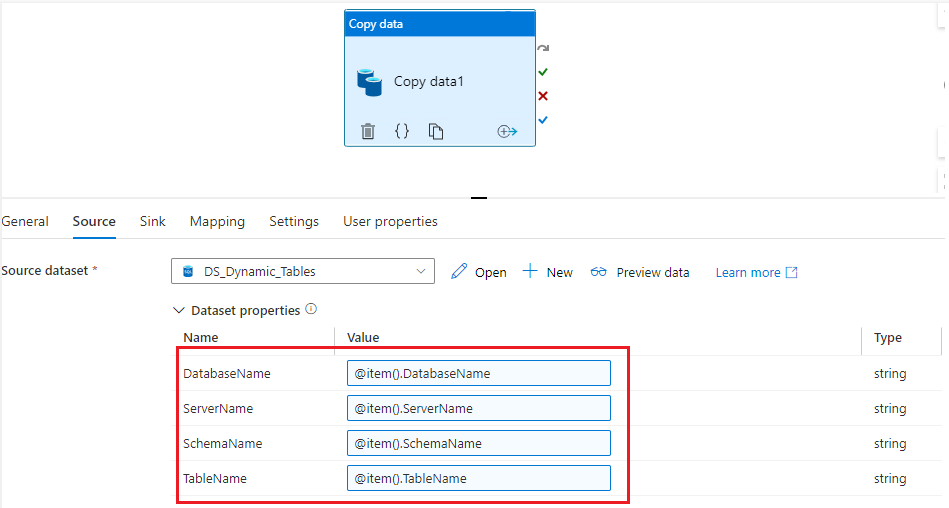

In the Source tab, choose the Dataset that we created earlier with our Dynamic source configuration. Then, you will see the Datasets properties show up, in the Value field, choose ‘Add Dynamic Content’ and point to the appropriate fields passed in from the Lookup activity. The intellisense functionality will ease this task.

Let’s do the same for our Sink dataset:

Now, we’re all set and can Debug our entire pipeline to validate it works as expected.

This was it. I hope you enjoyed this tutorial. Let me know in the comments section below what do you think about doing Metadata Driven Pipelines with Data Factory.