This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Azure Data Factory can connect to a Git repository, which enables source control, partial saves, better collaboration among data engineers, and better CI/CD. As of this writing, Azure Repos and GitHub are supported. To automate CI/CD, you can use Azure Pipelines or GitHub Actions.

In this blog post, we will implement CI/CD with GitHub Actions workflows. A workflow is defined by a YAML (.yml) file that contains the steps and parameters that make up the workflow.

We will perform the following steps:

- Generate deployment credentials,

- Configure the GitHub secrets,

- Create the workflow that deploys the ADF ARM template,

- Monitor the workflow execution.

Requirements

- Azure Subscription. If you don't have one, create a free Azure account before you begin.

- Azure Data Factory instance. If you don't have an existing Data Factory, follow this tutorial to create one.

- GitHub repository integration set up. If you don't yet have a GitHub repository connected to your development Data Factory, follow the steps here to set it up.

Generate deployment credentials

You will need to generate credentials that authenticate and authorize GitHub Actions to deploy your ARM template to the target Data Factory. You can create a service principal with the `az ad sp create-for-rbac` command in the Azure CLI. Run this command in Azure Cloud Shell in the Azure portal, or locally. Replace the placeholder `{myApp}` with the name of your application.

```bash
az ad sp create-for-rbac --name {myApp} --role contributor --scopes /subscriptions/{subscription-id}/resourceGroups/{MyResourceGroup} --sdk-auth
```

In the command above, also replace the other placeholders with your subscription ID and resource group name. The output is a JSON object, similar to the one below, containing the role assignment credentials that provide access to your Data Factory resource group. Copy this JSON object for later; only the `clientId`, `clientSecret`, `subscriptionId`, and `tenantId` values are needed.

```json
{
  "clientId": "<GUID>",
  "clientSecret": "<secret>",
  "subscriptionId": "<GUID>",
  "tenantId": "<GUID>",
  (...)
}
```

Configure the GitHub secrets

You need to create secrets for your Azure credentials, resource group, and subscription ID.

- In GitHub, browse to your repository.

- Select Settings > Secrets > New secret.

- Paste the entire JSON output from the Azure CLI command into the secret's value field. Give the secret the name `AZURE_CREDENTIALS`.

- Create another secret named `AZURE_RG`. Add the name of your resource group to the secret's value field (example: `myResourceGroup`).

- Create an additional secret named `AZURE_SUBSCRIPTION`. Add your subscription ID to the secret's value field (example: `90fd3f9d-4c61-432d-99ba-1273f236afa2`).

Create the workflow that deploys the ADF ARM template

At this point, you should have a Data Factory instance with Git integration set up. If this is not the case, follow the links in the Requirements section.

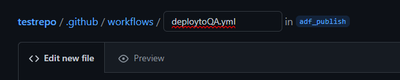

The workflow file must be stored in the `.github/workflows` folder at the root of the `adf_publish` branch, and its extension can be either `.yml` or `.yaml`.

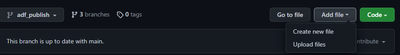

- From your GitHub repository, go to the adf_publish branch

- Click Add file -> Create new file

- Create the path .github/workflows/workflowFileName.yml

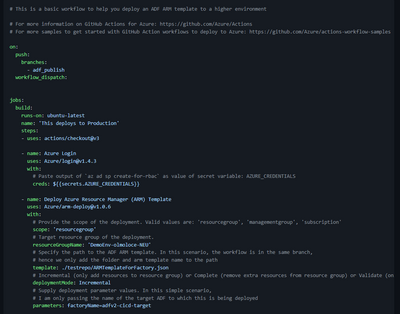

- Paste the content of the yaml file attached to this blog post. It looks like this:

5. Commit the new file

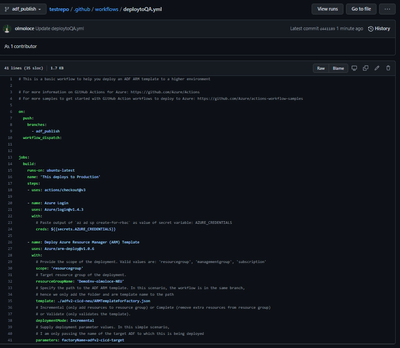

6. Navigate to the newly created yaml file and validate that it looks correct

7. Now, to test that everything is setup properly, make some changes in your Dev ADF instance and Publish these. This should trigger the workflow to execute.

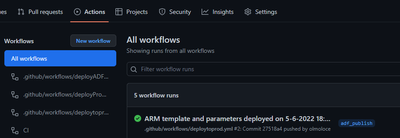

8. To check it, browse to the repository -> Actions -> and identify your workflow

9. You can further drill down into each execution and see more details such as all the steps and their duration
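The workflow file attached to the original post is not included in this copy. As a rough illustration only, a minimal workflow of this kind might look like the following. It assumes the `azure/login` and `azure/arm-deploy` actions, a development factory named `my-dev-adf` (ADF publishes its templates into a folder named after the factory on `adf_publish`), and a target factory named `my-prod-adf` in the resource group stored in the `AZURE_RG` secret. Both factory names are hypothetical placeholders, and this is a sketch, not the author's file.

```yaml
# Hypothetical sketch of .github/workflows/workflowFileName.yml (not the original attachment)
name: Deploy ADF ARM template

on:
  push:
    branches:
      - adf_publish      # ADF commits here when you click Publish
  workflow_dispatch:     # also allow manual runs for testing

jobs:
  deploy:
    runs-on: ubuntu-latest
    env:
      DEV_FACTORY_NAME: my-dev-adf       # placeholder: folder ADF publishes into
      TARGET_FACTORY_NAME: my-prod-adf   # placeholder: factory to deploy to
    steps:
      - name: Check out the adf_publish branch
        uses: actions/checkout@v4

      - name: Log in to Azure with the service principal
        uses: azure/login@v1
        with:
          creds: ${{ secrets.AZURE_CREDENTIALS }}

      - name: Deploy the ARM template to the target Data Factory
        uses: azure/arm-deploy@v1
        with:
          subscriptionId: ${{ secrets.AZURE_SUBSCRIPTION }}
          resourceGroupName: ${{ secrets.AZURE_RG }}
          template: ${{ github.workspace }}/${{ env.DEV_FACTORY_NAME }}/ARMTemplateForFactory.json
          # Parameter file plus an inline override that points the template at the target factory
          parameters: ${{ github.workspace }}/${{ env.DEV_FACTORY_NAME }}/ARMTemplateParametersForFactory.json factoryName=${{ env.TARGET_FACTORY_NAME }}
          deploymentMode: Incremental
```

Two choices are worth calling out. `deploymentMode: Incremental` leaves other resources in the resource group untouched, whereas Complete mode would delete anything not defined in the template. The `factoryName=...` override makes the template target another factory instead of redeploying over the development one; this assumes `arm-deploy` forwards both the parameter file and the inline override to the deployment, as the Azure CLI `--parameters` option does.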

To make this setup production-ready, the next steps are to stop triggers before deployment and restart them afterwards, and to propagate deletions to the upper environments.
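For stopping and restarting triggers, one possible approach is sketched below: two extra steps placed before and after the ARM deployment step in the same job, after `azure/login` has run. It assumes an `ubuntu-latest` runner (which ships with the Azure CLI), the Azure CLI `datafactory` extension and its `trigger list`, `trigger stop`, and `trigger start` commands, and a hypothetical target factory named `my-prod-adf`. It does not cover propagating deletions.

```yaml
# Hypothetical steps for jobs.<job_id>.steps, around the ARM deployment step.
# my-prod-adf is a placeholder; AZURE_RG comes from the repository secret.
- name: Stop the triggers that are currently started
  env:
    TARGET_FACTORY_NAME: my-prod-adf
    AZURE_RG: ${{ secrets.AZURE_RG }}
  run: |
    az extension add --name datafactory
    # Remember which triggers were running so that only those are restarted later
    az datafactory trigger list \
      --factory-name "$TARGET_FACTORY_NAME" \
      --resource-group "$AZURE_RG" \
      --query "[?properties.runtimeState=='Started'].name" \
      --output tsv > "$RUNNER_TEMP/started-triggers.txt"
    while read -r trigger; do
      az datafactory trigger stop \
        --factory-name "$TARGET_FACTORY_NAME" \
        --resource-group "$AZURE_RG" \
        --name "$trigger"
    done < "$RUNNER_TEMP/started-triggers.txt"

# ... the ARM template deployment step runs here ...

- name: Restart the triggers that were stopped
  env:
    TARGET_FACTORY_NAME: my-prod-adf
    AZURE_RG: ${{ secrets.AZURE_RG }}
  run: |
    while read -r trigger; do
      az datafactory trigger start \
        --factory-name "$TARGET_FACTORY_NAME" \
        --resource-group "$AZURE_RG" \
        --name "$trigger"
    done < "$RUNNER_TEMP/started-triggers.txt"
```

Only triggers that were running before the deployment are restarted, so triggers added by the deployment stay stopped until you start them. If the deployment step fails, the restart step is skipped by default; adding `if: always()` to it would restart the triggers regardless, which may or may not be what you want.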

Stay tuned for more tutorials.