This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

The need for very large security logs datasets to support complex security analytics and ML is ever-increasing. To facilitate this, security analysts and data scientists need to have the ability to easily export, transform and store data in a way that is flexible whilst being highly performant and scalable.

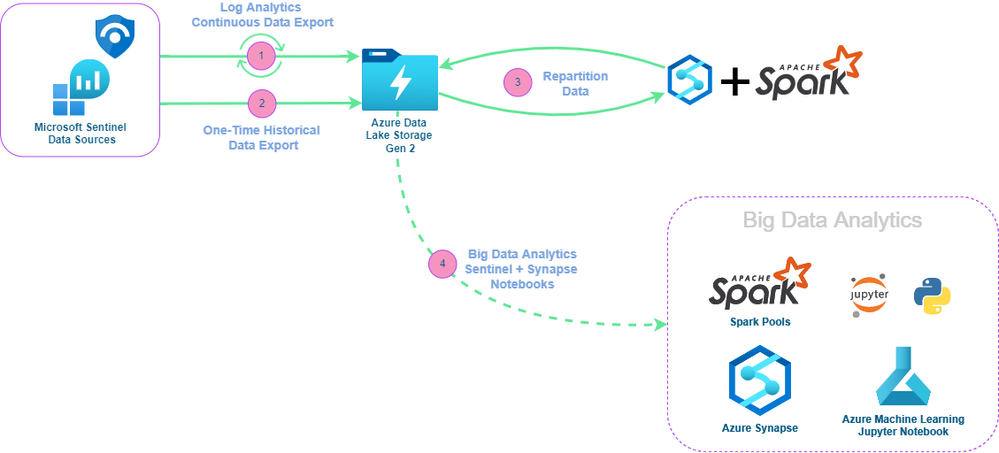

We have previously blogged about setting up continuously data exports directly from the Sentinel UI using the Sentinel data export tool. To augment this, we have created a new Sentinel notebook to provide an easy way to orchestrate the export, transformation and partitioning of historical data in your Azure Log Analytics workspace. Together, these provide a log data management solution for downstream analytics or for archival purposes that only requires a one-time setup.

Pre-Requisites

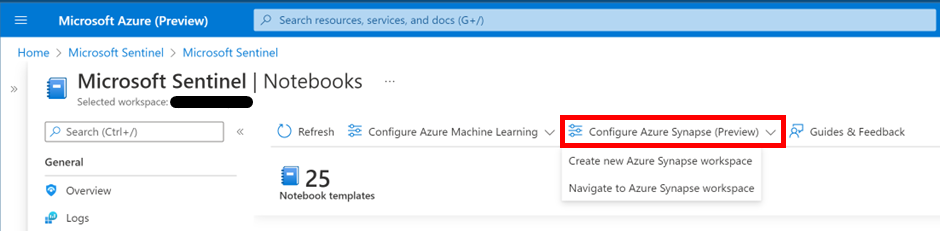

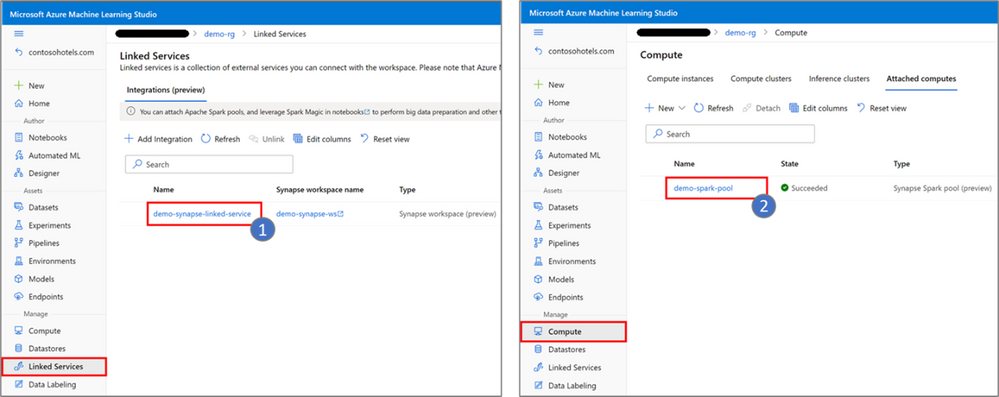

Synapse Integration for Sentinel Notebooks

The new historical data export notebook uses Azure Synapse to work with data at scale. If you do not have the Synapse integration set up for your Sentinel notebooks, you can follow the steps here.

Continuous Log Export

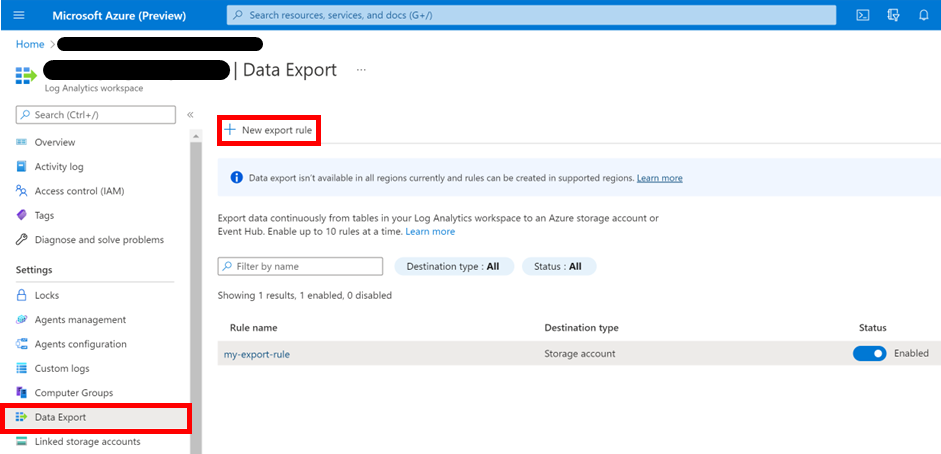

The Data Export blade in Sentinel allows data to be continuously exported from tables in your Log Analytics workspace to an Azure storage account or Event Hub. You may wish to set up a continuous data export rule to eliminate the need to re-run manual data exports on a scheduled basis. The continuous export also allows Sentinel hunting notebooks using exported logs to utilize the latest data.

It is recommended to set up any continuous log export rule prior to performing a one-time export of historical logs to ensure that there is no gap in exported logs. It is also recommended that data is exported to Azure Data Lake Storage (ADLS) Gen2 to take advantage of the hierarchical namespace this provides (this will be important in a later step).

For a walkthrough on setting up new export rules, take a look at our previous blog, Configure a continuous data pipeline in Microsoft Sentinel for big data analytics.

Note: Log Analytics Data Export is currently free, but billing will start on July 1, 2022 – see the pricing page for details. Advance notice will be provided before billing starts.

Link ADLS Gen2 to Synapse Workspace

If the primary storage account for your Synapse workspace is not the account to which you want to export log data, you will need to create a new Azure Data Lake Storage Gen2 linked service by following the instructions here: Create an Azure Data Lake Storage Gen2 linked service using UI.

Running the Historical Log Export

Launch the Notebook

This notebook can be launched straight from your Sentinel workspace by following the steps below.

- In the Sentinel portal, navigate to the Notebooks blade.

- Go to the Templates tab.

- Search for, and select, the “Export Historical Data” notebook.

- On the right panel, select Save notebook. You can rename the selected notebook or keep the default name and save it to an Azure ML workspace.

- The notebook is now accessible in your Azure ML workspace. From the same panel, select Launch notebook to open the notebook in Azure ML studio. (You may be prompted to log into the Azure ML workspace.)

- In the Azure ML workspace, notice that an Export Historical Data.ipynb file and a config.json file have been automatically generated from the previous step.

The ipynb notebook file has the main content of the notebook whilst the config.json file stores configuration details about the Sentinel environment from which the notebook was launched. - Select a compute instance for your notebook server. If you don’t have a compute instance, create one by following step 4 in Launch a notebook using your Azure ML workspace.

Configure Data to be Exported

The notebook contains detailed instructions on how to use it; it is designed to provide a step-by-step walkthrough on exporting any subset of data from your Log Analytics workspace.

First you will need to specify the subset of logs you wish to export. This can either be the name of a table or a specific KQL query. You may wish to run some exploratory queries in your log analytics workspace to determine which subset of columns or rows you wish to export.

(Currently, data can only be exported from one table at a time – this will be changed in future updates.)

Set Data Export Time Range

The next step is to set the time range from which you want to export data. This is done by specifying an end datetime and the number of days back before the end datetime to start the querying. If you have set up a continuous data export rule, you will want to set the end datetime to the time at which the continuous export was started (you can do this by checking the creation time of the export storage container).

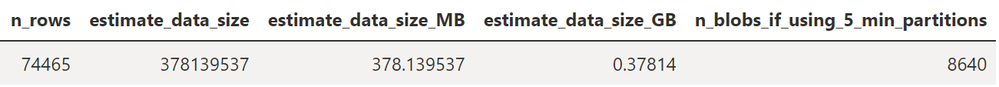

Prior to running the data export, you can use the notebook to determine the size of data to be exported and the number of blobs that will be written, in order to accurately gauge costs associated with the data export.

The notebook uses batched, asynchronous calls to the Log Analytics REST API to retrieve data. Due to throttling and rate-limiting (see the Query API section in the docs), you may need to adjust the default value of the query batch size – there are detailed notes in the notebook on how to set this value.

Note: This step may take some time to run depending on the volume of data being exported.

You may wish to run this cell with only a few days of data, initially, to ensure that the dataframe in the cell output contains the expected data (e.g., the expected set of columns and the expected number of rows).

Write Data to ADLS Gen2

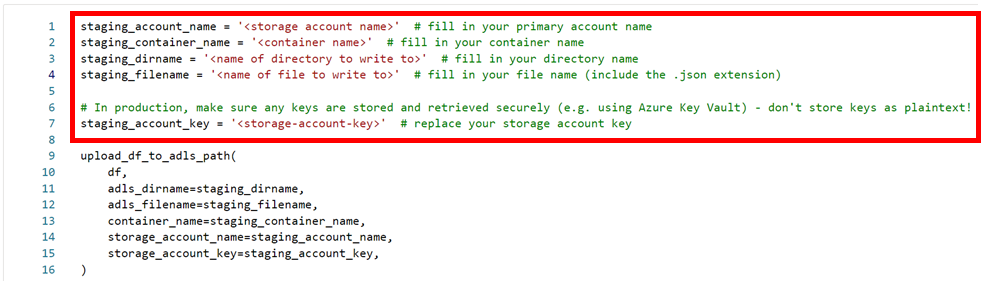

Once the queries have run, the data can be persisted to Azure Data Lake Gen2 storage*. Fill in the details of your storage account in the notebook cell.

*Any Azure storage account can be used here, but the hierarchical namespace used by ADLS Gen2 makes moving and repartitioning log data in downstream tasks much more efficient.

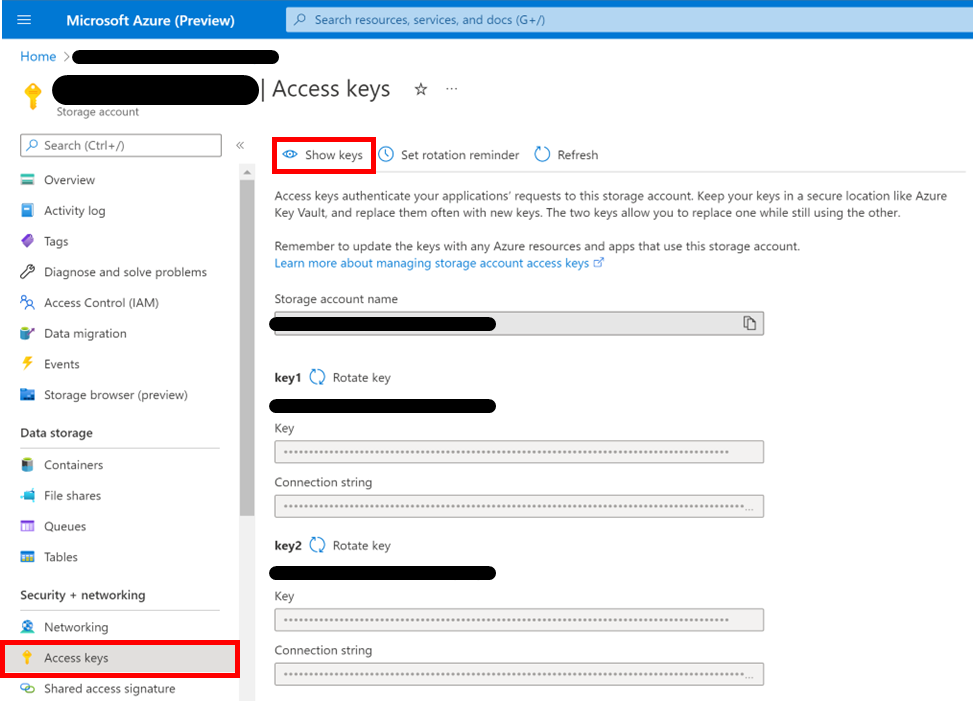

You can view and rotate access keys for your storage account by navigating to the “Access Keys” blade in the Azure storage portal.

Note: The code shown above is for demo/testing purposes only! Keys should always be stored and retrieved securely (e.g. by using Azure Key Vault) and should never be stored as plaintext. Alternatively, you may wish to use another of Azure’s authentication flows (such as using SAS tokens)– see the docs for details.

Partition Data Using Spark

At this point, the historical log data has been successfully exported for custom archiving or for use in Sentinel notebooks or other downstream tasks.

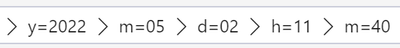

However, you may wish to partition the data to allow for more performant data reads. The last section of the notebook repartitions the exported data by timestamp – this means splitting the data rows across multiple files in multiple directories with rows of data grouped by timestamp. We use a year/month/day/hour/five-minute-interval directory structure for partitions.

This provides two key benefits:

- Matching the partition scheme used by continuously exported logs – this means that means that continuously exported data and historical log data can be read from in a unified way by any notebooks or data pipelines that consume this data

- More performant data loading – by encoding the timestamp values in file paths, we can minimize the number of required file reads when loading data from a specific time range in downstream tasks

Using Spark via Azure Synapse

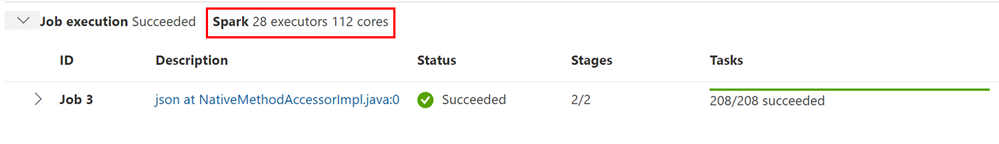

For a year's worth of historical log data, we may be writing files for over 100,000 separate partitions, so we rely on Spark's multi-executor parallelism to do this efficiently.

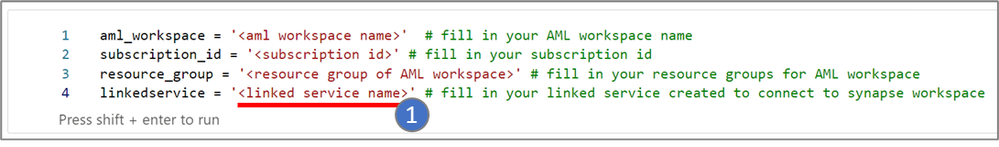

In order to run code on a Synapse Spark pool, we will need to specify the name of the linked Synapse workspace and Synapse Spark pool to use (see Pre-Requisites section, above).

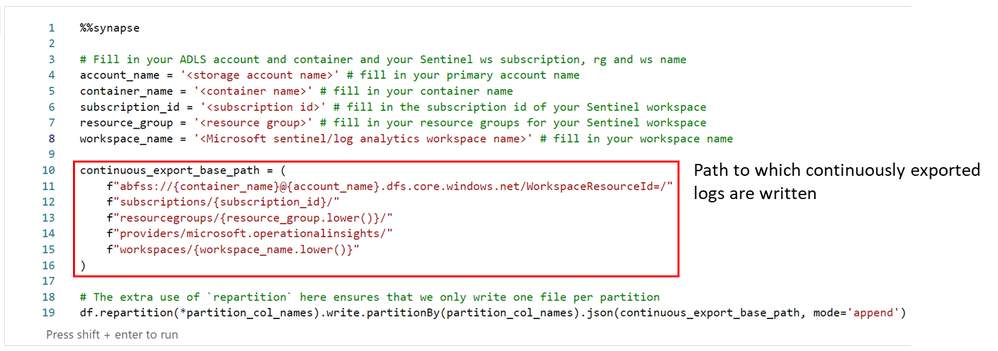

Once we have started the Spark session, we can run the code in a notebook cell on the Spark pool by using the `%%synapse` cell magic at the start of the cell.

Note: If you encounter “UsageError: Line magic function `%synapse` not found”, ensure that you have run the notebook setup cells (at the top of the notebook) and that the “azureml-synapse” package was installed successfully.

Running through the last few cells of the notebook will write the historical logs to the same location as the continuously exported data, in the same format and with the same partition scheme.

We are now able to process, transform and analyze security log data at scale using Sentinel and Synapse notebooks! Get started by cloning one of our template guided hunting notebooks from the Templates tab under the Notebooks blade in Sentinel (also available on the Microsoft Sentinel Notebook GitHub).

Summary

An important part of being able to extract value from large volumes of log data is the ability to make it available for advanced analytics and ML in a flexible, performant and highly scalable manner.

Sentinel users can now leverage Synapse Spark pools to orchestrate the ETL of data in their Log Analytics workspace directly from a Sentinel notebook.

Next Steps

Get started with big data analytics using one of our template guided hunting notebooks in Sentinel (we also have guided hunting blogs), or write your own big data Sentinel+Synapse notebook using PySpark, MLlib and SynapseML.

For native low-cost log archival in Log Analytics workspaces, use the new archive policies feature. Archive policies can be configured for individual tables, and archived data can be easily searched or restored directly from your Log Analytics workspace.

For more customizable archiving, you can use this notebook in conjunction with archive-tier Azure storage.