This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Machine Learning Operations (MLOps) can significantly accelerate how data scientists and ML engineers meet organizational needs. A well-implemented MLOps process not only shortens the time from testing to production, but also provides ownership, lineage, and historical information for the ML artifacts used across the team. Data science teams are now expected to have CI/CD skills to sustain ongoing inference with retraining cycles and automated redeployments of models. Unfortunately, many ML professionals are still forced to run MLOps manually, which reduces the time they can spend adding value to the business.

To address this critical issue for data science teams, today we are thrilled to announce the Beta #2 version of MLOps v2, which simplifies your MLOps workstream with unified solution accelerators available in a GitHub repository.

The anticipated full release is targeted for July 2022.

MLOps v2 is fundamentally redefining how Machine Learning Operations are put into practice at Microsoft. MLOps v2 will allow AI professionals and our customers to deploy an end-to-end, standardized, and unified Machine Learning lifecycle that scales across multiple workspaces. By abstracting agnostic infrastructure in an outer loop, the customer can focus on the inner loop development of their use cases.

Feature Store? Responsible AI? Any AI building block can fit your environment with MLOps v2, and you will be able to build a modular MLOps ecosystem in a standardized, scalable, secure, and enterprise-ready way.

Historically, we collected and evaluated 20+ MLOps v1 assets including their codebase and scalable capacity to redefine the next generation of MLOps at Microsoft. MLOps v1 included customer-specific unscalable or outdated scenarios that did not provide technology modularity. With MLOps v2, we are moving Classical Machine Learning, Natural Language Processing, and Computer Vision to a newer and faster scale for our customers.

Overall, the MLOps v2 Solution Accelerator is intended to serve as the starting point for MLOps implementation in Azure. Solution Accelerators get customers 80% of the way but require 20% customization, as every customer project has its own level of uniqueness.

MLOps v2 is a set of repeatable, automated, and collaborative workflows with best practices that empower teams of Machine Learning professionals to quickly and easily get their machine learning models deployed into production. You can learn more about MLOps here:

- MLOps with Azure Machine Learning

- Cloud Adoption Framework Guidance

- How: Machine Learning Operations

Customer challenges

MLOps v2 provides a templatized approach for the end-to-end Data Science process and focuses on driving efficiency at each stage. Currently, customers commonly struggle to stand up an end-to-end MLOps engine due to resource, time, and skill constraints.

One main issue is that it often takes a significant amount of time to bootstrap a new Data Science project. MLOps v2 provides reusable templates that establish a "cookie-cutter" approach to bootstrapping, shortening the process from days to hours or minutes. The bootstrapping process encapsulates key MLOps decisions such as the components of the repository, the structure of the repository, the link between model development and model deployment, and technology choices for each phase of the Data Science process.
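As a rough illustration of what a template-driven bootstrap can look like, the sketch below scaffolds a project folder from a small template dictionary. The folder and file names are hypothetical placeholders chosen for this example; they are not the accelerator's actual repository layout.

```python
from pathlib import Path
import tempfile

# Hypothetical layout: these names are illustrative only and do not
# mirror the real MLOps v2 repositories.
TEMPLATE = {
    "infrastructure": ["main.bicep"],
    "data-science/src": ["train.py"],
    "mlops/pipelines": ["deploy-model.yml"],
}


def bootstrap_project(root: Path, template: dict = TEMPLATE) -> list:
    """Create every folder and empty placeholder file listed in the template.

    Returns the list of file paths that were created or already present.
    """
    created = []
    for folder, files in template.items():
        target = root / folder
        target.mkdir(parents=True, exist_ok=True)
        for name in files:
            path = target / name
            path.touch(exist_ok=True)
            created.append(path)
    return created


if __name__ == "__main__":
    # Scaffold into a throwaway directory and list what was generated.
    with tempfile.TemporaryDirectory() as tmp:
        for path in bootstrap_project(Path(tmp) / "my-project"):
            print(path.relative_to(tmp))
```

Keeping the structure in data rather than in code is one way to make the same bootstrap reusable across projects: a team would adjust the template once and reuse it for each new use case.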

The best way to consume MLOps v2 is to choose a complex use case that reflects most of your organization's needs from a Data Science perspective and start adjusting this accelerator to accommodate those requirements. The first use case may take longer to deliver; however, once the process has been ironed out, subsequent use cases can be onboarded in a matter of days if not hours.

Common customer challenges MLOps v2 gives answers to:

- Roles/Skills

- Tools

- Artifacts & Versioning

- Development & Production

Goal 1 - People:

- Blend together the work of individual engineers in a repository

- Each time you commit, your work is automatically built and tested, and bugs are detected faster

- Code, data, models and training pipelines are shared to accelerate innovation

Goal 2 - Process:

- Provide templates to bootstrap your infrastructure and model development environment, expressed as code

- Automate the entire process from code commit to production

Goal 3 - Platform:

- Safely deliver features to your customers as soon as they’re ready

- Monitor your pipelines, infrastructure and products in production and know when they aren’t behaving as expected

Solution Accelerator

The solution accelerator provides a modular end-to-end approach for MLOps in Azure based on pattern architectures. As each organization is unique, solutions will often need to be customized to fit the organization's needs.

The solution accelerator goals are:

- Simplicity

- Modularity

- Repeatability

- Collaboration

- Enterprise readiness

It accomplishes these goals with a template-based approach for end-to-end data science, driving operational efficiency at each stage. You should be able to get up and running with the solution accelerator in a few hours.

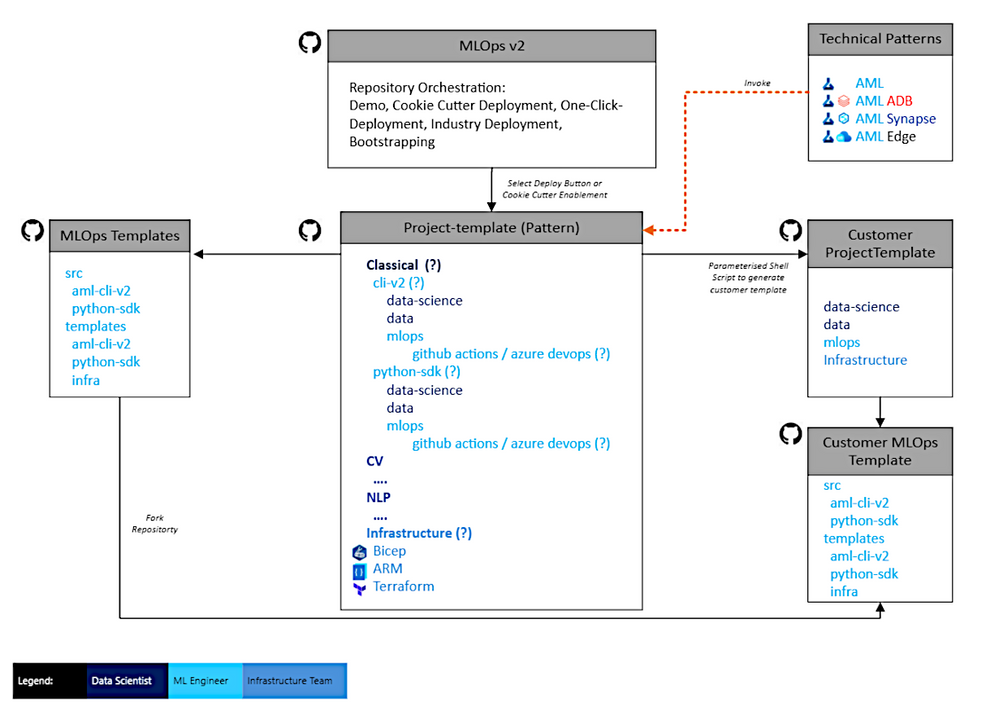

The Solution Accelerator is set up following the Mono Repository Methodology. Architecturally, a header repository drives the deployment of individual technical patterns. Currently identified patterns are:

- AML (Azure Machine Learning) Classical Machine Learning

- AML Natural Language Processing

- AML Computer Vision

- AML Azure Databricks (ADB) Classical Machine Learning

- AML ADB Natural Language Processing

- AML ADB Computer Vision

- AML Synapse

- AML On the Edge

The Solution Accelerator repositories are broken down by technology pattern:

Image: Repository Architecture of MLOps v2

Architectural Patterns

The MLOps v2 architectural pattern is made up of four modular elements representing phases of the MLOps lifecycle for a given data science scenario, the relationships and process flow between those elements, and the personas associated with ownership of those elements.

Below is the MLOps v2 architecture for a Classical Machine Learning scenario on tabular data, along with an explanation of each element.

Image: Classical Machine Learning MLOps Architecture using AML

- Data Estate: This element illustrates the organization data estate and potential data sources and targets for a data science project. Data Engineers would be the primary owners of this element of the MLOps v2 lifecycle. The Azure data platforms in this diagram are neither exhaustive nor prescriptive. However, data sources and targets that represent recommended best practices based on customer use case will be highlighted and their relationships to other elements in the architecture indicated.

- Admin/Setup: This element is the first step in the MLOps v2 Accelerator deployment. It consists of all tasks related to creation and management of resources and roles associated with the project. These can include but may not be limited to:

  - Creation of project source code repositories

  - Creation of Azure Machine Learning Workspaces for the project

  - Creation/modification of Data Sets and Compute Resources used for model experimentation and deployment.

  - Definition of project team users, their roles, and access controls to other resources.

  - Creation of CI/CD (Continuous Integration and Continuous Delivery) pipelines

  - Creation of Monitors for collection and notification of model and infrastructure metrics.

Personas associated with this phase may be primarily Infrastructure Team but may also include all of Data Engineers, Machine Learning Engineers, and Data Scientists.

- Model Development (Inner Loop): The inner loop element consists of your iterative data science workflow. A typical workflow is illustrated here from data ingestion, EDA (Exploratory Data Analysis), experimentation, model development and evaluation, to registration of a candidate model for production. This modular element as implemented in the MLOps v2 accelerator is agnostic and adaptable to the process your team may use to develop models.

Personas associated with this phase include Data Scientists and ML Engineers.

- Deployment (Outer Loop): The outer loop phase consists of pre-production deployment testing, production deployment, and production monitoring triggered by continuous integration pipelines upon registration of a candidate production model by the Inner Loop team. Continuous Deployment pipelines will promote the model and related assets through production and production monitoring as tests appropriate to your organization and use case are satisfied.

Monitoring in staging/test and production enables you to collect and act on data related to model performance, data drift, and infrastructure performance that may call for human-in-the-loop review, automated retraining and reevaluation of the model, or a return to the Development loop or Admin/Setup for development of a new model or modification of infrastructure resources.

Personas associated with this phase are primarily ML Engineers.
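The promotion logic of the outer loop, where a model advances only when the relevant tests pass, can be sketched in a few lines. This is a minimal illustration under assumed names: the stage list, `CheckResult`, and `next_stage` are hypothetical and not part of the accelerator.

```python
from dataclasses import dataclass

# Hypothetical stage names; replace with the environments your
# organization actually uses.
STAGES = ["dev", "staging", "production"]


@dataclass
class CheckResult:
    name: str
    passed: bool


def next_stage(current: str, checks: list) -> str:
    """Return the stage after `current` if every check passed.

    The model stays in its current stage when any check fails or when
    no checks were run at all. The final stage has no successor.
    """
    if current not in STAGES:
        raise ValueError(f"unknown stage: {current}")
    if not checks or not all(c.passed for c in checks):
        return current
    index = STAGES.index(current)
    return STAGES[min(index + 1, len(STAGES) - 1)]


if __name__ == "__main__":
    results = [CheckResult("smoke-test", True), CheckResult("latency", True)]
    print(next_stage("staging", results))
```

Treating an empty list of checks as a failure is a deliberate choice in this sketch: a model should not be promoted simply because no tests were configured.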

Additional architectures tailored for Computer Vision and Natural Language Processing use cases, as well as architectures for Azure ML + Databricks and IoT (Internet of Things) Edge scenarios, are in development.

Azure Machine Learning’s v2 developer APIs (Application Programming Interfaces) introduce consistency and new features that make it easy to operationalize machine learning models. With v2, you can use REST, CLI (command-line interface), or SDK (software development kit) for all operations and leverage managed endpoints for easy, safe rollout of models to production.
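One common way to roll a model out safely is to shift traffic gradually from the existing deployment to the new one. The sketch below only computes such a schedule as a list of percentage splits; it does not call Azure Machine Learning, and the deployment names `blue` and `green` as well as the `rollout_steps` helper are assumptions made for illustration.

```python
def rollout_steps(increment: int, old: str = "blue", new: str = "green") -> list:
    """Return traffic splits that move traffic from `old` to `new`.

    Each split is a dict of percentages summing to 100. The last split
    always sends all traffic to the new deployment.
    """
    if not 0 < increment <= 100:
        raise ValueError("increment must be between 1 and 100")
    steps = []
    share = 0
    while share < 100:
        share = min(share + increment, 100)
        steps.append({old: 100 - share, new: share})
    return steps


if __name__ == "__main__":
    for split in rollout_steps(25):
        print(split)
```

In practice each step would be applied only after monitoring confirms the new deployment behaves as expected at the previous split.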

Technical Implementation

The MLOps repository contains the best practices that we have gathered to allow users to go from development to production with minimum effort. We have also made sure that we are not locked into any single technology stack or any hard-coded examples, while still keeping the examples easy to work with and to extend where needed. In the MLOps repository, you will find the following matrix of technologies in the stack. Users will be able to pick any combination of items in the stack to serve their needs.

| Infrastructure as Code | CI/CD Platforms | Deployment examples | ML Examples | Language |
| --- | --- | --- | --- | --- |
| Bicep | Azure DevOps | CLI | Tabular - Regression, Classification | Python |
| Terraform | GitHub Actions | Python SDK | Computer Vision - Classification | R |
| Azure Resource Manager | | | NLP (natural language processing) - Classification | |
| | | | Azure Databricks | |

Table: Items marked in blue are currently available in the repository
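Picking one item per column of the matrix can be expressed as a small validated configuration object. The sketch below copies the option names from the matrix; the `StackChoice` class and its field names are hypothetical, and the sketch does not check which options are currently available in the repository.

```python
from dataclasses import dataclass

# Option names taken from the technology matrix; the keys are
# illustrative field names, not identifiers used by the accelerator.
OPTIONS = {
    "iac": {"Bicep", "Terraform", "Azure Resource Manager"},
    "cicd": {"Azure DevOps", "GitHub Actions"},
    "deployment": {"CLI", "Python SDK"},
    "language": {"Python", "R"},
}


@dataclass(frozen=True)
class StackChoice:
    iac: str
    cicd: str
    deployment: str
    language: str

    def __post_init__(self):
        # Reject any value that does not appear in the matrix.
        for field_name, allowed in OPTIONS.items():
            value = getattr(self, field_name)
            if value not in allowed:
                raise ValueError(
                    f"{field_name}={value!r} is not one of {sorted(allowed)}"
                )


if __name__ == "__main__":
    print(StackChoice("Terraform", "GitHub Actions", "CLI", "Python"))
```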

We have also included some steps to demonstrate how you can integrate GitHub Advanced Security, including code scanning and dependency scanning, into your workflows. We plan to add more security features that you can take advantage of in your workflows. Our goal is also to add model monitoring, together with data/model/concept drift monitoring, to the setup.
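To make the idea of data drift monitoring concrete, here is a minimal sketch of one widely used drift statistic, the Population Stability Index (PSI), computed for a single numeric feature. This is an illustrative technique chosen for the example, not the monitoring method of MLOps v2; the function name, bin count, and floor value are assumptions.

```python
import math


def population_stability_index(expected: list, actual: list, bins: int = 10) -> float:
    """PSI between a baseline sample and a recent sample of one feature.

    Bins are equal-width over the range of the baseline sample.
    """
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 when the baseline has a single value.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    recent = [0.5 + i / 200 for i in range(100)]
    print(population_stability_index(baseline, recent))
```

A value near zero suggests the recent data resembles the baseline, while larger values suggest a shift worth reviewing. Any alerting threshold would need to be tuned to the use case.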

See here for an end-to-end demo walkthrough:

Video: Demo video of MLOps v2 Solution Accelerator

Learning Motion and Evangelism

To help organizations onboard the MLOps v2 accelerator, learning content will be available on our Microsoft Learn platform. Microsoft Learn offers self-paced, hands-on online content to familiarize yourself with modern technologies. To get data scientists and machine learning engineers familiar with the DevOps concepts and technologies used in the MLOps v2 accelerator, we will be releasing hands-on content this summer on how to automate model training and deployment with GitHub Actions.

As MLOps v2 continues to evolve, the team will speak over the next month at several events. We will communicate updates and news to ensure that consumers of MLOps v2 are up to date with the progress Microsoft has made.

Summary

MLOps v2 is the de facto MLOps solution for Microsoft going forward. Aligned with the development of Azure Machine Learning v2, MLOps v2 gives you and your customers the flexibility, security, modularity, ease of use, and scalability to take your AI to production quickly. MLOps v2 not only unifies Machine Learning Operations at Microsoft, it also sets innovative new standards for any AI workload. Moving forward, MLOps v2 is a must-consume for any AI/ML project redefining your AI journey.

Get started now!

GitHub repository: Azure/mlops-v2: Azure MLOps (v2) solution accelerators. (github.com)

Demo: mlops-v2/QUICKSTART.md at main · Azure/mlops-v2 (github.com)