This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

The Holland Museum is a small yet innovative museum located in Holland, on the western coast of Michigan. The museum owns and operates four buildings: the main galleries in an old Post Office building, the Settlers House, the Cappon House, and the Armory. The museum is proud to collect artifacts and stories from Holland’s early settlers, the original inhabitants of the area, and the latest wave of immigrants to the area.

I serve on the Holland Museum’s Board and wanted to use Azure Percept to help the museum showcase more of its artifacts. More than 100,000 artifacts sit in the Holland Museum basement, with only 700 artifacts on display and visible to the public.

In April 2022, the Museum was preparing for the Tulip Time festival in Holland, Michigan, where 500,000 visitors arrive to see millions of tulips in bloom. The Museum’s goal was to engage Tulip Time visitors with Azure Percept and measure the “dwell time” visitors spent with each artifact. The results the museum staff and I experienced were amazing and will further shape the way future exhibits are designed and monitored.

In this blog post, I will share how the Holland Museum staff accomplished their goal of telling the museum's story and showcasing its large collection, and how Azure Percept can bring artifacts that were once out of sight into the public's view.

Azure Percept and Holland Museum collections overview

A brief demo of how Azure Percept shines a light on the Holland Museum collections can be found in the following YouTube video:

Azure Percept and Holland Museum collections Implementation

Phase 1: Getting Started

The first stage of the journey was training the museum staff on Azure Percept. I used two Azure Percept DKs: one unit was used to detect tulips, and the second was used to detect people in front of the display.

Azure Percept is very easy to use. One museum staff member attended the meeting virtually, so we shared our screen and logged in to Azure Percept Studio. She was able to remotely upload images of 10 tulip varieties, draw the bounding boxes, and press the train button. Thirty minutes later, we had the new AI model running tulip detection on Azure Percept. It was that easy!

The following 3 key steps were followed in this phase:

- Step 1: Getting the DK set up.

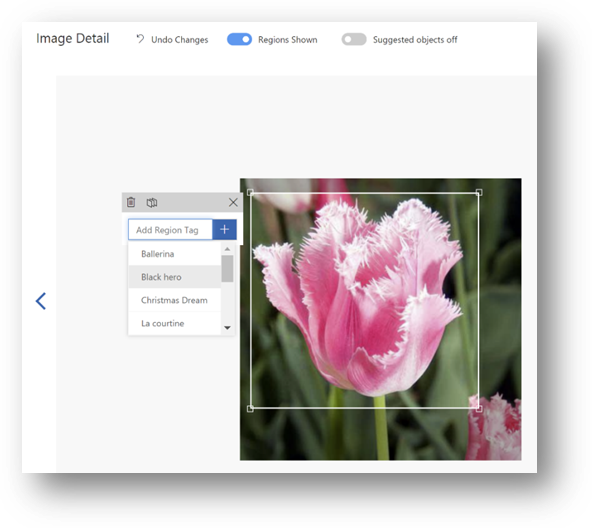

- Step 2: Azure Percept Studio: Upload 15 images for each tulip species, drag out the bounding boxes, and select the respective tag from the menu.

- Step 3: Deploy the newly trained AI model to Azure Percept.

Phase 2: Training our own AI model

A week later, I revisited the museum to review the model. The amazing part is that the museum staff had already trained an intern to upload images and to work with the AI model. This was democratized Edge AI in action with Azure Percept, as knowledge and processes were being transferred organically throughout the museum. At this second meeting, we walked through the test images from each iteration to better understand which problems in the training images were causing incorrect guesses. Our intern was then able to update the model and redraw the bounding boxes before the influx of tourists arrived in West Michigan.

Visitor interaction

Visitors walked up to the exhibit, quickly read through the instructions, pulled out their phones and swiped through the images of tulips they took during their Tulip Time visit. They held up their images to the camera and watched for tulip variety detection. Some visitors noted they had to hold the phone/images very close to the camera for detection to occur. Others noticed that images with many tulips triggered detection events as easily as images of a single flower.

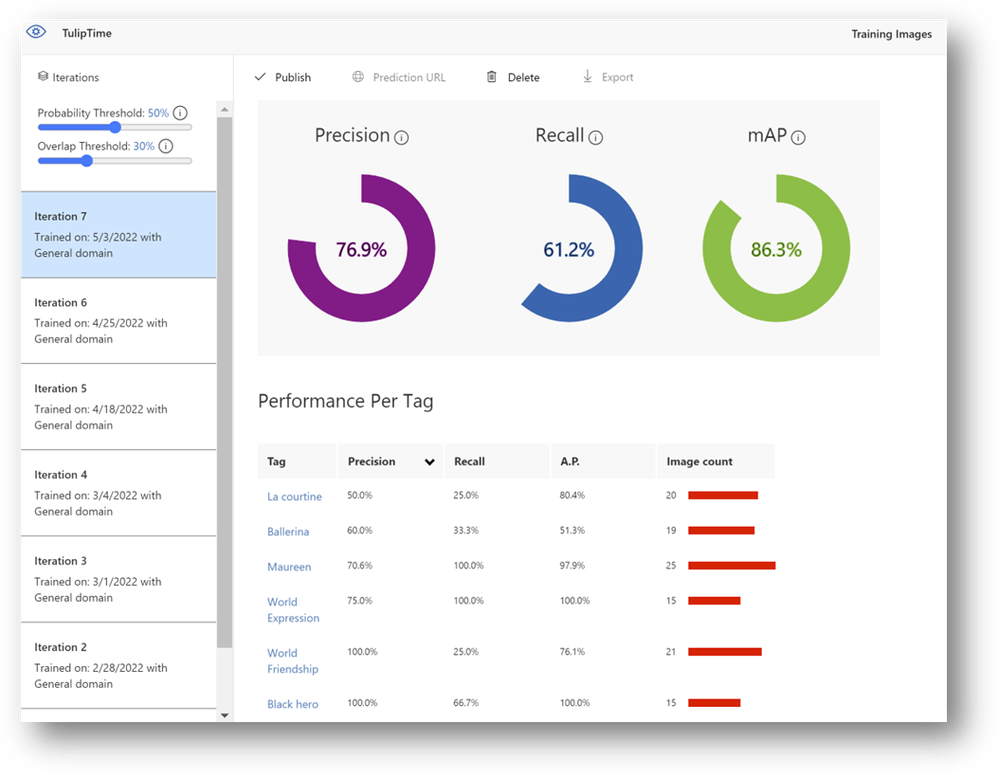

Precision of the Model

Initially only 15 images were uploaded for each species, but the museum staff noticed there was some misrecognition occurring. We learned that adding more images improved the initial model. The staff also learned that using images with close-ups and isolated images of tulips was much more effective than using images with a whole field of tulips. The staff revised the AI model and trained seven additional iterations before the model was ready for visitor use.
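To make "misrecognition" concrete when comparing iterations, one option is to score each tag on a small set of held-out test images. The sketch below is illustrative only: it assumes you have recorded, for each test image, the true tag and the tag the model guessed. The function name `per_tag_metrics` and the tag names are hypothetical, and this is not the evaluation that Azure Percept Studio itself performs.

```python
from collections import Counter

def per_tag_metrics(pairs):
    """Compute precision and recall per tag.

    pairs: iterable of (true_tag, predicted_tag), one per test image.
    """
    true_pos, false_pos, false_neg = Counter(), Counter(), Counter()
    for true_tag, predicted_tag in pairs:
        if true_tag == predicted_tag:
            true_pos[true_tag] += 1
        else:
            # The guessed tag gets a false positive,
            # the real tag gets a false negative.
            false_pos[predicted_tag] += 1
            false_neg[true_tag] += 1

    metrics = {}
    for tag in sorted(set(true_pos) | set(false_pos) | set(false_neg)):
        predicted = true_pos[tag] + false_pos[tag]
        actual = true_pos[tag] + false_neg[tag]
        metrics[tag] = {
            "precision": true_pos[tag] / predicted if predicted else 0.0,
            "recall": true_pos[tag] / actual if actual else 0.0,
        }
    return metrics

# Hypothetical results for two tulip tags (placeholder names).
results = [
    ("variety_a", "variety_a"),
    ("variety_a", "variety_b"),
    ("variety_b", "variety_b"),
    ("variety_b", "variety_b"),
]
metrics = per_tag_metrics(results)
```

A tag with low precision is being guessed too often, while a tag with low recall is being missed. Either one points to where more close-up images may help.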

Visualizing the data

The exhibit was a huge success as it introduced visitors to new technology, connected visitors with tulip varieties (which represent a rich Dutch heritage in the local area), and provided a fun and interactive way for visitors to engage with the Museum’s artifacts and exhibits.

The most exciting part of this exhibit was viewing the live data connection using Power BI. We could see when people were standing in front of the exhibit. We could also see that at night, traffic lights in the museum gallery were being detected even when no people or cars were. Car detection was interesting as well. When a horse-drawn carriage with two rows of seating was in front of Azure Percept, it was detected as a “car.”
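For readers who want to explore this kind of data outside of a dashboard, here is a minimal sketch of counting detections by hour and label. It assumes each detection has been exported as a dictionary with `timestamp`, `label`, and `confidence` fields. That record shape, the function name `hourly_counts`, and the 0.5 threshold are all assumptions made for illustration, not the schema Azure Percept emits or the query behind our report.

```python
from collections import Counter
from datetime import datetime

def hourly_counts(events, min_confidence=0.5):
    """Count detections per (hour of day, label), skipping weak detections."""
    counts = Counter()
    for event in events:
        if event["confidence"] < min_confidence:
            continue  # ignore low-confidence guesses
        hour = datetime.fromisoformat(event["timestamp"]).hour
        counts[(hour, event["label"])] += 1
    return counts

# Hypothetical exported detections.
events = [
    {"timestamp": "2022-05-07T14:05:00", "label": "person", "confidence": 0.9},
    {"timestamp": "2022-05-07T14:40:00", "label": "person", "confidence": 0.8},
    {"timestamp": "2022-05-07T23:10:00", "label": "traffic light", "confidence": 0.7},
    {"timestamp": "2022-05-07T23:15:00", "label": "person", "confidence": 0.2},
]
counts = hourly_counts(events)
```

Grouping by hour makes patterns like the night-time traffic light detections easy to spot, and the confidence threshold keeps stray low-confidence guesses out of the totals.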

Next steps: The future of Edge AI: Azure Percept in museums

It’s crazy to think about how far we have come in making Edge AI and customized AI models accessible and available for public use with Azure Percept. To get started, you really only need to get an Azure Percept Dev Kit, connect the kit with the self-contained out-of-box experience/user interface, set up and train an AI model with Azure Percept Studio and then deploy your model to the device. With Azure Percept, you get the added benefit of working on the Edge, where internet connection is not a requirement anymore, and privacy is protected since the AI model can be trained to only collect specific types of information.

This project breaks the mold of traditional communication methods by taking cutting-edge (pun intended) technology and merging museum stories with Edge AI on Azure Percept, pushing the boundaries for future exhibits and displays.

We are planning to place three Azure Percept DKs in the museum’s main gallery. We want to monitor three important artifacts and measure the dwell time visitors spend with each one. We can, in turn, make changes to the artifacts and study how dwell time changes. Some of the changes we could study include: artifact height, artifact labeling, moving an artifact to a different space in the gallery, and replacing one artifact with another from the collection. As the museum staff learns how AI can measure dwell time, we want to survey the staff on further applications of Edge AI and Azure Percept in the museum. By understanding which artifacts attract a visitor’s interest, and which ones visitors want to spend more time with, museums can create exhibits that will generate greater foot traffic and loyal patrons. We look forward to presenting our project at museum conferences nationwide!
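As a rough illustration of how dwell time could be derived from person detections, the sketch below groups detection timestamps from one camera into visits: detections that arrive within a short gap of each other count as the same visit, and the dwell time is the span from the first to the last detection. The function name `dwell_sessions` and the 5-second gap are assumptions chosen for the example, not settings from our deployment, and the approach treats everyone in front of the camera as a single visit.

```python
from datetime import datetime, timedelta

def dwell_sessions(timestamps, max_gap=timedelta(seconds=5)):
    """Group person-detection times into visits and return each visit's duration."""
    sessions = []
    start = previous = None
    for ts in sorted(timestamps):
        if start is None:
            start = previous = ts          # first detection opens a visit
        elif ts - previous <= max_gap:
            previous = ts                  # still the same visit
        else:
            sessions.append(previous - start)
            start = previous = ts          # gap too long: a new visit begins
    if start is not None:
        sessions.append(previous - start)  # close the final visit
    return sessions

# Hypothetical detections: one visit of 4 seconds, then one of 3 seconds.
t0 = datetime(2022, 5, 7, 14, 0, 0)
detections = [t0 + timedelta(seconds=s) for s in (0, 2, 4, 30, 33)]
visits = dwell_sessions(detections)
average = sum(visits, timedelta()) / len(visits)
```

Comparing the average dwell time before and after a change, such as new labeling, is one simple way to study its effect, provided the two periods have a comparable number of visitors.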

To learn more about Azure Percept, visit these resources:

- Learn about Azure Percept

- Learn about Azure Percept Technical Documentation

- Learn more about our customer and partner stories

Microsoft Build 2022 Key Sessions

- Microsoft Build Into Focus: Preparing for the metaverse

- Embrace digital transformation at the edge with Azure Percept

GTC Spring 2022 Key Sessions