This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Simplify your Kubernetes experience without compromising the power of what it can do. Whether you're experienced with or new to cloud-native apps, you'll get a closer look at recent updates to Azure Kubernetes Service (AKS) that streamline the developer experience: the new open-source Draft bootstrapping tool, which containerizes your apps and prepares them for deployment, and the new managed Kubernetes Event-Driven Autoscaler, or KEDA.

And for operators, we'll share how you can now run Kubernetes on ARM-based VMs and Windows Server 2022 node pools, as well as storage driver and traffic optimizations in Azure that speed up performance. Join Jorge Palma, Principal PM Lead on the Azure Kubernetes Service team, and Jeremy Chapman as they walk you through the benefits and details of building, scaling, and operating Kubernetes workloads in Azure.

Create clusters with pre-built Smart Defaults.

Get apps up and running with pre-built Smart Defaults for creating clusters. You can still customize everything pre- or post-deployment, without giving up any granularity or control. See how Smart Defaults can help you get started.

Go from source code to a running app faster.

Draft removes the need for multiple tools, multiple steps, and multiple days to get up and running. See how the Draft bootstrapping tool makes Kubernetes easier.

Check out the newly updated Azure Disk CSI driver.

The newly updated Azure Disk CSI driver allows you to optimize for specific workloads. See how we achieved 40% more reads! Check out the latest performance updates to storage drivers.

Watch our video here.

► QUICK LINKS:

00:00 Intro to recent updates for Azure Kubernetes Service (AKS)

01:49 What are Smart Defaults and how do they help you get started?

03:39 What is the fastest way to get an app running on Kubernetes?

04:57 How does the Draft bootstrapping tool make Kubernetes easier?

09:58 What is Azure Managed Grafana and how does it improve monitoring?

10:38 Kubernetes can target Windows Server 2022 and ARM-based VMs

11:06 Performance updates to storage drivers

► Link Reference: Find the latest information on Kubernetes updates: aka.ms/KubernetesMechanics

► Unfamiliar with Microsoft Mechanics?

• As Microsoft's official video series for IT, Microsoft Mechanics lets you watch and share valuable content and demos of current and upcoming tech from the people who build it at Microsoft. Subscribe to our YouTube: https://www.youtube.com/c/MicrosoftMe...

• To talk with other IT Pros, join us on the Microsoft Tech Community: https://techcommunity.microsoft.com/t...

• To watch or listen from anywhere, subscribe to our podcast: https://microsoftmechanics.libsyn.com...

• To get the newest tech for IT in your inbox, subscribe to our newsletter: https://www.getrevue.co/profile/msftm...

► To keep getting this insider knowledge, join us on social:

• Follow us on Twitter: https://twitter.com/MSFTMechanics

• Share knowledge on LinkedIn: https://www.linkedin.com/company/micr...

• Enjoy us on Instagram: https://www.instagram.com/microsoftme...

• Loosen up with us on TikTok: https://www.tiktok.com/@msftmechanics

Video Transcript:

- Coming up, whether you're experienced or new to cloud-native apps, we'll take a closer look at recent updates to Azure Kubernetes Service that improve and streamline the developer experience, with our new open-source Draft bootstrapping tool to containerize and prepare your apps for deployment, along with the new managed Kubernetes Event-Driven Autoscaler, KEDA. And for operators, we'll share how you can now run Kubernetes on ARM-based VMs and Windows Server 2022 node pools, as well as optimizations in Azure to speed up performance. And to walk us through all the updates, I'm joined by Jorge Palma from the Kubernetes team in Azure. Welcome to Mechanics.

- Thanks for having me.

- Thanks so much for joining us today. You know, we've seen massive adoption of cloud-native applications, and we're seeing them run on Kubernetes in Azure. In fact, we've been charting the progress ever since Kubernetes co-founder Brendan Burns joined Microsoft and was on the show. And there's been a ton of focus since then on making cloud-native application development even more accessible for everybody. We recently covered Azure Container Apps, which abstracts away the infrastructure complexity of orchestration and scaling so you can just focus on code and building containerized apps.

- That's right. Azure Container Apps is great if you wanna quickly get started building containerized microservices without really being exposed to all the options on the operator side of things. That being said, if you're targeting Kubernetes and wanna take full advantage of its power, the new Azure Kubernetes Service and tooling updates help streamline the process of building, deploying, and customizing your apps as a developer. We also continue to expand and optimize the options for operators and cluster admins. All of this is part of a multi-stage effort to make Azure the best platform for cloud-native development on Kubernetes.

- So, why don't we dig into this? How do things change now if I wanna develop and operate an app that runs on Kubernetes in Azure?

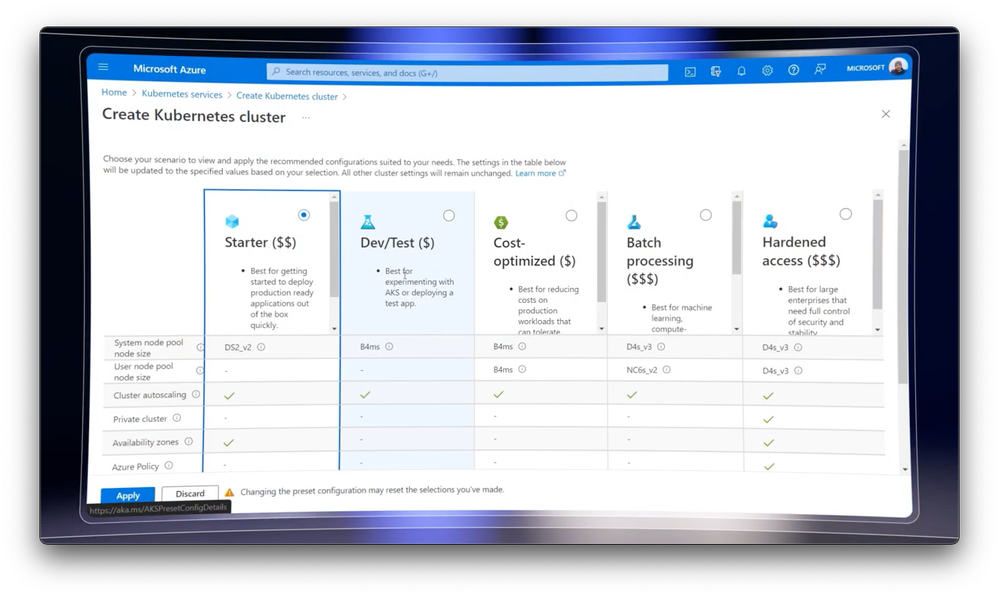

- Well, now it's much easier to get started. In the past, beyond just building your app, you'd have to start by working out how to run your applications with the right services and configurations. Now, without taking away any granularity or control, we've made this easier by offering pre-built configurations, called Smart Defaults, when you create clusters. These plug in a lot of default configurations for you, so you can get up and running fast, and you can still customize everything pre- or post-deployment. Let me show you. I'm in the Azure portal and I'll start by creating a Kubernetes cluster. I just need to add the standard details right here. Then you'll see that when I get to the preset configuration, I can choose from a few options based on my workload. Starter is a great production-ready, general-purpose configuration. Dev/Test is the right choice if you're experimenting with AKS in a non-production situation, Cost-optimized is for workloads that can tolerate interruptions, and Batch processing is for machine learning compute. Finally, Hardened access is for when you really need higher security and scalability. In my case, I'll go ahead and create a Starter cluster. I just need to give it a name; I'll call it Mechanics. I'll keep the Kubernetes version and API server availability as is. Next, on the Node pools screen, you'll see a few defaults pre-configured: a User node pool, which you'd use for your app components, and a System node pool for the cluster's system components. I'll keep the defaults for Access, Integrations, Advanced, and Tags. From right here, I can review my settings. Everything looks good, so I'll go ahead and create my Kubernetes cluster. And that's it. In about a minute, I completed all the required configurations.
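The preset descriptions above boil down to a few questions about the workload. As a rough sketch only, the hypothetical helper below encodes those descriptions in Python. The function name, the Workload fields, and the order in which the checks run are assumptions for illustration; this is not how the Azure portal actually chooses or applies a preset.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload traits, taken from the preset descriptions."""
    production: bool
    tolerates_interruptions: bool = False
    batch_ml: bool = False
    needs_hardened_access: bool = False


def suggest_preset(w: Workload) -> str:
    """Pick a preset name; checks run from most to least specific (an assumption)."""
    if w.needs_hardened_access:
        return "Hardened access"   # higher security requirements
    if w.batch_ml:
        return "Batch processing"  # machine learning compute
    if w.tolerates_interruptions:
        return "Cost-optimized"    # workloads that can tolerate interruptions
    if not w.production:
        return "Dev/Test"          # experimenting, non-production
    return "Starter"               # production-ready, general purpose


print(suggest_preset(Workload(production=True)))  # Starter
```

Whichever preset you pick, the generated settings remain editable before and after deployment.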

- And this is really night and day compared to what you would've had to do before. It's simplifying and abstracting a lot of the detail for you. So now that you've got your cluster running, how would I get an application to run on top of that cluster?

- Well, I can get an application running even faster than that. Let's switch back and I'll show you. Still in the Azure portal, from my new cluster's Overview tab, I'll hit Create and choose the quick start application option. Next, we have the option to create a basic app or a single-image app, and I'll go ahead and choose the single-image option. For the container registry, I'll just choose mine, J. Palma. For the image, I'll pick my AKS Hello World image, which is a simple app running on Linux. In the application details, I'll leave the default value for replicas, but I'll add resource requests of 100 millicores of CPU and 100 megabytes of memory, and I'll set my limits to 1 CPU and 500 megabytes of memory. For networking, I'll keep the default port 80. Then we can check the underlying manifests for deploying the app, which call out all the important selections we've made so far. I can go ahead and hit Deploy now, and you'll see that everything starts in just a few seconds, in this case. And when I click View application, you can see my Hello World app up and running.
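For reference, the single-image choices above correspond roughly to a standard Kubernetes apps/v1 Deployment. The sketch below builds one as a Python dict. The image name, container name, labels, and the single replica are hypothetical placeholders, and it assumes the "megabytes" in the demo map to Kubernetes mebibytes (Mi). It is not the exact manifest the portal generates.

```python
import json


def hello_world_deployment(image: str, replicas: int = 1) -> dict:
    """Build a minimal apps/v1 Deployment mirroring the portal choices (hypothetical names)."""
    labels = {"app": "hello-world"}
    container = {
        "name": "hello-world",
        "image": image,
        "ports": [{"containerPort": 80}],  # default port 80 from the demo
        "resources": {
            # Requests: what the scheduler reserves for each pod.
            "requests": {"cpu": "100m", "memory": "100Mi"},
            # Limits: CPU is throttled and memory overuse gets the container OOM-killed.
            "limits": {"cpu": "1", "memory": "500Mi"},
        },
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "hello-world", "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    # kubectl apply accepts JSON as well as YAML.
    print(json.dumps(hello_world_deployment("example.azurecr.io/aks-helloworld:v1"), indent=2))
```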

- Okay, so now you've shown us all the basics for getting everything up and running, but I really wanna take this up a level. What if we wanna deploy a mission-critical app and use DevOps processes? How have we made that process easier?

- Well, we've put a lot of effort into making this process a lot easier. First is the work we've done to simplify the development and deployment process using Draft. Draft is a new open-source bootstrapping tool that lets you start from source code and get non-containerized apps deployed to a Kubernetes cluster. Draft generates everything for you, from Dockerfiles and Kubernetes manifests to Helm charts or Kustomize configurations, and it automates CI/CD setup for you via GitHub Actions. It also integrates with KEDA for autoscaling, and beyond the Azure CLI, it works from the Azure portal and Visual Studio Code as well.

- So, I'd love to see how you'd use Draft to get everything set up and running, also with high performance and auto-scaling configured.

- Sure. Let me show you an example using an airline flight booking application that we need to containerize and get running in AKS. I'll start in GitHub. As you can see, this is a non-containerized Node.js web application with an Azure Cosmos DB backend. To make it easy to get started, I can open Codespaces from our Contoso Air repository, and you'll see the Draft selection menu. From here, I can right-click and pick the right command, which in this case is the Draft create command, to bootstrap our repo with the necessary files for deployment. I'll add the port that our app is exposing, and Draft immediately generates a Dockerfile for us. It then asks me how I'd like to deploy the application: Kubernetes manifests, Helm charts, or Kustomize. In this case, because we have experience with Helm, I'll choose that. For the application to be exposed outside the cluster, I have to choose a port for our service, and finally I just need to give it a name. At this point, I have all the necessary files to containerize and deploy our application. Draft can be used across all platforms and clouds to make things simple, and we've further streamlined the Draft workflow to reduce the number of additional steps in the Azure environment. Now, I'll use Draft to connect our GitHub repo to our AKS cluster and automatically generate the GitHub Actions workflow file that will be used to deploy to our cluster every time we push changes or open a pull request. Running Draft up securely connects to GitHub and our cluster via OIDC. I'll provide the name for the app, then the resource group, and finally our GitHub organization. I'll then pass in our Azure Container Registry, where Draft will build and push our container image based on the automatically generated Dockerfile, along with our cluster details.
Even though I need to plug in a few parameters here, we've significantly reduced the number of manual steps, and it's a guided, wizard-like experience. Finally, I'll select which GitHub branch I wanna deploy from, and I can see that all our files are now generated. Draft has completed all the necessary steps to get our application ready for deployment, so let's kick that off by committing and pushing the changes we've made. Once we've pushed to our repo, we can see our GitHub Actions workflow kick off the container image build and then deploy our newly containerized application to our AKS cluster. Once that's finished, we can take a look at the result in the Azure portal. We can check our Services blade, use the IP address that AKS automatically created for our application, and open it. And there we have it: Contoso Air.
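The Dockerfile Draft generates depends on the language it detects and on Draft's own templates, which aren't shown in the demo. As an illustrative sketch only, the hypothetical function below renders the kind of minimal Dockerfile a Node.js app like Contoso Air might use. The base image tag, the server.js entry point, and the example port are assumptions, not Draft's output.

```python
def render_node_dockerfile(port: int, node_version: str = "18",
                           entrypoint: str = "server.js") -> str:
    """Render a minimal Dockerfile for a Node.js web app (illustrative, not Draft's template)."""
    if not 0 < port < 65536:
        raise ValueError(f"invalid port: {port}")
    lines = [
        f"FROM node:{node_version}",
        "WORKDIR /usr/src/app",
        # Copy package manifests first so the dependency layer is cached between builds.
        "COPY package*.json ./",
        "RUN npm install --omit=dev",
        "COPY . .",
        # Documents the port the app listens on; the Kubernetes Service handles exposure.
        f"EXPOSE {port}",
        f'CMD ["node", "{entrypoint}"]',
    ]
    return "\n".join(lines) + "\n"


print(render_node_dockerfile(3000), end="")
```

Copying package.json before the rest of the source is a common layering choice: dependency installation is only redone when the manifests change.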

- And just to underscore what's truly different here: the process you just showed removes the need for multiple tools and multiple steps. Without Draft, something like this could have taken days to get up and running. But I can see that you're still using an IP address here, so it's probably not production-ready yet.

- Not yet. That's the next step. Everything looks fairly good here, but there's a glaring issue with our application. I used an IP address to access it, and the connection is insecure, using HTTP. I can secure this using web application routing and make it use a secure URL. First, I'm going to use Draft to add and configure web application routing on our cluster, which is right here under update. I'll pass our DNS name as the Ingress resource host, which in this case is ContosoAir.com, and then our certificate, which is stored in Azure Key Vault. I already have this in my clipboard, so I'll paste that in. This creates a YAML Ingress manifest for us that gets added to our Helm chart. I just need to add a commit message here, like configure web app routing, and then commit our changes. After that, we just need to push everything to our repo on GitHub, which deploys the changes to our AKS cluster using our GitHub Actions workflow. I can watch the progress from right here, and you can see that the deployment has started. This process, in my case, takes about three minutes, so we've sped things up to save a little time. Now, I'll go back to the browser and enter the updated URL, ContosoAir.com, and everything is up and running and secured over HTTPS.
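The Ingress added to the Helm chart follows the standard networking.k8s.io/v1 schema. A minimal sketch of its shape is below. The service name, port, TLS secret name, and ingress class name are hypothetical, and the add-on-specific settings that let web application routing pull the certificate from Azure Key Vault are deliberately left out because the demo doesn't show them. Kubernetes expects Ingress hosts in lowercase, so ContosoAir.com is written as contosoair.com.

```python
import json


def tls_ingress(host: str, service: str, port: int,
                tls_secret: str, ingress_class: str) -> dict:
    """Build a networking.k8s.io/v1 Ingress that terminates TLS for one host."""
    if host != host.lower():
        raise ValueError("Ingress hosts must be lowercase DNS names")
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{service}-ingress"},
        "spec": {
            "ingressClassName": ingress_class,  # hypothetical class name
            # In this sketch the certificate comes from a Kubernetes TLS secret.
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


print(json.dumps(tls_ingress("contosoair.com", "contoso-air", 80,
                             "contoso-air-tls", "example-ingress-class"), indent=2))
```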

- All right, so now your application's a lot more secure. But what happens if you have a peak in demand? For that you need autoscaling. So, how would you set that up?

- Well, that's where KEDA comes in. Let's get our autoscaling set up. I'm going to install KEDA first, using Draft. Now you can see that it creates an autoscaling configuration here using the built-in HTTP scaler, with a minimum of two replicas and a maximum of 30. That sounds good to me, so I'll commit these changes and push them to our repo. Now let's imagine that we've entered a busy travel season. I'll simulate some load to test how our autoscaling works. I've already turned on Azure Monitor for containers in advance, which combines application and infrastructure metrics, and I'll show this in the new Azure Managed Grafana service. In the dashboard, you'll see that everything is combined into a single view for full-stack visibility. KEDA is already handling the scaling automatically: if you look at the pod count, you'll see that our existing five pods scale almost instantly to 13 pods to meet demand. And while I used Azure Monitor in this case, it's just one of the many data sources you can use with Azure Managed Grafana.
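For scaling between one and N replicas, KEDA hands off to a Kubernetes Horizontal Pod Autoscaler, which sizes a workload with a proportional rule: desired replicas = ceil(current replicas × current metric / target metric), skipped when that ratio is within a small tolerance (0.1 by default). The sketch below applies that rule and clamps the result to the two-to-30 replica range from the demo. The metric values in the usage line are hypothetical; the demo doesn't show the scaler's target or the observed load.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 2, max_replicas: int = 30,
                     tolerance: float = 0.1) -> int:
    """HPA-style proportional scaling, clamped to the demo's 2-30 replica range."""
    if current <= 0 or target <= 0:
        raise ValueError("current replicas and target must be positive")
    ratio = metric / target
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to target: leave the replica count alone
    else:
        # Multiply before dividing to avoid float error on round numbers.
        desired = math.ceil(current * metric / target)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical load: average metric per pod at 2.6x the target.
print(desired_replicas(5, 260, 100))  # 13
```

Clamping after the proportional step is what keeps a sudden burst from scaling past the configured maximum of 30, and keeps two replicas warm when load drops to zero.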

- Okay, so now you've got everything running. You've got autoscaling turned on, and you've even wired up Grafana for monitoring. So we're moving a little more into operator land, in terms of DevOps. What's in store for the operators who are watching right now?

- This time around, we're really focused on additional flexibility and performance for operators. First, there's support for running AKS clusters on ARM-based VMs, which deliver up to 50% better price-performance compared with x86-based VMs. They're great for scale-out workloads. AKS also now supports Windows Server 2022 as a target OS for your Windows node pools, and we have ready-built container images available as well. We've also updated the Azure Disk CSI driver with significant performance optimizations. Let's see how fast it can be using a benchmark. I'll run a simple file I/O workload, customized to produce I/O load similar to a PostgreSQL database. The one on the left creates disks using the regular storage class configuration, and the one on the right uses our optimized storage class, where we've customized the OS queue settings. Now I'll deploy these two workloads and their persistent volumes and confirm they're created and running the benchmark. I'll let this run for a moment; it normally takes about two minutes. Once it's done, I'll look at the logs from both jobs to see how they did. On the left, with the regular storage class, it hit around 80K reads and writes. Not bad. But on the right, you'll see that with the optimized storage class enabled by the new driver, we hit over 114K reads and around 80K writes.
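The optimized storage class in the demo isn't shown in detail. As a hedged sketch, a StorageClass for the Azure Disk CSI driver (provisioner disk.csi.azure.com) can opt into the driver's device performance tuning through its perfProfile parameter. The class name, SKU, and profile value below are assumptions, the allowed profile values are assumed rather than confirmed for every driver version, and the specific queue settings used in the benchmark aren't reproduced.

```python
import json

ALLOWED_PROFILES = {"None", "Basic", "Advanced"}  # assumed values; check your driver version


def azure_disk_storage_class(name: str, sku: str = "Premium_LRS",
                             perf_profile: str = "Basic") -> dict:
    """StorageClass for the Azure Disk CSI driver with a device performance profile."""
    if perf_profile not in ALLOWED_PROFILES:
        raise ValueError(f"unknown perfProfile: {perf_profile}")
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "disk.csi.azure.com",
        "parameters": {"skuName": sku, "perfProfile": perf_profile},
        "reclaimPolicy": "Delete",
        # Create the disk only once a pod is scheduled, in that node's zone.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


print(json.dumps(azure_disk_storage_class("managed-premium-tuned"), indent=2))
```

The benchmark jobs would then select the tuned class by name through storageClassName in their PersistentVolumeClaims.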

- And just to point out, this is running on the same disk, same spec, same hardware, but your reads are about 40% faster.
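As a quick check on that figure, going from around 80K to 114K reads is (114 - 80) / 80 = 42.5% more reads, which is consistent with the roughly 40% quoted. The same arithmetic in Python:

```python
def pct_increase(baseline: float, improved: float) -> float:
    """Percentage increase from baseline to improved."""
    # Multiply before dividing so these round numbers stay exact in floating point.
    return (improved - baseline) * 100 / baseline


reads_regular = 80_000     # approximate, from the regular storage class run
reads_optimized = 114_000  # from the optimized storage class run
print(f"{pct_increase(reads_regular, reads_optimized):.1f}% more reads")  # 42.5% more reads
```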

- That's exactly right. With the new driver, you really can optimize for specific workloads. And again, in case we see a spike in travel, we also let you make configurations on the OS networking side. Here, you can see that I can have up to 240,000 concurrent connections using the custom node configuration feature, which we're now making generally available. Once I pass this configuration to our custom node pool and deploy it, we're ready to take on massive traffic. Combined, these configurations lift your capacity ceiling so your infrastructure won't be the bottleneck. With these updates, if you're an operator, we've worked on improving flexibility and performance across the board. And for developers, we've focused on making it easier to get started and to continuously deploy new workloads.
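Custom node configuration is supplied as a JSON file when you create a node pool. The sketch below generates one with a few network- and file-related sysctls that matter for connection-heavy workloads. The key names are assumed to follow the AKS custom node configuration documentation, and the values are hypothetical; they are not the settings used to reach 240,000 connections in the demo. Such a file is typically passed with az aks nodepool add --linux-os-config; verify the key names and allowed ranges in the docs before relying on them.

```python
from __future__ import annotations

import json


def linux_os_config(somaxconn: int, syn_backlog: int, file_max: int,
                    port_range: tuple[int, int]) -> str:
    """Return a linuxosconfig-style JSON document (assumed key names, hypothetical values)."""
    low, high = port_range
    if not 1024 <= low < high <= 65535:
        raise ValueError(f"invalid local port range: {port_range}")
    config = {
        "sysctls": {
            # net.core.somaxconn: max queued connections per listening socket
            "netCoreSomaxconn": somaxconn,
            # net.ipv4.tcp_max_syn_backlog: half-open connections awaiting the final ACK
            "netIpv4TcpMaxSynBacklog": syn_backlog,
            # fs.file-max: system-wide open file handles (each socket uses one)
            "fsFileMax": file_max,
            # net.ipv4.ip_local_port_range: ephemeral ports for outbound connections
            "netIpv4IpLocalPortRange": f"{low} {high}",
        }
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(linux_os_config(65535, 65535, 1_000_000, (32000, 60000)))
```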

- And all of this, by the way, applies to hybrid as well as cross-cloud configurations. You've got all the portability of Kubernetes, and you've got Azure Arc for unified management across your infrastructure, wherever it is. So, for anybody who's watching and looking to start using some of this, what do you recommend?

- You can find everything you need to get started with Kubernetes on Azure at aka.ms/KubernetesMechanics.

- Thanks, Jorge, for joining us today and sharing what you can now do with Azure Kubernetes Service. And of course, keep checking back to Microsoft Mechanics for all the latest tech updates. Subscribe to our channel if you haven't already. And, as always, thank you for watching.