This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Introduction

MLOps (machine learning operations) applies DevOps principles and practices to increase workflow efficiency and quality across the machine learning project lifecycle. In this post, we start by highlighting the general concepts of the Microsoft MLOps Maturity Model. We then introduce MLOps architectural patterns using Azure Machine Learning CLI v2 and GitHub, elaborating on the challenges and action items at each level. A full step-by-step implementation is out of scope for this blog and will be published in our next blog.

MLOps Maturity Model

To clarify the principles and practices of MLOps, Microsoft defines an MLOps maturity model. Different AI systems have different requirements, and different organizations have different maturity levels. Hence, an MLOps maturity model is useful to:

- Understand the current MLOps maturity level of your organization

- Set concrete goals and plan the long-term roadmap for your MLOps project.

The table below summarizes each level of the MLOps maturity model. Note that this is a generic guideline, and we recommend that you customize it for your own MLOps project. We introduce more details in the following sections of this blog.

| Maturity Level | Training Process | Release Process | Integration into app |
|---|---|---|---|
| Level 0 - No MLOps | Untracked, file is provided for handoff | Manual, hand-off | Manual, heavily DS driven |
| Level 1 - DevOps no MLOps | Untracked, file is provided for handoff | Manual, hand-off to SWE | Manual, heavily DS driven, basic integration tests added |
| Level 2 - Automated Training | Tracked, run results and model artifacts are captured in a repeatable way | Manual release, clean handoff process, managed by SWE team | Manual, heavily DS driven, basic integration tests added |
| Level 3 - Automated Model Deployment | Tracked, run results and model artifacts are captured in a repeatable way | Automated, CI/CD pipeline set up, everything is version controlled | Semi-automated, unit and integration tests added, still needs human signoff |
| Level 4 - Full MLOps Automated Retraining | Tracked, run results and model artifacts are captured in a repeatable way, retraining set up based on metrics from app | Automated, CI/CD pipeline set up, everything is version controlled, A/B testing has been added | Semi-automated, unit and integration tests added, may need human signoff |
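To make the self-assessment idea concrete, here is a minimal Python sketch that maps a checklist of practices to a maturity level. The `Practices` fields and the rule that levels are cumulative are our own simplifying assumptions drawn from the table above; they are not part of the official model, and you would adapt the checklist to your own project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Practices:
    """Hypothetical self-assessment checklist derived from the table above."""
    integration_tests: bool = False        # Level 1: basic integration tests added
    tracked_training: bool = False         # Level 2: runs and artifacts captured repeatably
    automated_release: bool = False        # Level 3: CI/CD pipeline releases the model
    metric_based_retraining: bool = False  # Level 4: retraining driven by app metrics


def maturity_level(p: Practices) -> int:
    """Return the highest level whose prerequisites are all satisfied.

    Assumption: levels are cumulative, so a missing lower-level practice
    caps the result even if higher-level practices are in place.
    """
    ladder = [
        p.integration_tests,
        p.tracked_training,
        p.automated_release,
        p.metric_based_retraining,
    ]
    level = 0
    for satisfied in ladder:
        if not satisfied:
            break
        level += 1
    return level
```

For example, an organization with integration tests and tracked training but a manual release process would be assessed at Level 2 under these assumptions.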

As you move to a higher level of maturity, you may notice that maturity is not only about technology: the organization, the people, and the culture become increasingly important. As this blog focuses on the technology, please see AI Business School to learn about AI business strategies and an AI-ready culture for your organization.

Azure Machine Learning

Azure Machine Learning is an open-source-friendly machine learning platform that can be used to implement the full machine learning lifecycle and MLOps through integration with GitHub (or Azure DevOps). It also provides Responsible AI technologies that help you develop, use, and govern AI responsibly.

In the following sections, we use an Azure Machine Learning Workspace and its assets to implement MLOps. A basic knowledge of Azure Machine Learning, GitHub, and GitHub Actions concepts is recommended to follow the sections below.

Azure Machine Learning Workspace and assets

Azure Machine Learning Workspace is the top-level resource of Azure Machine Learning. It relies on Azure Storage Account, Azure Container Registry, Azure Key Vault, Azure Application Insights, and other related Azure services depending on your requirements. Here is a list of the Azure Machine Learning assets we want you to understand before reading the rest of this blog.

- Data
  - Connect to Azure storage services and manage subsets of data.
- Job (Experiments and Runs)
  - Handle job requests and execute them.
  - Manage the metrics and logs of each job.
- Model
  - Register and manage trained models with metadata.
- Environment
  - Create and manage the runtime (Docker image) for training and inference.
- Component
  - Create and manage a self-contained piece of code that does one step in a machine learning pipeline.
- Pipeline
  - Create and manage reproducible machine learning workflows.
- Endpoint
  - Create and manage inference environments such as Managed Endpoints.

For more details, please check out How Azure Machine Learning works: resources and assets (v2).

MLOps Maturity Model with Azure Machine Learning

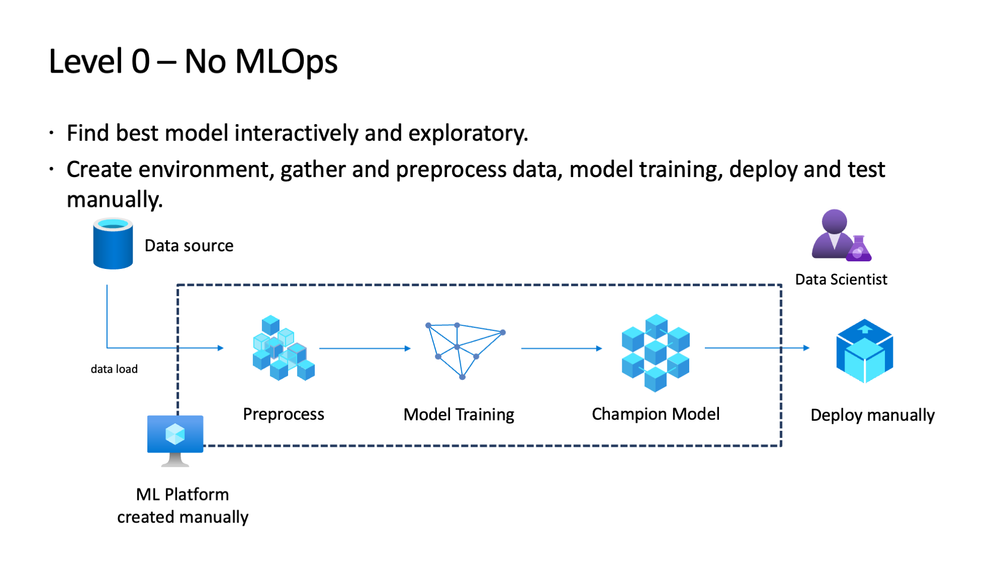

Level 0 : No MLOps

The tables that follow identify the detailed characteristics for this level of process maturity.

| People | Model Creation | Model Release | Application Integration |
|---|---|---|---|
| | | | |

Azure Machine Learning Architecture

If you use Azure Machine Learning, a Compute Instance is a good place to start your machine learning journey. It includes essential Python/R libraries and development tools such as Jupyter Notebook, JupyterLab, RStudio, and the VS Code remote development feature. After training models, you can deploy them yourself or use Managed Endpoints to deploy them quickly.

Challenges

- Reproducibility
  - It is very difficult to reproduce model training jobs because each data scientist uses their own customized machine learning tools, code, and Python/R packages, none of which are shared across the organization.
- Quality & Security
  - No tests are configured, and testing policies are not designed across the organization.
  - Machine learning tools raise security concerns because they are not governed by IT.
- Scalability
  - Compute resources often lack the power to run jobs at scale.
  - High-performance compute resources are not shared within the organization, which increases cost.
- Other
  - Data sources are not maintained for machine learning, so getting training data requires manual steps.

What's next?

- Standardize the machine learning platform across the organization and create shared high-performance compute resources.

- Set up a code repository and share the code used by data scientists.

- Refactor the code and rewrite it as testable Python scripts (.py).

- Configure and automate code tests for the train and score scripts.

- Set up a data pipeline so that data is easy to get.
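As an illustration of what "testable Python script" can mean, the sketch below separates training and scoring into small pure functions and adds a unit test that a CI workflow could run. The model (a one-variable least-squares fit) and the function names `train`, `score`, and `test_train_recovers_known_line` are placeholders chosen for brevity, not code from any real project.

```python
from statistics import mean


def train(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return {"slope": slope, "intercept": y_bar - slope * x_bar}


def score(model, xs):
    """Apply a fitted model to new inputs."""
    return [model["slope"] * x + model["intercept"] for x in xs]


def test_train_recovers_known_line():
    """Unit test: data generated from y = 2x + 1 should give back that line."""
    model = train([0, 1, 2, 3], [1, 3, 5, 7])
    assert abs(model["slope"] - 2.0) < 1e-9
    assert abs(model["intercept"] - 1.0) < 1e-9
    assert abs(score(model, [10])[0] - 21.0) < 1e-9
```

Because `train` and `score` take plain inputs and return plain outputs, they can be exercised by a test runner such as pytest without a notebook or a compute cluster.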

Next, we will introduce Level 1 : DevOps no MLOps

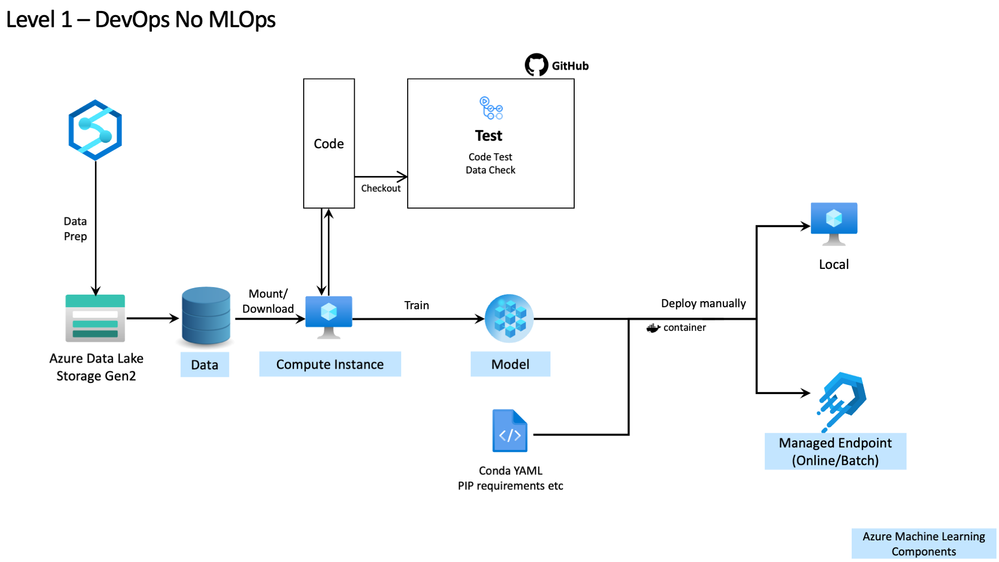

Level 1 : DevOps no MLOps

At this level, data scientists work on a standardized machine learning platform. The data pipeline is maintained by data engineers, so it is easy to get data for model training.

However, reproducibility in model training is still an issue because machine learning assets such as data and Python packages are not shared. Job dependencies within a training workflow are often complicated, yet data scientists do not share them with one another.

| People | Model Creation | Model Release | Application Integration |
|---|---|---|---|
| | | | |

Azure Machine Learning Architecture

Azure Machine Learning is a shared and collaborative machine learning platform. By using the Data feature to connect to Azure storage services such as Azure Data Lake Storage Gen2, you can easily access data sources from Azure Machine Learning and mount or download them to a Compute Instance. Once you have trained a model, you can register it in the Model registry and deploy it using Managed Endpoints, which streamline model deployment for both real-time and batch inference. The general concept is explained in this document.

Azure Machine Learning can be integrated with GitHub, allowing you to share your train and score code in a GitHub repository and to test the code automatically using GitHub Actions.

Challenges

- Reproducibility
  - Assets in the machine learning lifecycle are not shared across the organization.
  - Some model training workflows have complex job dependencies and are difficult for others to run.

What's next?

- Ensure reproducibility of the experiments.
  - Create a pipeline to automate and reproduce model training workflows.
  - Save the assets (not only code but also data, metrics/logs, models, etc.) associated with the experiments.
- Operate the model.
  - Register the trained model and associate it with the experiments.
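The idea of saving assets and linking a registered model back to its experiment can be sketched in plain Python. `RunRecord` and `ModelRegistry` below are hypothetical in-memory stand-ins that we introduce only for illustration; they are not the Azure Machine Learning API, which provides its own Job and Model assets for this purpose.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def fingerprint(data: bytes) -> str:
    """Content hash that identifies exactly which data a run used."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class RunRecord:
    """Everything needed to trace a model back to how it was produced."""
    run_id: str
    code_version: str   # for example, a Git commit SHA
    data_sha256: str
    params: dict
    metrics: dict = field(default_factory=dict)


class ModelRegistry:
    """In-memory stand-in for a model registry (hypothetical)."""

    def __init__(self):
        self._versions = {}

    def register(self, name: str, run: RunRecord) -> int:
        """Store the run behind a new model version and return its number."""
        versions = self._versions.setdefault(name, [])
        versions.append(run)
        return len(versions)

    def lineage(self, name: str, version: int) -> str:
        """Return the run record behind a model version as JSON."""
        return json.dumps(asdict(self._versions[name][version - 1]), sort_keys=True)
```

With a record like this attached to every registered version, anyone in the organization can see which code, data, and parameters produced a given model.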

Next, we will introduce Level 2 : Automated Training

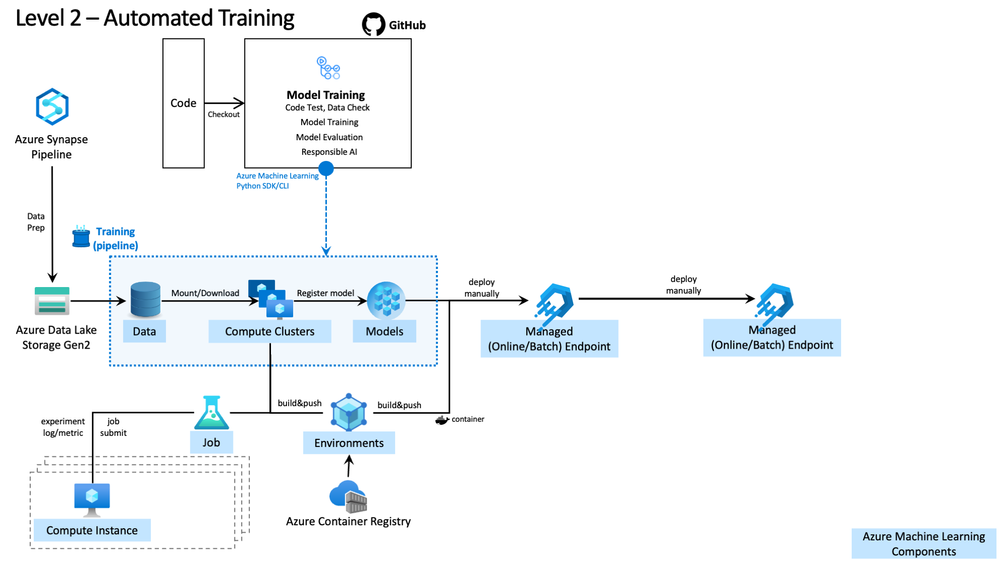

Level 2 : Automated Training

| People | Model Creation | Model Release | Application Integration |
|---|---|---|---|
| | | | |

Azure Machine Learning Architecture

Typically, the model training workflow is defined by a Pipeline, which captures complex job dependencies (if the training workflow is simple, you don't have to use one).

A Job manages your training run and logs its parameters and metrics. Azure Machine Learning CLI v2 is the newer version of the Azure Machine Learning command-line interface. You can define your job in YAML files and run it using Azure Machine Learning CLI v2. Python SDK v1 and v2 are also available if you prefer to define jobs in Python code.

Finally, an Environment defines the Python packages, environment variables, and Docker settings for model training and scoring.
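To show what "capturing job dependencies" means in practice, here is a small sketch that declares steps and their prerequisites and runs them in a valid order using the standard library's `graphlib`. The step names and the `run_pipeline` helper are illustrative assumptions; an Azure Machine Learning Pipeline expresses the same idea with its own job definitions.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on (hypothetical step names).
PIPELINE = {
    "prepare_data": set(),
    "train": {"prepare_data"},
    "evaluate": {"train"},
    "register": {"evaluate"},
}


def run_pipeline(steps, pipeline=PIPELINE):
    """Run every step after its dependencies and pass their outputs along.

    `steps` maps a step name to a callable that takes a dict of upstream
    outputs (keyed by step name) and returns this step's output.
    """
    order = list(TopologicalSorter(pipeline).static_order())
    results = {}
    for name in order:
        inputs = {dep: results[dep] for dep in pipeline[name]}
        results[name] = steps[name](inputs)
    return order, results
```

Declaring the dependencies as data, rather than relying on the order in which someone runs notebook cells, is what lets another person or an automated system rerun the workflow.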

You can use GitHub Actions to trigger a model training job easily via Azure ML CLI v2. Typical cases for triggering a model training job are as follows:

- Trigger a job every time the code in GitHub is changed by a pull request or commit.

- Trigger a job on a schedule to train the model using new data.
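The two trigger cases above can be sketched as a small Python helper that a workflow step might call. It builds an `az ml job create` command and picks a job file based on the `GITHUB_EVENT_NAME` value that GitHub Actions provides. The YAML file paths and the helper names are hypothetical, and the sketch assumes the Azure CLI with the `ml` extension is installed and already authenticated on the runner.

```python
import subprocess


def select_job_file(event_name: str) -> str:
    """Choose a job definition for the triggering event (paths are hypothetical)."""
    # A scheduled run retrains on new data; pushes and pull requests
    # run the regular training job against the changed code.
    return "jobs/retrain.yml" if event_name == "schedule" else "jobs/train.yml"


def build_job_command(job_file, resource_group, workspace, stream=True):
    """Build the Azure ML CLI v2 command that submits a job from a YAML file."""
    cmd = [
        "az", "ml", "job", "create",
        "--file", job_file,
        "--resource-group", resource_group,
        "--workspace-name", workspace,
    ]
    if stream:
        cmd.append("--stream")  # stream the job logs so CI output shows progress
    return cmd


def submit(cmd):
    """Run the command and raise if the CLI exits with a non-zero status."""
    subprocess.run(cmd, check=True)
```

In a workflow, the event name would be read from the `GITHUB_EVENT_NAME` environment variable, and the resource group and workspace names would typically come from repository variables or secrets.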

Challenges

- Deployment
  - Deploy models into multiple inference endpoints quickly.
- Model quality
  - Test the trained model's behavior and performance.
  - Ensure the model has interpretability/explainability and fairness.

What's next?

- Deploy pipelines.
  - Define the workflows of your model deployment for automation.
- Improve model quality.
  - Automate model testing using test data.
  - Increase transparency by using interpretable machine learning models or by applying explainability techniques to the trained model.
  - Evaluate the fairness of the trained model, and mitigate model bias if necessary.
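An automated model test with a simple fairness check could look like the following sketch. Accuracy and demographic parity difference are just two example metrics that we picked for illustration, and the default thresholds are arbitrary placeholders; the right metrics and limits depend on your application.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_difference(y_pred, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    totals, positives = {}, {}
    for pred, group in zip(y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (pred == 1)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def quality_gate(y_true, y_pred, groups, min_accuracy=0.8, max_parity_gap=0.2):
    """Return a list of failed checks; an empty list means the model passes."""
    failures = []
    acc = accuracy(y_true, y_pred)
    if acc < min_accuracy:
        failures.append(f"accuracy {acc:.2f} is below {min_accuracy:.2f}")
    gap = demographic_parity_difference(y_pred, groups)
    if gap > max_parity_gap:
        failures.append(f"parity gap {gap:.2f} is above {max_parity_gap:.2f}")
    return failures
```

A training pipeline could run a gate like this on held-out test data and stop before registration when the returned list is not empty.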

Next, we will introduce Level 3 : Automated Model Deployment

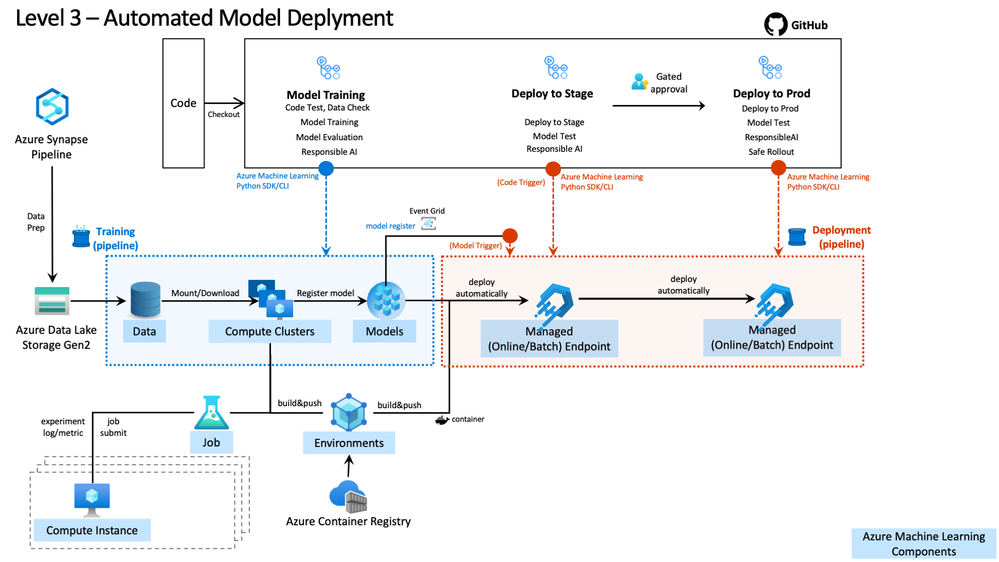

Level 3 : Automated Model Deployment

At this level, trained models are deployed automatically to eliminate human errors and increase quality, security, and compliance in the machine learning application.

The tables that follow identify the detailed characteristics for this level of process maturity.

| People | Model Creation | Model Release | Application Integration |
|---|---|---|---|
| | | | |

Azure Machine Learning Architecture

At this level, trained models are deployed to a Managed Endpoint using Azure ML CLI v2. GitHub Actions works well with the Azure CLI Action for automation, but you don't have to automate the full lifecycle. If you want to put a release gate between Stage and Production, you can use GitHub environments.

Challenges

- Model degradation
  - The model is not updated after deployment, so there is a high possibility that the user experience gets worse as model performance degrades over time.
- Model Retraining
  - Inference environments are not monitored enough, so it is hard to predict when to retrain the model.
  - It is difficult to deploy a new model into the production inference environment without affecting customers.

What's next?

- Implement a monitoring system.
  - Detect data drift by scanning data regularly.
  - Monitor the metrics and logs that are associated with your model and system performance.
- Automation
  - Trigger the training pipeline to retrain the model based on the metrics and logs above.
  - Roll out new models safely into the production endpoint using blue-green deployment.
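As one possible way to detect data drift, the sketch below computes the population stability index (PSI) between a baseline sample and recent data for a single numeric feature. PSI is our choice for illustration only; this post does not prescribe a drift metric. The binning scheme, the `eps` floor for empty bins, and the commonly quoted rule of thumb that a PSI above roughly 0.2 deserves attention are all assumptions to tune for your data.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population stability index of `actual` relative to `expected`.

    Bins are equal-width over the range of `expected`; values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A scheduled job could compute this per feature against the training data and raise an alert, or feed a retraining decision, when the value crosses the chosen threshold.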

Next, we will introduce Level 4 : Full MLOps Automated Retraining

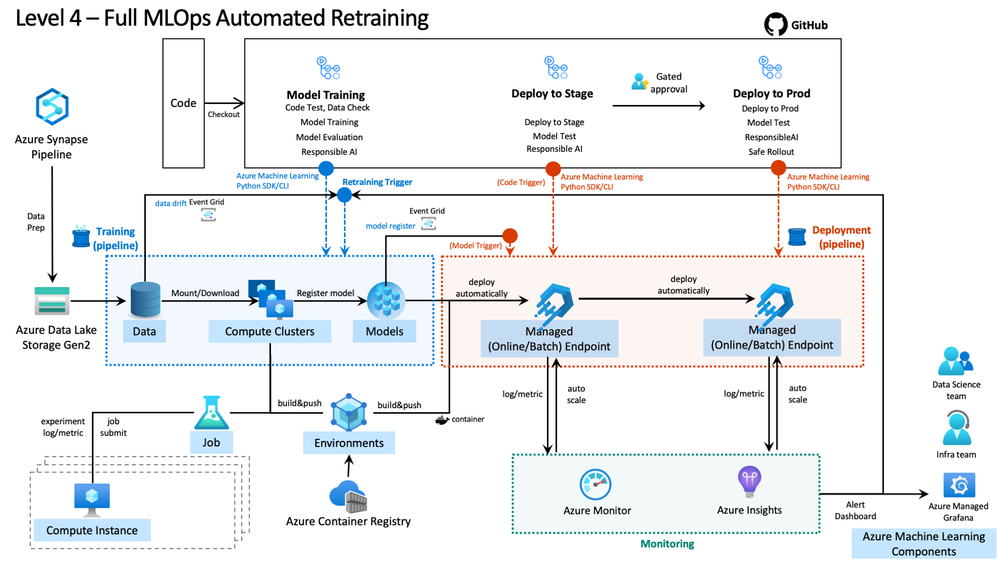

Level 4 : Full MLOps Automated Retraining

At this level, monitoring and retraining are introduced for continuous model improvement.

| People | Model Creation | Model Release | Application Integration |
|---|---|---|---|
| | | | |

Azure Machine Learning Architecture

Metrics and logs are stored and analyzed in Azure Monitor and Azure Application Insights. You can check whether the deployed model performs well and whether system performance needs to be improved. A retraining job is triggered either automatically by the defined metrics or by human judgment.
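The split between automatic triggers and human judgment can be sketched as a small decision rule over a rolling window of a monitored score. The `RetrainingTrigger` class, its thresholds, and the "review" band that asks for a human decision are illustrative assumptions, not a feature of Azure Monitor or Application Insights.

```python
from collections import deque
from statistics import mean


class RetrainingTrigger:
    """Decide what to do from the rolling average of a model quality score."""

    def __init__(self, retrain_below=0.80, review_below=0.85, window=5):
        self.retrain_below = retrain_below  # below this: retrain automatically
        self.review_below = review_below    # below this: ask a human to review
        self.scores = deque(maxlen=window)

    def observe(self, score: float) -> str:
        """Record a new score and return 'wait', 'ok', 'review', or 'retrain'."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return "wait"  # not enough observations for a stable average
        avg = mean(self.scores)
        if avg < self.retrain_below:
            return "retrain"
        if avg < self.review_below:
            return "review"
        return "ok"
```

Averaging over a window keeps a single noisy measurement from starting a retraining job, while the review band leaves room for human sign-off when the signal is ambiguous.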

When deploying a new model into an existing inference endpoint, for example a Managed Online Endpoint, you can use blue-green deployment (and mirrored traffic) to control the traffic sent to the new model without affecting the existing production environment before rolling the new model out completely.
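The traffic-shifting logic behind a blue-green rollout can be sketched as follows. The deployment names "blue" (current) and "green" (new), the step percentages, and the `advance_rollout` helper are assumptions for illustration; applying the resulting split to a real endpoint is a separate step that depends on your tooling.

```python
def advance_rollout(green_percent, healthy, steps=(0, 10, 50, 100)):
    """Return the next traffic split between the blue and green deployments.

    If the green deployment is unhealthy, all traffic goes back to blue.
    Otherwise green moves to the next larger percentage in `steps`.
    """
    if not healthy:
        next_green = 0
    else:
        later = [s for s in steps if s > green_percent]
        next_green = later[0] if later else 100
    return {"blue": 100 - next_green, "green": next_green}
```

Starting from 0 percent, repeated calls with a healthy green deployment move traffic to 10, 50, and then 100 percent, and a single unhealthy check at any stage returns everything to blue.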

Summary

We introduced the concept of an MLOps maturity model and explained the architecture and product features of Azure Machine Learning and GitHub used in each maturity level. We also discussed the challenges and what's next for each maturity level. In the next blog, we will describe how to implement MLOps using Azure Machine Learning and GitHub.

Learn more about MLOps

- Read the product websites to learn the basics.

- Read the product documents for details.

- Check out the sample code to implement MLOps using Azure ML CLI v2.

- Use MLOps templates to accelerate your MLOps projects.

Acknowledgements

We would like to thank the following people for their help and contributions to this post:

- Azure CXP - FastTrack for Azure
  - Meer Alam, Kris Bock
- Customer Success Unit - Cloud Solution Architect (ML Insider team in Japan)
  - Shunta Ito, Kohei Ogawa