This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

This is the second of two blog posts on this topic. This one takes the Node.js app that works against Azure Storage Blobs for image manipulation and gets it running in an Azure Function, with an external executable running in the context of an Azure App Service. Please see Part 1 to get caught up if necessary.

First, let me make an important disclaimer, before I talk about anything else:

This Sample Code is provided for the purpose of illustration only and is not intended to be used in a production environment. THIS SAMPLE CODE AND ANY RELATED INFORMATION ARE PROVIDED "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE IMPLIED WARRANTIES OF MERCHANTABILITY AND/OR FITNESS FOR A PARTICULAR PURPOSE. We grant You a nonexclusive, royalty-free right to use and modify the Sample Code and to reproduce and distribute the object code form of the Sample Code, provided that You agree: (i) to not use Our name, logo, or trademarks to market Your software product in which the Sample Code is embedded; (ii) to include a valid copyright notice on Your software product in which the Sample Code is embedded; and (iii) to indemnify, hold harmless, and defend Us and Our suppliers from and against any claims or lawsuits, including attorneys’ fees, that arise or result from the use or distribution of the Sample Code.

OK, now that that's out of the way... last time, I left you with a Node.js app running locally on your machine that knew how to read a picture from an Azure Blob, call out to a local-to-the-app copy of the GraphicsMagick executable through the gm module to manipulate that image, then write that image back to a new Azure Blob. Looking back at that code, it's all pretty straightforward, especially given that the executable can work with pipes, and the module exposes that.

So, you have this code now, and it's working on your machine. Let's get that into the Azure Function app! Last time, you created that app and the underlying Azure Storage Account, which is what you're working with for the images. That app, as mentioned, uses a local executable. Luckily for my demo, that executable and its associated libraries are essentially built in a "portable" way, so everything strictly needed is local to the installation folder. That makes it easy for us here, because we can just put that folder up there.

Start with creating a ZIP file with the necessary application parts. Last time, the executables were copied wholesale out of the Program Files folder to the utils folder of the code. Open that folder in File Explorer, and delete the folders and files that aren't related to the executable. (You don't strictly need to do this, but why pay for storage you don't need to pay for?) This means deleting the following folders:

- licenses

- uninstall

- www

and, in the case of version 1.3.36 on Windows, which is what I have installed, the following files:

- unins000.exe

- unins000.dat

- GraphicsMagick.ico

- ChangeLog.txt

- Copyright.txt

- README.txt

You now have a "minimum" install folder. (It's important to note that I'm not demoing any of the GraphicsMagick functionality that uses GhostScript; if I was, I'd have to deal with that dependency.) In the utils folder, ZIP the GraphicsMagick-version folder through right-click, Send to, Compressed (zipped) folder or, on Windows 11, right-click, Compress to ZIP file. (You can do this however you want, of course, if this is not your preferred method.) Hold on to this ZIP file for now; it's going to come up later on after some other work in the Azure Portal.

Open the Azure Portal and navigate to your Azure Function App Service (e.g. through Search at the top) if you are not already there (we left things at the Storage Account, so you probably aren't there.) Under Functions, select Functions, then in the command bar at the top, select + Create. When prompted, choose to Develop in portal with the HTTP trigger template. Both of these are "because we're doing this as a demo" choices... in production, you might do something like an Azure Blob Storage trigger to automatically process blobs as created, or have a proper deployment pipeline as previously mentioned.

Once you select the HTTP trigger template, a new pair of fields will appear at the bottom. Enter a good name for the New Function (this name does not need to be unique across the Azure cloud you're working in, unlike the Azure Function App name) and leave the Authorization level at the default of Function. The Create operation will happen very quickly - after a few seconds, generally.

Once you have the function in place, we can put in the function code. Under Developer, select Code + Test, which will open up a decent text editor in the browser. Make the following changes:

- Delete line 11.

- Delete lines 2 through 8.

This will leave your function body looking like this:

Now:

- Change back to Visual Studio Code.

- Copy all of the app.js application code (including your key and account name, for now) into your clipboard.

- Paste that code in between lines 1 and 2 in the code editor.

- If you'd like, highlight all of the inserted lines and press the Tab key to indent all of them so the code looks nice.

- Delete lines 2 and 3, which will be the 'use strict' line and the blank line after it. Your code should look like this:

On the command bar at the top, select Save to save the code changes. You can't quite test this yet, because we haven't gotten the executables up to Azure yet, nor configured the Node.js packages. We'll do that now.

First, let's get that folder up to the filesystem underlying the App Service hosting your Azure Function, and get the Node.js configuration sorted while we're at it. As explained in the first post, this post isn't about Azure deployment processes such as CI/CD pipelines, so we're going to do this all manually, but in production you would very likely use a more automated process.

Close the function pane using the close button at the top right of the pane (not the browser close button):

This will take you back to the Function App pane. On the left, under Development Tools (you'll probably need to scroll down to get to it), select Advanced Tools, then Go ➡:

You can also use Search (Ctrl+/) at the top of the left-hand side to search for this. Either way, this will bring you into the main Kudu window. Kudu is the open-source engine behind Git deployments in Azure App Service as well as a development support tool in its own right. In this case, we're going to use it to do some file uploading and some basic filesystem work.

- Start by selecting CMD under the Debug console menu at the top of the screen. This will open a web-based console window and file browser.

- In the file browser area, click site, then wwwroot, then the name of your function. The console working directory will change as well.

- In the console window, type mkdir utils and press Enter. Notice the new folder appears immediately in the file browser area.

- Still in the console window, type cd utils and press Enter. Notice the file browser area changes to match.

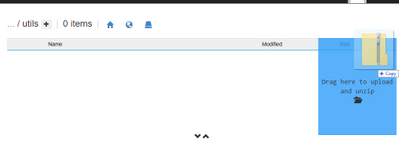

- In File Explorer, click your GraphicsMagick-version.zip file, and drag it to your browser. As you hover it over the Kudu window, a drop area will appear to the right that indicates a file will be unzipped if dropped there:

- Drop the file there and wait. An upload indicator in the upper-right will progress to 100%, then stay visible for a while as the file is unzipped:

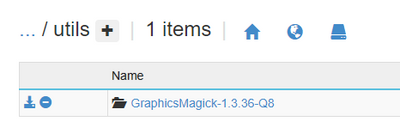

- After the upload and unzip have finished, you'll see the new folder in the file browser listing. The work in Kudu is almost, but not quite, done, because we need to worry about the Node.js package management; remember that we are referencing two packages, one of which needs the executable we just uploaded (that's why we bothered!)...

- Still in the Command Prompt in Kudu, type cd .. to get back to your main code folder (or use the ... link at the top of the folder view, to the left of / utils).

- In your Function folder view, copy your package.json file to the Function by dragging and dropping it from File Explorer (you probably have File Explorer open somewhere in the utils folder from earlier, so it should be easy to move back up one level to where the package.json file lives).

- Type the following command to initialize the NPM configuration: npm i. This will run for a little while, bringing in the package dependencies for the solution. You'll probably get some warnings about your repository setup and the like; we don't care right now. Again, not production!

Note that technically, I'm not following best practices here. We document that package.json should live in the root folder (not in an individual function), and we'll automatically do an npm install when you deploy from source control (but not in the Portal like we did here). But... say it with me... not production! The focus here is the external executable bit!
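Before running anything, it can be worth having the code confirm that the executable really landed where it expects. Here's a minimal sketch; resolveGmExecutable is a hypothetical helper name, and it assumes the ZIP unpacked into utils as a single folder containing gm.exe, as in the steps above.

```javascript
const fs = require("fs");
const path = require("path");

// Builds the expected path to gm.exe under <functionDir>/utils/<gmFolder>
// and throws early, with a readable message, if the file isn't there.
// gmFolder is whatever your unzipped GraphicsMagick folder is called.
function resolveGmExecutable(functionDir, gmFolder) {
  const exePath = path.join(functionDir, "utils", gmFolder, "gm.exe");
  if (!fs.existsSync(exePath)) {
    throw new Error(`GraphicsMagick executable not found at ${exePath}`);
  }
  return exePath;
}
```

Inside the function you could call it with __dirname as the first argument and your own folder name as the second. A missing upload then shows up as a clear error in the logs rather than a confusing failure from the gm module.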

At this point, your function is deployed, with appropriate modules in the installation. Let's test it.

- Change back in your browser to the Function App tab and, under the Functions section, change to the Functions list.

- Click/tap on your function in the list.

- Under Developer, select Code + Test.

- In the command bar at the top, select Test/Run.

- The sample function has a bunch of stuff hard-coded, so the Body doesn't matter, and the test environment will send a usable Key for authentication. So you can now use the Run button...

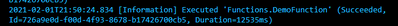

- Notice that a Filesystem Logs view opens. If all goes well, you'll get a Succeeded message after a short time:

Congratulations, the Function App is calling the executable successfully!
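The portal's Test/Run supplies the key for you. If you wanted to call the function from somewhere else, with the Function authorization level you would have to pass a function key yourself. Here's a rough sketch of building that URL; buildFunctionUrl is a hypothetical helper, and it assumes the default api route prefix and the public azurewebsites.net domain, neither of which may hold for your setup (other Azure clouds use different domains).

```javascript
// Builds the invoke URL for an HTTP-triggered function that uses
// Function-level authorization, passing the key in the "code" query parameter.
// Assumes the default "api" route prefix and the public Azure domain.
function buildFunctionUrl(appName, functionName, functionKey) {
  const url = new URL(
    `https://${appName}.azurewebsites.net/api/${encodeURIComponent(functionName)}`
  );
  url.searchParams.set("code", functionKey);
  return url.toString();
}
```

The app name, function name, and key are all values you would copy from your own Function App; treat the key like a password and keep it out of source control.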

So, what next here, if you were doing this for real? Well, proper CI/CD of course... and the storage account information should be in the Application settings for the function, ideally referencing Key Vault (notice the commented out code that references the process environment for this information)... but that's out of scope for the already long pair of blog posts.
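To give a flavor of the Application settings approach: settings on a Function App surface to Node.js as environment variables, so the hard-coded account name and key could be swapped for something like the sketch below. The setting names STORAGE_ACCOUNT_NAME and STORAGE_ACCOUNT_KEY and the helper name getStorageSettings are made up for illustration; use whatever names you configure.

```javascript
// Reads storage settings from environment variables, which is how
// Function App application settings are exposed to Node.js code.
// STORAGE_ACCOUNT_NAME and STORAGE_ACCOUNT_KEY are hypothetical names.
function getStorageSettings(env = process.env) {
  const accountName = env.STORAGE_ACCOUNT_NAME;
  const accountKey = env.STORAGE_ACCOUNT_KEY;
  if (!accountName || !accountKey) {
    throw new Error(
      "Missing STORAGE_ACCOUNT_NAME or STORAGE_ACCOUNT_KEY application setting"
    );
  }
  return { accountName, accountKey };
}
```

With a Key Vault reference behind the setting, the code wouldn't need to change, since the function still just reads an environment variable.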