This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Have you ever wondered how to integrate additional hardware into Azure Percept, such as an Inertial Measurement Unit (IMU) and a couple of servo motors?

You might think of using the GPIO pins on the carrier board, but that isn't a great idea: they are hard to reach for wiring or soldering, and accessing GPIO natively from the carrier board is also difficult.

An alternative is to connect an external microcontroller (MCU) to one of the USB ports and let it handle the interfacing with the actual sensors and actuators. This also increases the overall security of the solution; I'll explain how at the end.

What?

In this article, I'll go over the steps to connect an external MCU over USB, attach an inertial measurement unit (IMU) sensor and a couple of servo motors to it, and build a simple mechanical assembly that pans and tilts the Azure Percept Eye module while knowing exactly where it is pointing.

Why do that?

The Azure Percept camera sensor has a wide field of view (120° diagonal), but what if you want to detect an object outside that range?

One option is to use multiple cameras to cover a wider angle; an alternative is simply to make the camera move!

I'll start by explaining the mechanical design, including the 3D model I developed and printed and some pre-built metal plates used to mount the camera on the servos. Then I'll dive into the Raspberry Pi Pico firmware and the decisions I made about exchanging messages with the Azure Percept carrier board. Next come the custom IoT Edge modules I built to communicate with the Pico board and, yes, to process the Eye module's inference locally! I'll also spend some time on the Custom Vision model I trained, and I'll wrap up with the lessons learned and next steps.

The mechanics

Ok, let's start with the mechanical mounting. The Azure Percept components (carrier board, Video and Audio modules) support a well-known industrial mounting system called 80/20 (Azure Percept DK 80/20 integration | Microsoft Docs).

The very first thing I did was design an adapter from the 80/20 to the servo motor and the tripod.

The Video Module can now be mounted on a couple of servo motors that will pan and tilt it!

So, I took some measurements of the 80/20 profile and, with my 3D modeling tool, sketched the following object: Percept 2 Tripod by gornialberto - Thingiverse

This adapts to the 80/20 mounting rail and enables you to attach the Video Module to the servo kit with a simple screw thanks to the bottom hole.

The next step was finding a servo-driven pan & tilt kit. I realized I could just use a mix of off-the-shelf metal mounting parts and yet another custom 3D-printed model.

For the tilt, I found this base bracket: Servo Motor Bracket, High-Quality Materials, MG995 Motor Bracket, Steering Column Rotation, Comfortable and Durable for MG995 : Amazon.it: Toys & Games

Then I designed a 3D holding plate for the pan motor: Percept Eye Pan&Tilt kit by gornialberto - Thingiverse

Finally, I needed a way to keep the electronics board close to the servos:

And here we go! That’s the final result:

The Electronics

To make things move, you also need some electronics, and this is where things start to get interesting. As mentioned earlier, instead of connecting the servos and the IMU directly to the carrier board, I decided to use the Raspberry Pi Pico MCU. It's easy to use (you can program it in Python), quite cheap, and powerful. Unfortunately, moving things also takes a decent amount of power, so I can't drive the servos directly from the Raspberry Pi Pico. Instead, I opted for a PWM servo driver board, which the Pico controls over the I2C bus.

To track the camera's orientation, I initially chose a GY-521 IMU module, but it has no digital compass: the heading would have to be estimated by integrating the gyroscope readings over time, which drifts and makes it hard to get the orientation right. So I opted for a slightly more expensive MPU-9250 IMU, which also includes a digital compass (magnetometer) and still works on the I2C bus, so I chained it on the same bus as the servo driver board!
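To illustrate why the magnetometer matters, here is a minimal sketch of how roll, pitch and a tilt-compensated compass heading can be computed from a single accelerometer sample and a single magnetometer sample, with no integration over time and therefore no drift. It uses the standard tilt-compensated compass formulas and assumes both sensors report in the same axis frame and the magnetometer is already calibrated. On the MPU-9250 the magnetometer axes may not match the accelerometer's, so check the datasheet and remap them first. The function name is hypothetical and not taken from the author's firmware.

```python
import math


def orientation_from_imu(ax, ay, az, mx, my, mz):
    """Return (roll, pitch, heading) in degrees from one accelerometer and
    one magnetometer sample using a tilt-compensated compass.

    Assumes both sensors share the same axes and the magnetometer is calibrated.
    """
    # Roll and pitch come from the direction of gravity alone.
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, ay * math.sin(roll) + az * math.cos(roll))

    # Rotate the measured magnetic field back into the horizontal plane...
    bfx = (mx * math.cos(pitch)
           + my * math.sin(pitch) * math.sin(roll)
           + mz * math.sin(pitch) * math.cos(roll))
    bfy = mz * math.sin(roll) - my * math.cos(roll)

    # ...and read the heading from its horizontal components, in [0, 360).
    heading = math.degrees(math.atan2(bfy, bfx)) % 360.0
    return math.degrees(roll), math.degrees(pitch), heading
```

With the board lying flat and its x axis pointing at magnetic north the heading is 0°; turning it so x points east gives 90°.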

Below you can find the shopping list:

This is the PWM servo driver board, based on the PCA9685:

SunFounder PCA9685 16 Channel 12 Bit PWM Servo Driver compatible with Arduino and Raspberry Pi (MEHRWEG) : Amazon.it: Computers

The beauty of this board is that it takes care of generating the PWM signals, so the Pico code stays simple and its loop timing can be relaxed. I was also able to power the board directly from the USB port, bypassing the Raspberry Pi Pico's voltage regulator.
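To make the PWM part concrete: the PCA9685 splits each PWM period into 4096 steps (12 bits), so positioning a servo comes down to choosing how many of those steps the pulse stays high. The sketch below assumes a 50 Hz update rate, the datasheet's nominal 25 MHz internal oscillator, and a 500 to 2500 µs pulse range for 0 to 180 degrees. That pulse range is an assumption because it varies between servos, so check yours. The function names are hypothetical.

```python
def pca9685_prescale(freq_hz, osc_hz=25_000_000):
    """PRE_SCALE register value for a PWM frequency (PCA9685 datasheet formula)."""
    return round(osc_hz / (4096 * freq_hz)) - 1


def angle_to_ticks(angle_deg, freq_hz=50, min_us=500, max_us=2500, max_angle=180):
    """Convert a servo angle to a PCA9685 12-bit 'off' count (pulse starts at 0).

    min_us and max_us are ASSUMED pulse widths for 0 and max_angle degrees.
    """
    angle_deg = max(0, min(max_angle, angle_deg))    # clamp to the servo's range
    pulse_us = min_us + (max_us - min_us) * angle_deg / max_angle
    period_us = 1_000_000 / freq_hz                  # 20 000 us at 50 Hz
    return round(pulse_us / period_us * 4096)        # 4096 steps per period
```

For example, at 50 Hz a 90° command is a 1500 µs pulse, i.e. about 307 of the 4096 counts. On CircuitPython you would not normally compute this by hand: Adafruit's `adafruit_motor.servo.Servo` wraps a PCA9685 channel and exposes an `angle` property.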

As for the servos, any MG996-based servo will do; just check that it runs at 5 V.

These ones would work: ZHITING 2-Pack 55g Digital Servo Motor with Metal Gears for Futaba JR RC Robots, Helicopters, Cars and Boats : Amazon.it: Toys & Games

Then, of course, the Raspberry Pi Pico: IBest for Raspberry Pi Pico RP2040 Microcontroller Board with Pre-Soldered Header Flexible Mini Board Based on Raspberry Pi RP2040 Chip,Dual-Core Arm Cortex M0+ Processor,Support C/C++/Python : Amazon.it: Computers

Lastly, I got this IMU sensor: Paradisetronic.com MPU-9250 3-Axis Accelerometer, Gyroscope and Magnetometer 9DOF Module, I2C, SPI, e.g. for Arduino, Genuino, Raspberry Pi : Amazon.it: Computers

And some jumper wires and headers to solder onto the boards.

Below you can find the wiring diagram:

The schematic (a Fritzing file) can be downloaded from the GitHub repo here: https://github.com/algorni/AzurePerceptDKPanAndTilt/blob/main/PerceptDK%20Pan%20and%20Tilt.fzz

The Raspi Pico firmware

As the Raspberry Pi Pico supports Python as a programming language, I decided to go for that.

I was initially inspired by this blog post: RAREblog: Controlling a Raspberry Pi Pico remotely using PySerial (rareschool.com), and I tried the same approach. The main difference was that I built a .NET Core application that uses the SerialPort class to connect to the REPL interface exposed by the MicroPython firmware running on the Raspberry Pi Pico.

Unfortunately, I ran into a lot of issues with this approach, so I eventually moved to CircuitPython, which can expose two virtual serial ports over USB: one dedicated to the REPL console and a "data only" one on top of which you can implement your own custom protocol.

Below you can see the list of devices attached to the Azure Percept carrier board:

When I connect the Raspberry Pi Pico running CircuitPython with the data serial port enabled, I see two "ttyACMx" devices: the first is the REPL console, the second is the data serial port.
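On the carrier board side, the program talking to the Pico has to open the second of those devices. Here is a minimal sketch of how a host program could pick the two ports, assuming (as observed above) that the lower-numbered ttyACM device is the REPL console and that no other ttyACM devices are attached. The function name is hypothetical, and this is not code from the article's repository.

```python
import re


def pick_circuitpython_ports(devices):
    """Split CircuitPython's two CDC ports into (repl_port, data_port).

    Assumes the lower-numbered ttyACM device is the REPL console and the next
    one is the data port. Enumeration order is not guaranteed, so a udev rule
    or a lookup by USB interface number is more robust in production.
    """
    acm = sorted(
        (d for d in devices if re.search(r"ttyACM\d+$", d)),
        key=lambda d: int(re.search(r"(\d+)$", d).group(1)),  # numeric, not lexical
    )
    if len(acm) < 2:
        raise RuntimeError("expected two ttyACM devices, found %d" % len(acm))
    return acm[0], acm[1]
```

On the device itself this would typically be called as `pick_circuitpython_ports(glob.glob("/dev/ttyACM*"))`; the numeric sort keeps `ttyACM10` after `ttyACM2`.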

To enable the data serial port, I use the usb_cdc library (usb_cdc – USB CDC Serial streams — Adafruit CircuitPython 7.3.0-beta.1 documentation) and simply put the code that enables it in "boot.py".
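A minimal boot.py doing this, based on the usb_cdc documentation rather than copied from the author's repository, could look like the following. It only runs on a CircuitPython board, and because USB is configured at boot, the change takes effect after a hard reset.

```python
# boot.py: runs once at power-up, before the USB devices are set up.
import usb_cdc

# Keep the REPL console and add a second, data-only serial port.
usb_cdc.enable(console=True, data=True)
```

In the main program, the extra port is then available as `usb_cdc.data` (it is `None` when the data port is not enabled).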

A small side note: CircuitPython exposes the device as an additional drive on your system, so you can simply copy the Python code you want to run onto it. MicroPython, on the other hand, relies only on the REPL interface to upload code, which is a bit more challenging because you need specialized tooling to get the code onto the Raspberry Pi Pico.

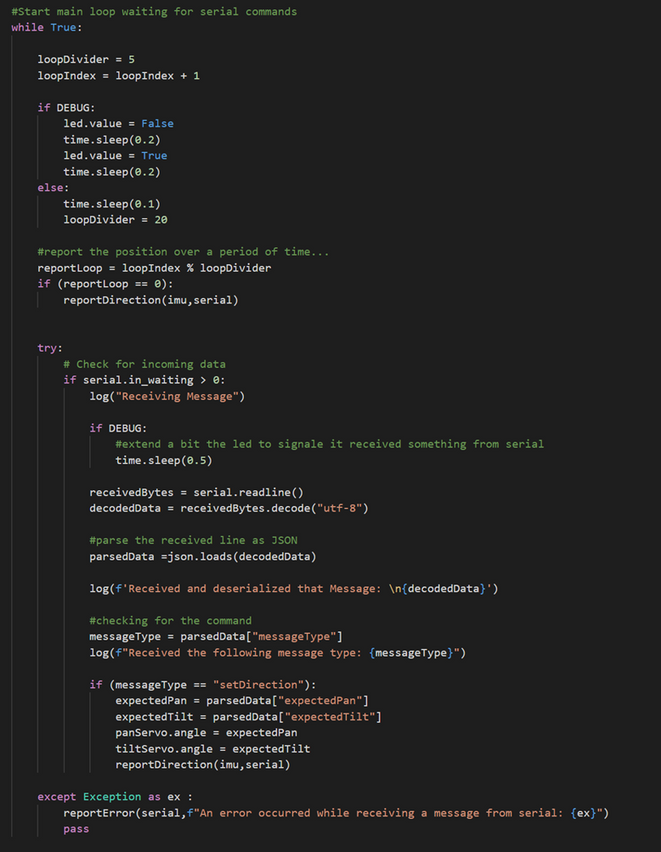

The rest of the Python code is quite easy to read.

First, I import quite a few libraries, including the ones that interface with the servo driver and the IMU.

Then I decided to exchange JSON messages over the serial port.

After initializing the servo motor and IMU libraries, I start an infinite loop in which I read the serial port, listening for incoming commands from the carrier board, and periodically report the current IMU readings.
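The loop itself isn't reproduced in this text, so here is one way it could be structured, under stated assumptions: the port is any serial-like object with `in_waiting`, `readline()` and `write()` (on the Pico, `usb_cdc.data`), the servo and IMU access are passed in as hypothetical callbacks, and commands are assumed to be single-line JSON objects such as `{"pan": 90, "tilt": 45}`. The `max_iterations` parameter exists only so the sketch can be exercised off-device.

```python
import json
import time


def run_loop(port, set_pan_tilt, read_imu, report_every_s=1.0,
             clock=time.monotonic, max_iterations=None):
    """Poll the data port for commands and report IMU readings periodically.

    set_pan_tilt(pan, tilt): hypothetical callback; None means "leave that axis".
    read_imu(): hypothetical callback returning a JSON-serializable dict.
    max_iterations=None loops forever, as on the device.
    """
    last_report = clock()
    iteration = 0
    while max_iterations is None or iteration < max_iterations:
        iteration += 1
        # 1. If bytes are waiting, read a line and try to parse it as a command.
        if port.in_waiting:
            line = port.readline()
            try:
                command = json.loads(line.decode("utf-8"))
            except ValueError:  # bad UTF-8 or bad JSON: skip it, don't crash
                command = None
            if isinstance(command, dict) and ("pan" in command or "tilt" in command):
                set_pan_tilt(command.get("pan"), command.get("tilt"))
        # 2. Every report_every_s seconds, send the current IMU reading.
        now = clock()
        if now - last_report >= report_every_s:
            port.write((json.dumps(read_imu()) + "\n").encode("utf-8"))
            last_report = now
```

Keeping the loop non-blocking (only reading when `in_waiting` is non-zero) lets command handling and IMU reporting share a single thread, which suits a microcontroller.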

As mentioned, I decided to use JSON messages over the serial port, so I created a couple of helper functions for that.
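Because a serial read can split one message across several reads or deliver several messages at once, helpers like these usually need some framing. The sketch below assumes newline-delimited JSON (one object per line). The names `encode_message` and `LineDecoder` are hypothetical and do not correspond to the functions in the author's repository.

```python
import json


def encode_message(message):
    """Serialize a dict as one line of JSON (newline-delimited framing)."""
    return (json.dumps(message) + "\n").encode("utf-8")


class LineDecoder:
    """Accumulate raw serial bytes and return the complete JSON messages.

    Bytes are buffered until a newline arrives; malformed lines are skipped.
    """

    def __init__(self):
        self._buffer = b""

    def feed(self, data):
        self._buffer += data
        messages = []
        while b"\n" in self._buffer:
            line, self._buffer = self._buffer.split(b"\n", 1)
            line = line.strip()
            if not line:
                continue
            try:
                messages.append(json.loads(line.decode("utf-8")))
            except ValueError:
                pass  # drop a garbled line instead of stopping the loop
        return messages
```

A partial message simply waits in the buffer: feeding `b'{"pan": 1'` returns nothing, and a later `b'0}\n'` completes it as `{"pan": 10}`.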

That's all the code on the Raspberry Pi Pico: fewer than 150 lines of custom code to exchange JSON messages with the Azure Percept carrier board, control the servos, and read the IMU values.

Here is the code running, as seen when connecting to the data serial port: the IMU readings are being reported.

The IoT Edge control modules

On the IoT Edge runtime side (remember, Azure Percept runs the IoT Edge runtime on a Mariner Linux OS), the component that sends and receives the JSON messages over the serial line to and from the Raspberry Pi Pico is the "panTiltController" module.

Its main responsibilities are to use the ModuleClient to subscribe to desired property updates (so the Eye module's orientation can be controlled from the cloud) and to local input messages from edgeHub (so a local control loop can be established; we'll see later how and why), and, of course, to manage the serial communication with the Raspberry Pi Pico, serializing and deserializing the JSON messages.

This is an example of the Module Twin for the panTiltController module.

The reported properties show the current pan & tilt orientation, as well as the current accelerometer and compass values read from the IMU sensor.
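As an illustration of that structure, here is a hypothetical helper that assembles a reported-properties patch from the servo positions and the IMU readings. The property names are assumptions for the sketch; the actual twin in the repository may use different ones.

```python
def build_reported_patch(pan_deg, tilt_deg, accel, mag):
    """Build a reported-properties patch for the panTiltController twin.

    Property names are hypothetical; accel and mag are (x, y, z) tuples.
    """
    return {
        "pan": pan_deg,
        "tilt": tilt_deg,
        "imu": {
            "accelerometer": dict(zip("xyz", accel)),
            "magnetometer": dict(zip("xyz", mag)),
        },
    }
```

With the Python azure-iot-device SDK, such a dict could be passed to `IoTHubModuleClient.patch_twin_reported_properties()`; in the .NET SDK the equivalent is `ModuleClient.UpdateReportedPropertiesAsync()`, which takes a `TwinCollection`.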

These readings are useful for determining the module's absolute orientation, because the servo positioning is quite coarse.

When I update the desired properties, the module immediately sends a command to the Raspberry Pi Pico to move the servos to the position received from the cloud.
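A minimal sketch of that translation step, assuming the desired properties are named `pan` and `tilt` (hypothetical names) and that the Pico accepts newline-terminated JSON commands. Desired-property update patches contain only the changed properties plus metadata such as `$version`, so an axis missing from the patch is simply left out of the command.

```python
import json


def desired_patch_to_command(patch, pan_limits=(0.0, 180.0), tilt_limits=(0.0, 180.0)):
    """Translate a desired-properties patch into one JSON command line.

    Returns None if the patch contains no pan/tilt change.
    """
    command = {}
    for key, (low, high) in (("pan", pan_limits), ("tilt", tilt_limits)):
        if key in patch:
            # Clamp on the edge side too; the MCU should still enforce its own limits.
            command[key] = max(low, min(high, float(patch[key])))
    if not command:
        return None
    return (json.dumps(command) + "\n").encode("utf-8")
```

The returned bytes are what the module would write to the data serial port; a patch that only bumps `$version` produces no command at all.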

Object Tracking

As the final step, I trained a Custom Vision model to detect simple "plastic cookies" and deployed it to my Percept using Azure Percept Studio.

I then developed another custom IoT Edge module to receive the Percept inference results (that is, the positions of the detected objects).

The beauty of IoT Edge is that its data plane lets you route messages between modules, and this is exactly what I did!

Using edgeHub routing, I take the output of the Azure Eye module and route it into my new "percept2panTilt" module.
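Routes are declared in the `$edgeHub` section of the IoT Edge deployment manifest (`properties.desired.routes`). The sketch below builds that section as a Python dict and prints it as JSON. It assumes the eye module is named `azureeyemodule`, as in the stock Percept deployment (check your own manifest), and the route, input and output names are hypothetical. The second route carries the movement commands from percept2panTilt back to panTiltController.

```python
import json

# Route and input/output names are hypothetical; module names follow the article,
# and "azureeyemodule" is assumed to be the eye module's name in the deployment.
routes = {
    "EyeToTracker": (
        "FROM /messages/modules/azureeyemodule/outputs/* "
        'INTO BrokeredEndpoint("/modules/percept2panTilt/inputs/inference")'
    ),
    "TrackerToController": (
        "FROM /messages/modules/percept2panTilt/outputs/panTiltCommand "
        'INTO BrokeredEndpoint("/modules/panTiltController/inputs/command")'
    ),
}

# This object goes under $edgeHub -> properties.desired in the manifest.
print(json.dumps({"routes": routes}, indent=2))
```

Routing the eye module's output locally means the tracking loop keeps working without a round trip to the cloud.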

The module receives and parses the AI module's output, calculates the barycenter of the detected object, and determines whether the Percept Eye must be moved to center the object!
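Here is a minimal sketch of that decision step. It assumes the inference message looks like `{"NEURAL_NETWORK": [{"bbox": [x1, y1, x2, y2], "label": ..., "confidence": ...}]}` with coordinates normalized to 0..1, which is how the Percept eye module's detections are commonly formatted, but treat the shape as an assumption and check it against your own module output. It uses a simple proportional step with a dead band rather than whatever control law the author implemented; the function name, gain and thresholds are hypothetical.

```python
def tracking_step(inference, target_label, deadband=0.1, gain_deg=30.0,
                  min_confidence=0.5):
    """Return (pan_delta, tilt_delta) in degrees to re-center the best detection,
    or None if nothing relevant was detected or it is already centered.
    """
    detections = [
        d for d in inference.get("NEURAL_NETWORK") or []
        if d.get("label") == target_label
        and float(d.get("confidence", 0)) >= min_confidence
    ]
    if not detections:
        return None
    best = max(detections, key=lambda d: float(d["confidence"]))
    x1, y1, x2, y2 = best["bbox"]
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2   # barycenter of the bounding box
    err_x, err_y = cx - 0.5, cy - 0.5       # offset from the image center
    # Ignore small offsets so the servos don't jitter around the center.
    pan = 0.0 if abs(err_x) < deadband else err_x * gain_deg
    # Image y grows downward; the signs depend on how the servos are mounted.
    tilt = 0.0 if abs(err_y) < deadband else -err_y * gain_deg
    if pan == 0.0 and tilt == 0.0:
        return None
    return pan, tilt
```

The deltas would be added to the current pan and tilt angles, and the new target published on the module's output. With the Python azure-iot-device SDK that is `IoTHubModuleClient.send_message_to_output()`; the .NET `ModuleClient` equivalent is `SendEventAsync(outputName, message)`.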

And how does it command the servos to move? It simply publishes a new message on its output, which is then routed to the panTiltController module described earlier!

The overall effect is that when you hold the trained object in your hands, the pan & tilt mechanism moves the Percept Eye to keep the object centered!

I'll also publish a quick video of the final result! In the meantime, you can download all the software from the GitHub repo and try it yourself!

algorni/AzurePerceptDKPanAndTilt: A simple way to Pan & Tilt Azure PerceptDK remotely (github.com)

Security Aspect

At the beginning of the article, I mentioned the security implications of having a dedicated external MCU control physical entities in the real world.

This project is just a sample showing how to control something directly from the cloud or through an automated local control loop; very little can go wrong, and no one's safety is at risk.

In a real-world scenario, you might want to control dangerous equipment remotely; in that case, having an intelligent gateway directly control a critical component could be problematic in some situations. When an intermediate component, such as a dedicated MCU, controls something that interacts with the physical world, you can build extra SAFETY FEATURES into it.

Those features should not be overridable by a remote controller or by the local control loop running on the intelligent edge. Embedding that extra safety layer in the external component takes extra effort, but it can be considered another step toward a "defense in depth" strategy.
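As a hedged illustration of what such non-overridable features could look like in the Pico firmware, here is a sketch with three common ones: hard angle limits, a per-command slew limit, and a watchdog that parks the servos when the host goes silent. None of this is in the article's repository. The class name and all limit values are hypothetical, and the key design point is that the limits live in the MCU code and nothing in the serial protocol can change them.

```python
import time


class SafetyGuard:
    """MCU-side limits that no remote or edge command can override."""

    PAN_LIMITS = (20.0, 160.0)    # hypothetical mechanical end stops, degrees
    TILT_LIMITS = (45.0, 135.0)
    MAX_STEP_DEG = 10.0           # largest move allowed per command
    HOST_TIMEOUT_S = 5.0          # park if no command arrives for this long
    SAFE_POSITION = (90.0, 90.0)

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._last_command = clock()
        self.position = self.SAFE_POSITION

    @staticmethod
    def _limit(value, current, limits, max_step):
        low, high = limits
        value = max(low, min(high, float(value)))                      # hard range
        return max(current - max_step, min(current + max_step, value))  # slew limit

    def apply(self, pan, tilt):
        """Return the position actually driven for a requested (pan, tilt)."""
        self._last_command = self._clock()
        cur_pan, cur_tilt = self.position
        self.position = (
            self._limit(pan, cur_pan, self.PAN_LIMITS, self.MAX_STEP_DEG),
            self._limit(tilt, cur_tilt, self.TILT_LIMITS, self.MAX_STEP_DEG),
        )
        return self.position

    def check_watchdog(self):
        """Call every loop iteration; parks the servos if the host went quiet.

        This sketch jumps straight to the safe position; real hardware might
        move there gradually.
        """
        if self._clock() - self._last_command > self.HOST_TIMEOUT_S:
            self.position = self.SAFE_POSITION
        return self.position
```

For example, starting from 90°, a request for a 170° pan is first clamped to the 160° end stop and then limited to a 10° step, so the servo only moves to 100°.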