This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Introduction

This technical article provides example for how to perform parallel data copy from Db2 to Azure SQL Database by generating ADF Copy activities dynamically. Solution provided in this article is performing data copy from Db2 installed on Windows (or Linux/Mainframe) to Azure SQL Database using Azure Data Factory Db2 Connector. Please note this concept applies to data copy from any RDBMS source to any RDBMS target supported by ADF.

Below diagram describes high level architecture of data copy from Db2 hosted in Azure Windows VM to Aure SQL Database using ADF.

High level architecture to Copy Data from Db2 to Azure SQL DB by generating multiple Copy activities dynamically

Components present in above diagram:

- Db2 database installed on Windows Server VM in Azure.

- ADF Self Hosted Integration Runtime (SHIR) installed on VM which has connectivity to Db2. If Db2 database can be accessed from Azure; Azure Auto Resolve Integration Runtime can also be used which will provide dynamic scaling for large data copy without any manual configuration / installation.

- Azure Data Factory is a cloud-based data integration service that allows you to create data-driven workflows in the cloud for orchestrating and automating data movement and data transformation. ADF Pipeline can be triggered based on external event or scheduled on definite frequency.

- ADF ForEach Activity enables performs parallel execution for activities inside it. Maximum activities that can run in parallel is 50 currently. Default limit is 20.

- ADF copy activity will have Db2 connector as source to get data from Db2 LUW and Azure SQL DB as sink to write data received.

- Azure SQL DB will store data copied from Db2 LUW.

Pre-requisite:

1. Linked service to Source Db2 database can be created by following steps mentioned at doc.

2. Linked service to Target Azure SQL database can be created by following steps mentioned at doc.

3. Source Db2 Test Table

Table Name: SANDIPK."Employee" definition

|

CREATE TABLE "SANDIPK"."Employee" ( "EmployeeId" INTEGER NOT NULL , "LastName" VARCHAR(20) NOT NULL , "FirstName" VARCHAR(20) NOT NULL , "Title" VARCHAR(30) , "ReportsTo" INTEGER , "BirthDate" DATE , "HireDate" DATE , "Address" VARCHAR(70) , "City" VARCHAR(40) , "State" VARCHAR(40) , "Country" VARCHAR(40) , "PostalCode" VARCHAR(10) , "Phone" VARCHAR(24) , "Fax" VARCHAR(24) , "Email" VARCHAR(60), "ID" BIGINT NOT NULL DEFAULT 0 ) ORGANIZE BY ROW; CREATE INDEX "SANDIPK"."ID_INDEX" ON "SANDIPK"."Employee" ("ID" ASC) COLLECT DETAILED STATISTICS; |

Test Environment Configuration:

|

Configuration Item |

Value |

|

Schema Name |

SANDIPK |

|

Db2 Table Name |

Employee |

|

Number of rows |

29,360128 (29.36 million) |

|

Size of Employee Table |

5.6 GB |

|

ADF Integration Run time |

Azure Auto Resolve Integration Runtime for Dynamic scaling |

|

Target Azure SQL DB |

Business Critical / Storage: 100 GB / cores: 8 vCores |

|

Driver used to connect to Db2 LUW database |

Microsoft OLEDB Provider for Db2 |

ADF Pipeline to Copy data from Db2 to Azure SQL DB

Below screenshot shows how ADF Pipeline should be setup to perform dynamic data copy activities generation.

Every activity and corresponding setup will be explained in sections below:

1. Define Parameters for the pipeline

These parameters are defined in a such a way that it allows dynamic generation of ADF Copy activities. Even this pipeline can be called from master pipeline or external methods (.NET SDK / Azure PowerShell / REST API / Python SDK) etc. which will enable to run multiple pipelines concurrently. Every parameter is explained below:

| Sr.No. | Parameter Name | Description |

| a) | lowerBound | Minimum value of the partitionColumn |

| b) | upperBound | Maximum value of the partitionColumn |

| c) | numPartitions | Number of partitions in which input lowerBound to upperBound data should be divided. Note this is not number of parallel executions. Number of parallel executions will be less than or equal to number of Partitions. In ADF there is max cap limit of 50 parallel executions at a time where numPartitions can be any integer value. |

| d) | schemaName | Source Db2 Schema Name |

| e) | tableName | Source Db2 Table Name |

| f) | partitionColumn | Source Db2 Table Column based on which logical partitions needs to be created |

| g) | copyInRangeOnly |

'Y' – Copy data only in lowerBound and upperBound 'N' – Copy all data from source to target |

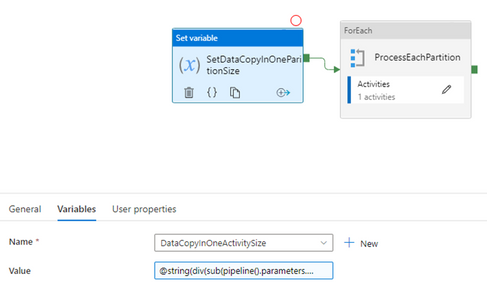

2. Set Size of One Partition

Based on provided parameters above we need to drive size of one partition as below:

upperBound - lowerBound

DataCopyInOneActivitySize = -------------------------------

numPartitions

Corresponding Dynamic content for the SetDataCopyInOneParitionSize activity variable

DataCopyInOneActivitySize value is shown below:

@string(div(sub(pipeline().parameters.upperBound,pipeline().parameters.lowerBound),pipeline().parameters.numPartitions))

Screenshot for Set Variable:

Dynamic content for the ForEach activity variable DataCopyInOneActivitySize Items is shown below:

@range(0,pipeline().parameters.numPartitions)

Screenshot for Set Variable:

3. Generate ADF Copy activities in parallel After clicking on ForEach Configuration option; we will be able to see most important activity of the pipeline which is Copy data activity as shown below:

A) Source tab

i) Source dataset: It points to source Db2 table. Link Service is created using step mentioned

in Create linked service to Db2 on Windows Server.

ii) Dynamic Query for generating Copy Activity 'SELECT' Query during Runtime:

For each execution of ForEach Activity dynamic query will generate SQL Query for every logical partition.

Example: If parameter number of logical partition (numPartitions) is provided as 150; 150 SELECT queries will be generated; Out of 150 max number of queries that will run in parallel will be defined by Batch Count parameter of ForEach Activity.

This dynamic generation of SELECT query where clause is based on provided pipeline parameter. Source Db2 database table may or may not have physical partitions. Though having physical partition on Source Db2 table will definitely lead to improvement in copy performance but this is not mandatory requirement.

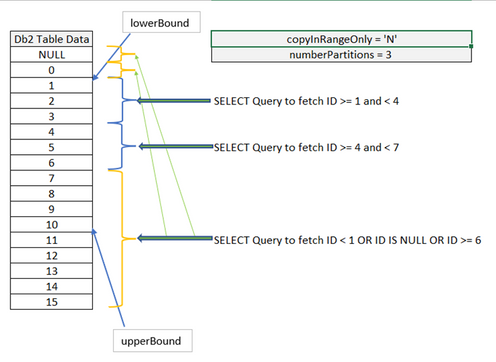

Dynamic query generation for limited number of rows (only 17) is explained below to explain the concept. In actual execution there might be millions of rows to be migrated from Db2 to sink Azure SQL DB.

Case 1 : copyInRangeOnly = 'Y'

When option copyInRangeOnly is set to ‘Y’ dynamic SQL SELECT queries will only be generated for the data in the mentioned lowerBound and upperBound as shown in diagram below. Data outside lowerBound and upperBound range will NOT be copied from Source Db2 to sink SQL DB.

As shown in screenshot above 3 queries (equal to numPartitions) will be generated dynamically to copy data only in range lowerBound (1) and upperBound (10).

Case 2 : copyInRangeOnly = 'N'

When option copyInRangeOnly is set to ‘N’ dynamic SQL SELECT queries will be generated for the data in the mentioned lowerBound and upperBound. Also last query will also copy all data outside the range lowerBound and upperBound which means entire data from source will be copied to sink.

As shown in screenshot above 3 queries (equal to numPartitions) will be generated dynamically to copy entire data from the source table to sink.

B) Sink tab

i) Sink dataset: It points to sink Azure SQL DB table. Link Service is created using step mentioned in Create linked service to Azure SQL DB.

ii) Bulk insert table lock: Use this option to improve copy performance during bulk insert operation on table with no index.

Pipeline Execution:

Let’s see everything in action. We will run pipeline to copy 29,360128 (29.38 million) records of size 5.6 GB from Db2 on Windows to Azure SQL DB Business Critical 8 vCores.

Set pipeline parameters as shown in below screenshot and run pipeline.

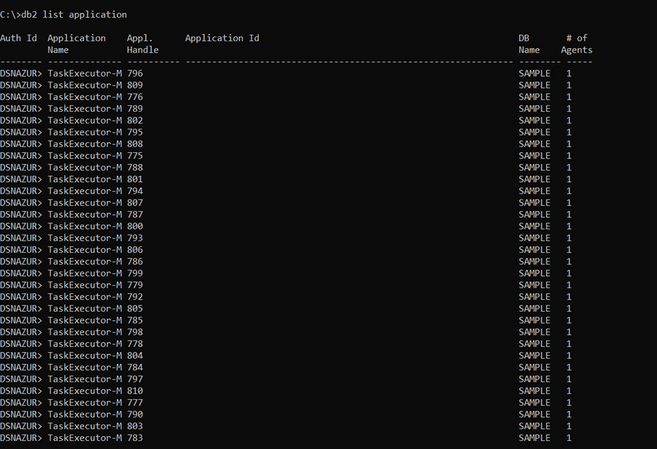

Db2 DBMS Windows VM Statistics:

Below screenshot shows 32 threads were running in time in parallel to get data from Db2 on Windows virtual machine. In absence of dynamic SELECT query generation based on partitioning we will be able to see only 1 thread. Please note our pipeline execution BatchCount for ForEach activity was kept 50 which is maximum number of ADF activities that can run in parallel. In this case at the time when screenshot was taken 32 threads were running in parallel.

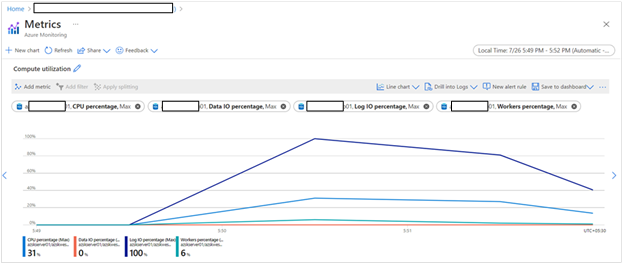

Sink Azure SQL DB Statistics:

As shown below Azure SQL DB Log I/O percentage is hitting 100 %. Maximum transaction log throughput rate for the configuration that we have used i.e. Business 8 vCores is 96 MB/s. Azure SQL DB Log I/O limits are explained at this Microsoft documentation page: link. This mean that parallel execution of SQL Queries is making sure that we are utilizing all the available capacity viz. getting data from source / transferring data on the network / writing data to sink.

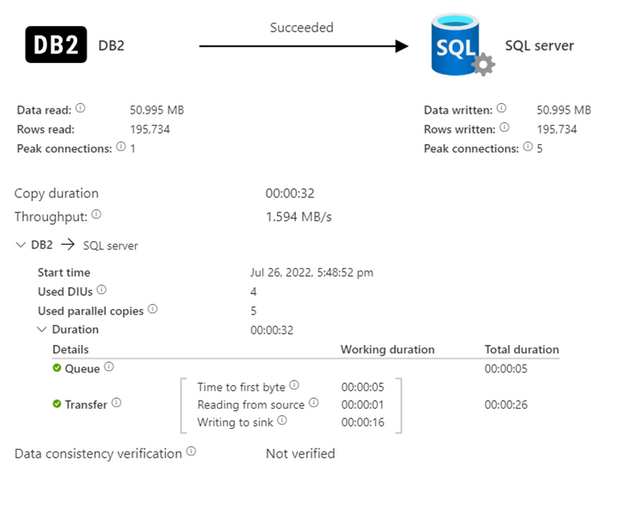

Single Copy Activity statistics:

Single copy activity statistics are shown below. As it can be seen from the screenshot since we have divided input data copy in multiple logical partition Time to get first byte from source is less (5 seconds). Overall time to get 195,734 records from Db2 and write to Azure SQL DB is 32 seconds.

Now comes the most interesting statistics. How much time it took to copy 5.6 GB data with around 29 million records? Any guess?

As shown in below screenshot it took just 1 minute and 47 seconds to perform complete data copy of size 5.6 GB with around 29 million records from Db2 on Windows to Azure SQL DB.

If the same copy activity was run with single copy activity the amount of time it takes is around 24:30 minutes.

So, with this method of dynamic generation of SQL Queries and Parallel execution of the same to extract data from Source Db2 and writing to sink Azure SQL Database we were able to reduce time to copy from 24:30 minutes to 01:47 minutes; which is around 93 % reduction in Data Copy time.

Conclusion

As evident from above test data copy performance can be drastically improved by Dynamic generation of SQL Queries and parallel execution of them to get data from Source Db2 and writing to sink Azure SQL DB.

Below chart summarizes result of test execution with using dynamic copy activity generation approach and usual single copy activity approach:

Important points for Consideration

- It is very important to identify correct number of activities to be run in parallel based on available SHIR cores / Source Db2 database configuration / Sink database number of cores / Network bandwidth etc. Putting higher load on infrastructure might lead to Db2 database not responding / SHIR machine throwing out of memory exception etc.

- Above test results are for representation purpose only. You may or may not get similar results in your environment due to different test environment configuration.

- Above technical blog provides high-level guidance with no warranties with respect to the information contained herein.

Feedback and suggestions

If you have feedback or suggestions for improving this data migration asset, please send an email to Azure Databases SQL Customer Success Engineering. Thanks for your support!