This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Azure Synapse Analytics August Update 2022

Welcome to the August 2022 update for Azure Synapse Analytics! This month, you will find information about Distribution Advisor for dedicated SQL pools, Spark Delta Lake tables in serverless SQL and the new Cast transformation that was added to mapping data flows. Additional general updates and new features in Dedicated and Serverless SQL, Spark, Synapse Data Explorer, Data Integration, Machine Learning and Security are also mentioned in this edition.

Don’t forget to check out our companion video on the Azure Synapse Analytics YouTube channel!

Note: we stopped the companion video auto-play for this month's blog, comment below if this is a monthly feature you want us to continue as part of the blog.

Table of contents

- SQL

- Add SQL objects and users in Lake databases

- Spark Delta Lake tables are available in serverless SQL pool for public preview

- Multi-column Distribution is available for public preview in dedicated SQL pools

- Distribution Advisor is available for public preview in dedicated SQL pools

- Accelerate your Oracle data warehouse migration to dedicated SQL pools in Azure Synapse Analytics

- Apache Spark for Synapse

- Synapse Data Explorer

- Data Integration

- Machine Learning

- Other updates

- Security

SQL

Add SQL objects and users in Lake databases

Lake databases allow you to use SQL to do analytics on top of data in the data link. We're always trying to make lake databases better and bring them closer to a traditional SQL database.

Starting this month, you can perform these operations in the Lake database:

- Create schemas where you can place SQL views, procedures, and inline table value functions (iTVF)

- Create views, procedures, and inline table-valued functions

- Note that the dbo schema is still reserved for tables defined using Apache Spark pools, Synapse Database Designer, and Dataverse

- Create database users from your Azure Active Directory domain and assign them to the db_datareader role. This way you can create database-scoped users who can read data only within your Lake database.

With these improvements, the T-SQL functionalities in Lake databases are closer than ever to the SQL database experience you are familiar with.

To learn more, read Access lake databases using serverless SQL pool in Azure Synapse Analytics and Create and use native external tables using SQL pools in Azure Synapse Analytics.

Spark Delta Lake tables are available in serverless SQL pool for public preview

Serverless SQL pools enable you to query Parquet and CSV tables that are created using Spark notebooks.

With the latest version of Synapse, serverless SQL pools now allows you to access Delta Lake tables created in Spark databases. When you create a table using the Delta Lake format in Apache Spark pools in Synapse workspace, the serverless SQL pool will create a matching table and enable you to access your Delta Lake files using the T-SQL language. This feature is currently in public preview.

To learn more, read Azure Synapse Analytics shared metadata tables.

Multi-column Distribution is available for public preview in dedicated SQL pools

You can now Hash Distribute tables on multiple columns for a more even distribution of the base table, reducing data skew over time and improving query performance. Multi-column Distribution (MCD) in public preview will allow you to choose up to 8 columns for distribution.

To participate in the preview, you can opt into the new database compatibility level 9000. For example:

ALTER DATABASE SCOPED CONFIGURATION SET

At any given time, you can run this command to opt-out of the public preview. Please note that this will disable the MCD feature and access to existing MCD tables. To re-gain preview access, you can opt into compatibility level 9000 again.

ALTER DATABASE SCOPED CONFIGURATION SET DW_COMPATIBILITY_LEVEL = AUTO;

To learn more, read the following Microsoft docs:

- ALTER DATABASE SCOPED CONFIGURATION - SQL Server (Transact-SQL)CREATE TABLE AS SELECT (Azure Synapse Analytics) - SQL Server

- CREATE TABLE (Azure Synapse Analytics) - SQL Server

- CREATE MATERIALIZED VIEW AS SELECT (Transact-SQL) - SQL Server

- sys.pdw_column_distribution_properties (Transact-SQL) - SQL Server

Distribution Advisor is available for public preview in dedicated SQL pools

In Azure Synapse SQL, you can customize the way each table is distributed. Table distribution strategy affects query performance substantially.

Distribution Advisor (DA) is a new feature in Synapse Dedicated SQL Gen2 that analyzes queries and recommends the best distribution strategies for tables to improve query performance. Queries to be considered by the advisor can be provided by you or pulled from your historic queries available in DMV (Dynamic Management Views).

To learn more, read Distribution Advisor in Azure Synapse SQL.

Accelerate your Oracle data warehouse migration to dedicated SQL pools in Azure Synapse Analytics

Modernizing your on-premise data warehouse by migrating to Azure Synapse reduces maintenance costs, greatly improves performance, and provides high availability. However, migrating from on-premise to a Cloud data warehouse can be complex and time consuming. Extract, transform and load (ETL) processes, large amounts of data and reports built over the years need to be migrated while navigating differences in architecture and design, database objects and data types, performance tuning, ETL and SQL.

We are happy to introduce the new Oracle migration guide to help you resolve common issues and accelerate your migration of Oracle data warehouses to dedicated SQL pools in Azure Synapse Analytics. This guide provides migration steps, process and guidelines, and insights on the capabilities of SQL Server Migration Assistant (SSMA) for Oracle to automate migration tasks.

Take advantage of our migration guides to modernize your data warehouse and accelerate your business by integrating with Azure Synapse.

To learn more about Oracle, Teradata and IBM Netezza migrations, read Azure Synapse Analytics migration guides

Apache Spark for Synapse

Azure Synapse Analytics Spark elastic pool storage is available for public preview

Azure Synapse Analytics Spark pools now support elastic pool storage. Apache Spark in Azure Synapse Analytics utilizes temporary VM disk storage while the Spark pool is instantiated. Spark jobs write shuffle map outputs, shuffle data and spilled data to local VM disks. Examples of operations that may utilize local disk are sort, cache, and persist.

In the past, when temporary VM disk space ran out, Spark jobs failed due to “Out of Disk Space” error (java.io.IOException: No space left on device). In the case of “Out of Disk Space” errors, much of the burden to prevent jobs from failing shifts to you to reconfigure the Spark jobs (e.g., tweak the number of partitions) or clusters (e.g., add more nodes to the cluster). These errors might not be consistent, and you may end up experimenting heavily by running production jobs. This process could be expensive in multiple dimensions:

- Wasted time – Customers are required to experiment heavily with job configurations via trial and error and are expected to understand Spark’s internal metrics to make the correct decision.

- Wasted resources – Production jobs can process varying amounts of data, Spark jobs can fail non-deterministically if resources are not over-provisioned. For instance, consider the problem of data skew, which may result in a few nodes requiring more disk space than others. Currently in Synapse, each node in a cluster gets the same disk space size and increasing disk space across all nodes is not an ideal solution and leads to tremendous waste.

- Slowdown in job execution – In the hypothetical scenario where we solve the problem by autoscaling nodes (assuming costs are not an issue to the customer), adding a compute node is expensive (takes a few minutes) as opposed to adding storage (takes a few seconds).

Starting this month, Elastic pool storage allows the Spark engine to monitor worker nodes temporary storage and attach additional disks if needed. No action is required, and you should see less job failures as a result.

Azure Synapse Analytics Spark Optimized Write is available for public preview

Analytical workloads on Apache Spark perform most efficiently when using standardized larger file sizes. The relation between the file size, the number of files, the number of Spark workers and its configurations play a fundamental role with performance. Ingestion workloads into data lake tables may have the inherited characteristic of constantly writing lots of small files; this scenario is commonly known as the "small file problem".

Optimize Write is a Delta Lake on Synapse feature that reduces the number of files written by Apache Spark and aims to increase individual file size of the written data. It dynamically optimizes partitions while generating files with an optimal size. This feature achieves the file size by using an extra data shuffle phase over partitions, causing an extra processing cost while writing the data. The small write penalty should be outweighed by read efficiency on the tables.

The Optimize Write feature is now in Public and readily available on Synapse runtimes for Apache Spark 3 (3.1 and 3.2).

To learn more about the usage scenarios and how to enable the feature, read The need for optimize write on Apache Spark

Synapse Data Explorer

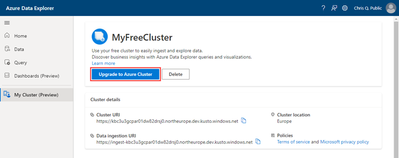

Free cluster upgrade option

You can now upgrade your Azure Data Explorer free cluster to a full cluster that removes the storage limitation allowing you more capacity to grow your data.

An Azure Data Explorer full cluster has the following additional benefits than the free cluster:

- Native to Azure

- Enterprise ready

- Unlimited storage (beyond the free 100GB)

- Unlimited scalability with auto scale feature that automatically turns on / off compute resources based on actual usage

- End-to-end solution for data ingestion, query, visualization, and management

- Integration to Azure Monitor and Insights

- Event Hub and Event Grid connectors

Upgrading is easy and it is done by associating your free cluster and its data to an Azure subscription. From the ADX Web Explorer, go to the menu item My Cluster (Preview) and select the Upgrade to Azure Cluster button, fill in some required details, and you are on your way to an upgraded cluster!

To learn more, read Upgrade a free Azure Data Explorer cluster.

Analyzing fresh ADX data from Excel pivot table

Through Power BI (PBI) datasets, you can now query Kusto data in real time in Excel without having to import any data and without any volume limitations. You can build the PBI model using DQ to Kusto, publish to the PBI service and connect Excel to the published dataset.

MDX queries generated by the Pivot code, will find their way to the Kusto backend as KQL statements that will aggregate the data as needed by the pivot and back to Excel.

To learn more, read Use fresh and unlimited volume of ADX data (Kusto) from your favorite analytic tool - Excel pivot.

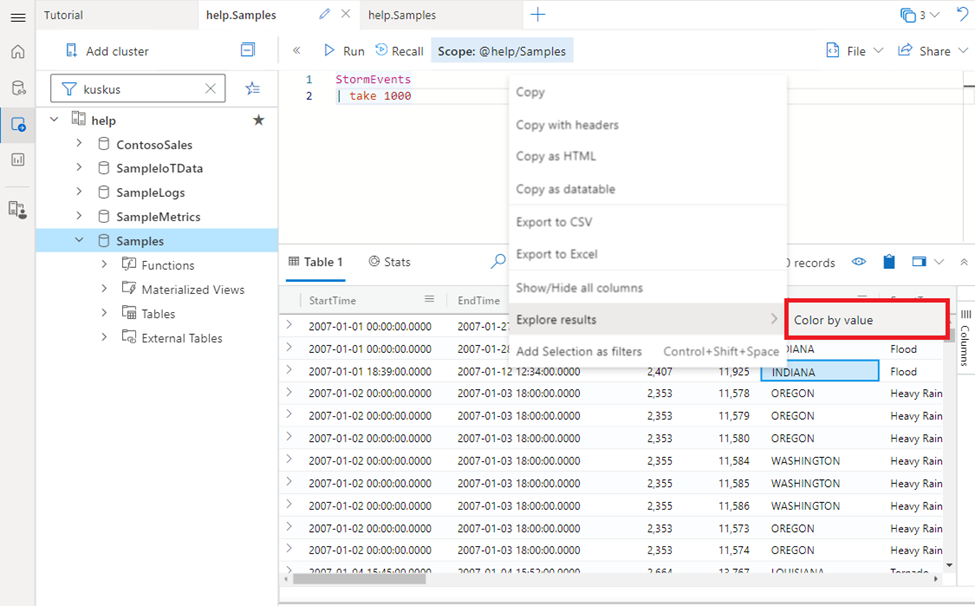

ADX Web Explorer- Query results exploration - Color by value

When working with large datasets, being able to highlight unique data at-a-glance can be valuable as you can visually group rows that share identical values for a specific column.

To perform this, right-click a value in the results grid, and choose Explore results –> Color by value and the rows will be colored based on the selected column.

Note: By using the “Color by value” again on the same column will cancel coloring, using it on a different column will re-color the rows based on a different column.

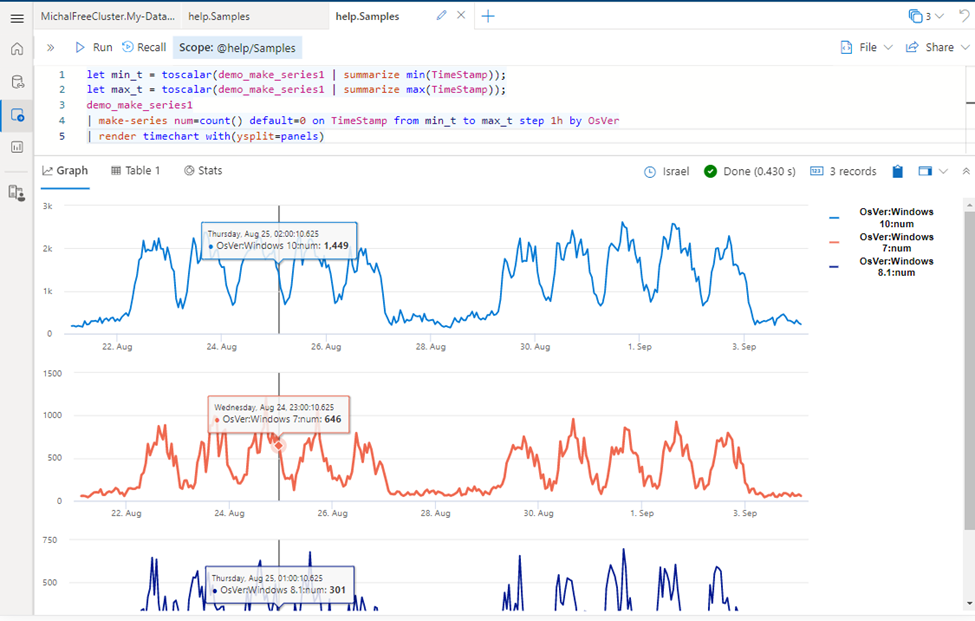

ADX Web Explorer- Crosshair support for charts (ysplit=panels)

The ysplit property now supports the crosshair visual (vertical lines that move along the mouse pointer), when using the render operator for the following charts:

- Bar

- Column

- Time

- Area

The crosshair visual provides an easier correlation of the data between multiple graphs and can be used in place of tooltips.

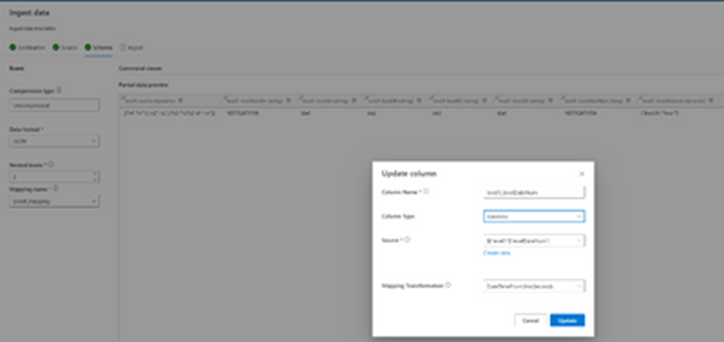

Data mapping transformations

Parquet, JSON and AVRO, are some of the data format mappings that support simple and useful ingestion-time transformations such as converting DateTime from Unix seconds, transforming JSON property bag array to a valid JSON document or adding source artifact location.

As part of the ingestion flow, you can now create mapping transformations on the table column by selecting the relevant transformation.

These transformations are super helpful for quick transformations. Where the scenario requires more complex processing at ingestion time, use Update policy, which allows defining lightweight processing using KQL expression.

To learn more, read Data mapping transformations.

Data Integration

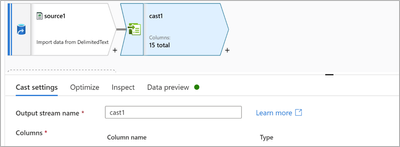

Mapping data flows now support visual Cast transformation

It is very common to transform data types into data integration workloads. An example of that is to convert strings to dates. Previously, you had to use Expression Builder and remember the correct syntax or keywords to convert data types in mapping data flows.

For example, the syntax to convert a string ‘Jul-01-2022’ to date 2022-07-01 is toDate(‘Jul-01-2022’, ‘MMM-dd-yyyy’, ‘en-US’).’

Starting this month, you can use the cast transformation to quickly transform data types.

To learn more about mapping dataflows, read Cast transformation in mapping data flow.

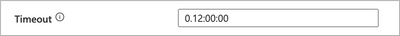

Change default activity time out from 7 days to 12 hours

We heard your feedback, and it was clear that the default pipeline activity timeout of 7 days was too long! While you can still customize the timeout, the default activity time out for Azure Data Factory and Azure Synapse Analytics is now 12 hours.

To learn more about this change, read Azure Data Factory Changing Default Pipeline Activity.

Expression builder UI update

We’ve updated our expression builder UI to make pipeline designing easier. Our pipeline expression builder used to list out all components in a single pane.

Now, to make it easier to work with different pipeline components, we’ve created new content category tabs to make it easier to find what you’re looking for.

To learn more, read Coming soon to ADF: More Pipeline Expression Builder Ease-of-Use Enhancements!

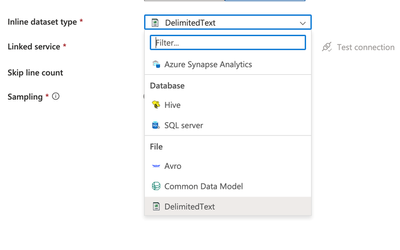

New UI for mapping dataflows inline datasets

We’ve updated our data flow source UI to make it easier to find your inline dataset type. Previously, you would have to scroll through the list or filter to find your inline dataset type. We now have categories that group your dataset types, making it easier to find what you’re looking for.

To learn more, read Mapping data flows in Azure Data Factory.

Machine Learning

A new version of the SynapseML library (previously MMLSpark) is now available on Azure Synapse. SynapseML is an open-source library that aims to simplify the creation of massively scalable machine learning pipelines. The new version, v0.10.0, includes .NET support, OpenAI language models, MLFlow integration and much more. Continue reading for details about all the highlights.

.NET, C#, and F# Support

In SynapseML v0.10, we are adding full support for .NET languages like C# and F#. This means that you can now use everything in SynapseML from any of the .NET ecosystem languages, and even load up models you built in other languages like Python, R, and Java.

Here is an example of training a LightGBM model using .NET:

To learn more, read Announcing SynapseML for .NET – Large Scale ML with a Simple API.

To learn more with a .NET example, read .NET Example with LightGBMClassifier.

OpenAI language models

SynapseML also offers a simple and scalable way to leverage Azure Cognitive Services directly from Spark. Currently, SynapseML supports over 50 Cognitive Services and we are now expanding that with the new Azure Open AI Service. This service allows users to tap into 175-Billion parameter language models (GPT-3) from OpenAI that can generate and complete text and code near human parity.

To learn more, read the SynapseML OpenAI guide.

To learn more with a simple demo, view Create Custom Search Engines from Unstructured Data with SynapseML.

Full support for MLflow

MLflow is a platform for managing the machine learning lifecycle and streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. We are very happy to announce that SynapseML models now integrates with MLflow with full support for saving, loading, deployment, and autologging!

To learn more, read MLflow in SynapseML getting started guide and SynapseML Autologging.

No cluster, no problem: Experiment in browser with Binder

We know that Spark can be intimidating for first users but fear not because with the technology Binder, you can explore and experiment with SynapseML with zero setup, install, infrastructure, or Azure account required. Simply visit the SynapseML Binder site to get started in your browser!

Other updates

In addition to the news mentioned above, there are many other great updates in this release for Responsible AI, Azure Cognitive Services, LightGBM on Spark, Wowpal Wabbit and other features. We could not capture all of it here but you can read more about this release in the detailed SynapseML v0.10.0 release notes and learn all about our rich capabilities on the SynapseML website.

Security

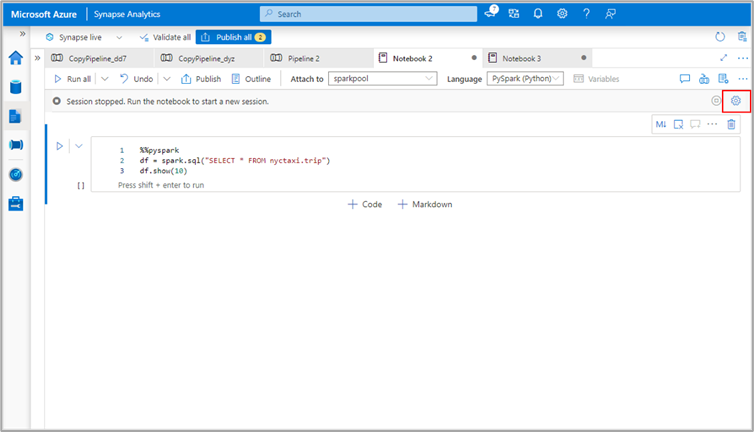

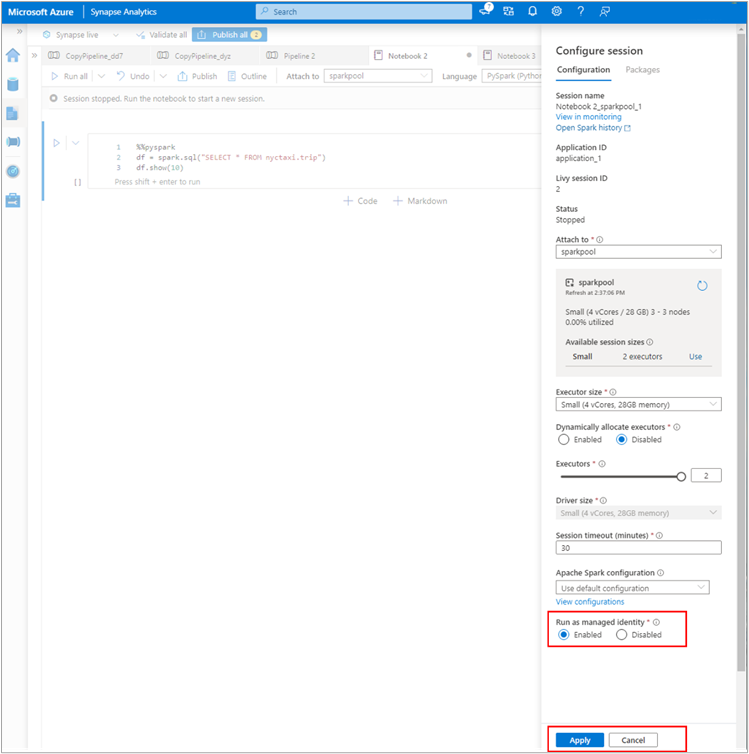

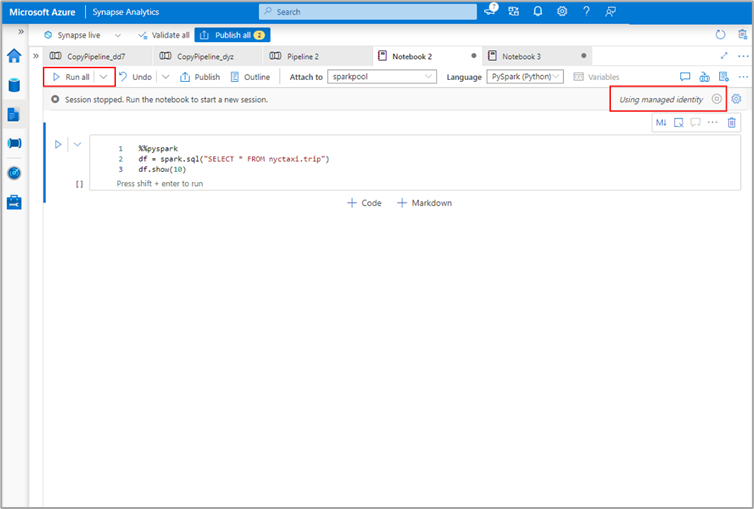

Execute Azure Synapse Spark Notebooks with system-assigned managed identity

Spark notebooks execute with the users’ Azure Active Directory identity, whereas pipelines execute with the system-assigned managed identity. In the past, if a pipeline with notebook activities failed, it used to be difficult to recreate or debug the issue. There was no easy way to execute a notebook with the system-assigned managed identity.

Starting this month, you can easily execute Spark Notebooks with the system assigned managed identity (or workspace managed identity) by enabling Run as managed identity from the Configure session menu.

With this feature, you will be able to validate that your notebook works as expected when using the system-assigned managed identity, before using the notebook in a pipeline.

To learn more, read Managed Identity for Azure Synapse.

To execute Spark Notebooks with system-assigned managed identity, you will need to have the following RBAC roles:

- Synapse Compute Operator on the workspace or selected Spark pool

- Synapse Credential User on the system-assigned managed identity