This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

With Cha Zhang, Yung-Shin Lin, Yaxiong Liu, Jiayuan Shi, and links to research papers by Qiang Huo and colleagues.

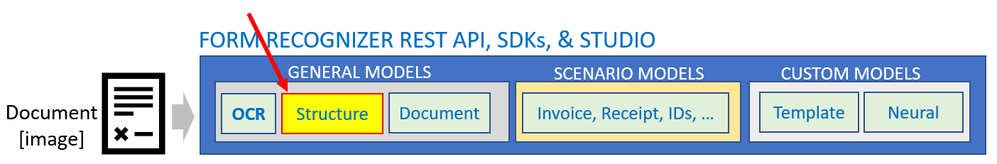

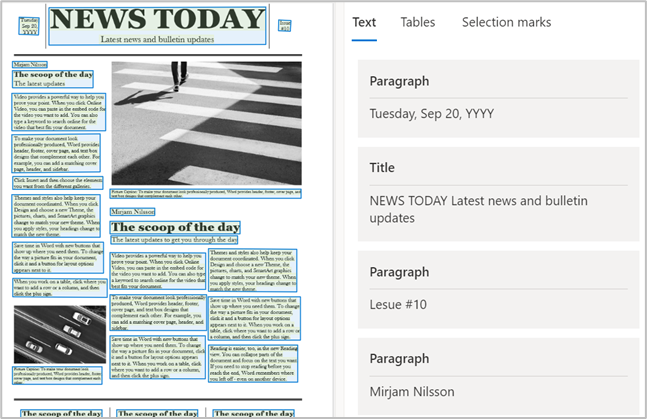

The document layout analysis model in the new Form Recognizer 3.0 extracts new structural insights such as paragraphs, titles, subheadings, footnotes, page headers, page footers, and page numbers. These features are most useful with unstructured documents, where knowing the document structure supports better semantic understanding of the content. The latest model also enhances the existing features that customers use Layout for today: extraction of text (OCR), tables, and selection marks.

What is document layout analysis

Document layout analysis is the process of analyzing a document to extract regions of interest and their inter-relationships. The goal is to extract text and structural elements from the page, typically to support better semantic understanding and more intelligent knowledge mining, process automation, and accessibility experiences. The following illustration shows the typical components in an image of a sample page.

Text, tables, and selection marks are examples of geometric roles. Titles, headings, and footers are examples of logical roles. For example, a reading system needs to distinguish text regions from non-text regions and to know their reading order.

Document layout analysis examples

Introduction

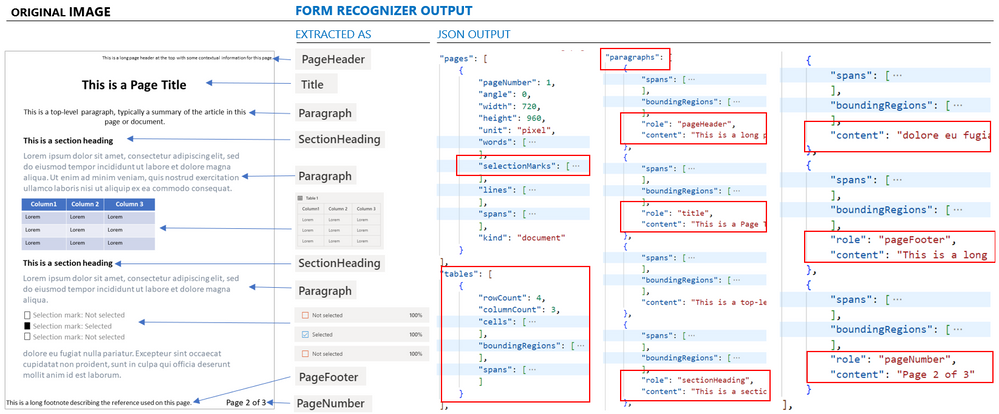

The following illustration, compiled from the Form Recognizer Studio’s Layout demo, shows the document layout analysis results for the sample image in the previous section. It shows the rendered insights alongside the corresponding JSON snippets. Try your own unstructured documents and images in the Form Recognizer Studio.

Let’s dive deeper into the new features and enhancements.

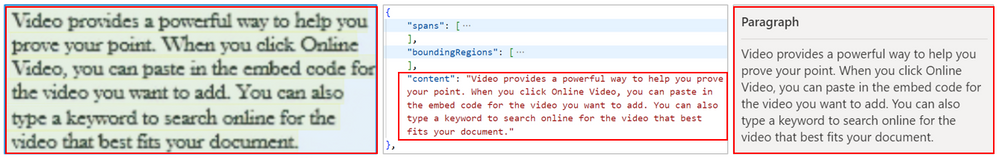

Paragraph

Paragraphs are groupings of related sentences visually separated from other groups of sentences in the document. They are composed of text lines that are in turn composed of words. In the new Form Recognizer, Read (OCR) and Layout analysis models now extract paragraphs in addition to text lines and words.
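
The word, line, and paragraph hierarchy can be navigated through character spans. The following is a minimal sketch, assuming the v3.0 JSON shape in which each word carries a `span` (`offset`, `length`) into the top-level `content` string and each paragraph carries a list of `spans`. The sample dictionary is hand-written for illustration and is not real service output; `words_in_paragraph` is a hypothetical helper name.

```python
import re

# Hand-written stand-in for a Layout analyzeResult (illustrative only, not real output).
content = "Annual Report\nRevenue grew this year."
analyze_result = {
    "content": content,
    "pages": [{
        "pageNumber": 1,
        # Each word records where it sits in `content` as an offset/length span.
        "words": [
            {"content": m.group(), "span": {"offset": m.start(), "length": len(m.group())}}
            for m in re.finditer(r"\S+", content)
        ],
    }],
    "paragraphs": [
        {"role": "title", "content": "Annual Report",
         "spans": [{"offset": 0, "length": 13}]},
        {"content": "Revenue grew this year.",
         "spans": [{"offset": 14, "length": 23}]},
    ],
}


def words_in_paragraph(result, paragraph):
    """Return the words whose span lies fully inside one of the paragraph's spans."""
    ranges = [(s["offset"], s["offset"] + s["length"]) for s in paragraph["spans"]]
    found = []
    for page in result["pages"]:
        for word in page["words"]:
            start = word["span"]["offset"]
            end = start + word["span"]["length"]
            if any(lo <= start and end <= hi for lo, hi in ranges):
                found.append(word["content"])
    return found


for paragraph in analyze_result["paragraphs"]:
    print(paragraph.get("role", "(no role)"), words_in_paragraph(analyze_result, paragraph))
```

Matching on spans rather than on text avoids ambiguity when the same word appears in several paragraphs.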

Paragraph roles

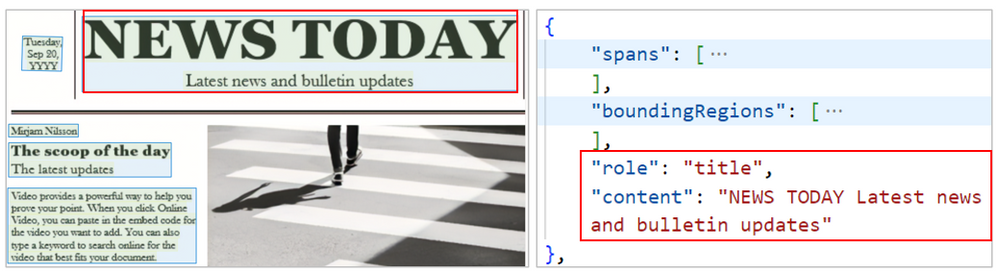

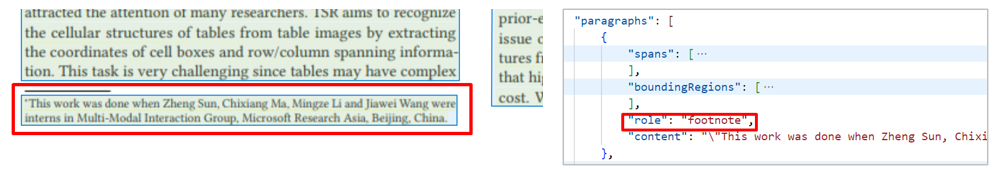

In a previous section, we mentioned titles, section headings, page headers, footers, page numbers, and footnotes as examples of logical roles found in a document.

The following table shows examples of the logical roles extracted by the Layout model in the new release.

|

Title The Title on a page is typically at the beginning of a page and describes the contents. |

Document layout analysis example - Title Document layout analysis example - Title

|

|

Section heading A section heading typically precedes one or more paragraphs of text and visuals.

|

Docu |

|

Page footer The page footer is located at the bottom of a page and typically contains some useful information. |

Document layout analysis example - Page footer Document layout analysis example - Page footer

|

|

Page header Page headers and footers are used interchangeably and contain very similar contextual information. |

Document layout analysis example - Page header Document layout analysis example - Page header

|

|

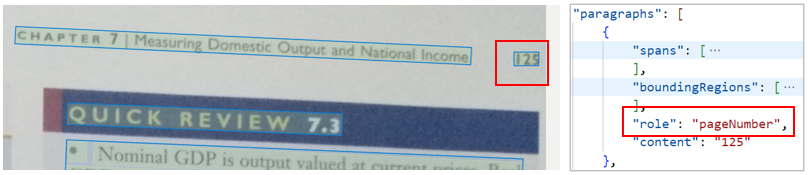

Page number This is self-explanatory. |

Document layout analysis example - |

|

Footnote The Footnote is located near the bottom of a page and typically contains context about the contents. |

Document layout analysis example - Footnote Document layout analysis example - Footnote

|
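
One common use of these roles is to separate running headers, footers, and page numbers from the main text. The following is a minimal sketch, assuming the v3.0 JSON output reports the roles as `title`, `sectionHeading`, `footnote`, `pageHeader`, `pageFooter`, and `pageNumber` on entries of the `paragraphs` array, and omits `role` for ordinary paragraphs. The sample list is hand-written for illustration, and the `"body"` label and both helper names are hypothetical.

```python
from collections import defaultdict

# Hand-written stand-in for the `paragraphs` array of a Layout result (illustrative only).
paragraphs = [
    {"role": "pageHeader", "content": "Contoso Ltd."},
    {"role": "title", "content": "Annual Report"},
    {"role": "sectionHeading", "content": "1. Overview"},
    {"content": "Revenue grew this year."},  # ordinary paragraphs carry no role
    {"role": "footnote", "content": "1 Unaudited figures."},
    {"role": "pageFooter", "content": "Confidential"},
    {"role": "pageNumber", "content": "3"},
]


def group_by_role(paragraphs):
    """Group paragraph text by role; paragraphs without a role go under 'body'."""
    grouped = defaultdict(list)
    for paragraph in paragraphs:
        grouped[paragraph.get("role", "body")].append(paragraph["content"])
    return dict(grouped)


def main_text(paragraphs):
    """Drop page headers, page footers, and page numbers; keep everything else in order."""
    page_furniture = {"pageHeader", "pageFooter", "pageNumber"}
    return [p["content"] for p in paragraphs if p.get("role") not in page_furniture]


print(group_by_role(paragraphs))
print(main_text(paragraphs))
```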

Form Recognizer 3.0 also includes many enhancements for text extraction (OCR), tables and selection mark extractions. Let’s review them next.

Recognition of tables in documents - background

Tables are a popular way of representing large volumes of structured data in financial statements, scientific papers, invoices, purchase orders, and similar documents. Extracting this data efficiently from documents into enterprise data repositories for search, validation, automation, and other business scenarios has become critical in recent times. Automatic table detection and structure recognition have therefore become important areas of research, development, and continuous improvement.

Challenges

Both table detection and structure recognition are hard problems for several reasons. Tables have diverse styles, including borderless tables with complex hierarchical header structures, empty or spanning cells, and even blank spaces between neighboring columns. With adjacent tables, it can be hard to determine whether they should be merged. Invoice tables may contain only a couple of rows, while other tables may span multiple pages. Nested tables present another level of challenge by making table boundaries ambiguous. Tables can also contain other complex regions, such as paragraphs and images, that make disambiguation harder. Finally, images may be of poor quality and may even contain distorted tables.

Research

In recent years, computer vision researchers have been exploring deep neural networks for detecting tables and recognizing table structures from document images. These deep learning-based table detection and structure recognition approaches have substantially outperformed traditional rule-based and statistical machine learning-based methods in terms of both accuracy and capability. Most deep learning-based table detection approaches treat a table as a specific object and borrow various CNN-based object detection and segmentation frameworks, like Faster R-CNN.

Form Recognizer's table extraction is based on a couple of research initiatives. The first is a new table detector that achieves high table localization accuracy, leading to better end-to-end table detection performance. The model also uses a new table structure recognizer for distorted and curved tables. For details, refer to the research paper, “Robust Table Detection and Structure Recognition from Heterogeneous Document Images”.

Another enhancement is a table structure recognition (TSR) approach called TSRFormer, which robustly recognizes complex table structures with geometrical distortions. Unlike previous methods, the researchers treat table separation line prediction as a line regression problem instead of an image segmentation problem, and propose a new two-stage Transformer-based object detection approach to predict separation lines directly from images.

The third image in the following visual shows the improvement for empty cells over other algorithms. For details, refer to the research paper, “TSRFormer: Table Structure Recognition with Transformers”.

Table extraction enhancements in the new release

After reviewing some background on table extraction, let’s see a few examples of relevant enhancements in the new release.

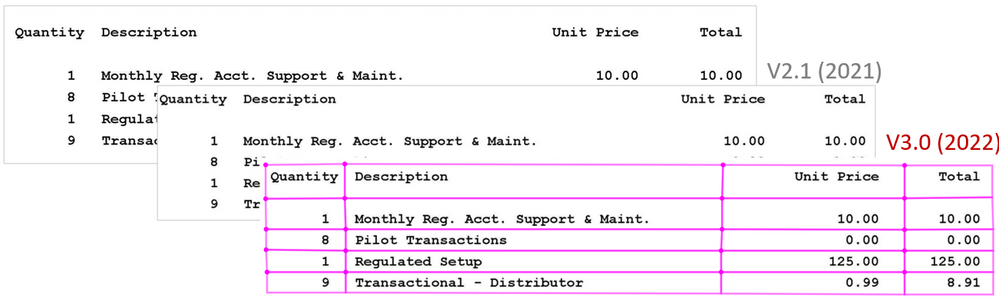

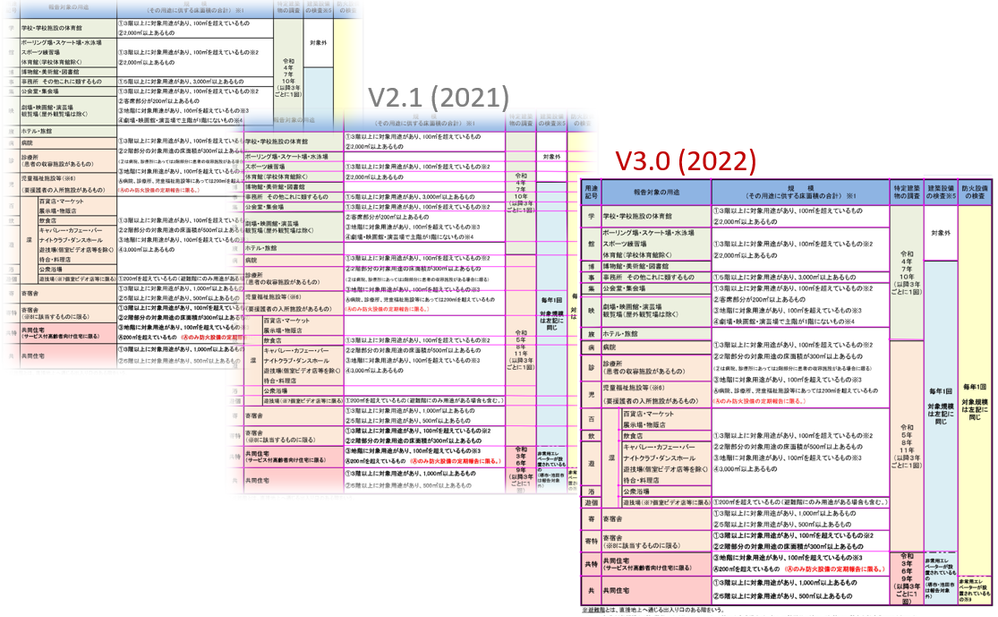

Borderless table detection

The extraction of tables that are missing any borders and cell boundaries has been improved from the previous version. The following example shows a sample result from the latest version.

Dense table extraction

As customers try to fit more information onto each page, table rows are getting narrower and tables contain more rows of text than ever before. The extraction of these dense tables has improved from the previous version. The following example shows a sample result from the latest version.

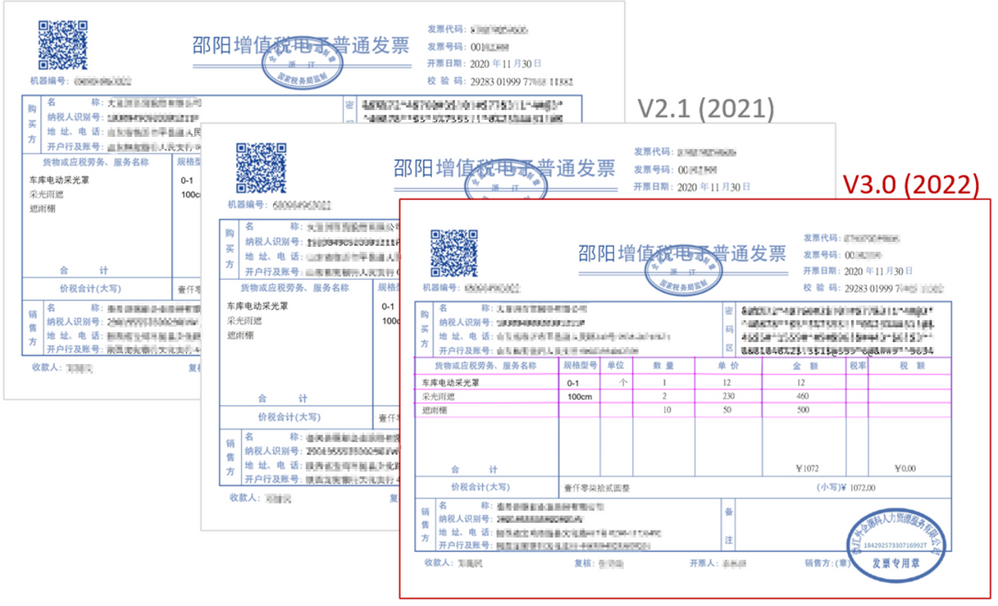

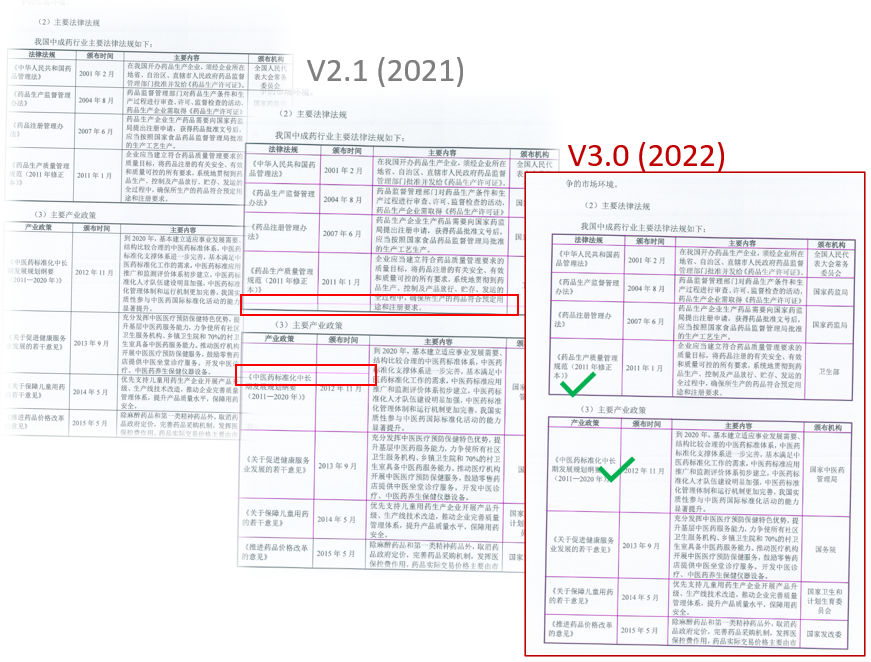

Tables in Asian language documents

The new Form Recognizer release has enhanced support for table extraction in Chinese, Japanese, and Korean (CJK) documents. The following example shows a table that was previously missed and is extracted in the latest version.

Invoice style tables with long descriptions

Analyzing invoice documents with line items requires correct extraction of tables in which the line-item descriptions are long relative to the rest of the table. The following example shows the improved table detection in the latest version.

Tables with large spanning cells and vertical text

Analyzing documents with vertical text in Asian languages requires recognition of long spanning columns with vertical text. The following example shows improved table extraction for an example image.
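
Spanning cells matter when the extracted table is turned back into a grid. The following is a minimal sketch, assuming the v3.0 JSON shape in which each table has `rowCount`, `columnCount`, and a flat `cells` list, and each cell has `rowIndex`, `columnIndex`, and optional `rowSpan` and `columnSpan` that default to 1 when absent. The sample table is hand-written for illustration, and `table_to_grid` is a hypothetical helper name.

```python
# Hand-written stand-in for one entry of the `tables` array (illustrative only).
table = {
    "rowCount": 3,
    "columnCount": 3,
    "cells": [
        {"rowIndex": 0, "columnIndex": 0, "rowSpan": 2, "content": "Region"},
        {"rowIndex": 0, "columnIndex": 1, "columnSpan": 2, "content": "Sales"},
        {"rowIndex": 1, "columnIndex": 1, "content": "Q1"},
        {"rowIndex": 1, "columnIndex": 2, "content": "Q2"},
        {"rowIndex": 2, "columnIndex": 0, "content": "East"},
        {"rowIndex": 2, "columnIndex": 1, "content": "10"},
        {"rowIndex": 2, "columnIndex": 2, "content": "12"},
    ],
}


def table_to_grid(table):
    """Expand the flat cell list into a 2D grid, repeating content across spanned cells."""
    grid = [[None] * table["columnCount"] for _ in range(table["rowCount"])]
    for cell in table["cells"]:
        row_end = cell["rowIndex"] + cell.get("rowSpan", 1)
        col_end = cell["columnIndex"] + cell.get("columnSpan", 1)
        for row in range(cell["rowIndex"], row_end):
            for col in range(cell["columnIndex"], col_end):
                grid[row][col] = cell["content"]
    return grid


for row in table_to_grid(table):
    print(row)
```

Repeating the content of a spanning cell in every position it covers is one design choice; leaving the covered positions empty is equally valid when the grid is only used for display.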

General table improvements

In addition to the specific examples mentioned previously, the latest layout analysis model contains several general table improvements, such as reducing the occurrence of additional rows mid-table. The following image shows one such example from the latest model.

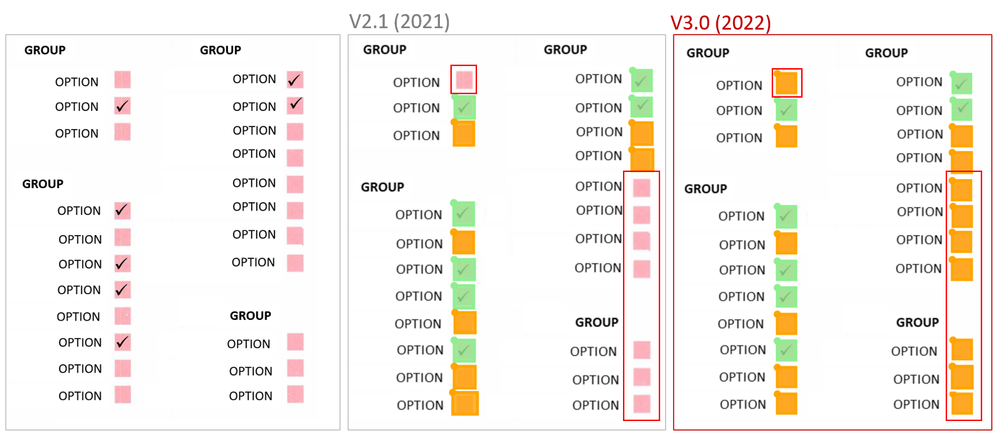

Selection mark extraction improvements

Extraction of shaded selection marks is enhanced in the latest model. The following example shows the improvement in the latest output compared with the previous version.
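
Selection marks can be tallied directly from the page-level output. The following is a minimal sketch, assuming the v3.0 JSON shape in which each page has a `selectionMarks` list whose entries carry a `state` of `selected` or `unselected` and a `confidence` score. The sample data is hand-written for illustration, and the 0.5 threshold, the `low_confidence` bucket, and the `count_marks` helper are hypothetical choices.

```python
from collections import Counter

# Hand-written stand-in for the `pages` array of a Layout result (illustrative only).
pages = [
    {
        "pageNumber": 1,
        "selectionMarks": [
            {"state": "selected", "confidence": 0.98},
            {"state": "unselected", "confidence": 0.95},
            {"state": "selected", "confidence": 0.42},
        ],
    },
]


def count_marks(pages, min_confidence=0.5):
    """Count marks by state, routing low-confidence marks to a separate review bucket."""
    counts = Counter()
    for page in pages:
        for mark in page.get("selectionMarks", []):
            if mark["confidence"] >= min_confidence:
                counts[mark["state"]] += 1
            else:
                counts["low_confidence"] += 1
    return dict(counts)


print(count_marks(pages))
```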

Text extraction (OCR) enhancements

The latest layout analysis model includes several OCR enhancements that work with structural analysis to output the final combined results. Check out the Form Recognizer Read OCR enhancements blog post to learn more about the following improvements in the latest layout model:

- Print OCR for languages in the Cyrillic, Arabic, and Devanagari scripts

- Handwriting OCR for Chinese, Japanese, Korean, and Latin languages

- Dates extraction

- Boxed and single character extraction

- Check MICR text extraction

- LED text extraction

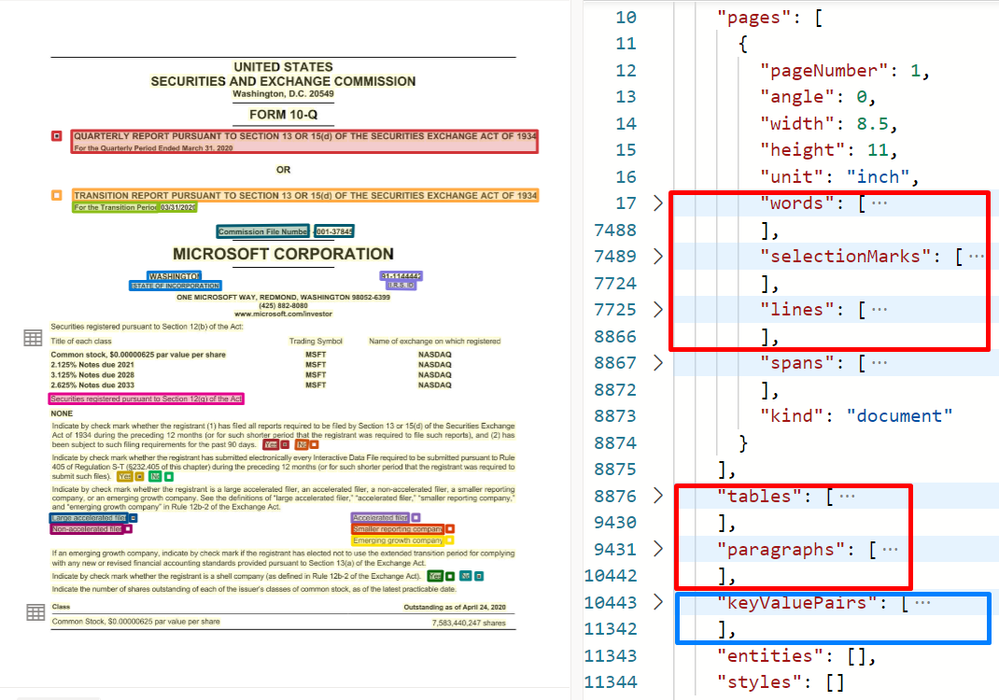

Layout results in other Form Recognizer models

Form Recognizer’s document layout analysis model powers its General Document, prebuilt, and Custom model capabilities to varying degrees. If you are using those models, Layout extractions like text, tables, and selection marks may be included in their output, depending on the model.

For example, in addition to key-value pairs, the following General Document model output shows the extracted words, lines, paragraphs, tables, and selection marks from the layout analysis step. Custom models also include the same results in their JSON output.

Get Started with Form Recognizer Layout model

Start with the new Layout model in Form Recognizer with the following options:

1. Try it in Form Recognizer Studio by creating a Form Recognizer resource in Azure and trying it out on the sample document or on your documents.

2. Start with the SDK QuickStarts for code samples in C#, Python, JavaScript, and Java. The following code snippet uses the Python SDK to show how to use the Layout analysis model. Refer to the QuickStart for the full sample.

3. Follow the REST API QuickStart. All it takes is two operations to extract the results.
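
As a rough illustration of the SDK option, the following sketch calls the Layout model from Python. It assumes the `azure-ai-formrecognizer` package (version 3.2 or later) is installed. The environment variable names and the `summarize_layout` helper are hypothetical and exist only for this example; refer to the QuickStart for the official sample.

```python
import os


def analyze_layout(endpoint, key, document_url):
    """Run the prebuilt Layout model on a document URL and return the analysis result."""
    # Imported lazily so that summarize_layout can be used without the SDK installed.
    from azure.ai.formrecognizer import DocumentAnalysisClient
    from azure.core.credentials import AzureKeyCredential

    client = DocumentAnalysisClient(endpoint=endpoint, credential=AzureKeyCredential(key))
    # Analysis is a long-running operation; the poller waits for it to finish.
    poller = client.begin_analyze_document_from_url("prebuilt-layout", document_url)
    return poller.result()


def summarize_layout(result):
    """Describe the paragraphs that have a role and the size of each extracted table."""
    lines = []
    for paragraph in result.paragraphs or []:
        if paragraph.role:
            lines.append(f"{paragraph.role}: {paragraph.content}")
    for index, table in enumerate(result.tables or []):
        lines.append(f"table {index}: {table.row_count} rows x {table.column_count} columns")
    return lines


# Hypothetical environment variable names; the call runs only when they are set.
if __name__ == "__main__" and os.environ.get("FORM_RECOGNIZER_ENDPOINT"):
    layout = analyze_layout(
        os.environ["FORM_RECOGNIZER_ENDPOINT"],
        os.environ["FORM_RECOGNIZER_KEY"],
        os.environ["DOCUMENT_URL"],
    )
    print("\n".join(summarize_layout(layout)))
```

Keeping the summary logic separate from the network call makes it possible to exercise it against saved or mocked results.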

Customer success – EY

Trust is central to how the EY organization serves its clients, and emerging technologies play a crucial role in maintaining it. One important initiative at the EY organization is to improve the accuracy of data extracted from important business documents such as contracts and invoices. The EY Technology team collaborated with Microsoft to build a platform that speeds up invoice extraction and contract comparison. Using Azure Form Recognizer and the Azure Custom Vision API, EY teams have been able to automate and improve Optical Character Recognition (OCR) and document handling for their consulting, tax, audit, and transactions services clients.

Learn more about the EY story and other Form Recognizer customer successes.

Additional resources

The Form Recognizer v3.0 announcement article covers all new capabilities and enhancements.