This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

This is a follow-up blog to ForEach Loop to CopyData and Capture Metadata in ControlTable - Part 1(Environment Setup)

In the previous blog we setup the pre-requisites

- ADLS Gen2 account with 3 containers and 6 delimited text files. Each container having a directory with the same name.

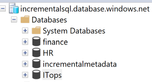

- Azure SQL server with 3 target databases (finance, HR, ITops) and one database that holds the metadata (incrementalmetadata)

- We created schemas and empty tables which will serve as sink tables

- We inserted values in the MetadataControlTable

We shall now begin in setting up the Data Factory Environment which comprises of

Linked services ->Linked services are much like connection strings, which define the connection information that's needed for Data Factory to connect to external resources.

Datasets -> Datasets represent data structures within the data stores, which simply point to or reference the data you want to use in your activities as inputs or outputs.

Pipeline -> A data factory might have one or more pipelines. A pipeline is a logical grouping of activities that performs a unit of work. Together, the activities in a pipeline perform a task

Activity -> Activities represent a processing step in a pipeline. For example, you might use a copy activity to copy data from one data store to another data store

Note: For this lab we shall be using Azure Integration Runtime and the same naming conventions for Linked Services and Datasets

Environment Setup

1. Creating Dynamic/Static Linked Services

We shall be creating 3 linked services to point at ADLS Gen2 Account, Azure SQL Server (for dynamic databases), Azure SQL Server (for static table MetadataControlTable)

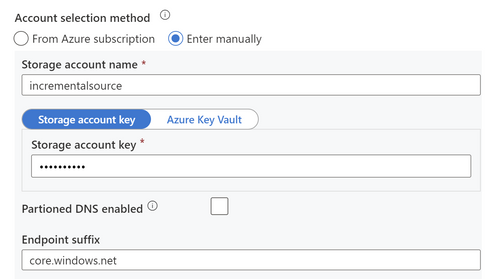

A) Create the incrementalsource Blob Storage linked service as follows

Select the storage account which you have created in the previous lab.

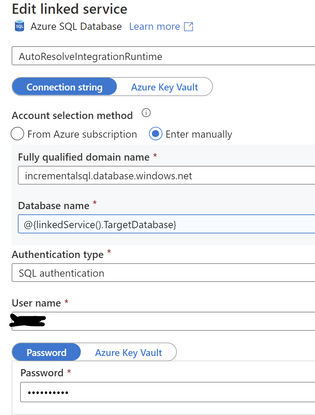

B) Create the incrementalsink AzureSQLDatabase linked service as follows

Create an Azure SQL Database linked service and pass the server name which you created in the previous lab. An additional step will be declaring variables to pass database names, schema names and target tables names

Ensure you are creating & declaring the following parameters in the parameters section

(Note: it does not matter what string you mention in the default value as it will be overwritten when executing the pipeline)

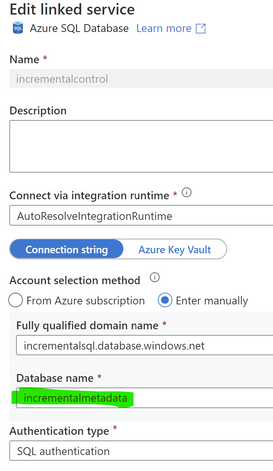

C) Create incrementalcontrol linked service which will explicitly be pointing to the incrementalmetadata database

Create another Azure SQL Database linked service as follows. This particular linked service will not have any parameters rather database name "incrementalmetadata"

Test connections before saving these linked services

2. Creating Dynamic/Static Datasets

We will now be creating

1 static dataset -> to reference the metadata control table

2 dynamic datasets -> one for each our Storage Account and other for databases within Azure SQL server

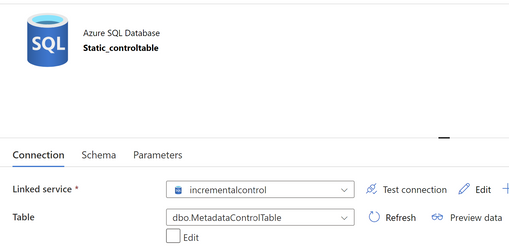

A) Static Dataset -> I named mine as Static_controltable

Let’s imagine you need to connect to 'X' Azure Storage account to copy data from and 'Y' Azure SQL databases where you need to insert that data into. If hardcoded, it would mean creating N Linked Services, and N datasets only for your source & sink data stores.

We will be parameterizing the container/directory/filenames for storage accounts and database/schema/table for the database in the datasets as well. We need now create 2 dynamic datasets

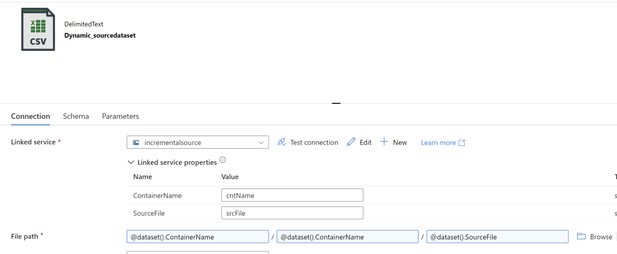

B) Dynamic Source -> I named mine as Dynamic_sourcedataset for the storage account

Since the directory name is same as the container name we passing the same variables @dataset().ContainerName twice and for filename & directory. The files are referenced with @dataset().SourceFile variable. When updating File path information you have an option to 'Add dynamic content’. Select this menu to assign these values for each of the File Path parameters as follows

The final values will look like this

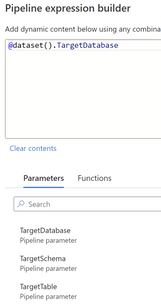

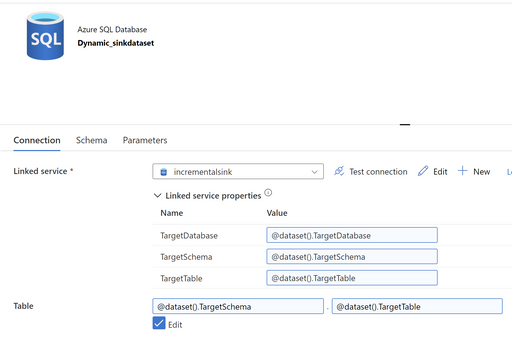

C) Dynamic Sink -> I named mine as Dynamic_sinkdataset for the storage account

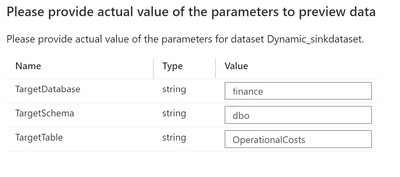

Here too we will use variables we declared when creating the dynamic linked service for Azure SQL Server. Namely TargetDatabase, TargetSchema and TargetTable. Which we will pass these respectively by clicking on 'Add dynamic content’ menu.

The final values will look like this

Test all three datasets with 'Preview Data' button. It will create pop-ups asking you to provide the values for testing the connections. Pass the values for Dynamic_sourcedataset & Dynamic_sinkdataset as follows

Source:

Sink:

At the end of this you should have 3 linked services

- incrementalsource

- incrementalsink

- incrementalcontrol

and 3 datasets namely

- Static_controltable

- Dynamic_sourcedataset

- Dynamic_sinkdataset

Post this we are ready to create our pipeline with activities. The pipeline will capture the metadata in the static table which is also referenced for mapping information. The pipeline setup will be covered in the next article

References:

- https://techcommunity.microsoft.com/t5/fasttrack-for-azure/build-metadata-driven-pipelines-in-azure-data-factory/ba-p/3443328

- https://www.mssqltips.com/sqlservertip/6320/logging-azure-data-factory-pipeline-audit-data/

- https://learn.microsoft.com/en-us/azure/data-factory/solution-template-bulk-copy-from-files-to-database

- https://learn.microsoft.com/en-us/azure/data-factory/monitor-schema-logs-events

- https://learn.microsoft.com/en-us/azure/data-factory/control-flow-expression-language-functions

- https://learn.microsoft.com/en-us/azure/data-factory/control-flow-system-variables

- https://learn.microsoft.com/en-us/azure/data-factory/parameters-data-flow

Don't forget to share a

Credit: Thanks Gary Lee, Nathan Widdup, Paul Burpo for reviews and guidance

FastTrack for Azure: Move to Azure efficiently with customized guidance from Azure engineering.