This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

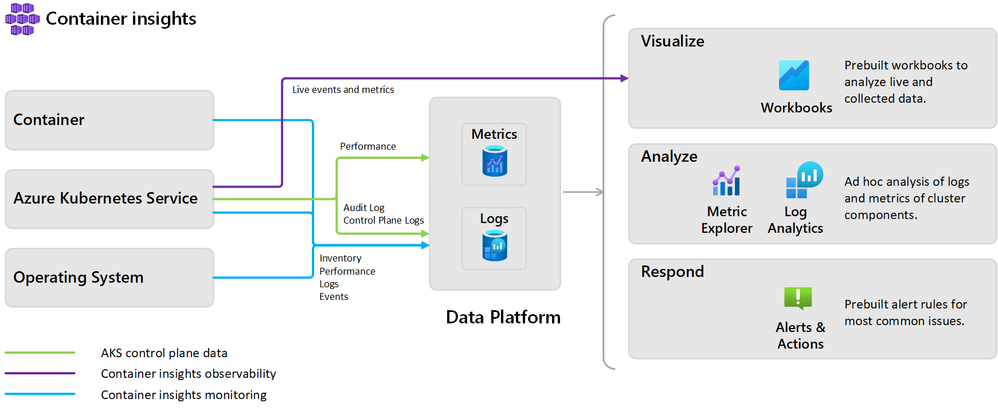

When migrating your services to AKS, you may run into an issue related to logging levels and the volume of data sent to Container Insights. This is especially true when you run hundreds or even thousands of pods, because AKS clusters are chatty and generate a lot of logs. You may notice a massive volume of metrics being pushed from the containers running inside the pods into Container Insights (mostly CPU and memory metrics). By default, these are collected every minute for every container.

Given that collection rate and the number of pods you run, you can easily end up pushing several GB of data to Container Insights each day. You may think about applying daily caps to limit the cost impact, especially on a non-production cluster (which you should have). However, caps will leave you blind in some areas. On the production cluster, apply no caps or limits, because you need full visibility there.

For example, if you have 1000 pods running in your AKS cluster, you may ingest an estimated 20 GB of logs and metrics per day, counting only the Perf, ContainerInventory and KubeInventory tables.
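
The estimate above comes down to simple arithmetic. The sketch below takes the post's example of about 20 GB/day for 1000 pods and derives a per-pod daily average and a monthly total. The decimal units (1 GB = 1000 MB) and the 30-day month are assumptions made for illustration, and the function names are hypothetical.

```python
"""Back-of-the-envelope ingestion arithmetic for the example in the text."""

DAILY_GB = 20.0      # example figure from the text (Perf, ContainerInventory, KubeInventory)
PODS = 1000          # example pod count from the text
DAYS_PER_MONTH = 30  # assumption: a 30-day month


def per_pod_mb_per_day(daily_gb: float, pods: int) -> float:
    """Average ingestion per pod per day in MB, assuming decimal units (1 GB = 1000 MB)."""
    if pods <= 0:
        raise ValueError("pods must be positive")
    return daily_gb * 1000 / pods


def monthly_gb(daily_gb: float, days: int = DAYS_PER_MONTH) -> float:
    """Total ingestion over `days` days, in GB."""
    return daily_gb * days


if __name__ == "__main__":
    print(f"{per_pod_mb_per_day(DAILY_GB, PODS):.1f} MB per pod per day")  # 20.0 MB per pod per day
    print(f"{monthly_gb(DAILY_GB):.0f} GB per month")  # 600 GB per month
```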

So what are your options for tuning collection so that you control the volume of data being pushed, and therefore the associated costs?

First, you can enable the “ContainerLogV2” schema for container logs and then configure that table for “Basic Logs”. Basic Logs is a new table plan in Azure Monitor Log Analytics. Its data ingestion costs are much lower than those of the default Analytics Logs plan. In exchange, it has a shorter retention period and supports fewer log query operations. You can offset the shorter retention with the new log archive feature, or by exporting all logs to a Storage Account for long-term cold storage.

This fix works for the ContainerLog tables, which receive the stdout and stderr logs from all containers. Another table that sometimes causes issues is ContainerInventory. It holds information about all pods running in the cluster and is updated every few minutes for every single pod, so large clusters can suffer from it. The fix here is to stop the agent from collecting environment variables, which probably account for more than 90% of the size of the ContainerInventory table. To do this, set `enabled = false` under `[log_collection_settings.env_var]` in the agent's ConfigMap.

If the previous two actions are not enough to bring costs down, you can also exclude stdout/stderr or certain namespaces from log collection. With the previous two fixes in place this usually shouldn't be needed, but you can always fully disable stdout/stderr collection and exclude namespaces from agent data collection.

But what about the Perf, ContainerInventory and KubeInventory tables? The obvious first idea is to lengthen the collection interval, for example from 1 minute to 2 minutes. How can you do that today?
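
A longer interval only helps the rows that are emitted once per interval. The sketch below counts how many samples a single per-container counter produces per day at a given interval. It also computes the relative reduction you get from lengthening that interval. The one-container-per-pod assumption and the function names are hypothetical.

```python
"""Samples per day for a per-container metric collected at a fixed interval."""

MINUTES_PER_DAY = 24 * 60  # 1440


def samples_per_day(containers: int, interval_minutes: int) -> int:
    """Number of samples per day for one counter across `containers` containers."""
    if interval_minutes <= 0 or MINUTES_PER_DAY % interval_minutes != 0:
        raise ValueError("interval must be a positive divisor of 1440 minutes")
    return containers * (MINUTES_PER_DAY // interval_minutes)


def sample_reduction(old_minutes: int, new_minutes: int) -> float:
    """Fraction of samples saved by moving from old_minutes to new_minutes."""
    return 1 - old_minutes / new_minutes


if __name__ == "__main__":
    containers = 1000  # assumption: 1000 pods with one container each
    for minutes in (1, 2, 5):
        print(minutes, samples_per_day(containers, minutes))  # 1440000, 720000, 288000
    print(f"1m -> 2m saves {sample_reduction(1, 2):.0%} of samples")  # 50%
    print(f"1m -> 5m saves {sample_reduction(1, 5):.0%} of samples")  # 80%
```

Not every row is emitted per interval, so the overall cost reduction will typically be smaller than the raw sample reduction.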

The first, short-term option is a new preview feature called “Azure Monitor Container Insights DCR”. It lets you use Data Collection Rules (DCRs) to customize the Container Insights collection settings, including the metrics collection frequency in the agent (currently fixed at 1 minute). For example, you can configure the agent to collect data every 5 minutes, or to monitor only certain namespaces. This can drastically cut the cost of the performance and inventory tables, such as Perf, ContainerInventory and KubeInventory, because less data is collected for them. The savings depend on how aggressively you filter and how far you lower the collection frequency, both of which follow from your monitoring requirements. Expect to cut the cost of those tables by roughly 40% to 50%.

The second, long-term option is the “Azure Monitor Managed Prometheus” service. Choose this option if you really need all that data collected, for example if you still need to monitor all your namespaces or keep the same metrics collection frequency in the agent. It is expected to be a lot cheaper than the logging component and will eventually replace the logging solution for performance counters. It is already in public preview.

To get started, you can sign up for the private preview of the short-term solution, which is expected to go live by the end of 2022. Later, you can switch to the long-term solution once the Azure Prometheus service reaches GA, which is expected around March 2023. At that point, all those large performance tables will be replaced by Prometheus metrics. That would reduce costs and give you a more permanent solution.

To sign up for the “Azure Monitor Container Insights DCR” feature, you must provide your subscription IDs so that they can be allowlisted for access. Use the following form: https://forms.office.com/r/1WLhGJaeJz. If you sign up, you will receive exact instructions on how to use it.

Generally, the recommendation is not to use private preview features in production workloads. For this one specifically, once it moves to public preview a few months later, it is up to you to evaluate the stability of your platform and decide whether you feel confident using it. That said, we would say it should be relatively safe to use, because we are not relying on any preview backend engineering under the hood. Instead, we are using GA-level constructs such as DCRs in our agent for data collection.

Note: There is also a third option called “Ingestion-Time Transformation”, but we do not recommend it, and it is also in preview. We do not recommend it because it will break your Container Insights experience, for example if you have pinned dashboards or have already set up queries and alerts on top of those tables. Ingestion-time transformations can reduce the amount of data stored in Log Analytics for those tables. The difference from the DCR settings (the first option) is what gets reduced. A transformation does not reduce the amount of data the agent collects and sends. It only filters what ends up in Log Analytics.
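
The difference between filtering in the agent (the DCR settings) and filtering at ingestion time can be illustrated with a toy model. This is not Azure Monitor code. The `Record` type, namespace names and byte sizes are made up, and the model ignores batching, compression and pricing. It only tracks how many bytes leave the agent compared with how many end up stored in Log Analytics.

```python
"""Toy model: agent-side (DCR) filtering vs. ingestion-time transformation."""
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    namespace: str
    size_bytes: int


def agent_side_filter(records: list[Record], excluded: set[str]) -> tuple[int, int]:
    """DCR-style: excluded namespaces are never collected.

    Returns (bytes sent by the agent, bytes stored in Log Analytics).
    """
    kept = sum(r.size_bytes for r in records if r.namespace not in excluded)
    return kept, kept


def ingestion_time_filter(records: list[Record], excluded: set[str]) -> tuple[int, int]:
    """Transformation-style: the agent sends everything and the pipeline drops
    excluded rows before they are stored.

    Returns (bytes sent by the agent, bytes stored in Log Analytics).
    """
    sent = sum(r.size_bytes for r in records)
    stored = sum(r.size_bytes for r in records if r.namespace not in excluded)
    return sent, stored


if __name__ == "__main__":
    records = [Record("kube-system", 300), Record("app", 700)]
    print(agent_side_filter(records, {"kube-system"}))      # (700, 700)
    print(ingestion_time_filter(records, {"kube-system"}))  # (1000, 700)
```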