This post has been republished via RSS; it originally appeared at Microsoft Tech Community - Latest Blogs.

Azure Neural Text-to-Speech (Neural TTS) is a powerful AIGC (AI-generated content) service that turns text into lifelike speech. It is used in a wide range of scenarios, including voice assistants, content read-aloud, and accessibility. Over the past months, Azure Neural TTS has achieved parity with natural human recordings (see details) and has been extended to support more than 140 languages and variants (see details). These highly natural voices are available in the cloud and on premises through containers.

At the same time, we have received many customer requests to support Neural TTS on devices, especially for scenarios where devices have no network access or an unstable connection, and for scenarios that require extremely low latency or have privacy constraints. For example, users of screen readers for accessibility (such as the speech feature on Windows) are asking for a better voice experience through higher-quality on-device TTS. Automobile manufacturers are requesting voice assistants that keep working in cars when disconnected.

To address the need for high-quality embedded TTS, we developed a new-generation device neural TTS technology that significantly improves embedded TTS quality compared to traditional on-device TTS voices, e.g., those based on the legacy SPS (Statistical Parametric Speech Synthesis) technology. Thanks to this new technology, natural on-device voices have been released in Microsoft’s flagship products such as Windows 11 Narrator, and are now available in Speech services for Azure customers.

Neural voices on-device

A set of natural on-device voices recently became available with Narrator on Windows 11. Check the video below to hear how natural the new voices sound and how much better they are than the old-generation embedded voices.

Windows 11 Narrator natural voices (from 30:27 to 32:44 in the video)

With Azure Speech service, you can easily embed the same natural on-device voices into your own business scenarios. Check the demo below to see how seamlessly a mobile speech experience switches from a connected environment to a disconnected one, with neural TTS voices available both in the cloud and embedded.

Seamless switch between cloud TTS and device TTS with Azure device neural TTS technology
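The switching behavior in the demo can be pictured as a simple fallback: try the cloud voice first, and use the embedded voice when the network call fails. The sketch below only illustrates that control flow. `synthesize_with_fallback`, `cloud_synthesize`, and `device_synthesize` are hypothetical stand-ins, not Speech SDK functions, and the returned bytes are placeholders rather than real audio.

```python
from typing import Callable, Tuple

# Hypothetical type: a synthesizer takes text and returns raw audio bytes.
Synthesizer = Callable[[str], bytes]


def synthesize_with_fallback(
    text: str, cloud: Synthesizer, device: Synthesizer
) -> Tuple[str, bytes]:
    """Try the cloud voice first; fall back to the embedded voice on network errors."""
    try:
        return "cloud", cloud(text)
    except (ConnectionError, TimeoutError):
        # No network or an unstable network: use the on-device voice instead.
        return "device", device(text)


# Stand-in synthesizers used only to exercise the control flow.
def cloud_synthesize(text: str) -> bytes:
    raise ConnectionError("network unavailable")  # simulate a disconnected device


def device_synthesize(text: str) -> bytes:
    return b"\x00" * (2 * len(text))  # placeholder bytes, not real audio


source, audio = synthesize_with_fallback("Hello", cloud_synthesize, device_synthesize)
print(source, len(audio))
```

In a real application the two callables would wrap the cloud and embedded synthesizers, and the set of exceptions treated as "network unavailable" would depend on the client library in use.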

This new generation on-device neural TTS has three key advances: high quality, high efficiency, and high responsiveness.

High quality

Traditional on-device TTS voices are built with the legacy SPS technology, and their voice quality is significantly lower than that of cloud-based TTS, typically with a MOS (Mean Opinion Score) gap greater than 0.5. With the new device neural TTS technology, we have nearly closed the gap between device TTS and cloud TTS. Our MOS and CMOS (Comparative Mean Opinion Score) tests show that device neural TTS voice quality is very close to that of cloud TTS.

The table below compares voice naturalness, output support, and features across traditional device TTS (SPS), device neural TTS, and cloud neural TTS. Here, ‘traditional device TTS’ is the device SPS technology we shipped on Windows 10 and earlier Windows versions, which is also the main technology used for embedded TTS in the industry today.

| | Traditional device TTS | Device neural TTS | Cloud neural TTS |
| --- | --- | --- | --- |
| MOS gap (on-device neural TTS as the base) | ~-0.5 | 0 | ~+0.05 |
| 16 kHz fidelity | Yes | Yes | Yes |
| 24 kHz fidelity | No | Yes | Yes |
| 48 kHz fidelity | No | No | Yes |
| | No | No | Yes |

As the comparison above shows, with the new technology the naturalness of device neural TTS voices has reached near parity with the cloud version. Hear how close they sound in the samples below.

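| Voice | Device neural TTS | Cloud neural TTS |
| --- | --- | --- |
| Jenny, en-US | (audio sample) | (audio sample) |
| Guy, en-US | (audio sample) | (audio sample) |
| Xiaoxiao, zh-CN | (audio sample) | (audio sample) |
| Yunxi, zh-CN | (audio sample) | (audio sample) |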

High efficiency

Deploying neural network models to IoT devices is a big challenge today, both for AI research and for many industries. For device TTS, the challenge is even bigger: according to our customers’ experience, target devices are often low-end, and the system reserves only a small share of CPU for TTS. Device neural TTS therefore has to be extremely efficient.

Below are the metrics and the score card for our device neural TTS system. Overall, its efficiency is close to some traditional device TTS systems and can meet almost all customers’ requirements on efficiency.

| Metrics | Values |
| --- | --- |
| CPU usage (DMIPS) | ~1200 |
| RTF¹ (820A², 1 thread) | ~0.1 |
| Output sample rate | 24 kHz |
| Model size (bytes) | ~5 MB (acoustic model + vocoder) |
| Memory usage (bytes) | <120 MB |
| NPU³ support | Yes |

Notes:

- RTF, or Real-Time Factor, is the time in seconds needed to generate 1 second of audio.

- 820A is a CPU that is widely used in car systems today. It is a typical platform for device TTS that most customers can adopt, so we use it as our measurement platform.

- NPU, or Neural Processing Unit, is a critical on-chip component for AI-related processing. It can accelerate neural network inference efficiently without increasing general CPU usage. More and more IoT device makers, such as car manufacturers, are using NPUs to accelerate their systems.
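To make the RTF definition concrete, here is a minimal sketch of the arithmetic. The function names are ours, and the timings passed in are illustrative inputs, not measurements from the system described in this post.

```python
def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """RTF = time spent synthesizing / duration of the audio produced."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return synthesis_seconds / audio_seconds


def synthesis_time(audio_seconds: float, rtf: float) -> float:
    """Estimated compute time needed to produce `audio_seconds` of audio."""
    return audio_seconds * rtf


# Illustrative input: 1 s of compute for 10 s of audio.
print(real_time_factor(1.0, 10.0))
# Estimated compute time for 30 s of speech at an RTF of 0.1.
print(synthesis_time(30.0, 0.1))
```

An RTF below 1 means audio is generated faster than it is played back, which is a precondition for uninterrupted streaming playback.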

High responsiveness

High synthesis speed and low latency are critical to the user experience of a text-to-speech system. To make the system highly responsive, we designed device neural TTS to synthesize in streaming mode, so the latency is independent of the length of the input sentence. This gives consistently low latency and a responsive experience. To achieve streaming synthesis, both the acoustic model and the vocoder must support streaming inference.

With the streaming inference design, we achieved 100 ms latency on the 820A with 1 thread.
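Why streaming makes latency independent of sentence length can be seen in a small simulation. This is a sketch under stated assumptions, not the actual inference code: `CHUNK_COST_MS` is an arbitrary simulated cost per chunk, and the "audio" is just a string.

```python
from typing import Iterator, List, Tuple

CHUNK_COST_MS = 100  # arbitrary simulated compute cost per chunk (assumption)


def stream_synthesize(frames: List[str]) -> Iterator[Tuple[int, str]]:
    """Yield (elapsed_ms, audio_chunk) as soon as each chunk is ready."""
    elapsed = 0
    for frame in frames:
        elapsed += CHUNK_COST_MS  # acoustic model + vocoder run on one chunk
        yield elapsed, f"audio({frame})"


def first_audio_latency_streaming(frames: List[str]) -> int:
    """With streaming, playback can start when the first chunk arrives."""
    elapsed, _ = next(stream_synthesize(frames))
    return elapsed


def first_audio_latency_batch(frames: List[str]) -> int:
    """Without streaming, playback waits for the whole sentence."""
    return list(stream_synthesize(frames))[-1][0]


short_input, long_input = ["a"] * 3, ["a"] * 30
print(first_audio_latency_streaming(short_input), first_audio_latency_streaming(long_input))
print(first_audio_latency_batch(short_input), first_audio_latency_batch(long_input))
```

In the simulation, the streaming latency is the same for the 3-chunk and the 30-chunk input, while the batch latency grows in proportion to the input length.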

How did we do that?

To improve device TTS with neural networks, we adopted a pipeline architecture similar to that of cloud TTS. The pipeline contains three major components: a text analyzer, an acoustic model, and a vocoder.

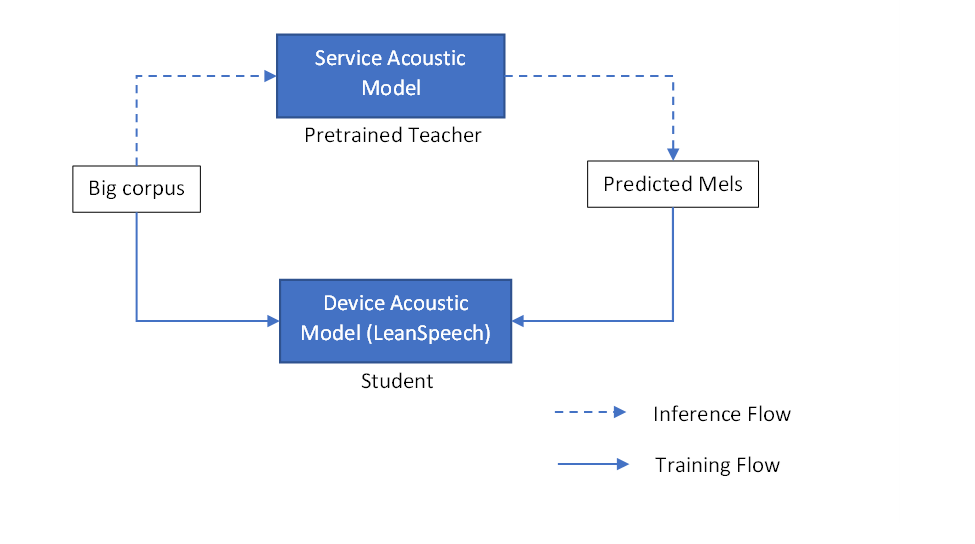

For the acoustic model, we designed a brand-new model architecture named “LeanSpeech”, a very lightweight and efficient model with high learning capability. We use LeanSpeech as a student model that learns from the service model, which acts as the teacher. With this design, we achieved an acoustic model of 2.9 MB with quality close to that of the service acoustic model in the cloud.
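The teacher-student idea can be shown with a toy example. This is not LeanSpeech or its training recipe, which this post does not describe; it is a minimal sketch in which a hypothetical `teacher` function stands in for a large trained model and a two-parameter student is fitted to the teacher's outputs rather than to human-labeled targets.

```python
import random


def teacher(x: float) -> float:
    """Stand-in for a large, already-trained model (hypothetical)."""
    return 3.0 * x + 0.5


def distill(inputs, steps: int = 2000, lr: float = 0.1):
    """Fit a tiny student y = w*x + b to the teacher's outputs with gradient descent."""
    w, b = 0.0, 0.0
    targets = [teacher(x) for x in inputs]  # teacher predictions, not human labels
    n = len(inputs)
    for _ in range(steps):
        # Gradients of the mean squared error between student and teacher outputs.
        grad_w = sum(2 * (w * x + b - t) * x for x, t in zip(inputs, targets)) / n
        grad_b = sum(2 * (w * x + b - t) for x, t in zip(inputs, targets)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


random.seed(0)
xs = [random.uniform(-1.0, 1.0) for _ in range(50)]
w, b = distill(xs)
print(round(w, 3), round(b, 3))
```

The student here recovers the teacher's behavior because the toy teacher is linear. In practice the student is a smaller network that only approximates the teacher, trading some quality for size and speed.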

In addition, we developed the device vocoder based on our latest service HiFiNet vocoder in the cloud. The biggest challenge was computation cost. If we simply combined LeanSpeech with HiFiNet, HiFiNet accounted for more than 90% of the computation cost, and the total CPU usage would block adoption on low-end devices or on systems with a limited CPU budget, as in many in-car assistant scenarios.

To solve these challenges, we redesigned HiFiNet with highly efficient model units and applied model compression methods such as model distillation. As a result, the on-device model is 7x smaller and its computation cost is 4x lower than that of the service vocoder in the cloud.
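A back-of-the-envelope estimate shows why the vocoder was the right place to optimize. The sketch below applies an Amdahl-style formula and assumes the vocoder share is exactly 90% (the post says only "more than 90%"), so the result is an illustration rather than a measured figure.

```python
def overall_speedup(component_share: float, component_speedup: float) -> float:
    """Amdahl-style estimate: only `component_share` of the cost gets cheaper."""
    if not 0.0 <= component_share <= 1.0:
        raise ValueError("component_share must be between 0 and 1")
    if component_speedup <= 0:
        raise ValueError("component_speedup must be positive")
    remaining = (1.0 - component_share) + component_share / component_speedup
    return 1.0 / remaining


# Assumption: the vocoder is exactly 90% of the cost and becomes 4x cheaper.
print(round(overall_speedup(0.90, 4.0), 2))
```

Under these assumptions, a 4x cheaper vocoder cuts the total pipeline cost by roughly 3x, whereas the same 4x gain on the remaining 10% would barely change the total.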

Get started

Embedded Speech with device neural TTS is in public preview with limited access. You can check how to use it with the Speech SDK here. Apply for access through the Azure Cognitive Services embedded speech limited access review. For more information, see Limited access for embedded speech.

The languages and voices below are released through the Azure Embedded Speech public preview. More languages will be supported based on business needs.

| Locale Name | Voice Name | Gender |
| --- | --- | --- |
| en-US | Jenny | Female |
| en-US | Aria | Female |
| zh-CN | Xiaoxiao | Female |
| de-DE | Katja | Female |
| en-GB | Libby | Female |
| ja-JP | Nanami | Female |
| ko-KR | SunHi | Female |
| en-AU | Annette | Female |
| en-CA | Clara | Female |
| es-ES | Elvira | Female |
| es-MX | Dalia | Female |
| fr-CA | Sylvie | Female |
| fr-FR | Denise | Female |
| it-IT | Isabella | Female |
| pt-BR | Francisca | Female |
| en-US | Guy | Male |
| zh-CN | Yunxi | Male |
| de-DE | Conrad | Male |
| en-GB | Ryan | Male |
| ja-JP | Keita | Male |
| ko-KR | InJoon | Male |
| en-AU | William | Male |
| en-CA | Liam | Male |
| es-ES | Alvaro | Male |
| es-MX | Jorge | Male |
| fr-CA | Jean | Male |
| fr-FR | Henri | Male |
| it-IT | Diego | Male |
| pt-BR | Antonio | Male |

Microsoft offers the best-in-class AI voice generator with Azure Cognitive Services. Quickly add read-aloud functionality for a more accessible app design or give a voice to chatbots to provide a richer conversational experience to your users with over 400 highly natural voices available across more than 140 languages and locales. Or easily create a brand voice for your business with the Custom Neural Voice capability.

For more information:

- Visit our voice gallery

- Read our documentation

- Check out our quickstarts

- Check out the code of conduct for integrating Neural TTS into your apps