This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

This blog post will provide an overview of the Synapse security environment focused on Dedicated SQL Pool, Serverless SQL Pool, and Spark.

Security has many layers and frequently it will determine how you build your process. I start this post by reviewing several important security considerations which you can later apply to your Synapse environment.

Security considerations

1) Least Privilege

This security principle of least privilege asserts that users and applications should be granted access only to the data and operations they require to perform their jobs. In other words: Prevent overprivileged applications by revoking unused and reducible permissions.

2) Environments

A multi-environment approach lets you build, test, and release code with greater speed and frequency to make your deployment as straightforward as possible. A common multi-environment architecture includes four tiers:

- Development

- Test

- Staging

- Production

With this architecture, your product transitions in order from Development (the environment where you develop changes to the software) through Production (the environment your users directly interact with). You may also want to consider a User Acceptance Test (UAT) environment to validate end-to-end business flow.

| Environment | Description |

| Development | Your development environment (dev) is where changes to software are developed. |

| Test | Your test environment allows either human testers or automated tests to try out new and updated code. Developers must accept new code and configurations through unit testing in your development environment before allowing those items to enter one or more test environments. |

| Staging | Staging is where you do final testing immediately prior to deploying to production. Each staging environment should mirror an actual production environment as accurately as possible. |

| User Acceptance Testing | User Acceptance Testing (UAT) allows your end-users or clients to perform tests to verify/accept the software system before a software application can move to your production environment. |

| Production | Your production environment (production), sometimes called live, is the environment your users directly interact with. |

Check out Environments - Cloud Adoption Framework for more information.

Firstly, secure a project using the least privileged user principle across the environment. The secured environment should be organized in a way that mitigates data leaking and accidental/intentional errors. Building different environments: Dev, Test, UAT, and Prod allows you to narrow the permissions required according to the current state phase of the project. For example, a Developer should be able to change the code in a Dev environment, but not in a UAT environment.

Another way to organize your environment also could be adding users to different groups. Example, Dev users would exist in the Dev group, Test users in a Test group, etc. You could also enhance the security by enabling the MFA for those users to authenticate on the workspace. I will provide more details explaining this in the next security consideration.

3) AAD - Azure Active Directory

AAD Security Groups

Azure Active Directory (Azure AD) provides several ways to manage access to resources, applications, and tasks. With Azure AD groups, you can grant access and permissions to a group of users instead of an individual user. You can create an AAD security group and add it to your Synapse Workspace, managing the permission according to the role the people represent inside of the project.

MFA

Azure AD Multi-Factor Authentication works by requiring two or more of the following authentication methods:

Something you know, typically a password.

Something you have, such as a trusted device that's not easily duplicated, like a phone or hardware key.

Something you are - biometrics like a fingerprint or face scan.

When users register themselves for Azure AD Multi-Factor Authentication, they can also register for self-service password reset in one step. Administrators can choose forms of secondary authentication and configure challenges for MFA based on configuration decisions.

Check out the following resources for more information:

- Learn about groups and group membership - Azure Active Directory

- Increase application security with the principle of least privilege

- Secure access control using groups in Azure AD

- MFA

- Using MFA

In summary, we have the least privileged user applied to AAD security groups across different environments during the project development cycle. These are generic security considerations applicable for most projects. Another security point you must be careful of while working on a project is SQL injection.

4) SQL injection

SQL injection is a type of security vulnerability that arises when an unauthorized user is able to access and manipulate the SQL code, and execute directly on the database. This could be done on any SQL, through pipelines or Spark code for example. As a generic guideline:

- Try to use prepared statements

- Validate the inputs

- Enforce least privilege user

- Test and more testing! To explore the different scenarios to mitigate the risk

SQL injection is a type of security vulnerability that could impact Spark, ADF, or SQL runtimes (Dedicated or Serverless) itself. Preventing SQL injection requires proper input validation and sanitization of user input, as well as using secure coding practices like parameterized queries and prepared statements.

Please take note of these security considerations that could be applicable to projects not just related to Synapse. Now let's dig into Synapse.

Synapse

Synapse Workspace

Once you are about to deploy your environment you need to make a decision that can't be undone, unless you recreate the workspace. The key decision is determining if you will be using Manage VNET, Not Manage VNET or DEP.

Manage VNET means the virtual network will be managed by Azure it also ensures that your workspace has a network isolated from other workspaces. Data integration and Spark resources are deployed inside of it and for Serverless SQL Pool and Dedicated SQL Pool, private links are automatically created. Access using the public internet can be enabled and disabled and if you are using Apache Spark inside of the workspace, it means this can be connected to a storage that is behind a firewall through the private endpoints.

Not Managed VNET means you have to manage the virtual network created for your environment. The access still can be done through the public internet, but there is no option to enable and disable it exist in managed VNETs. If you are using Apache Spark inside of the workspace, it means this can connect only to the storage that is not behind the firewall but open to the public internet. If you try to connect to the storage behind the firewall, you will receive the error 403.

DEP is data exfiltration protection, which guards against malicious users accessing your Azure resources and exfiltrating sensitive data to locations outside of your organization’s scope. In workspaces with data exfiltration protection, resources within the managed virtual network always communicate over managed private endpoints and the Synapse SQL resources can only connect to authorized Azure resources (targets of approved managed private endpoint connections from the workspace). There are some limitations, please review the limitations before using this configuration.

To learn more, check out the following:

- Managed virtual network

- What is a private endpoint? - Azure Private Link

- Connect to a secure storage account from your Azure Synapse workspace

- How Data Exfiltration Protection (DEP) impacts Azure Synapse Analytics Pipelines

- Synapse Connectivity Series Part #1 - Inbound SQL DW connections on Public Endpoints

- Synapse Connectivity Series Part #2

- Connect to workspace resources in Azure Synapse Analytics Studio from a restricted network

Workspace encryption

Workspaces can be configured to enable double encryption with a customer-managed key at the time of workspace creation. Enable double encryption using a customer-managed key on the "Security" tab when creating your new workspace. Check out Azure Synapse Analytics encryption for more information.

Synapse Workspace permissions

Azure Synapse Workspace is integrated with Azure role-based access control (Azure RBAC) to manage its resources. Azure RBAC allows you to manage Azure resource access through role assignments. You can assign these roles to users, groups service principals, and managed identities as you can see in Fig 1.

Fig 1.

Access control on Synapse Studio

You can open Synapse Studio and view details of the workspace and list any of its Azure resources such as SQL pools, Spark pools, or Integration runtimes. You will see if you've been assigned any Synapse RBAC role or have the Azure Owner, Contributor, or Reader role on the workspace. Access Control can be accessed from Synapse Studio as you can see on Fig 2.

Recommendation: You can create a basic group and add your members at the same time using the Azure Active Directory (Azure AD) portal and add permissions as applied, you can use the following step outlined at Access control in Synapse workspace how to.

Fig 2.

To learn more about the Permissions on the Access Control menu, check out the following docs:

- Understand the roles required to perform common tasks in Azure Synapse

- How to review Azure Synapse RBAC role assignments in Synapse Studio

- Access control in Synapse workspace how to

- How to manage groups - Azure Active Directory

Synapse Dedicated SQL Pool

If you have a Synapse Dedicated SQL Pool, be aware that the connection uses proxy by default so if there is a firewall between your environment and the cloud you may need to allow the IPs to the public gateway to pass through. Check out Synapse Connectivity Series Part #1 - Inbound SQL DW connections on Public Endpoints blog for more details.

I recommend enabling Defender for the cloud. This will monitor potential SQL injection attacks, anomalous database access, and query patterns (like an abnormally high number of failed sign-in attempts with different credentials - a brute force attempt) or suspicious database activity. To learn more, check out SQL Advanced Threat Protection.

Synapse Dedicated Pool also supports - Transparent Data Encryption (TDE), which helps protect against the threat of malicious activity by encrypting and decrypting your data at rest. When you encrypt your database, associated backups and transaction log files are encrypted without requiring any changes to your applications. To learn more, check out Transparent data encryption (TDE) - SQL Server.

Dedicated SQL Pool - Data

Dedicate SQL Pool supports AAD or SQL user authentication. You can create a user inside of the database and give permissions to the schemas and objects according to your requirements. However, from a security point of view be careful with ownership chaining.

Ownership chaining means a user with ALTER permission on a schema can use ownership chaining to access securable in other schemas, including securable to which that user is explicitly denied access. This is because ownership chaining bypasses permissions checks on referenced objects when they are owned by the principal that owns the objects that refer to them. Check out this blog to learn about Inconsistent permissions or ownership chaining.

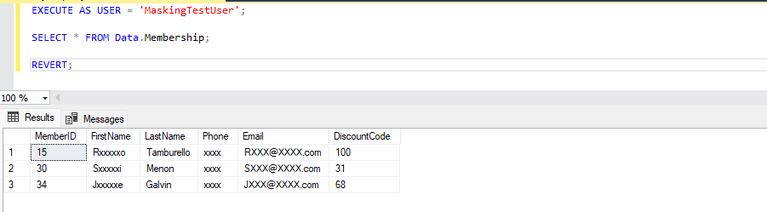

Data Masking - Dynamic data masking helps prevent unauthorized access to sensitive data by enabling customers to designate how much of the sensitive data to reveal with minimal impact on the application layer. This only will be possible if the unmask permission is not given to the user. It means an administrator will never be able to see the data as masked. Check out Dynamic Data Masking - SQL Server for more details.

CREATE TABLE [data].[Membership]

(

[MemberID] [int] NOT NULL,

[FirstName] [varchar](100) MASKED WITH (FUNCTION = 'partial(1, "xxxxx", 1)') NULL,

[LastName] [varchar](100) NOT NULL,

[Phone] [varchar](12) NULL MASKED WITH (FUNCTION = 'default()') NULL,

[Email] [varchar](100) MASKED WITH (FUNCTION = 'email()') NOT NULL,,

[DiscountCode] [smallint] MASKED WITH (FUNCTION = 'random(1, 100)') NULL

)

WITH

(

DISTRIBUTION = ROUND_ROBIN,

CLUSTERED COLUMNSTORE INDEX

)

GO

-- inserting sample data

INSERT INTO Data.Membership (FirstName, LastName, Phone, Email, DiscountCode)

VALUES

('Shakti', 'Menon', '555.123.4570', 'SMenon@contoso.net', 50),

('Zheng', 'Mu', '555.123.4569', 'ZMu@contoso.net', 40)

CREATE USER MaskingTestUser WITHOUT LOGIN;

GRANT SELECT ON SCHEMA::Data TO MaskingTestUser;

-- impersonate for testing:

--test on SSMS

EXECUTE AS USER = 'MaskingTestUser';

SELECT * FROM Data.Membership;

REVERT;

Results in Fig 3:

Fig 3.

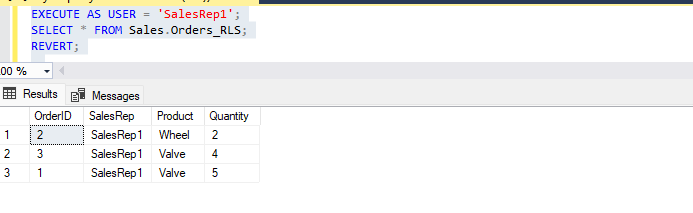

Row-level security allows security administrators to establish and control fine-grained access to specific table rows based on a predicate. Check out Row-Level Security - SQL Server for more information.

CREATE SCHEMA Sales

GO

CREATE TABLE [Sales].[Orders]

(

[OrderID] [int] NULL,

[SalesRep] [nvarchar](50) NULL,

[Product] [nvarchar](50) NULL,

[Quantity] [smallint] NULL

)

WITH

(

DISTRIBUTION = ROUND_ROBIN,

CLUSTERED COLUMNSTORE INDEX

)

GO

INSERT INTO Sales.Orders VALUES (1, 'SalesRep1', 'Valve', 5);

INSERT INTO Sales.Orders VALUES (2, 'SalesRep1', 'Wheel', 2);

INSERT INTO Sales.Orders VALUES (3, 'SalesRep1', 'Valve', 4);

INSERT INTO Sales.Orders VALUES (4, 'SalesRep2', 'Bracket', 2);

INSERT INTO Sales.Orders VALUES (5, 'SalesRep2', 'Wheel', 5);

INSERT INTO Sales.Orders VALUES (6, 'SalesRep2', 'Seat', 5);

Go

CREATE USER Manager WITHOUT LOGIN;

CREATE USER SalesRep1 WITHOUT LOGIN;

CREATE USER SalesRep2 WITHOUT LOGIN;

GO

GRANT SELECT ON Sales.Orders TO Manager;

GRANT SELECT ON Sales.Orders TO SalesRep1;

GRANT SELECT ON Sales.Orders TO SalesRep2;

GO

CREATE SCHEMA Security;

GO

CREATE FUNCTION Security.tvf_securitypredicate(@SalesRep AS nvarchar(50))

RETURNS TABLE

WITH SCHEMABINDING

AS

RETURN SELECT 1 AS tvf_securitypredicate_result

WHERE @SalesRep = USER_NAME() OR USER_NAME() = 'Manager';

GO

CREATE SECURITY POLICY SalesFilter

ADD FILTER PREDICATE Security.tvf_securitypredicate(SalesRep)

ON Sales.Orders

WITH (STATE = ON);

GO

GRANT SELECT ON Security.tvf_securitypredicate TO Manager;

GRANT SELECT ON Security.tvf_securitypredicate TO SalesRep1;

GRANT SELECT ON Security.tvf_securitypredicate TO SalesRep2;

EXECUTE AS USER = 'SalesRep1';

SELECT * FROM Sales.Orders;

REVERT;

EXECUTE AS USER = 'SalesRep2';

SELECT * FROM Sales.Orders;

REVERT;

EXECUTE AS USER = 'Manager';

SELECT * FROM Sales.Orders;

REVERT;

Results on Fig 4: The predicate here is the SQL user, as you can see on the code that regulates the row level security. In the following example, the user is SalesRep1. SalesRep1 will only be able to query the data that belongs to SalesRep1, if the user is SalesRep2 only the rows that belong to SalesRep2 is visible. Check out Row Level Security for more information.

Fig 4.

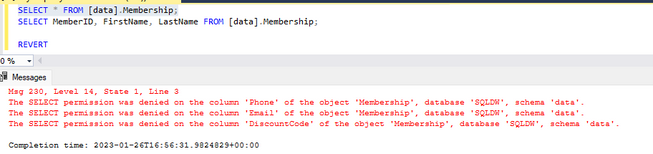

Column-level security allows security administrators to set permissions that limit who can access sensitive columns in tables. The permissions is set at the database level and can be implemented without the need to change the design of the data model or application tier. Check out Column-level security for dedicated SQL pool for more information.

CREATE TABLE Membership

(MemberID int IDENTITY,

FirstName varchar(100) NULL,

SSN char(9) NOT NULL,

LastName varchar(100) NOT NULL,

Phone varchar(12) NULL,

Email varchar(100) NULL

)

WITH

(

DISTRIBUTION = ROUND_ROBIN,

CLUSTERED COLUMNSTORE INDEX

)

GO

GRANT SELECT ON Membership(MemberID, FirstName, LastName, Phone, Email) TO TestUser;

EXECUTE AS USER = 'TestUser';

GO

--Queries executed as TestU will fail if they include columns whis user has no access

SELECT * FROM Membership;

SELECT MemberID, FirstName, LastName FROM [data].Membership;

REVERT

Results: It works when querying the columns to which the user has access. If the user tries to query the columns they don't have access to, the results will show permission denied. The example, using a select *, demonstrates the denied permission results in Fig 5.

When the user has no access - example:

Fig 5.

Synapse Serverless SQL Pool

A Serverless SQL pool enables you to centrally manage the identities of database users and other Microsoft services with Azure Active Directory integration. Azure Active Directory (Azure AD) supports multi-factor authentication (MFA).

Jovan Popovic wrote this very nice blog post about how to customize row-level security on Serverless SQL Pool. Check out How to implement row-level security in serverless SQL pools for more details.

Let's review two approaches using SQL user and MSI to configure and restrict permission on your Serverless SQL Pool.

MSI - Managed Identity

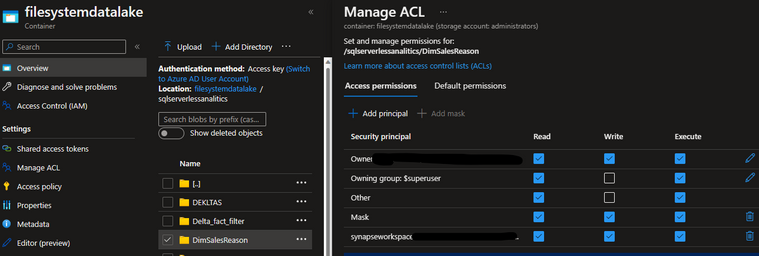

Scenario: Suppose you want to remove all the user access to the storage and enable only access through MSI to enhance the security of the queries running on Serverless against that Storage Account. You also want the MSI access given folder to folder, as different Synapse workspaces would have different data access through the Storage Account folders.

In that scenario, instead of RBACS, you can use ACL permissions. As for Serverless SQL Pool, you can configure Scope Credential by pointing a query to a Data Source as follows. Check out Quickstart: Use serverless SQL pool - Azure Synapse Analytics for more information.

CREATE MASTER KEY ENCRYPTION BY PASSWORD= '...';

-- Create a database scoped credential.

CREATE DATABASE SCOPED CREDENTIAL Nameapp WITH IDENTITY = 'Managed Identity'

CREATE EXTERNAL DATA SOURCE [LLive_sqlserverlessanalitics]

WITH (LOCATION = N'https://STORAGE.dfs.core.windows.net/Container/Folder/'

, CREDENTIAL = [Nameapp]

)

GO

---****Remove storage permissions, leaving only the owner which is inherited and test.---****

SELECT *

FROM OPENROWSET(

BULK 'Folder/',

DATA_SOURCE = 'LLive_sqlserverlessanalitics',

FORMAT = 'Delta'

) as X

The Database Scope credential is using the system-managed identity permissions associated with the workspace to connect to the Storage. This means, when configuring the storage, the permissions given to my workspace would be the ones Serverless SQL Pool will use to validate if it is possible to query. That Scope is associated with a data source which refers to the storage path that later will be used on the Openrowset query to reach the file and retrieve the data.

For the Storage ACLs, you can configure it with the details in Fig 6. ACL should be enabled top -> bottom and give the permissions through containers.

Fig 6.

If certain folders should not be accessed by the workspace. You need to explicitly add this to the Storage as depicted in Fig 7.

Fig 7.

My workspace now has permissions on the folder DimSalesReason, but it does not have the permission on the folder DimSalesReason_NONPart.

SQL user

You can create a SQL user on Serverless SQL Pool however, for this user to connect to the Storage Account you will need to give permissions to the Scoped Credentials using something like MSI to connect to the Storage.

Jovan Popovic blog Securing access to ADLS files using Synapse SQL permission model provides a great end to end example.

This scenario example has a testuser with permission on the Database Scope Credentials called NameApp and the Data source LLive_sqlserverlessanalitics. My SQL user needs those permissions to be able to query the Storage Account as I can't add a SQL user to a Storage Account.

Therefore, under the managed identity permission of the scoped credentials, the SQL testuser will be able to query the Storage Account using the Data source LLive_sqlserverlessanalitics.

--master

CREATE LOGIN testUser

WITH PASSWORD = 'xxxx'

GO

--YOURDATABASE

-- Creates a database user for the login created above.

CREATE USER testUser FOR LOGIN testUser;

GO

-- Create a database scoped credential.

--CREATE DATABASE SCOPED CREDENTIAL Nameapp WITH IDENTITY = 'Managed Identity'

--CREATE EXTERNAL DATA SOURCE [LLive_sqlserverlessanalitics]

--WITH (LOCATION = N'https://Storage.dfs.core.windows.net/Container/Folder/'

-- , CREDENTIAL = [Nameapp]

--)

--GO

---Permissions to the SQL User

GRANT CONNECT TO testUser

GRANT REFERENCES ON DATABASE SCOPED CREDENTIAL::LLive_sqlserverlessanalitics TO testUser;

GRANT REFERENCES ON DATABASE SCOPED CREDENTIAL::Nameapp TO testUser;

--Creating an external table

CREATE EXTERNAL TABLE LLive_CETAS_DimSalesReason

WITH (

LOCATION = 'DimSalesReason_Delta_LLive_CETAS/',

DATA_SOURCE = LLive_sqlserverlessanalitics,

FILE_FORMAT = Parquet_file

)

AS

SELECT *

FROM OPENROWSET(

BULK 'DimSalesReason_Delta/',

DATA_SOURCE = 'LLive_sqlserverlessanalitics',

FORMAT = 'Delta'

) as X

--Granting permission to SQl user use that external table.

GRANT SELECT ON OBJECT::LLive_CETAS_DimSalesReason TO testUser;

---open on the SSMS with the User and permission

SELECT * FROM LLive_CETAS_DimSalesReason

Synapse Spark

Spark exists inside of Synapse and can only be accessed by the users that have permission for that configuration on the Synapse Studio. Spark pools operate as a job cluster. It means that each user gets their own Spark cluster when interacting with the workspace.

Accessing data from external sources is a common pattern. Unless the external data source allows anonymous access, chances are you need to secure your connection with a credential, secret, or connection string.

Synapse uses Azure Active Directory (Azure AD) passthrough by default for authentication between resources on Spark. The TokenLibrary simplifies the process of retrieving SAS tokens, Azure AD tokens, connection strings, and secrets stored in a linked service or from an Azure Key Vault.

Synapse allows users to set the linked service for a particular storage account. This makes it possible to read/write data from multiple storage accounts in a single spark application/query.

Once we set spark.storage.synapse.source_full_storage_account_name}.linkedServiceName for each storage account that will be used, Synapse figures out which linked service to use for a particular read/write operation. Check out Using the workspace MSI to authenticate a Synapse notebook when accessing an Azure Storage account for more information.

If you do not want to give the user access to your Storage Account, you can now give permission to the managed identity and reach the data of the Storage Account through the linked service configured on your Synapse Studio. Use this linked service reference inside of the Spark code as the code example. For more information on Linked services, check out: Linked services - Azure Data Factory & Azure Synapse.

Pyspark code

%%pyspark

# Python code

source_full_storage_account_name = "teststorage.dfs.core.windows.net"

spark.conf.set(f"spark.storage.synapse.{source_full_storage_account_name}.linkedServiceName", "<lINKED SERVICE NAME>")

spark.conf.set(f"fs.azure.account.auth.type.{source_full_storage_account_name}", "SAS")

spark.conf.set(f"fs.azure.sas.token.provider.type.{source_full_storage_account_name}", "com.microsoft.azure.synapse.tokenlibrary.LinkedServiceBasedSASProvider")

df = spark.read.csv('abfss://<CONTAINER>@<ACCOUNT>.dfs.core.windows.net/<DIRECTORY PATH>')

df.show()

In summary, I have organized some important points in this blog to start a discussion on how to secure your data warehouse environment using Synapse. I started with the least privilege user principle which could be applied across different environments (Dev, UAT, Prod), using AAD security groups and managed identities. We then explored options you have inside the workspace to make it more secure like Encryption, Defender for the Cloud, Data masking. And lastly, we explained how to organize the permissions on the Storage Account using ACL and Serverless SQL Pool which also can be applied using Spark with MSI.

Please review the key points before starting your project and apply accordingly as required.

That's it!

Liliam, UK