This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

This post is co-authored by @adieldar (Principal Data Scientist, Microsoft)

In the world of AI & data analytics, vector databases are emerging as a powerful tool for managing complex and high-dimensional data.

In this article, we will explore the concept of vector databases, why they are needed in data analytics, and how Azure Data Explorer (ADX), also known as Kusto, can be used as a vector database.

What is a Vector Database?

Vector databases store and manage data in the form of vectors, which are numerical arrays of data points. They allow sets of vectors to be manipulated and analyzed at scale using vector algebra and other advanced mathematical techniques.

The use of vectors allows for more complex queries and analyses, as vectors can be compared and analyzed using advanced techniques such as vector similarity search, quantization and clustering.

Need for Vector Databases

Traditional databases are not well suited to handling high-dimensional data, which is becoming increasingly common in data analytics. In contrast, vector databases are designed to handle unstructured data such as text, images, and audio by representing it as high-dimensional vectors.

This makes vector databases particularly useful for tasks such as machine learning, natural language processing, and image recognition, where the goal is to identify patterns or similarities in large datasets.

Vector Similarity Search

Vector similarity is a measure of how similar (or different) two vectors are. Vector similarity search is a technique for finding the vectors in a dataset that are most similar to a given query vector.

In vector similarity search, vectors are compared using a distance or similarity metric, such as Euclidean distance or cosine similarity. The closer two vectors are under that metric, the more similar they are.
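As a minimal illustration in plain Python (not ADX code), the sketch below computes both metrics. Cosine similarity is cos(a, b) = a·b / (‖a‖‖b‖); for unit-length vectors, the squared Euclidean distance equals 2 − 2·cos(a, b), so the two metrics rank neighbors identically. The vectors are hypothetical toy values, not real embeddings.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    """Euclidean (L2) length of a vector."""
    return math.sqrt(dot(a, a))


def cosine_similarity(a, b):
    """cos(a, b) = a.b / (|a| |b|); 1.0 means the vectors point the same way."""
    return dot(a, b) / (norm(a) * norm(b))


def euclidean_distance(a, b):
    """Straight-line distance between two points."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def normalize(a):
    """Scale a vector to unit length."""
    n = norm(a)
    return [x / n for x in a]


# Hypothetical toy vectors (not real embeddings).
query = normalize([1.0, 2.0, 0.5])
doc_a = normalize([0.9, 2.1, 0.4])   # points in nearly the same direction as query
doc_b = normalize([-1.0, 0.2, 3.0])  # points somewhere else

for name, doc in [("doc_a", doc_a), ("doc_b", doc_b)]:
    cos = cosine_similarity(query, doc)
    dist = euclidean_distance(query, doc)
    # For unit vectors, dist**2 == 2 - 2*cos (up to floating-point error).
    print(name, round(cos, 4), round(dist, 4), math.isclose(dist ** 2, 2 - 2 * cos))
```

Higher cosine similarity and lower Euclidean distance both identify doc_a as the closer match.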

Vector embeddings

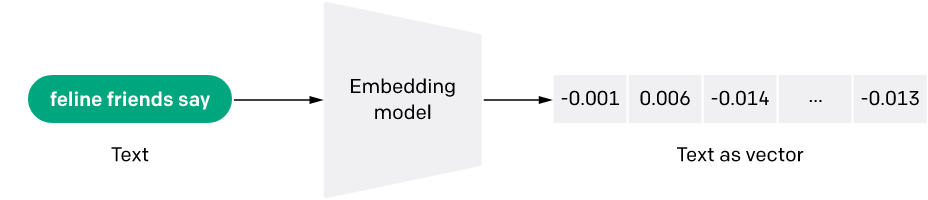

Embeddings are a common way of representing data in a vector format for use in vector databases. An embedding is a mathematical representation of a piece of data, such as a word, a text document, or an image, that is designed to capture its semantic meaning.

Embeddings are created using algorithms that analyze the data and generate a set of numerical values that represent its key features. For example, an embedding for a word might represent its meaning, its context, and its relationship to other words.

Let’s take an example.

Below, two phrases are represented as vectors after being embedded with a model.

(Figure: Phrase 1 and Phrase 2 represented as embedding vectors. Image credits – OpenAI)

Embeddings that are numerically similar tend to be semantically similar. For example, as the following chart shows, the embedding vector of “canine companions say” is more similar to the embedding vector of “woof” than to that of “meow.”

(Image credits – OpenAI)

Creating embeddings is straightforward. They can be created with standard Python packages (e.g., spaCy, sent2vec, Gensim), but Large Language Models (LLMs) generally produce the highest-quality embeddings for semantic text search. Thanks to OpenAI and other LLM providers, these models are now easy to use: you send your text to an embedding model in Azure OpenAI, and it returns a vector representation that can be stored for analysis.

Azure Data Explorer as a Vector Database

At the core of Vector Similarity Search is the ability to store, index, and query vector data.

ADX is a cloud-based data analytics service that enables users to perform advanced analytics on large datasets in real-time. It is particularly well-suited for handling large volumes of data, making it an excellent choice for storing and searching vectors.

ADX supports a special data type called dynamic, which can store unstructured data such as arrays and property bags. The dynamic data type is well suited to storing vector values. You can further augment each vector by storing metadata about the original object in separate columns of the same table.
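To make that layout concrete, the sketch below builds one JSON record per page: the vector is a JSON array (which can be stored in a dynamic column) and the metadata are ordinary string fields. The column names doc_title, doc_url and embedding_title come from the query used later in this post; the table schema in the comment, the JSON-lines ingestion format, the placeholder URL, and the vector values are all hypothetical.

```python
import json

# Hypothetical target table (an assumption, adjust to your own schema):
# .create table WikipediaEmbeddings (doc_title: string, doc_url: string, embedding_title: dynamic)


def to_json_line(title, url, embedding):
    """Serialize one page as a single JSON-lines record.

    The embedding is emitted as a JSON array, suitable for a dynamic column;
    the metadata stays in regular string fields.
    """
    record = {
        "doc_title": title,
        "doc_url": url,
        "embedding_title": [float(x) for x in embedding],
    }
    return json.dumps(record)


# Hypothetical example with a tiny made-up vector
# (real text-embedding-ada-002 vectors have 1,536 dimensions).
line = to_json_line("Worship", "https://example.com/wiki/Worship", [0.12, -0.03, 0.98])
print(line)
```

Keeping the vector and its metadata in the same row means a single query can both score the vectors and return readable results.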

Furthermore, we have added a new user-defined function series_cosine_similarity_fl to perform vector similarity searches on top of the vectors stored in ADX.
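The function computes the cosine similarity of two numeric arrays. As we read its documentation, its two optional trailing arguments are precomputed lengths (L2 norms) of the vectors. The query in this post passes 1, 1, presumably because OpenAI embeddings are normalized to length 1, which lets the function skip computing the norms. The Python sketch below mirrors that idea for illustration only. It is not the KQL implementation, and the name cosine_similarity_with_sizes is hypothetical.

```python
import math


def cosine_similarity_with_sizes(vec1, vec2, vec1_size=None, vec2_size=None):
    """Cosine similarity of two equal-length numeric arrays.

    vec1_size / vec2_size are optional precomputed L2 lengths. Passing 1 for
    unit-normalized embeddings skips the norm computation, analogous to the
    1, 1 arguments in the KQL query.
    """
    if len(vec1) != len(vec2):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(vec1, vec2))
    if vec1_size is None:
        vec1_size = math.sqrt(sum(x * x for x in vec1))
    if vec2_size is None:
        vec2_size = math.sqrt(sum(y * y for y in vec2))
    return dot / (vec1_size * vec2_size)


a = [0.6, 0.8]  # unit length: 0.36 + 0.64 = 1
b = [0.8, 0.6]  # unit length
print(cosine_similarity_with_sizes(a, b))        # norms computed from the data
print(cosine_similarity_with_sizes(a, b, 1, 1))  # norms supplied, same result
```

Supplying sizes that do not match the real vector lengths silently produces wrong scores, so passing 1, 1 is only safe for vectors known to be unit-normalized.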

ADX as a vector database

Demo scenario:

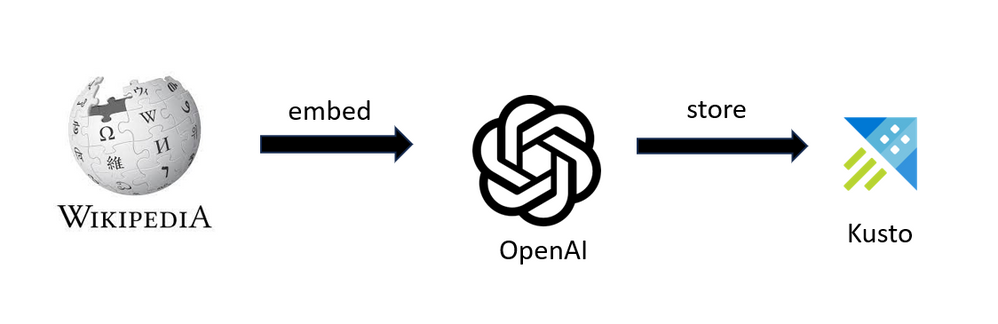

Let’s say you want to run semantic searches on top of Wikipedia pages.

We will generate vectors for tens of thousands of Wikipedia pages by embedding them with an OpenAI model, and store the vectors in ADX along with some metadata about each page.

Demo scenario

Now we want to search the wiki pages with natural-language queries to find the most relevant ones. We can achieve that with the following steps:

Semantic search flow

- Create an embedding for the natural-language query using an OpenAI model. Make sure you use the same model that was used to embed the original wiki pages; we use text-embedding-ada-002.

- OpenAI returns the embedding vector for the search query.

- Use the series_cosine_similarity_fl KQL function to calculate the similarity between the query's embedding vector and that of each wiki page.

- Select the top n rows by similarity to get the wiki pages most relevant to your search query.
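The four steps above can be sketched in Python as follows. To keep the sketch self-contained and runnable offline, embed_query is a hypothetical stand-in that looks up a canned vector instead of calling the OpenAI API, and the page vectors are tiny made-up values. In the actual demo, both sides come from text-embedding-ada-002 and the scoring runs inside ADX via series_cosine_similarity_fl.

```python
import heapq
import math

# Hypothetical, made-up "page embeddings" keyed by page title (not real data).
PAGES = {
    "Chapel": [0.8, 0.6, 0.0],
    "Cathedral": [0.6, 0.8, 0.0],
    "Disaster": [0.0, 0.6, 0.8],
    "Accident": [0.0, 0.0, 1.0],
}

# Hypothetical stand-in for steps 1-2: a real system would send the query text
# to the same embedding model that embedded the pages and receive a vector.
CANNED_QUERY_VECTORS = {
    "places where we worship": [0.6, 0.8, 0.0],
}


def embed_query(text):
    return CANNED_QUERY_VECTORS[text]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search(text, n=2):
    """Return the n (title, similarity) pairs most similar to the query."""
    query_vec = embed_query(text)  # steps 1-2: embed the query
    scored = ((title, cosine(query_vec, vec)) for title, vec in PAGES.items())  # step 3: score every page
    return heapq.nlargest(n, scored, key=lambda pair: pair[1])  # step 4: keep the top n


print(search("places where we worship"))
```

This is an exhaustive scan: every stored vector is scored against the query, which is what the KQL query below does as well.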

Let’s run some queries:

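```kusto
// searched_text_embedding: the query's embedding vector (a dynamic array) from the same OpenAI model
// series_cosine_similarity_fl: user-defined function that must be available in the database (or defined in the query)
// 1, 1: precomputed lengths of the two vectors; OpenAI embeddings are normalized to length 1
WikipediaEmbeddings
| extend similarity = series_cosine_similarity_fl(searched_text_embedding, embedding_title, 1, 1)
| top 10 by similarity desc
| project doc_title, doc_url, similarity
```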

This query calculates the similarity score for hundreds of thousands of vectors in the table within seconds and returns the top 10 results.

Search query 1: places where we worship

Result: a list of places of worship, found based on the semantic meaning of the query.

| doc_title | doc_url | similarity |
|---|---|---|
| Worship | | 0.88637075345136851 |
| Service of worship | | 0.88088563615991156 |
| Christian worship | | 0.87145606116147623 |
| Shrine | | 0.86122073710549862 |
| Church (building) | | 0.856143317706441 |
| Congregation | | 0.8499157231442287 |
| Church music | | 0.84391063581165737 |
| Chapel | | 0.84180461792178318 |
| Cathedral | | 0.84131817383277074 |
| Altar | | 0.84050486977489425 |

Search query 2: unfortunate events in history

Result: relevant wiki pages about unfortunate events.

| doc_title | doc_url | similarity |
|---|---|---|
| Tragedy | | 0.85184752964016219 |
| The Holocaust | | 0.84722225728777545 |
| List of historical plagues | https://simple.wikipedia.org/wiki/List%20of%20historical%20plagues | 0.84441133451457429 |
| List of disasters | | 0.84306311742813556 |
| Disaster | | 0.84033393031653025 |
| List of terrorist incidents | https://simple.wikipedia.org/wiki/List%20of%20terrorist%20incidents | 0.83616158712469169 |
| A Series of Unfortunate Events | https://simple.wikipedia.org/wiki/A%20Series%20of%20Unfortunate%20Events | 0.83517215368462794 |
| History of the world | https://simple.wikipedia.org/wiki/History%20of%20the%20world | 0.83024306935594427 |
| Accident | | 0.82689840352112709 |
| History | | 0.82464546620331225 |

How can you get started?

If you’d like to try this demo, head to the azure_kusto_vector GitHub repository and follow the instructions.

The notebook in the repo lets you:

- Download precomputed embeddings created with the OpenAI API.

- Store the embeddings in ADX.

- Convert a raw text query to an embedding with the OpenAI API.

- Use ADX to perform a cosine similarity search over the stored embeddings.

You can start by spinning up your own free Kusto cluster in seconds: https://aka.ms/kustofree

We look forward to your feedback and all the exciting things you build with vectors & ADX!