This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

OpenFOAM (Open Field Operation and Manipulation) is an open-source computational fluid dynamics (CFD) software package. It provides a comprehensive set of tools for simulating and analyzing complex fluid flow and heat transfer phenomena. It is widely used in academia and industry for a range of applications, such as aerodynamics, hydrodynamics, chemical engineering, environmental simulations, and more.

Azure offers services such as Azure Batch and Azure CycleCloud that help individuals and organizations run OpenFOAM simulations effectively and efficiently. Both services let users create and manage clusters of VMs, enabling parallel processing and scaling of OpenFOAM simulations. CycleCloud provides an experience similar to on-premises environments thanks to its support for common schedulers like OpenPBS or SLURM, while Azure Batch provides a cloud-native resource scheduler that simplifies the configuration, maintenance, and support of the required infrastructure.

This article is a step-by-step guide to a minimal Azure Batch setup for running OpenFOAM simulations. Further analysis is needed to identify the right sizing in terms of both compute and storage. A previous article, How to identify the recommended VM for your HPC workloads, could be helpful.

Step 1: Provisioning required infrastructure

To get started, create a new Azure Batch account. No pool, job, or task is required at this point. In this scenario, the pool allocation method is configured as “User Subscription” and public network access is set to “All Networks”.

Shared storage across all nodes is also required to share the input model and store the outputs. This guide uses an Azure Files NFS share. Alternatives like Azure NetApp Files or Azure Managed Lustre could also be an option, based on your scalability and performance needs.

Step 2: Customizing the virtual machine image

OpenFOAM provides pre-compiled binaries packaged for Ubuntu that can be installed through its official APT repositories. If Ubuntu is your distribution of choice, you can follow the official documentation to install it; a pool’s start task is a good place to do so. As an alternative, you can create a custom image with everything pre-configured.

This article covers the second option, using CentOS 7.9 as the base image to show the end-to-end configuration and compilation of the software from source code. To simplify the process, it relies on the available HPC images, which come with the required prerequisites already installed. The reference URN for those images is: OpenLogic:CentOS-HPC:s7_9-gen2:latest. The VM SKU used both to create the custom image and to run the simulations is an HBv3.

Start by creating a new VM. After the VM is up and running, execute the following script to download and compile the OpenFOAM source code.

The last command compiles with all cores (-j), reduced output (-s, -silent), and queuing (-q, -queue), and logs (-l, -log) the output to a file for later inspection. After the initial compilation, review the output log or re-run the last command to make sure that everything compiled without errors. The output is verbose enough that errors can be missed in a quick review of the logs.
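Because errors are easy to miss in a verbose build log, a small filter can help with the review. The following is a minimal sketch in Python, not part of the original build script. The error patterns are an assumption based on typical make and compiler output, and the log path is whatever file the -l option produced on your VM.

```python
import re
import sys
from pathlib import Path

# Heuristic patterns that usually mark a failed compile or link step.
# Assumption for illustration: this list is not exhaustive and may
# produce false positives (for example on compiler flags mentioning "error").
ERROR_PATTERN = re.compile(
    r"\berror\b|undefined reference|No such file or directory",
    re.IGNORECASE,
)


def find_build_errors(log_text):
    """Return (line_number, line) pairs for lines that look like build errors."""
    hits = []
    for number, line in enumerate(log_text.splitlines(), start=1):
        if ERROR_PATTERN.search(line):
            hits.append((number, line.strip()))
    return hits


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_build_log.py <path-to-build-log>
    text = Path(sys.argv[1]).read_text(errors="replace")
    for number, line in find_build_errors(text):
        print(f"{number}: {line}")
```

An empty result does not prove the build is complete, so re-running the compile command as described above remains the more reliable check.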

The compilation takes a while to finish. After that, you can delete the installers and any other folders not required in your scenario, and capture the image into a Shared Image Gallery.

Step 3: Batch pool configuration

Add a new pool to the Azure Batch account you created earlier. You can create the pool with the standard wizard (Add), filling in the required fields with the values from the following JSON, or you can copy and paste the JSON into the Add (JSON editor).

Make sure you customize the properties between < and >.
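A leftover placeholder is an easy mistake to make when pasting JSON into the editor. The sketch below, in Python, walks a parsed JSON document and reports every string value that still contains text between < and >. The field names in the usage example are made up for illustration and are not the pool definition from this article.

```python
import json
import re

# Matches any "<...>" placeholder left inside a string value.
PLACEHOLDER = re.compile(r"<[^<>]+>")


def find_placeholders(node, path="$"):
    """Recursively collect (json_path, value) pairs that still contain <...>."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            found.extend(find_placeholders(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            found.extend(find_placeholders(value, f"{path}[{index}]"))
    elif isinstance(node, str) and PLACEHOLDER.search(node):
        found.append((path, node))
    return found


# Hypothetical fragment, only to show the call.
example = json.loads('{"id": "pool1", "vmSize": "<vm-size>"}')
for location, value in find_placeholders(example):
    print(f"{location} still contains a placeholder: {value}")
```

The same check applies to the task JSON used later in this guide.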

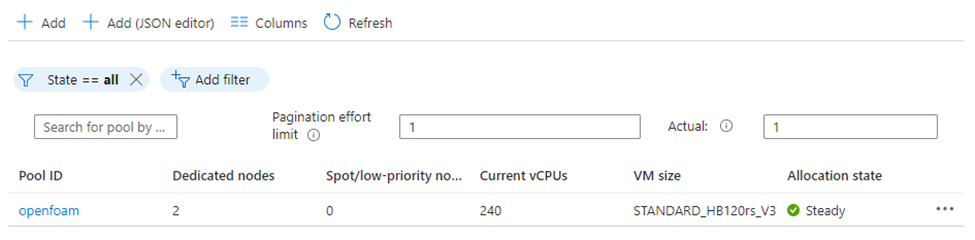

Wait till the pool is created and the nodes are available to accept new tasks. Your pool view should look similar to the following image.

Step 4: Batch job configuration

Once the pool allocation state is “Ready”, continue with the next step: create a new job. The default configuration is enough in this case. Here the job is called “flange” because it uses the flange example from the OpenFOAM tutorials.

Step 5: Batch task configuration

Once the job state changes to “Active”, it is ready to accept new tasks. You can create a new task with the standard wizard (Add), filling in the required fields with the values from the following JSON, or you can copy and paste the JSON into the Add (JSON editor).

Make sure you customize the properties between < and >.

The task command line is configured to execute a Bash script stored in the Azure Files share that Batch mounts automatically at the '$AZ_BATCH_NODE_MOUNTS_DIR/data' folder. First copy the following scripts and the flange example mentioned above into a folder called flange inside that directory.

Command Line Task Script

This script configures the environment variables and pre-processes the input files before launching the mpirun command to execute the solver in parallel across all the available nodes. In this case, that means 2 nodes with 240 cores in total.
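The number of MPI processes has to match the number of subdomains the case was decomposed into (numberOfSubdomains in system/decomposeParDict), so it is worth checking the arithmetic before launching. The Python sketch below is not the article's task script. It assumes the task runs as a Batch multi-instance task, where AZ_BATCH_HOST_LIST holds a comma-separated list of node addresses, and it takes 120 cores per node from the 2 nodes and 240 cores quoted above.

```python
import re


def total_ranks(host_list, cores_per_node):
    """Number of MPI ranks for a comma-separated host list."""
    hosts = [h.strip() for h in host_list.split(",") if h.strip()]
    return len(hosts) * cores_per_node


def subdomains_in(decompose_par_dict):
    """Read numberOfSubdomains from the text of a decomposeParDict file."""
    match = re.search(
        r"^\s*numberOfSubdomains\s+(\d+)\s*;", decompose_par_dict, re.MULTILINE
    )
    if match is None:
        raise ValueError("numberOfSubdomains not found")
    return int(match.group(1))


# Hypothetical values: two node addresses and a two-line dictionary excerpt.
ranks = total_ranks("10.0.0.4,10.0.0.5", cores_per_node=120)
wanted = subdomains_in("numberOfSubdomains 240;\nmethod scotch;\n")
if ranks != wanted:
    raise SystemExit(f"mpirun would start {ranks} ranks, case expects {wanted}")
```

In a real task the host list would come from os.environ and the dictionary text from the case folder on the mounted share.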

Mpirun Processing Script

This script runs the task on every available node. It configures the environment variables and folders the solver needs to access. If it is skipped and the solver is invoked directly in the mpirun command, only the primary task node has the right configuration applied, and the remaining nodes fail with file-not-found errors.

Step 6: Checking the results

The mpirun output is redirected to a file called solver.log in the directory where the model is stored, inside the Azure Files share. The first lines of the log confirm that the execution has started properly and is running on two HBv3 nodes with 240 processes.
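The same check can be automated. The sketch below, in Python, reads the process count from the banner at the top of the log. It assumes the usual OpenFOAM header line of the form "nProcs : N"; if your build prints a different banner, adjust the pattern. The sample text is hypothetical and is not output from the run described here.

```python
import re


def reported_nprocs(log_text, header_lines=40):
    """Return the process count from the log header, or None if it is absent."""
    header = "\n".join(log_text.splitlines()[:header_lines])
    match = re.search(r"^nProcs\s*:\s*(\d+)", header, re.MULTILINE)
    return int(match.group(1)) if match else None


# Hypothetical header excerpt, only to show the call.
sample_log = "Exec   : solver -parallel\nnProcs : 240\n"
if reported_nprocs(sample_log) != 240:
    raise SystemExit("solver did not start with the expected 240 processes")
```

Against the real run, the text passed in would be the contents of solver.log on the file share.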

Conclusion

By leveraging Azure Batch's scalability and flexible infrastructure, you can run OpenFOAM simulations at scale, achieving faster time-to-results and increased productivity. This guide demonstrated the process of configuring Azure Batch, customizing the CentOS 7.9 image, installing dependencies, compiling OpenFOAM, and running simulations efficiently on Azure Batch. With Azure's powerful capabilities, researchers and engineers can unleash the full potential of OpenFOAM in the cloud.