This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

When using Cognitive Services, it's important to understand that each resource comes with a pre-configured static call rate measured in transactions per second (TPS). This call rate limits the number of concurrent requests that clients can send to the backend service within a given time frame. By limiting concurrent calls per customer, the service provider can allocate resources efficiently and maintain the overall stability and reliability of the service. The limit also plays a security role: it helps the service protect customers from malicious behavior that targets their resources. Additionally, the call rate lets customers estimate their usage and manage costs by preventing traffic spikes that would result in unexpected charges.

For highly scalable systems it is important that the system scales independently within these constraints without affecting the customer experience. In this article, we'll delve deeper into what this means for the developers and also look at strategies to scale these services within these constraints to maximize the potential of Cognitive Services in large deployments.

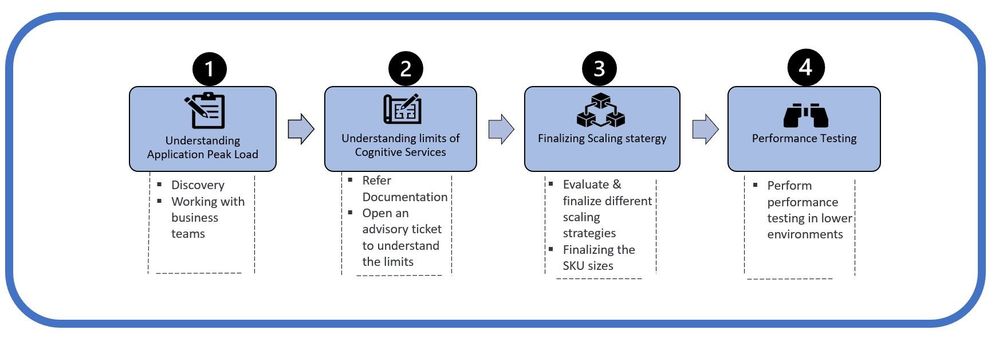

The following four-step strategy can be used to minimize throttling issues and ensure that the application scales seamlessly:

1. Understanding application peak load

To design an app effectively, architects need to work with the business teams to build a comprehensive understanding of the traffic patterns the app will encounter, both regular usage and peak load. In some cases, it helps to break the app down into modules that use different Cognitive Services and understand the usage pattern of each. This knowledge enables architects to make informed decisions about infrastructure and resource allocation, ensuring that the app can handle the expected traffic without performance degradation or downtime.

For example, for a multilingual chatbot that also supports speech, the load can be defined with a table like the following:

| Scenario | ~Estimated Traffic (%) | Service | Avg. TPS | Peak Load TPS | Expected Latency (Sec) |
|---|---|---|---|---|---|
| User enters non-English text | 20 | Translator | 60 | 300 | 3 |
| Users uses speech interface | 10 | Speech | 30 | 120 | |
| Frequently asked questions from customers | 40 | QnA Maker | 30 | 100 | |
| LUIS | 60 | Language Understanding | 25 | 200 | |
| Custom API Call | 30 | Custom Microservice | 20 | 200 | |

2. Understanding the limits for Cognitive Services

The following pages list the limits for each Cognitive Service:

- Request limits - Translator Service

- Limits - LUIS

- Limits and Quotas - Speech service

- Limits QnA Maker V1

Note – This may vary based on your configuration and knowledge base.

Some services, such as Custom Question Answering (QnA v2.0), also require provisioning Cognitive Search, so the throughput depends on the SKU selection. For example, an Azure Cognitive Search instance configured as S3 HD with 6 replicas and 1 partition can provide a throughput of up to 800-1000 queries per second.

3. Scaling strategies for Cognitive Services

The following section describes the scaling strategies for Cognitive Services.

1. New Support Request

Typically, different Cognitive Service resources have a default rate limit. If you need to increase the limit, submit a ticket by following the New Support Request link on your resource's page in the Azure portal. Remember to include a business justification in the support request.

Note - The trade-off is between scale and latency. If a latency increase is acceptable in a high-traffic situation, the rate shouldn't be pushed to the limit.

2. Cognitive Services autoscale feature

The autoscale feature automatically increases or decreases a resource's rate limit based on near-real-time resource usage metrics and backend service capacity metrics. Autoscale checks the available capacity projection to see whether the current capacity can accommodate a rate limit increase, and responds within five minutes. If the available capacity is sufficient, autoscale gradually increases the rate limit cap of your resource; otherwise, it waits five minutes and checks again. If you continue to call your resource at a rate that results in more 429 throttling responses, your TPS rate will continue to increase over time.

This feature is disabled by default for every new resource. To enable it:

Using Azure Portal :-

Using CLI :-

az resource update --namespace Microsoft.CognitiveServices --resource-type accounts --set properties.dynamicThrottlingEnabled=true --resource-group {resource-group-name} --name {resource-name}

Refer to the autoscale documentation to check which Cognitive Services support the autoscale feature.

Disadvantages

- You could end up with a significantly higher bill if an error in the client application causes it to call the service repeatedly at a high frequency, or if the service is misused.

- There is no guarantee that autoscale will always be invoked, because the ability to trigger an autoscale is contingent on available capacity. If there isn't enough capacity to accommodate an increase, a 429 error is returned and the autoscale feature re-evaluates after five minutes.

- Autoscale increases the rate limit cap of your resource gradually. If your application triggers a very high, sudden spike, you may get 429 errors for exceeding the rate limit, because the TPS rate only increases over time until it reaches the maximum rate (up to 1000 TPS) available at that time for that resource.

This strategy is advisable for medium-scale workloads with a gradual increase in traffic that can be predicted using thresholding approaches. We strongly advise testing your development and client updates against a resource that has a fixed rate limit before making use of the autoscale feature.

3. Using Azure Cognitive Services containers

Azure Cognitive Services provides several Docker containers that let you use the same APIs that are available in Azure in a containerized environment. Using these containers gives you the flexibility to bring Cognitive Services closer to your data for compliance, security, or other operational reasons. Container support is currently available only for a subset of Azure Cognitive Services; refer to the Azure Cognitive Services containers documentation for details. Containers let customers scale for high-throughput and low-latency requirements by running Cognitive Services physically close to their application logic and data. Containers don't cap transactions per second (TPS) and can be scaled both up and out to handle demand, provided you supply the necessary hardware resources.

Disadvantages

- Running containers requires hardware and infrastructure resources that you have to manage and maintain, which adds to your costs and operational overhead.

- The responsibility for maintaining and supporting the containers falls on you, and it can be challenging to keep up with version upgrades, updates, patches, and bug fixes.

- Ensuring security and compliance can be more challenging when running containers.

- Customers are charged based on consumption, so you could end up with a significantly higher bill if an error in the client application causes it to call the service repeatedly at a high frequency, or if the service is misused. This needs to be guarded against.

This strategy is advisable for large-scale workloads and is more resilient to spikes, but it is not the ultimate solution for this type of traffic. Because container support is currently available for only a subset of Azure Cognitive Services, it is important to validate availability first.

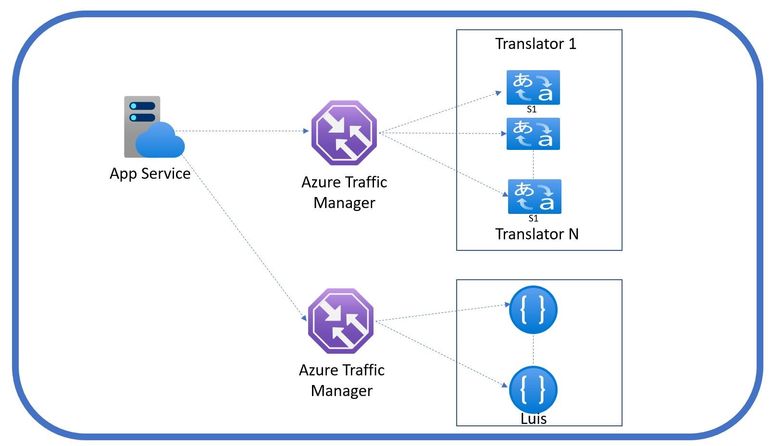

4. Use Load Balancer to scale Cognitive Service Instances based on load

In this strategy, instances are added based on the load pattern, and a load balancer (e.g., Azure Traffic Manager or Application Gateway) distributes requests across the different Cognitive Services instances.

In the above example, the S1 SKU for the Translator service has a limit of 40 million characters per hour. To get 120 TPS with 15 words per request and 8 characters per word (about 51.8 million characters per hour), two S1 instances are needed. The alternative is an S3 instance, which supports 120 million characters per hour but may not be a cost-effective solution. One instance can be removed during off-peak hours.
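The sizing arithmetic in the Translator example can be checked with a short sketch. The class and method names are hypothetical, the two quotas are the S1 and S3 figures quoted in the text, and the sketch assumes every request has the same size and ignores spaces and punctuation.

```java
// Hypothetical sizing helper; quotas are the per-hour character limits quoted in the text.
final class TranslatorSizing {
    static final long S1_CHARS_PER_HOUR = 40_000_000L;
    static final long S3_CHARS_PER_HOUR = 120_000_000L;

    /** Characters sent per hour at a sustained request rate. */
    static long charsPerHour(int tps, int wordsPerRequest, int charsPerWord) {
        return (long) tps * wordsPerRequest * charsPerWord * 3600L;
    }

    /** Smallest number of instances whose combined hourly quota covers the demand. */
    static long instancesNeeded(long charsPerHour, long quotaPerInstance) {
        return (charsPerHour + quotaPerInstance - 1) / quotaPerInstance; // ceiling division
    }

    public static void main(String[] args) {
        long demand = charsPerHour(120, 15, 8);
        System.out.println("Demand (chars/hour): " + demand);
        System.out.println("S1 instances needed: " + instancesNeeded(demand, S1_CHARS_PER_HOUR));
        System.out.println("S3 instances needed: " + instancesNeeded(demand, S3_CHARS_PER_HOUR));
    }
}
```

At 120 TPS the demand is 51.84 million characters per hour, so two S1 instances cover it, while a single S3 instance would leave more than half of its quota unused.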

The whole process of adding and removing instances and configuring Traffic Manager can be automated. The app gets an instance based on the load-balancing policy of Traffic Manager. Once the load patterns are understood, an algorithm can be created to add or remove instances based on them.
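One possible shape for such an algorithm is a per-hour plan derived from a traffic forecast. The sketch below is illustrative only: the class name, the forecast values, the per-instance rate of 60 TPS, and the 20% headroom are all assumptions, and the actual provisioning calls are left out.

```java
import java.util.Arrays;

// Hypothetical planner: turns an hourly TPS forecast into an instance count per hour.
final class InstanceSchedule {
    /**
     * For each hour, returns the number of instances needed so that the
     * forecast TPS plus a safety headroom fits under the per-instance limit.
     * At least one instance is always kept running.
     */
    static int[] plan(int[] forecastTpsByHour, int tpsPerInstance, double headroom) {
        int[] plan = new int[forecastTpsByHour.length];
        for (int hour = 0; hour < forecastTpsByHour.length; hour++) {
            double target = forecastTpsByHour[hour] * (1.0 + headroom);
            plan[hour] = Math.max(1, (int) Math.ceil(target / tpsPerInstance));
        }
        return plan;
    }

    public static void main(String[] args) {
        int[] forecast = {20, 60, 120, 60}; // assumed TPS for four consecutive hours
        System.out.println(Arrays.toString(plan(forecast, 60, 0.2)));
    }
}
```

A scheduler could compare consecutive entries of the plan and add or remove instances (and their load balancer endpoints) ahead of each hour.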

This strategy is much more reliable than the out-of-the-box autoscale, which depends on the region's capacity availability. It also helps with predictable cost management, as each deployed instance has its own rate limit. Creating additional service resources in different regions and distributing the workload among them also helps improve performance and availability. Note that multiple service resources in the same region may sometimes not improve performance, because all of them are served by the same backend cluster.

You can also implement your own traffic management (even on the client side), based on rules or on a component external to the service, to distribute the load in a meaningful manner: per user, per session, or from a round-robin list of service instances to pick from.

It is also recommended to implement retry and backoff logic in your application.
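As a minimal sketch of client-side distribution, the selector below rotates over a fixed list of endpoints and can also pin a session to one endpoint. The class name and the endpoint URLs are hypothetical placeholders, and health checks and failover are deliberately left out.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical client-side selector over several Cognitive Services resources.
final class RoundRobinEndpoints {
    private final List<String> endpoints;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinEndpoints(List<String> endpoints) {
        if (endpoints.isEmpty()) {
            throw new IllegalArgumentException("at least one endpoint is required");
        }
        this.endpoints = List.copyOf(endpoints);
    }

    /** Returns the next endpoint in rotation; safe to call from multiple threads. */
    String next() {
        int index = Math.floorMod(counter.getAndIncrement(), endpoints.size());
        return endpoints.get(index);
    }

    /** Pins a session to one endpoint so that all of its calls hit the same resource. */
    String forSession(String sessionId) {
        return endpoints.get(Math.floorMod(sessionId.hashCode(), endpoints.size()));
    }

    public static void main(String[] args) {
        RoundRobinEndpoints selector = new RoundRobinEndpoints(List.of(
                "https://resource-a.example.invalid",   // placeholder URLs
                "https://resource-b.example.invalid"));
        for (int i = 0; i < 4; i++) {
            System.out.println(selector.next());
        }
        System.out.println(selector.forSession("session-42"));
    }
}
```

`Math.floorMod` keeps the index non-negative even after the counter overflows or when a hash code is negative.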

Disadvantages

- This may need custom development for the automation that adds and removes instances, for the load-balancing entries, and for the algorithm that triggers them based on load patterns.

- Additional resources such as Traffic Manager need to be managed. Traffic Manager can also become a bottleneck if not handled well.

- This is suitable only for predictable load and may not be suitable for a sudden spike in requests.

The following table compares the above strategies under different types of load.

| Type of load | Rate increase | Autoscale | Containers (connected) | Load Balancer (Traffic Manager) |
|---|---|---|---|---|
| Gradual increase | Planned on the highest rate for a given latency limit; the rate stays consistent throughout | A suitable solution that doesn’t entail a lot of management overhead. With the right thresholding, it can handle scaling appropriately with the necessary lead and hysteresis | Allows a bigger limit than a rate increase, but at the extra cost of dedicated hardware. Suitable so long as the load is within the boundaries of Azure and issues of BCDR, reliability and security are addressed | Handles distribution across geographical locations and services; however, the limits would mostly be in Traffic Manager if the load is beyond the boundaries of the service |
| Spike increase, without notice | Would handle spikes within the limits. Provisioning beyond the regular average would be inefficient for the service backend and can be rejected | Would not be very responsive and can cause 429s unexpectedly | Would be more resilient to spikes since the limits defined can be higher, but with the extra cost of hosting, BCDR, reliability etc. | Would leverage the accumulated TPS of the resources, but Traffic Manager would be the most critical aspect |
| Pre-determined spike increase | Hard to justify and keep changing | Can be planned by provisioning autoscale policies that are pre-planned | Will be within the limits of the container | Can be provisioned for the deployment of more resources to accommodate the spike |
| Recurring increase | Within the bounds of the TPS | Efficient if the increase is gradual | Will be within the limits of the container | Can be provisioned with distribution of traffic |

Trade-offs:

- There is a trade-off between the rate limits of the service and its latency. Proper planning of traffic rates can help absorb short bursts of high traffic with minimal latency impact. However, if there are prolonged spikes or consistent increases in traffic, the system may not be able to handle the increased load, which can lead to performance issues and service disruptions.

- Retry and backoff policies are a way to handle spiky traffic, especially traffic that is neither prolonged nor planned. Embedding systematic retries helps manage cost and security without greatly affecting the service experience. Below is a Java code snippet implementing an exponential backoff strategy, in which the initial backoff time is multiplied by a power of 2 for each retry.

import java.util.Random;

public class BackoffExample {
    private static final int MAX_RETRIES = 5;
    private static final int INITIAL_BACKOFF_TIME = 1000; // in milliseconds
    private static final Random RANDOM = new Random(); // shared source of jitter

    public static void main(String[] args) {
        int retries = 0;
        while (retries <= MAX_RETRIES) {
            try {
                // Make REST API call
                String response = makeApiCall();
                System.out.println("API call succeeded with response: " + response);
                break; // Exit loop if call is successful
            } catch (Exception e) {
                System.out.println("API call failed with error: " + e.getMessage());
                if (retries == MAX_RETRIES) {
                    System.out.println("Max retries exceeded. Giving up.");
                    break;
                }
                // Double the wait on every retry: 1 s, 2 s, 4 s, 8 s, 16 s
                int backoffTime = INITIAL_BACKOFF_TIME * (1 << retries);
                System.out.println("Retrying in " + backoffTime + " milliseconds.");
                try {
                    Thread.sleep(backoffTime + RANDOM.nextInt(1000)); // Add random jitter
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    System.out.println("Thread interrupted while sleeping.");
                    break;
                }
                retries++;
            }
        }
    }

    private static String makeApiCall() throws Exception {
        // Make REST API call here
        // ...
        // Return response
        return "Sample Response";
    }
}

- Architectural considerations: The architecture can dictate how scaling should happen. Gating services that represent the entry point to all services (or an aggregation of all services) need to be considered for scaling first; with that limit established, other services that are less critical or contribute less to the load can then be adjusted accordingly.
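The exponential backoff snippet above retries on any exception. A possible refinement, sketched below, retries only on HTTP 429 and waits for the duration given in a `Retry-After` header when one is present. This is an assumption rather than documented behavior of every Cognitive Service: the sketch assumes the header, if sent, carries a whole number of seconds, and all class and method names are hypothetical.

```java
import java.util.Optional;
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical helper: decides whether and how long to wait before retrying an HTTP call.
final class ThrottleRetryPolicy {
    static final int MAX_RETRIES = 5;
    static final long INITIAL_BACKOFF_MS = 1000;
    static final long MAX_JITTER_MS = 1000;

    /** Retry only on throttling (429) and only while attempts remain. */
    static boolean shouldRetry(int statusCode, int attempt) {
        return statusCode == 429 && attempt < MAX_RETRIES;
    }

    /** Exponential delay without jitter: 1 s, 2 s, 4 s, ... */
    static long exponentialDelayMs(int attempt) {
        return INITIAL_BACKOFF_MS << attempt;
    }

    /**
     * Uses Retry-After (assumed to be whole seconds) when present and parseable;
     * otherwise falls back to exponential backoff plus random jitter.
     */
    static long delayMs(Optional<String> retryAfter, int attempt) {
        if (retryAfter.isPresent()) {
            try {
                long seconds = Long.parseLong(retryAfter.get().trim());
                if (seconds >= 0) {
                    return seconds * 1000;
                }
            } catch (NumberFormatException ignored) {
                // Not a plain number of seconds (for example an HTTP date): fall through.
            }
        }
        return exponentialDelayMs(attempt) + ThreadLocalRandom.current().nextLong(MAX_JITTER_MS);
    }

    public static void main(String[] args) {
        System.out.println(shouldRetry(429, 0));
        System.out.println(delayMs(Optional.of("3"), 0));
        System.out.println(exponentialDelayMs(2));
    }
}
```

With `java.net.http.HttpClient`, the header value can be obtained as `response.headers().firstValue("Retry-After")`, which already returns an `Optional<String>`.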

Hybrid approaches that combine the above elements can be the most effective. A load balancer, backoff, and autoscaling for the expected traffic patterns can be deployed together to make sure the service runs smoothly in different conditions. Customers should also manage the load/latency trade-off, accepting higher latency under very heavy load rather than provisioning unnecessary services that would increase development and maintenance effort.

4. Performance Testing Apps before productionizing

To ensure that cognitive services and the entire workload are appropriately sized and scaled, it's critical to conduct load testing using a tool like JMeter. Load testing enables you to simulate a high volume of traffic and requests to assess the system's ability to handle such demands. Without load testing, you may not accurately determine the capacity requirements for your workload, which could lead to performance issues, increased costs, or other problems. Therefore, it's recommended to perform load testing regularly to optimize your use of cognitive services and ensure that your workload can handle anticipated traffic.

The parameters for performance testing vary depending on the workload being tested. For example, for a Virtual Assistant, the focus would be on metrics such as response time, the length of responses in terms of word count, and the number of steps involved in resolving a user's query. Performance testing of a virtual assistant considers its two main components: the bot service and the channels. The bot service can be tested on Direct Line. Please inform and work with the Microsoft support team before testing on Direct Line, since load tests against the Direct Line service can only come from a limited set of IPs and can be restricted. An important aspect to consider is the peak-to-average ratio: planning should mostly target the average and find suitable ways of handling peaks. Adding reasonable safeguards beyond these limits is also advisable.
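To make the peak-to-average ratio concrete, the sketch below computes it for the sample load table from step 1. The class and method names are hypothetical, and the TPS figures are the illustrative ones from that table, not measurements.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: peak-to-average ratio per service from the sample load table.
final class PeakToAverage {
    /** Ratio of peak TPS to average TPS; a higher value means spikier traffic. */
    static double ratio(int avgTps, int peakTps) {
        if (avgTps <= 0) {
            throw new IllegalArgumentException("average TPS must be positive");
        }
        return (double) peakTps / avgTps;
    }

    public static void main(String[] args) {
        // service -> {average TPS, peak TPS}, taken from the table in step 1
        Map<String, int[]> load = new LinkedHashMap<>();
        load.put("Translator", new int[]{60, 300});
        load.put("Speech", new int[]{30, 120});
        load.put("QnA Maker", new int[]{30, 100});
        load.put("Language Understanding", new int[]{25, 200});
        load.put("Custom Microservice", new int[]{20, 200});

        for (Map.Entry<String, int[]> entry : load.entrySet()) {
            int[] tps = entry.getValue();
            System.out.println(String.format("%-24s %.1f", entry.getKey(), ratio(tps[0], tps[1])));
        }
    }
}
```

Services with the highest ratios (here the custom microservice and Language Understanding) are the ones where sizing for the average alone leaves the largest gap to cover with retries, autoscale, or extra instances.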

Conclusion

By following the best practices outlined in this article, you can effectively deploy and manage Cognitive Services, ensuring their scalability and efficient operation. I hope this article has been informative and useful in helping you leverage the full potential of Cognitive Services in large-scale deployments.

Special Thanks to Nayer Wanas for reviewing and working with us on this blog!!!

If you have any feedback or questions about the information provided above, please leave a comment below or email us at raiy@microsoft.com or ameetk@microsoft.com. Thank you!