This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

In this blog post, we explore what it means to apply dashboarding and machine learning in business analysis. As master's students at Radboud University, we had the opportunity to work with Power BI and Azure Machine Learning. Here we share our experiences and reflections.

Our goal was to deliver a comprehensive report with data-driven recommendations to help FitCapacity, a company facing a decline in market share, regain its competitive edge. Along the way, we encountered ethical implications that sparked discussions and yielded insights for anyone interested in working on similar projects.

Transparency and classification emerged as the two key ethical considerations that shaped our approach and conclusions. We learned the importance of making transparent decisions during data selection and cleaning, as even seemingly small choices can shape the outcomes and the recommendations that follow. We also grappled with the challenges of classification, realizing that a deep understanding of the community being classified and careful consideration of biases are crucial when applying categorization techniques.

Throughout this blog post, we share the lessons we learned and provide practical recommendations for addressing these ethical challenges, from maintaining data quality and transparency to raising awareness of how sensitive classification can be.

We hope these reflections help you combine data-driven insights with responsible analysis in your own dashboarding and machine learning work.

About the authors

- J. van Ekeren

Master's student in Business Analysis and Modelling at Radboud University

Contact details

LinkedIn: https://www.linkedin.com/in/judith-van-ekeren-453285139

- B.L. Turan

Master's student in Business Analysis and Modelling at Radboud University

Contact details

LinkedIn: https://www.linkedin.com/in/berketuran

Background

In this blog, we briefly take you through the process of applying dashboarding and machine learning to a business case from our university, and reflect on the ethical implications we encountered and what we learned from them. From this, we derive suggestions for anyone interested in working on similar projects.

Introduction

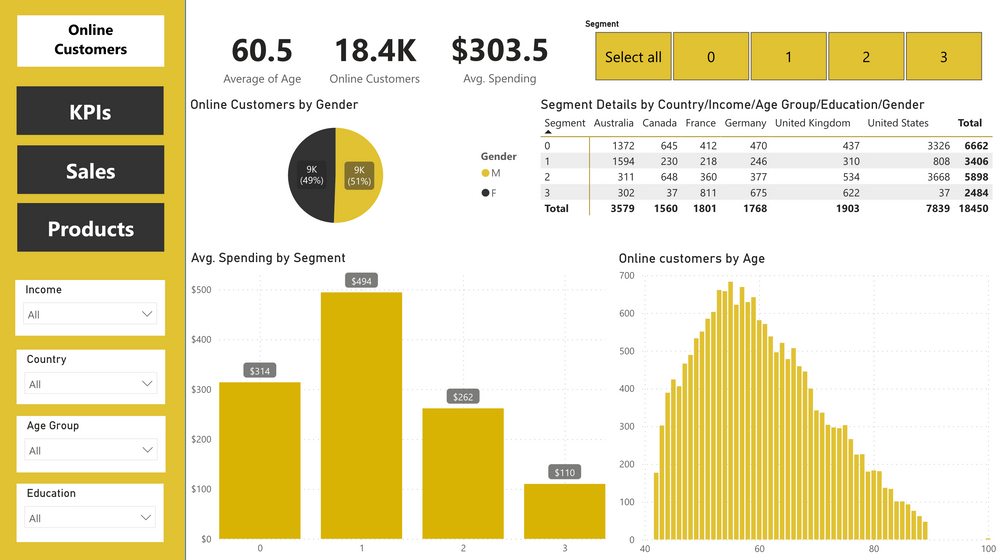

For a course at our university, we had the opportunity to follow modules on Power BI and Azure Machine Learning. The aim was to deliver a report for FitCapacity, a company suffering a decline in market share, with meaningful recommendations to regain it. These recommendations were based on Power BI dashboards (descriptive analysis) and machine learning models in Azure (predictive analysis). The report also described the data selection, preparation, modelling, and evaluation process.

We started our learning journey with Microsoft's modules on Power BI and machine learning, to learn how to apply both methods and study their value. Building on this knowledge, we then received the data for the final project. As we worked through dashboarding, machine learning, and interpreting the data, we encountered two main ethical issues, which we discuss below.

Transparency

Firstly, regarding transparency: during data selection and cleaning, we made several decisions that changed the outcome of the data presented. For example, the customer data contained multiple columns reflecting revenue. Some were calculated before shipping costs and including taxes, others were not. But which one do you pick, and how do viewers know which definition applies? Additionally, we excluded customers whose age, calculated to date, exceeded 100, because including them skewed the mean significantly: the database had not been updated recently, so these were not recent customers. But is this reason valid enough to exclude certain customers?
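One way to keep a decision like the age cutoff visible, rather than buried in the pipeline, is to record each cleaning step together with its effect. The Python sketch below assumes hypothetical toy records with an `age` field; `CleaningLog` and `exclude_implausible_ages` are illustrative names of our own, not features of Power BI or Azure Machine Learning.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class CleaningLog:
    """Records every cleaning decision so dashboard viewers can see it."""
    entries: list = field(default_factory=list)

    def record(self, step: str, reason: str, rows_before: int, rows_after: int) -> None:
        self.entries.append(
            {"step": step, "reason": reason,
             "rows_before": rows_before, "rows_after": rows_after}
        )


def exclude_implausible_ages(customers, max_age, log):
    """Drop customers whose age exceeds max_age and log the decision."""
    kept = [c for c in customers if c["age"] <= max_age]
    log.record(
        step=f"exclude age > {max_age}",
        reason="stale records from a database that has not been recently updated",
        rows_before=len(customers),
        rows_after=len(kept),
    )
    return kept


# Hypothetical toy data; the field names are illustrative, not FitCapacity's schema.
customers = [
    {"id": 1, "age": 34},
    {"id": 2, "age": 41},
    {"id": 3, "age": 29},
    {"id": 4, "age": 117},  # likely a stale record
]

log = CleaningLog()
print("mean age before:", mean(c["age"] for c in customers))
cleaned = exclude_implausible_ages(customers, max_age=100, log=log)
print("mean age after:", mean(c["age"] for c in cleaned))
for entry in log.entries:
    print(entry)
```

Printing the mean before and after the filter makes the effect of the cutoff explicit, and the log can be shown next to the visuals it affects so viewers can judge whether the exclusion is justified.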

Each of these small decisions causes the data to be displayed differently than if the step had not been applied, possibly leading to different recommendations and decisions and thus significantly affecting the business. As a decision-maker, it is extremely important to be aware of these earlier decisions, as you must know what details underlie your strategic choices. Of course, the concept of transparency is not new; extensive research has supported its importance (Ananny & Crawford, 2018; Dignum et al., 2018). But you do not realize how many of these small decisions on definitions and data selection are made until you build such dashboards yourself. And even we did not know everything: we were simply given access to the database, which left much room for interpreting the data through our own biases and heuristics. Nevertheless, this awareness gave us great insight into the vulnerability of data and how carefully it should be handled.

Classification

Our second important ethical lesson concerned classification. As Bowker and Star (1999) argue, a deep understanding of a community's structure is needed to validly apply standards and classifications to that community. The variety of data in the database we received left ample room for selecting customer features to classify customers into segments. Without knowing the underlying structure of the community the data described, we selected features ourselves for building these models. Eventually, we used classification in our visuals and, thus, in our interpretations for the final report.

We used k-means clustering to see how unsupervised machine learning would segment these customers. We soon found that it was not individual categories that explained behavior, but a combination of multiple categories and factors. This made us realize that when you do not understand the people you are classifying, you can make assumptions based on an overly simplistic categorization of data, leading to false conclusions and reinforcing biases. Machine learning uncovered these oversimplifications, and the complex, interrelated nature of systems and issues was more than ever at the top of our minds. Hence, we learned how sensitively categorization should be handled and how carefully the implications of using it should be considered.
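To give an intuition for what k-means does, here is a minimal, dependency-free sketch of Lloyd's algorithm in Python. It is not what Azure Machine Learning runs internally, and the two customer features and their values are hypothetical, assumed to be already scaled to comparable ranges.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Minimal Lloyd's k-means on tuples of floats; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # pick k distinct points as starting centroids
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: each centroid moves to the mean of its assigned points.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: centroids (and therefore assignments) no longer change
        centroids = new_centroids
    return centroids, labels


# Hypothetical, already-scaled features: (visits_per_month, avg_basket_value).
customers = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.25), (0.9, 0.8), (0.85, 0.95), (0.95, 0.9)]
centroids, labels = kmeans(customers, k=2)
print(labels)
print(centroids)
```

Each centroid summarizes several features at once, so a segment is defined by a combination of attributes rather than by a single category. In practice, a library implementation such as scikit-learn's `KMeans`, or the clustering tooling in Azure Machine Learning, would be the usual choice. Features should also be put on comparable scales first, because k-means relies on Euclidean distance and a feature with a larger range would dominate the clusters.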

Of course, many other ethical matters could be addressed: for instance, customer consent in the data collection process (Zuboff, 2015), the extensively researched matter of anonymity when drilling down and combining multiple categories (Vial, 2019), and the extent to which the purpose of the data analysis is socially responsible (Bowker & Star, 1999). However, because the dataset we received contained no individual identifiers, we reflected on these subjects to a lesser extent, and our suggestions therefore focus on the two lessons above. Nevertheless, it is essential to note that different aspects of ethics must be considered depending on the content and phase of the data analysis process.

Recommendations

Regarding data quality and transparency, we strongly recommend two things. Firstly, be consistent. When choosing a variable to measure revenue, use only that one for visuals and measures, and hide the others to prevent confusion and reduce the risk of error. Secondly, keep close track of the definitions you use and state them in your dashboard, either on a separate page or on the page itself. This way, you ensure that viewers know the actual meaning of the visuals they use for decision-making and reporting. Moreover, having to justify your decisions as an analyst helps reduce bias, and for the bias that remains, viewers are at least aware of it.
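In Power BI, this would usually be expressed as a single measure with the alternative columns hidden from report view. The underlying idea, one registered definition per business concept that is also rendered on a definitions page, can be sketched outside any BI tool in Python. `MetricDefinition`, `DEFINITIONS`, and the column names below are hypothetical, and which revenue definition to choose remains a business decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    source_column: str
    description: str


# Hypothetical registry: exactly one agreed definition per business concept.
DEFINITIONS = {
    "revenue": MetricDefinition(
        name="Revenue",
        source_column="revenue_excl_shipping_incl_tax",  # hypothetical column name
        description="Order value before shipping costs, including taxes.",
    ),
}


def total(rows, concept):
    """Sum a metric using only the registered column for that concept."""
    column = DEFINITIONS[concept].source_column
    return sum(row[column] for row in rows)


def definitions_page():
    """Render the registry as text for a 'Definitions' page in a dashboard."""
    return "\n".join(
        f"{d.name}: {d.description} (column: {d.source_column})"
        for d in DEFINITIONS.values()
    )


# Hypothetical orders carrying two competing revenue columns.
orders = [
    {"revenue_excl_shipping_incl_tax": 120.0, "revenue_incl_shipping_excl_tax": 105.0},
    {"revenue_excl_shipping_incl_tax": 80.0, "revenue_incl_shipping_excl_tax": 72.5},
]
print(total(orders, "revenue"))
print(definitions_page())
```

Because every calculation goes through the registry, the competing column is never used by accident, and the text shown on the definitions page cannot drift from the column actually summed.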

As for classification, we found it insightful to use machine learning clustering methods to become more aware of the biases embedded in categorization. Therefore, we strongly recommend applying them whenever you have the opportunity. On top of that, we suggest educating and engaging in conversations with the people who use your dashboards, to make them aware of the sensitivity of classification and of the fact that visuals and classifications, however insightful, do not always reflect the complexity of the system behind them.

Conclusion

While working on this project, we experienced that being a data analyst or scientist comes with great responsibility regarding ethics. Many different factors must be considered to give a transparent, unbiased, and (as far as possible) objective view of the data. In addition, your visuals shape how others argue for their decisions, which gives you power that must be handled responsibly. Therefore, we strongly advise becoming aware of the biases your visuals might convey and always asking critical questions to ensure your interpretation of the data is as close to reality as possible.

The analysis in this project relied heavily on the Microsoft Learn Modules, which provided us with extensive knowledge and insights. Additionally, we greatly benefited from the helpful documentation and community of Power BI and Azure Machine Learning, enabling us to further explore and troubleshoot effectively.

Sources

- Ananny, M., & Crawford, K. (2018). Seeing without knowing: Limitations of the transparency ideal and its application to algorithmic accountability. New Media & Society, 20(3), 973-989.

- Bowker, G. C., & Star, S. L. (1999). Building information infrastructures for social worlds: The role of classifications and standards. In Community Computing and Support Systems: Social Interaction in Networked Communities (pp. 231-248). Berlin, Heidelberg: Springer Berlin Heidelberg.

- Dignum, V., Baldoni, M., Baroglio, C., Caon, M., Chatila, R., Dennis, L., ... & de Wildt, T. (2018, December). Ethics by design: Necessity or curse? In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society (pp. 60-66).

- Vial, G. (2019). Understanding digital transformation: A review and a research agenda. The Journal of Strategic Information Systems, 28(2), 118-144.

- Zuboff, S. (2015). Big other: Surveillance capitalism and the prospects of an information civilization. Journal of Information Technology, 30(1), 75-89.