This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Background

This article describes how to aggregate the Azure Storage logs collected through the Diagnostic settings in Azure Monitor when an Azure Storage account is selected as the destination. This approach downloads the logs and aggregates them on your local machine.

Please keep in mind that in this article, we only copy the logs from the destination storage account. They will remain on that storage account until you delete them.

At the end of this article, you will have a CSV file containing the fields of the current log structure (e.g., timeGeneratedUTC, resourceId, category, operationName, statusText, callerIpAddress, etc.).

This script was developed and tested using the following versions but it is expected to work with previous versions:

- Python 3.11.4

- AzCopy 10.19.0

Approach

- Create Diagnostic Settings to capture the Storage Logs and send them to an Azure Storage Account

- Use AzCopy and Python to download and aggregate the logs

Each step has a theoretical introduction and a practical example.

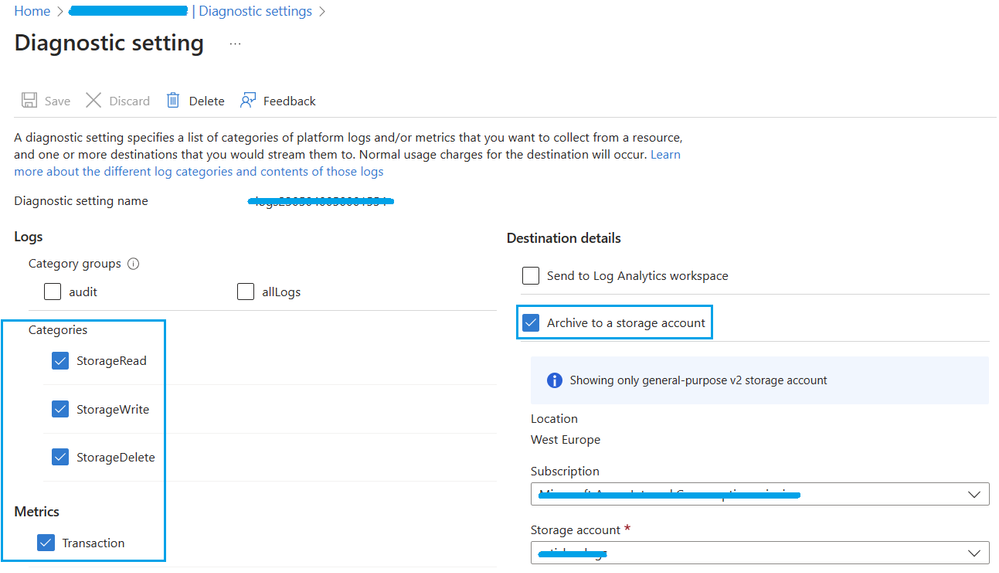

To collect resource logs, you must create a diagnostic setting. When creating a diagnostic setting, you can specify one or more of the following categories of operations for which you want to collect logs (for more information, please see Collection and routing):

- StorageRead: Read operations on objects.

- StorageWrite: Write operations on objects.

- StorageDelete: Delete operations on objects.

Support documentation:

- Information captured by the logs:

- The Diagnostics Settings service allows you to define the destination to store the logs.

- Please review all the Destinations options

- Please also review the Destination limitations

- Storage Analytics logged operations and status messages (REST API)

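As mentioned above, in this article we will explore the scenario of using an Azure Storage account as the destination. Please keep the following in mind:

- According to our documentation (please see Destinations), archiving logs and metrics to a Storage account is useful for audit, static analysis, or backup. Compared to using Azure Monitor Logs or a Log Analytics workspace, Storage is less expensive, and logs can be kept there indefinitely.

- When sending the logs to an Azure Storage account, the blobs within the container use the following naming convention (please see Send to Azure Storage for more details):

insights-logs-{log category name}/resourceId=/SUBSCRIPTIONS/{subscription ID}/RESOURCEGROUPS/{resource group name}/PROVIDERS/{resource provider name}/{resource type}/{resource name}/y={four-digit numeric year}/m={two-digit numeric month}/d={two-digit numeric day}/h={two-digit 24-hour clock hour}/m=00/PT1H.json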

To understand how to create a Diagnostic Setting, please review the documentation Create a diagnostic setting. That documentation shows how to create a diagnostic setting that sends the logs to a Log Analytics workspace. To follow this article, in the Destination details you need to select "Archive to a storage account" instead of "Send to Log Analytics workspace".

An important remark: in this article, we only copy the logs to the local machine. We do not delete any data from your storage account.

In this step, we will use AzCopy to retrieve the logs from the Storage Account and then, we will use Python to consolidate the logs.

AzCopy is a command-line tool that moves data into and out of Azure Storage. Please review our AzCopy documentation, Get started with AzCopy. It explains how to download AzCopy, run AzCopy, and authorize AzCopy.

pip install pandas

Python script explained

The components of the Python script are explained below. The full script is available afterwards.

Imports needed for the script

Auxiliary functions

Function to list all files under a specific directory:
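A minimal sketch of such a helper, assuming the standard library `os.walk` is used to traverse the folder tree. The name `list_files` is hypothetical and the attached script may implement this differently:

```python
import os


def list_files(root_dir):
    """Return the sorted full paths of every file under root_dir, at any depth."""
    paths = []
    # os.walk yields one (dirpath, dirnames, filenames) tuple per visited folder
    for dirpath, _dirnames, filenames in os.walk(root_dir):
        for name in filenames:
            paths.append(os.path.join(dirpath, name))
    return sorted(paths)
```

Because the downloaded logs are nested by year, month, day, and hour, a recursive listing like this one finds every PT1H.json file regardless of how deep it sits.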

Function to retrieve the logs using AzCopy:
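A minimal sketch of how such a function could look, assuming the logs are fetched with `azcopy copy ... --recursive` and that the account uses the public cloud blob endpoint (`blob.core.windows.net`). The function names and parameters below are hypothetical, not necessarily those of the attached script:

```python
import subprocess


def build_azcopy_command(azcopy_path, storage_account, container,
                         blob_prefix, sas_token, dest_dir):
    """Build the argument list for an 'azcopy copy' download (nothing is run here)."""
    # Assumption: public Azure cloud endpoint; the SAS token is appended as the query string.
    source_url = (
        f"https://{storage_account}.blob.core.windows.net/"
        f"{container}/{blob_prefix}?{sas_token.lstrip('?')}"
    )
    # --recursive copies every blob under the given prefix
    return [azcopy_path, "copy", source_url, dest_dir, "--recursive"]


def download_logs(azcopy_path, storage_account, container,
                  blob_prefix, sas_token, dest_dir):
    """Run AzCopy and return its exit code (0 means success)."""
    command = build_azcopy_command(azcopy_path, storage_account, container,
                                   blob_prefix, sas_token, dest_dir)
    return subprocess.run(command, check=False).returncode
```

Passing the command as a list, rather than as a single shell string, avoids quoting problems with the `&` characters in the SAS token.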

Parameters definition

AzCopy:

- azcopy_path: path to the AzCopy executable

Storage account logs destination info (the storage account where the logs are being stored):

- storageAccountName: the storage account name where the logs are stored

- sasToken: SAS token to authorize the AzCopy operations

Storage account info regarding the storage account where we enabled the Diagnostic Setting logs:

- subscriptionID: Subscription ID associated with the storage account that is generating the logs

- resourceGroup: Name of the resource group that contains the storage account that is generating the logs

- storageAccountNameGetLogs: Name of the storage account that is generating the logs

- start: the part of the blob path that selects the time range to download. As presented above, the blobs within the container use the following naming convention:

insights-logs-{log category name}/resourceId=/SUBSCRIPTIONS/{subscription ID}/RESOURCEGROUPS/{resource group name}/PROVIDERS/{resource provider name}/{resource type}/{resource name}/y={four-digit numeric year}/m={two-digit numeric month}/d={two-digit numeric day}/h={two-digit 24-hour clock hour}/m=00/PT1H.json

- If you want the logs for a specific year, 2023 for instance, define start = "y=2023"

- If you want the logs for a specific month, May 2023 for instance, define start = "y=2023/m=05"

- If you want the logs for a specific day, the 31st of May 2023 for instance, define start = "y=2023/m=05/d=31"
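To illustrate how the start value fits into the naming convention, here is a small sketch that composes the blob path prefix from the parameters above. The function name `build_blob_prefix` is hypothetical, and the segment casing simply follows the convention as quoted in this article. Please check the exact path (including casing and any extra segments after the resource name) against the blobs in your own container:

```python
def build_blob_prefix(subscription_id, resource_group, provider,
                      resource_type, resource_name, start):
    """Compose the blob path prefix, inside the container, that ends with the start value."""
    # Assumption: segment names and casing mirror the naming convention quoted above.
    return (
        f"resourceId=/SUBSCRIPTIONS/{subscription_id}"
        f"/RESOURCEGROUPS/{resource_group}"
        f"/PROVIDERS/{provider}/{resource_type}/{resource_name}"
        f"/{start.strip('/')}"
    )


# Example with placeholder values: all logs for May 2023
prefix = build_blob_prefix("<subscription ID>", "<resource group name>",
                           "<resource provider name>", "<resource type>",
                           "<resource name>", "y=2023/m=05")
```

The shorter the start value, the wider the time range: "y=2023" matches every blob of that year, while "y=2023/m=05/d=31" matches a single day.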

Local machine information (path on the local machine where the logs are stored):

- logsDest: Path on the local machine where the logs are stored

- This path is composed of the main folder, defined by you, in which to store the logs. Inside that folder, a folder named after the logged storage account is created, with a subfolder named after the start field defined above

- For instance, suppose you want to store the logs collected for the storage account named test, for the entire year 2023, in the folder c:\logs. After executing the script, you will have the following structure: c:\
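The folder composition described above (main folder, then storage account name, then the start value) can be sketched as follows. The function name `build_local_path` and the example values are hypothetical, and the attached script may build the path differently:

```python
from pathlib import Path


def build_local_path(logs_dest, storage_account_name, start):
    """Return <logs_dest>/<storage account name>/<start> as a Path."""
    # start may contain several segments, e.g. "y=2023/m=05", so split it first
    segments = [part for part in start.split("/") if part]
    return Path(logs_dest, storage_account_name, *segments)


# Example with placeholder values; the folder is created if it does not exist yet
# target = build_local_path("/tmp/logs", "mystorageaccount", "y=2023/m=05")
# target.mkdir(parents=True, exist_ok=True)
```

Using `pathlib.Path` keeps the separator handling portable, so the same code works with Windows and POSIX style paths.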

To download all the logs

If you want to download all the logs (Delete, Read, and Write operations), please keep the code below as it is. To skip the logs that you do not want to download, comment out the corresponding lines by adding # at the beginning of each line.
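As an illustration of this pattern, here is a minimal sketch, assuming one container per log category named according to the convention insights-logs-{log category name}, in lowercase. The variable and function names are hypothetical:

```python
# One entry per log category; put "#" at the start of a line to skip that category.
categories = [
    "StorageRead",
    "StorageWrite",
    "StorageDelete",
]


def container_name(category):
    """Map a log category to its container name (container names are lowercase)."""
    return f"insights-logs-{category.lower()}"


containers = [container_name(category) for category in categories]
# Each container in this list would then be downloaded with AzCopy in turn.
```

Keeping the categories in a list means that skipping one is a single-line change, and the download step itself stays untouched.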

Merge all log files into a single file
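A minimal sketch of the merge step, assuming each downloaded PT1H.json file contains one JSON object per line. For a dependency-free illustration, this sketch uses the standard library `json` and `csv` modules rather than pandas, so it is not the code of the attached script. The function name `merge_logs_to_csv` is hypothetical:

```python
import csv
import json
import os


def merge_logs_to_csv(logs_root, csv_path):
    """Merge every .json log file under logs_root into one CSV; return the row count."""
    records = []
    for dirpath, _dirnames, filenames in os.walk(logs_root):
        for name in sorted(filenames):
            if not name.endswith(".json"):
                continue
            with open(os.path.join(dirpath, name), encoding="utf-8") as handle:
                for line in handle:
                    line = line.strip()
                    if line:  # skip blank lines
                        records.append(json.loads(line))

    # Columns: every key seen in any record, in order of first appearance
    fieldnames = []
    for record in records:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)

    with open(csv_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        for record in records:
            # Nested values (dicts or lists) are stored as JSON text in one cell
            writer.writerow({
                key: json.dumps(value) if isinstance(value, (dict, list)) else value
                for key, value in record.items()
            })
    return len(records)
```

Records that lack a given field get an empty cell in that column, so files with slightly different fields can still be merged into the same CSV.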

The full Python script is attached to this article.

Output

To better understand the fields included in the logs after running the full script, please review Azure Monitor Logs reference - StorageBlobLogs.

Disclaimer:

- These steps are provided for the purpose of illustration only.

- These steps and any related information are provided "as is" without warranty of any kind, either expressed or implied, including but not limited to the implied warranties of merchantability and/or fitness for a particular purpose.

- We grant You a nonexclusive, royalty-free right to use and modify the steps and to reproduce and distribute the steps, provided that You agree:

- to not use Our name, logo, or trademarks to market Your software product in which the steps are embedded;

- to include a valid copyright notice on Your software product in which the steps are embedded; and

- to indemnify, hold harmless, and defend Us and Our suppliers from and against any claims or lawsuits, including attorneys’ fees, that arise or result from the use or distribution of steps.