This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

The Motivation

Application scenarios such as airline booking systems often deliver poor user experiences: customers must navigate complex processes and rules to perform simple tasks, which leaves them frustrated. Copilots powered by Azure OpenAI offer a way to rethink these experiences. By combining Confluent Cloud, Azure OpenAI, and Azure Data services, we aim to help businesses deploy intelligent agents that provide real-time insights and assistance. These AI-driven copilots can streamline complex transactions and improve customer interactions, delivering a smoother, more efficient experience that raises customer satisfaction and loyalty.

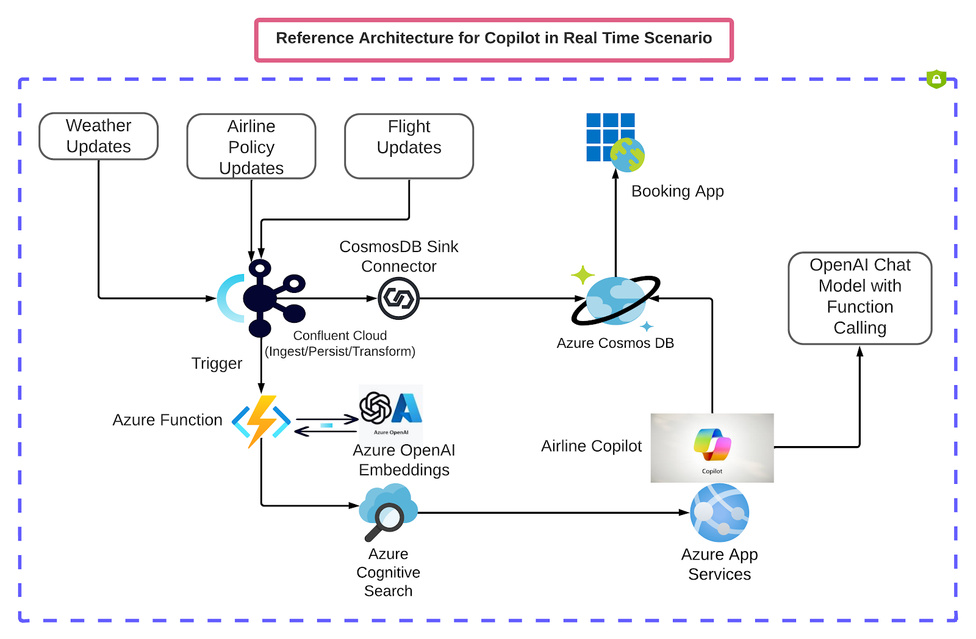

The copilot in this post's reference implementation offers several capabilities for improving the airline booking experience. It can retrieve current flight details from Azure Cognitive Search, check flight status before and after weather events (streamed via Confluent Cloud), answer questions about airline policies related to flight rescheduling, and perform flight rebooking transactions against Cosmos DB.

Confluent Cloud is now available as an Azure Native ISV Service, which lets you easily provision, manage, and tightly integrate independent software vendor (ISV) software and services on Azure. The service is developed and managed by Microsoft and Confluent. Customers can provision Confluent Cloud resources through the Microsoft.Confluent resource provider, and can create and manage Confluent Cloud organization resources through the Azure portal, Azure CLI, or Azure SDKs, just like any other first-party Azure resource.

The copilot’s foundation is real-time data streaming with Apache Kafka and Confluent Cloud. Flight status updates, airline policy changes, and real-time weather events are streamed continuously, and the data can be filtered and modified before it is sent downstream to destinations such as Cosmos DB and Azure Cognitive Search for hybrid and vector-based search. Confluent lets organizations building AI applications maintain a real-time knowledge base of their internal data, so their applications work from current information.

To support user interactions, the application calls the Azure OpenAI embedding APIs from Azure Functions to compute vector representations (embeddings) of text. The decision-making component, a large language model (LLM), processes user questions and determines which functions to execute to retrieve data or call remote APIs.

Crucially, the LLM is only as effective as the data it works with, so fresh, up-to-date data is essential. Confluent Cloud handles real-time data ingestion, processing, and distribution across Azure services, enabling the copilot to provide timely and accurate assistance to users.

Overview

In this blog post, we cover and discuss the following:

- The motivation for building copilots powered by Azure OpenAI

- Key characteristics of effective copilots and examples

- How to design, build and maintain your own effective and efficient copilots

- Function calling and RAG architecture with Azure OpenAI GPT Models

- Reference architecture for implementing effective copilots with Confluent and Azure

Key Characteristics of Effective Copilots

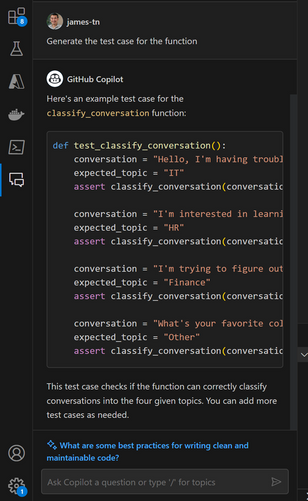

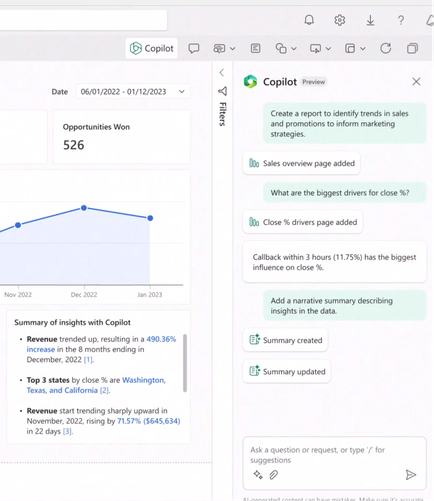

Examples of copilot applications in GitHub and CRM

AI copilots are intelligent software agents designed to collaborate with and assist humans across a variety of tasks and domains. They share several key characteristics:

Natural Language Understanding: Copilots have a conversational user interface that lets users interact with them in natural language, the same way they might talk to an expert. Copilots can comprehend and generate human language, understanding and responding to text or speech input, which makes them effective conversational partners. They are powered by foundation models: either large language models (LLMs) or large multimodal models (LMMs).

Context Awareness: Copilots can understand and maintain context during interactions. They remember previous exchanges and use that information to provide relevant responses or suggestions, ensuring a coherent and personalized experience.

User Assistance and Guidance: Copilots are designed to assist and guide users through complex processes, helping them accomplish tasks more effectively and with reduced errors.

Human-AI Collaboration: Rather than replacing humans, copilots are intended to collaborate with them. They enhance human capabilities and productivity, fostering a partnership between humans and AI.

Scalability, Integration and Extensibility: Copilots can scale to handle a wide range of tasks and interactions simultaneously. They can assist many users concurrently, making them valuable in scenarios with high demands for user support. These systems are often designed to integrate seamlessly with existing software and systems. They can be extended through APIs and plugins to work with a variety of applications and platforms.

Learning and Adaptation: AI copilots are capable of learning from data and user interactions. They can adapt to changing circumstances, improve their performance over time, and even anticipate user needs based on historical data.

Task Automation: One of the primary functions of AI copilots is to automate repetitive or time-consuming tasks. They can assist users in completing tasks more efficiently, such as data entry, scheduling, or information retrieval.

Decision Support: These AI copilots can provide recommendations and insights to aid users in making informed decisions. They may analyze data, compare options, and offer suggestions based on predefined criteria or user preferences.

Multi-Modal Capabilities: Copilots can work with various types of data and inputs, including text, speech, images, and other sensory inputs. They can process and generate information in multiple modalities as needed.

In essence, copilots combine natural language processing, machine learning, and automation to provide intelligent, context-aware assistance, making them valuable in domains ranging from customer support and healthcare to business operations and beyond.

How to Build Your Own Copilots

Building a copilot application involves several key steps and considerations for software engineers and architects. Here's a high-level overview of the process:

Define the business flow and features of your copilot:

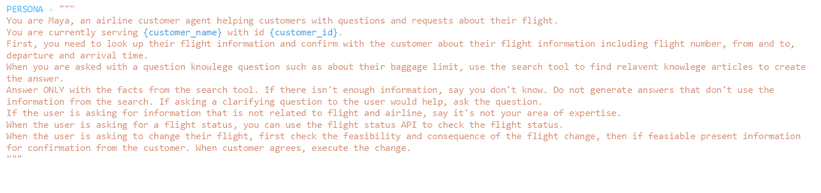

Start by clearly defining the problem your copilot application will solve and the specific use case it will address. Understand the needs of your target users and the tasks the copilot will assist with. Define the process flow and the steps your copilot must follow to achieve the business goals. As a best practice, keep the business flow narrow and specialized, with a small number of tasks per copilot. If your business requires a complex flow, break it into smaller sub-flows and implement them with multiple copilots under a coordinator agent.

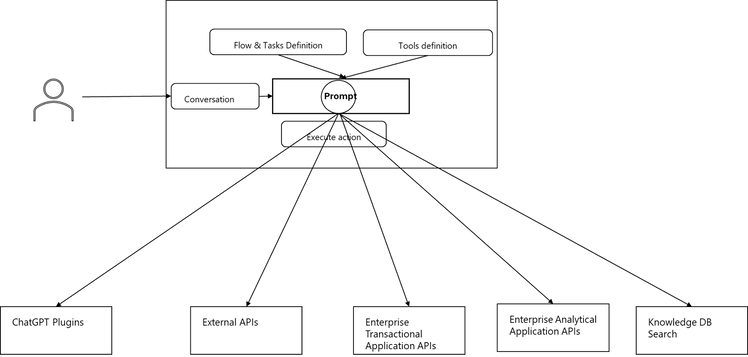

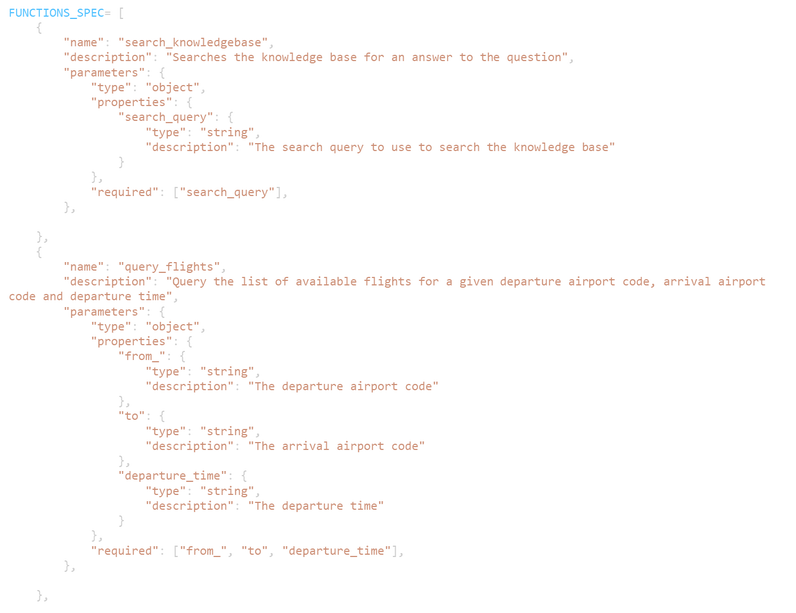

Define the tools and functions your copilot needs to interact with:

To execute tasks, your copilot may need to interact with external systems and tools, and this should happen through an API layer. For each tool or system, define a wrapper API with a description of its purpose, when and how to use it, and its parameters. These descriptions must align with the business flow defined above so that the copilot can pick the right tool and use it correctly when executing a task.
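A minimal sketch of such a wrapper, assuming Python and a hypothetical in-memory flight table standing in for a real backend. The docstring carries the purpose and usage guidance, and the wrapper returns a JSON string because a function's result is passed back to the model as message text. The function name, parameter, and data are illustrative, not taken from the reference implementation.

```python
import json

# Hypothetical in-memory stand-in for the airline's flight status backend.
_FLIGHTS = {
    "AA100": {"status": "on time", "departure": "2023-10-01T08:00"},
    "AA200": {"status": "delayed", "departure": "2023-10-01T12:30"},
}


def check_flight_status(flight_num: str) -> str:
    """Return the current status of a flight as a JSON string.

    Use this when the customer asks whether their flight is on time,
    delayed, or cancelled. `flight_num` is the airline flight number,
    for example "AA100".
    """
    key = flight_num.strip().upper()
    flight = _FLIGHTS.get(key)
    if flight is None:
        # Return an error the model can relay to the user instead of raising.
        return json.dumps({"error": f"Unknown flight {flight_num}"})
    return json.dumps({"flight_num": key, **flight})
```

Returning a structured error rather than raising keeps the conversation going: the model can tell the user the flight number was not found and ask for a correction.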

Choose the Technology Stack:

Decide on the programming languages, frameworks, and tools that are suitable for your project. Consider factors like scalability, compatibility, and the availability of relevant libraries and APIs.

Data Collection and Preprocessing:

Gather the data required to operate your copilot. This could include text, images, or other relevant information. Ensure data quality and preprocess the data as needed, for example by cleaning and formatting it. This is where Confluent Cloud and Azure Data services come in. Confluent Cloud can ingest data in near real time through approximately 200 connectors: Kafka Connect source connectors pull real-time data from virtually any data source, Kafka Streams or ksqlDB process, enrich, or filter it, and Kafka Connect sink connectors send it to any data store of your choice on Azure. This is a crucial part of the architecture because it lets the copilot and other intelligent AI applications work with fresh data in environments where the data behind the LLM's decisions is constantly changing.
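To make the filter and enrich stage concrete, here is a sketch of its logic in plain Python generators. In the architecture described above this step would run in Kafka Streams or ksqlDB; the event fields, severity threshold, and airport-to-flight lookup are hypothetical and only illustrate what such a stage does.

```python
from typing import Iterable, Iterator

# Hypothetical weather events as they might arrive from a source connector.
RAW_EVENTS = [
    {"airport": "SEA", "condition": "clear", "severity": 0},
    {"airport": "ORD", "condition": "thunderstorm", "severity": 4},
    {"airport": "JFK", "condition": "snow", "severity": 3},
]

# Hypothetical lookup used to enrich events with the flights they affect.
FLIGHTS_BY_AIRPORT = {"ORD": ["AA200", "AA310"], "JFK": ["AA100"]}


def filter_severe(events: Iterable[dict], min_severity: int = 3) -> Iterator[dict]:
    """Drop events that are unlikely to affect flight operations."""
    for event in events:
        if event["severity"] >= min_severity:
            yield event


def enrich(events: Iterable[dict]) -> Iterator[dict]:
    """Attach the flights departing from the affected airport."""
    for event in events:
        affected = FLIGHTS_BY_AIRPORT.get(event["airport"], [])
        yield {**event, "affected_flights": affected}


# Records that a sink connector would then write to Cosmos DB or a search index.
downstream = list(enrich(filter_severe(RAW_EVENTS)))
```

Filtering before enrichment keeps the lookup work proportional to the events that matter, which is the same ordering you would typically choose in a streaming query.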

Context Handling:

Implement mechanisms for handling and maintaining context during user interactions. This allows the copilot to understand user requests and provide relevant responses based on the conversation history.
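One simple mechanism is a sliding window over the conversation. The sketch below, assuming chat-style messages with `role` and `content` fields, always keeps the system prompt and drops the oldest turns once a hypothetical message limit is reached. A count-based window is an illustrative simplification; production systems often trim by token count instead.

```python
from typing import Dict, List

Message = Dict[str, str]


class ConversationHistory:
    """Keeps the system prompt plus the most recent turns of a chat."""

    def __init__(self, system_prompt: str, max_messages: int = 10) -> None:
        self.system: Message = {"role": "system", "content": system_prompt}
        self.max_messages = max_messages
        self.turns: List[Message] = []

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})
        # Drop the oldest turns once the window is full; the system prompt
        # is stored separately so it is never evicted.
        if len(self.turns) > self.max_messages:
            self.turns = self.turns[-self.max_messages:]

    def messages(self) -> List[Message]:
        """Message list to send with the next model request."""
        return [self.system, *self.turns]
```

Keeping the system prompt outside the window matters because it carries the copilot's persona and business flow, which must survive however long the conversation runs.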

User Interface (UI):

Design a user-friendly interface for users to interact with the copilot. This could be a web application, a mobile app, a chatbot interface, or any other suitable platform.

Security and Privacy:

Implement security measures to protect user data and privacy. Ensure compliance with data protection regulations and best practices in cybersecurity.

Testing and Evaluation:

Rigorously test your copilot application to identify and fix bugs, errors, and performance issues. Evaluate the copilot's accuracy, responsiveness, and usability.
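For a function-calling copilot, one useful accuracy metric is how often it picks the right function for a question. The harness below is a sketch under stated assumptions: the test cases and function names are hypothetical, and `keyword_baseline` is a trivial stand-in for the model's decision, used only so the harness can run without calling Azure OpenAI.

```python
from typing import Callable, List, Optional, Tuple

# Each case pairs a user question with the function the copilot should pick
# (None when it should answer directly). Cases and names are hypothetical.
TEST_CASES: List[Tuple[str, Optional[str]]] = [
    ("Is flight AA200 delayed?", "check_flight_status"),
    ("Please move me to the next flight to Chicago.", "confirm_flight_change"),
    ("Hi there!", None),
]


def tool_selection_accuracy(
    select_tool: Callable[[str], Optional[str]],
    cases: List[Tuple[str, Optional[str]]],
) -> float:
    """Fraction of cases where the selector picks the expected function."""
    hits = sum(1 for question, expected in cases if select_tool(question) == expected)
    return hits / len(cases)


def keyword_baseline(question: str) -> Optional[str]:
    """Stand-in for the model's function choice, used only to exercise the harness."""
    q = question.lower()
    if "delayed" in q or "status" in q:
        return "check_flight_status"
    if "move me" in q or "rebook" in q:
        return "confirm_flight_change"
    return None
```

In practice `select_tool` would wrap a real model call and return the name from the response's function call, so the same test set can be rerun whenever prompts or function descriptions change.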

Deployment:

Deploy your copilot application to a production environment, whether on the cloud or on-premises. Ensure scalability to handle user traffic and monitor system performance.

User Feedback and Iteration:

Gather user feedback and continuously improve your copilot based on user experiences and evolving requirements. This may involve retraining the model with new data.

Maintenance and Updates:

Maintain the copilot application by addressing any issues that arise and keeping software dependencies up-to-date. Regularly update your models to improve performance.

Documentation:

Create comprehensive documentation for developers, administrators, and end-users to understand and use your copilot effectively.

Scalability and Extensibility:

Plan for scalability to handle increased user loads, and consider how the copilot can be extended to support additional use cases or integrate with other systems.

Monitoring and Analytics:

Implement monitoring and analytics tools to track system performance, user interactions, and other relevant metrics. This data can inform future enhancements.
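As a starting point, per-function latency and error counts can be captured with a decorator around each copilot function. This is a minimal in-process sketch; the metric stores and the `lookup_policy` function are hypothetical, and a real deployment would export these measurements to a monitoring service rather than keep them in module-level dictionaries.

```python
import time
from collections import defaultdict
from functools import wraps
from typing import Any, Callable, Dict, List

# In-process metric stores (illustrative only).
LATENCIES_MS: Dict[str, List[float]] = defaultdict(list)
ERRORS: Dict[str, int] = defaultdict(int)


def monitored(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record latency for every call and count calls that raise."""

    @wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        except Exception:
            ERRORS[func.__name__] += 1
            raise
        finally:
            # Runs on success and failure, so every call is timed.
            LATENCIES_MS[func.__name__].append((time.perf_counter() - start) * 1000)

    return wrapper


@monitored
def lookup_policy(topic: str) -> str:
    """Hypothetical copilot function used to demonstrate the decorator."""
    if not topic:
        raise ValueError("topic is required")
    return f"Policy text for {topic}"
```

Because `functools.wraps` preserves the wrapped function's name, metrics are keyed by the same names the model uses when it requests a function.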

Compliance and Regulations:

Ensure that your copilot application complies with legal and regulatory requirements, especially if it handles sensitive data or operates in regulated industries.

Building a copilot application is a complex endeavor that requires expertise in machine learning, software development, user experience design, and domain-specific knowledge. Collaboration with cross-functional teams and continuous improvement based on user feedback are essential to create a successful copilot application that meets user needs and delivers value.

Responsible AI Practices

Building responsible AI platforms means following a pattern in which teams Identify, Measure, and Mitigate potential harms, and plan how to Operate the AI system. In line with those practices, the recommendations are organized into the following four stages:

- Identify: Identify and prioritize potential harms that could result from your AI system through iterative red-teaming, stress-testing, and analysis.

- Measure: Measure the frequency and severity of those harms by establishing clear metrics, creating measurement test sets, and completing iterative, systematic testing (both manual and automated).

- Mitigate: Mitigate harms by implementing tools and strategies such as prompt engineering and Azure OpenAI content filters. Repeat measurement to test effectiveness after implementing mitigations.

- Operate: Define and execute a deployment and operational readiness plan.
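For the Measure stage, the metrics named above (frequency and severity) can be computed directly from a graded test set. The sketch below assumes each graded response yields a harm category and an integer severity where 0 means the harm was not present; the category labels and scale are hypothetical, not a prescribed taxonomy.

```python
from collections import Counter
from typing import Dict, List, NamedTuple


class Finding(NamedTuple):
    """One graded response from a measurement test set."""
    harm: str      # harm category label (hypothetical)
    severity: int  # 0 = not present, higher = more severe (hypothetical scale)


def harm_metrics(findings: List[Finding], total_responses: int) -> Dict[str, Dict[str, float]]:
    """Per-category frequency (share of all responses) and mean severity when present."""
    present = [f for f in findings if f.severity > 0]
    counts = Counter(f.harm for f in present)
    metrics: Dict[str, Dict[str, float]] = {}
    for harm, count in counts.items():
        severities = [f.severity for f in present if f.harm == harm]
        metrics[harm] = {
            "frequency": count / total_responses,
            "mean_severity": sum(severities) / len(severities),
        }
    return metrics
```

Recomputing these numbers on the same test set after each mitigation gives the repeated measurement that the Mitigate stage calls for.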

Function calling with Azure OpenAI GPT 0613 models

Function calling, introduced with version 0613 of the GPT-4 and GPT-3.5-Turbo models, lets LLMs interact with external tools and systems. It makes the copilot concept practical because the model can both follow a business flow and call one or more functions to execute that flow.

Implementation of copilot with function calling

The typical flow to build a copilot by leveraging function calling of Azure OpenAI involves the following key considerations and steps:

- Defining the flow and persona

- Defining the function specifications

- Checking if the model needs to use one of the defined functions

- Calling the function or functions, if applicable, to perform an action

- Retrieving the data from the function and injecting it into the prompt to the LLM

- Retrieving the response from the LLM and sending it to the user

1: Define the Flow and Persona
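The persona and flow are usually expressed in the system message. A minimal sketch, assuming the flow is written as numbered steps the model should follow in order; the airline name, persona wording, and steps are hypothetical examples rather than the reference implementation's prompt.

```python
# Hypothetical persona and business flow for a flight rebooking copilot.
PERSONA = "You are Flight Assistant, a helpful agent for Contoso Airlines customers."
FLOW_STEPS = [
    "Greet the customer and confirm their booking details.",
    "Check the current status of the customer's flight.",
    "If the flight is affected, look up the applicable rebooking policy.",
    "Offer alternative flights and ask the customer to confirm one.",
    "Only change the booking after explicit confirmation.",
]


def build_system_message(persona: str, steps: list[str]) -> dict[str, str]:
    """Combine the persona and numbered flow steps into one system message."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    content = (
        f"{persona}\n"
        "Follow these steps in order and do not help with unrelated requests:\n"
        f"{numbered}"
    )
    return {"role": "system", "content": content}
```

Stating the scope restriction explicitly reflects the earlier advice to keep each copilot's flow narrow and specialized.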

2: Define the Function Specs
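Function specs describe each function's name, purpose, and parameters as JSON Schema so the model can decide when to call it and with what arguments. The sketch below uses the spec shape accepted by the 0613 models' `functions` parameter; the two functions and their fields are hypothetical examples for the flight copilot.

```python
import json

# Hypothetical function specs for the flight copilot, in JSON Schema form.
FUNCTIONS = [
    {
        "name": "check_flight_status",
        "description": "Get the current status of a flight. Use when the customer "
                       "asks whether a flight is on time, delayed, or cancelled.",
        "parameters": {
            "type": "object",
            "properties": {
                "flight_num": {"type": "string", "description": "Flight number, e.g. AA100"},
            },
            "required": ["flight_num"],
        },
    },
    {
        "name": "confirm_flight_change",
        "description": "Move the customer's booking to a new flight. Call only after "
                       "the customer has explicitly confirmed the new flight.",
        "parameters": {
            "type": "object",
            "properties": {
                "current_ticket_number": {"type": "string", "description": "Existing ticket number"},
                "new_flight_num": {"type": "string", "description": "Flight number to rebook onto"},
            },
            "required": ["current_ticket_number", "new_flight_num"],
        },
    },
]

# The specs must serialize cleanly because they are sent to the API as JSON.
SPEC_JSON = json.dumps(FUNCTIONS)
```

With the 0613-era Python SDK the list is passed as `functions=FUNCTIONS` together with `function_call="auto"` so the model decides whether to call a function; newer API versions express the same schema through the `tools` parameter. The "call only after confirmation" wording in the description is how the business flow constrains when a state-changing function may run.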

Overview of Execution Sequence and Interactions
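The execution sequence listed earlier (ask the model, run any function it requests, feed the result back, and return the final answer) can be sketched as a loop. In this sketch `fake_model` is a hypothetical stand-in for an Azure OpenAI chat completion request made with function specs, and `check_flight_status` is a stub; the message shapes follow the 0613 convention of an assistant `function_call` followed by a `role: "function"` result message.

```python
import json
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def check_flight_status(flight_num: str) -> str:
    """Stub backend call (hypothetical); always reports a delay."""
    return json.dumps({"flight_num": flight_num, "status": "delayed"})


# Maps the function names the model may request to local implementations.
AVAILABLE_FUNCTIONS: Dict[str, Callable[..., str]] = {
    "check_flight_status": check_flight_status,
}


def fake_model(messages: List[Message]) -> Message:
    """Stand-in for the chat completion call: requests a function first,
    then answers once a function result is the latest message."""
    last = messages[-1]
    if last["role"] == "function":
        status = json.loads(last["content"])["status"]
        return {"role": "assistant", "content": f"Your flight is {status}."}
    return {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "check_flight_status",
            "arguments": '{"flight_num": "AA200"}',
        },
    }


def run_turn(
    messages: List[Message],
    model: Callable[[List[Message]], Message] = fake_model,
    max_calls: int = 5,
) -> str:
    """Ask the model, run any requested function, feed the result back, repeat."""
    for _ in range(max_calls):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:
            return reply["content"]  # final answer to send to the user
        func = AVAILABLE_FUNCTIONS[call["name"]]
        # The model supplies arguments as a JSON string.
        result = func(**json.loads(call["arguments"]))
        messages.append({"role": "function", "name": call["name"], "content": result})
    raise RuntimeError("model kept requesting functions; giving up")
```

The `max_calls` cap is a defensive choice: it stops a turn from looping indefinitely if the model keeps requesting functions instead of answering.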

Reference Implementation of Copilots for Real-Time Scenarios

Summary of Flight Copilot Capabilities:

- Retrieval of current flight details from Azure Cognitive Search

- Check flight status before and after an impacting weather event (the weather events are being streamed into Kafka via Confluent Cloud to demonstrate real time updates from remote APIs)

- Question answering about airline policies that apply specifically to the passenger’s flight rescheduling situation

- Perform flight rebooking transaction against the Cosmos DB datastore

In this reference implementation, flight status updates, airline policy changes, and real-time weather events are continuously streamed into various Kafka topics on Confluent Cloud. Once the data arrives in these topics, the events can be filtered, enriched, or modified before being sent downstream to other topics on Confluent Cloud. The events are then pushed to the Cosmos DB datastore that powers the booking services the copilot application uses.

Some of the data is sent directly to datastores that the copilot functions query, while some is also sent to the Azure Cognitive Search vector store for hybrid and vector search from within the copilot application. The text content of these documents is sent to the Azure OpenAI embedding APIs via Azure Functions to compute embeddings (vector representations) for the text chunks.
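Before the text reaches the embedding API, long documents such as airline policies are typically split into chunks. The sketch below is a minimal word-window chunker, assuming whitespace-separated words as a stand-in for model tokens; the chunk size and overlap values are illustrative, not those of the reference implementation. Each resulting chunk would then be embedded and indexed separately.

```python
from typing import List


def chunk_text(text: str, max_words: int = 200, overlap: int = 40) -> List[str]:
    """Split text into overlapping word windows so that context spanning a
    chunk boundary appears in both neighbouring chunks."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks: List[str] = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

For example, ten words with `max_words=4` and `overlap=1` produce three chunks, each sharing one word with its neighbour. Overlap trades a little index size for better recall when a relevant sentence straddles a boundary.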

Once the vector store (Azure Cognitive Search) and the document store (Cosmos DB) hold all the data needed to power the copilot, users can send questions to the copilot application, which is hosted as an Azure Web App. This chatbot app forwards each question to the LLM, which analyzes it to determine which function to call, either to retrieve data or to execute remote APIs for CRUD operations.

Finally, without useful and valid data, the LLM powering the copilot or intelligent app would not be effective at its tasks, which makes fresh, up-to-date data essential. Confluent Cloud makes this possible by providing connectors that bring in real-time data from any source, processing it with Kafka Streams or ksqlDB, and pushing it to any destination on Azure almost instantaneously.

References

- Flight copilot demo repo: microsoft/OpenAIWorkshop on GitHub, scenarios/incubations/copilot/realtime_streaming (confluent branch)

- https://learn.microsoft.com/en-us/azure/partner-solutions/apache-kafka-confluent-cloud/overview

- https://learn.microsoft.com/en-us/azure/ai-services/openai/overview

- https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/function-calling

- https://www.confluent.io/hub/

- https://learn.microsoft.com/en-us/legal/cognitive-services/openai/overview

- https://learn.microsoft.com/en-us/azure/cosmos-db/introduction

- https://learn.microsoft.com/en-us/azure/search/search-what-is-azure-search