This post has been republished via RSS; it originally appeared at: Azure Developer Community Blog articles.

Introduction

As an AI enthusiast, I have been genuinely amazed by the latest progress in the field, namely so-called Generative AI. OpenAI has done remarkable work. We can safely say that we have entered the age of AI, which will permeate society and every single system.

Microsoft brings OpenAI's tremendous capabilities into the Azure ecosystem through Azure OpenAI. This is a significant addition to the existing Azure AI services, which we could already use to bring AI capabilities to our custom applications. While we can use OpenAI's offering directly, Azure adds the compliance features that most mid-sized and large companies require. Rather than providing a walkthrough on "how to do a, b, c", this blog post focuses on the concepts and presents a possible blueprint for using Azure OpenAI within your enterprise. The code I'll be using is for illustration purposes only and is not the most important part. Let's get started with some NLP concepts.

Natural Language Processing (NLP)

Generative AI goes far beyond mere NLP, but I will focus only on NLP in this post. While I was studying NLP at university, I never imagined that I would live to see what we have achieved today. Years later, I developed a few chatbots, and I still remember the pain. Most people do not realize how hard NLP is. Natural language is full of ambiguity, humor, irony, homonyms, polysemy, etc. Even as human beings, we do not always understand each other. We often have to repeat ourselves or disambiguate some statements, typically by providing extra information about the context.

The hardest part of NLP is extracting semantics, that is, the meaning of words in their context of use. Models like GPT-3.5 Turbo and GPT-4 are rather impressive in that respect and, guess what, we can add our own data and plug our own code into the mix. Let's look at a few key NLP concepts before diving into some code samples.

Tokenization

In NLP, no matter what the pursued goal is, it all starts with tokens! It's a step where we preprocess text and prepare it for further NLP tasks. For example, the sentence:

Once tokenized with Python's tiktoken library:

becomes:

Tokens represent words, subwords or even characters (punctuation). AI models are trained with tokenized text and decompose our queries into tokens behind the scenes. Tokenization is part of the toolbox of every NLP practitioner. By the way, GPT-like models that are made available by Azure OpenAI are subject to a token limit. You cannot send millions of tokens at once when interacting with a model. Here are the current limits:

| Model name | Maximum limit (tokens) |

| gpt-35-turbo | 4000 |

| gpt-35-turbo-16k | 16000 |

| gpt-4 | 8100 |

| gpt-4-32k | 32000 |

| text-embedding-ada-002 | 8191 |

Any request that exceeds the model's token limit fails. When dealing with large blocks of text, you have to chunk them into smaller ones to make sure you stay within that limit.
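To make the chunking idea concrete, here is a minimal sketch. The helper name `chunk_by_tokens` is hypothetical, and the default whitespace split is only a stand-in for a real tokenizer (an assumption made so the example runs without extra dependencies); token counts produced by an actual model tokenizer will differ.

```python
from typing import Callable, List, Sequence


def chunk_by_tokens(
    text: str,
    max_tokens: int,
    encode: Callable[[str], Sequence] = str.split,    # stand-in tokenizer (assumption)
    decode: Callable[[Sequence], str] = " ".join,     # inverse of the stand-in tokenizer
) -> List[str]:
    """Split text into consecutive chunks of at most max_tokens tokens each."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    tokens = encode(text)
    return [
        decode(tokens[start:start + max_tokens])
        for start in range(0, len(tokens), max_tokens)
    ]


chunks = chunk_by_tokens("one two three four five six seven", max_tokens=3)
print(chunks)  # ['one two three', 'four five six', 'seven']
```

In practice, you would presumably pass the `encode` and `decode` methods of a tiktoken encoding instead of the whitespace defaults, so that the chunk sizes match what the model actually counts.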

POS-Tagging

One way to extract more insight from a piece of text is to use part-of-speech (POS) tagging. This technique consists of identifying words and their role in a sentence, and it is still useful today. While tokens represent individual "unrelated" chunks, tags help us understand the grammar of a given text in a more comprehensive way. The following is an example of POS tagging in action:

The corresponding tags would be:

We know each word and its role in the sentence. Punctuation also plays an important role, especially ".", "!", "?", expressing the end of a phrase, a question, etc. POS-Tagging is often based on stochastic techniques such as the Hidden Markov Model (HMM), which shines in predicting the most probable letter/word/tag/phoneme thanks to transition probabilities.
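To illustrate how transition probabilities drive an HMM tagger, here is a toy Viterbi decoder. Everything in it is an assumption made for illustration: the three tags, the sample sentence, and the hand-picked probabilities are invented, whereas a real tagger would estimate them from an annotated corpus.

```python
from typing import Dict, List

TAGS = ["PRON", "VERB", "NOUN"]

# Hypothetical probabilities, chosen by hand for this example only.
START_P = {"PRON": 0.6, "VERB": 0.1, "NOUN": 0.3}
TRANS_P = {
    "PRON": {"PRON": 0.1, "VERB": 0.8, "NOUN": 0.1},
    "VERB": {"PRON": 0.2, "VERB": 0.1, "NOUN": 0.7},
    "NOUN": {"PRON": 0.1, "VERB": 0.6, "NOUN": 0.3},
}
EMIT_P = {
    "PRON": {"we": 1.0},
    "VERB": {"fish": 0.7, "eat": 0.3},
    "NOUN": {"fish": 0.4, "salmon": 0.6},
}


def viterbi(
    words: List[str],
    tags: List[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
    unseen: float = 1e-6,  # tiny probability for words a tag never emitted
) -> List[str]:
    """Return the most probable tag sequence for the given words."""
    if not words:
        return []
    # best[i][tag] = (probability of the best path ending in tag at i, previous tag)
    best = [{t: (start_p[t] * emit_p[t].get(words[0], unseen), None) for t in tags}]
    for i in range(1, len(words)):
        column = {}
        for tag in tags:
            prev_tag, prob = max(
                ((p, best[i - 1][p][0] * trans_p[p][tag]) for p in tags),
                key=lambda pair: pair[1],
            )
            column[tag] = (prob * emit_p[tag].get(words[i], unseen), prev_tag)
        best.append(column)
    # Backtrack from the most probable final tag.
    path = [max(tags, key=lambda t: best[-1][t][0])]
    for i in range(len(words) - 1, 0, -1):
        path.append(best[i][path[-1]][1])
    return path[::-1]


print(viterbi(["we", "fish", "salmon"], TAGS, START_P, TRANS_P, EMIT_P))
# ['PRON', 'VERB', 'NOUN']
```

Note how "fish" could be a verb or a noun: the transition probability from a pronoun to a verb is what tips the decision.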

The above output calls for two other concepts, namely, lemmatization and entity recognition.

Lemmatization

With POS Tagging, we have extracted each word and its role in the sentence. However, natural languages are full of derivatives, which can sometimes add noise. From our previous example:

The verb am is derived from the verb to be. Similarly, words such as "are", "be", "been", "is", "was", etc. are all forms of "to be". Lemmatization helps reduce noise by identifying the lemma of each word. Lemmas differ from stems in that a lemma is always an actual word. Stems are useful in search engines because they identify the root of a word, but these roots are not necessarily meaningful words themselves. One of the best-known stemming algorithms is the Porter stemmer.

Back to lemmatization! Nouns are subject to lemmatization as well as verbs, since they may have multiple forms (plural, gender, etc.). For example, the lemma of mice is mouse. In the above sentence, only the verb am has a different base form. Lemmatization combined with POS tagging can already help capture the essence of a piece of text. Great! Next, we have to deal with so-called named entities.

Named Entity Recognition (NER)

Still from our previous example:

The words Stephane Eyskens, Azure, and Cloud Architect are named entities because they represent something in the real world. Stephane Eyskens is a person, Azure is a specific cloud platform, and Cloud Architect is a job title. Each entity is associated with a broader concept (Person, Cloud Platform, Job). NER is still very important nowadays because entities can be captured by an NLP engine and passed as parameters to downstream systems. For instance, when using Azure's Conversational Language Understanding service, each entity is captured from the initial user query and passed to the downstream process that handles the query. You can take advantage of this to train the model to identify and capture custom entities, such as the incident number of a ServiceNow ticket. That is what I did back in the day with LUIS (Language Understanding Intelligent Service), where dynamic entities such as an incident number were captured for further processing. NER remains a very important aspect of NLP.
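As a rough illustration of capturing such a custom entity, here is a rule-based sketch. It is not how LUIS or Conversational Language Understanding work internally. It also assumes that incident numbers look like `INC` followed by seven digits, which is an assumption about the ticket numbering scheme and may not match your ServiceNow configuration.

```python
import re
from typing import List

# Assumed format: "INC" followed by exactly seven digits (hypothetical scheme).
INCIDENT_PATTERN = re.compile(r"\bINC\d{7}\b", re.IGNORECASE)


def extract_incident_numbers(query: str) -> List[str]:
    """Return all incident numbers found in the query, normalized to upper case."""
    return [match.upper() for match in INCIDENT_PATTERN.findall(query)]


print(extract_incident_numbers("What is the status of inc0012345 and INC7654321?"))
# ['INC0012345', 'INC7654321']
```

The extracted values can then be handed to whatever process handles the query, for example a ticket lookup.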

Text embeddings

The rise of deep learning led to the widespread adoption of text embeddings. Bidirectional Encoder Representations from Transformers (BERT) is one of several transformer-based deep learning models able to create embeddings, which are vector-based numeric representations of text. Other media such as images and audio can be vectorized too, but let's stick to text. The purpose of text embeddings is to capture as much contextual information as possible and to store it in the form of numeric vectors. Vectors can be compared with one another to see whether they are similar. Nowadays, we typically compare vectors by calculating their cosine similarity, although other comparison techniques exist.

Embeddings serve a number of use cases, such as "vague search queries". Unlike keyword-based search, vector-based search is more likely to take the context of a user query into account when retrieving results. This increases the semantic understanding of a search engine, or of any system that receives a query in natural language and must find corresponding results in a vector database.

As always, a small example may help clarify the concept. These two sentences both contain the word crane:

As human beings, we instantly identify the context and understand that, despite crane being the same word, it has a totally different meaning in each sentence. That is what transformers like BERT help identify. BERT would calculate vectors for both sentences. Let's use Azure OpenAI to vectorize our two sentences above and calculate their cosine similarity. If I run this piece of code (truncated for clarity):

With Azure OpenAI, the result of the above code is 0.76, which still seems to indicate a high degree of similarity between the two sentences. A result close to 0 means not similar, while a result close to 1 means similar. The score you get depends on the model used to compute the embeddings.
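For reference, cosine similarity itself is straightforward to compute once you have the vectors. The sketch below uses tiny hand-written vectors rather than real embeddings, so the numbers are purely illustrative and unrelated to the 0.76 score mentioned above.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("Vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("Cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))            # orthogonal vectors: 0.0
print(cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))  # same direction: 1.0 up to rounding
```

Only the direction of the vectors matters, not their length, which is why the second pair scores as identical.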

An important aspect of Azure OpenAI's embedding model text-embedding-ada-002 is that it always returns a vector with 1536 dimensions, no matter what text you embed. Because the vector size is fixed, a longer input gives the model more context to encode. As a rule of thumb, cosine similarity tends to be more reliable when comparing broader blocks of text, such as documents. Using cosine similarity to compare two short sentences, as in our example, might not give the best results. By this, I mean that, when working with short pieces of text, you shouldn't have an algorithm that says "whenever the cosine similarity is > 0.5, I take decision A or B". Mind the token limit highlighted earlier, as it also applies to embedding operations.

As I cannot work with large documents in a blog post, I'll keep using short sentences to further illustrate the cosine similarity concept.

Let's group the following sentences into a dataframe:

Let us now calculate the embeddings of each row and add it as a new column to our dataframe:

The generate_embeddings function is not included in this example, but it queries Azure OpenAI to get the corresponding embeddings. We now have a dataframe that includes the original text and its corresponding embedding:

The output is the following:

To wrap up, cosine similarity is very useful when you try to detect how similar, but not identical, blocks of text are. Rather than relying on a fixed threshold, sort or filter results by their cosine similarity and keep the highest-scoring ones. As you may have understood by now, embeddings are not meant to be used in a context where you expect a binary answer (0 or 1).
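The "sort rather than threshold" advice can be sketched as follows. The sentences and the three-dimensional vectors are invented for illustration (real embeddings from text-embedding-ada-002 have 1536 dimensions), and plain lists are used instead of a dataframe to keep the example dependency-free.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rank_by_similarity(
    query_vector: Sequence[float],
    rows: List[Tuple[str, Sequence[float]]],
) -> List[Tuple[str, float]]:
    """Return (text, score) pairs sorted from most to least similar to the query."""
    scored = [(text, cosine_similarity(query_vector, vector)) for text, vector in rows]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical texts with made-up, hand-written "embeddings".
rows = [
    ("Invoices are due on Friday", [0.0, 0.1, 0.9]),
    ("A crane waded through the marsh", [0.1, 0.9, 0.0]),
    ("The crane lifted the steel beam", [0.9, 0.1, 0.0]),
]
query_vector = [0.8, 0.2, 0.0]  # pretend embedding of a construction-related query

for text, score in rank_by_similarity(query_vector, rows):
    print(f"{score:.3f}  {text}")
```

The caller then keeps the top results instead of comparing each score against a fixed cut-off.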

NLP in the Generative AI era

GPT is a Large Language Model (LLM) powered by deep learning and the transformer architecture. While probability-based models such as HMMs are very efficient, they cannot learn new things on their own. They need to be retrained with updated corpus data to remain effective. That is where Generative AI makes a difference: the most recent models can rely on techniques such as few-shot and zero-shot learning, whose names are self-explanatory. We have reached a point where AI can go beyond its training data, although it still relies heavily on it and is still subject to the biases contained in that data. GPT builds on deep learning, which in turn builds on statistical models. Concepts such as tokenization and named entities remain fully applicable today.

With Generative AI, not only is the understanding of natural language more accurate than before, but the model can also be creative, for better or worse! This increased understanding and creativity opens the door to many use cases, such as:

- Responding to emails automatically

- Writing a marketing campaign

- Ideation and problem solving

- Creating dynamic content for websites/blogs (e.g., Python tip of the day, thought of the day, etc.)

- Translating content

- Performing NLP tasks (yes, you can ask GPT to extract entities, to perform sentiment analysis, etc.)

- Boosting developer/engineer productivity through tools like GitHub Copilot, which is based on Codex, a GPT variant

- Boosting worker productivity through Office 365 copilots

- Data analysis

- Decision making

- etc.

The good news is that you don't need to be an AI expert yourself to leverage these new capabilities. You only need to acquire a few skills. On top of what we've seen so far, you should also be familiar with two more specific concepts:

- Model hallucinations: this is when the model returns something that doesn't exist at all. Generative AI can lead to situations where the model response seems very accurate and very true, but it actually isn't.

- Prompt engineering: a technique you can use to steer the model's behavior. Prompt engineering, among other things, may help prevent hallucinations. A few rules of thumb apply to prompt engineering (we'll see examples later):

  - Use the system role to tell the model what you want it to do.

  - Use at least one sample shot by simulating what a query (user) and its answer (assistant) should look like. This will help the model better understand your expectations.
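The two rules of thumb above translate into a specific message layout. Here is a minimal sketch, where the helper name `build_messages` and the sample prompts are hypothetical; only the `system`, `user`, and `assistant` roles come from the chat format itself.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(
    system_prompt: str,
    examples: List[Tuple[str, str]],
    user_query: str,
) -> List[Message]:
    """Assemble a chat prompt: system instructions, sample shots, then the real query."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    for example_query, example_answer in examples:
        # Each sample shot simulates a user query and the answer we expect.
        messages.append({"role": "user", "content": example_query})
        messages.append({"role": "assistant", "content": example_answer})
    messages.append({"role": "user", "content": user_query})
    return messages


messages = build_messages(
    system_prompt="You are a calculator assistant. Only answer arithmetic questions.",
    examples=[("How much is 1 plus 1?", "2")],
    user_query="How much is 5 plus 2?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

The resulting list is what would be passed as the `messages` argument of a chat completion request.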

Now that you have a bit of NLP vocabulary and you are more familiar with the two concepts listed above, let's see how to concretely use GPT in our applications.

Using Azure OpenAI in your applications

Beyond the typical chatbots, we can leverage Azure OpenAI capabilities inside our solutions, in different ways, including decision making. GPT is now able to analyze a query and suggest a callback function that is supposed to handle the query. This can be useful in numerous use cases, such as:

- Handling end-user queries by letting the model suggest a function/API that may satisfy the query

- Analyzing unstructured and structured data by letting the model suggest how that data should be handled

- Any type of automation where GPT could play the role of an orchestrator

In a nutshell, the model tries to understand the user query and determines which function is best suited to the job. The user can be a human being or a system, with no human involved. The latter means that the chat functionality is not restricted to humans. We could even make two models talk to each other...

Microsoft made some nice samples available in the following GitHub repo, which I encourage you to look at. I'll start with an even more basic example about using functions.

Getting started with functions

To illustrate this with an even more basic example than the one provided by Microsoft, I split their calculator app into smaller functions. The functional flow is as follows:

- I let the user express their needs. Something like: "how much is 1 plus 1?"

- I let GPT suggest which function can resolve my query.

- I read GPT's answer and call the corresponding function.

In this scenario, I have just four basic functions: add, subtract, divide, multiply. I will skip some parts of the code to focus only on the essentials. Here is the Python code of these four basic functions:
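A minimal sketch of what four such functions could look like. The parameter names `a` and `b` and the division-by-zero handling are assumptions made for this illustration.

```python
def add(a: float, b: float) -> float:
    """Return the sum of a and b."""
    return a + b


def subtract(a: float, b: float) -> float:
    """Return a minus b."""
    return a - b


def multiply(a: float, b: float) -> float:
    """Return the product of a and b."""
    return a * b


def divide(a: float, b: float) -> float:
    """Return a divided by b, rejecting a zero divisor explicitly."""
    if b == 0:
        raise ValueError("Cannot divide by zero")
    return a / b


print(add(5, 2), subtract(5, 2), multiply(5, 2), divide(5, 2))  # 7 3 10 2.5
```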

This is the actual Python code that your application calls when the model suggests it. To let the model know about those functions, we must pass their signatures as follows (truncated and limited to the add function only):

You'll have to send those function signatures to Azure OpenAI, together with your query:
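As a hedged sketch of both steps, the snippet below describes the add function with a JSON Schema and assembles the request arguments without actually calling the service. The deployment name `my-gpt-deployment`, the parameter names `a` and `b`, and the descriptions are hypothetical. The `functions` and `function_call` fields follow the function-calling format of the pre-1.0 `openai` Python package, which is assumed here.

```python
import json
from typing import Any, Dict, List

# Hypothetical signature for the add function, expressed as JSON Schema.
ADD_FUNCTION: Dict[str, Any] = {
    "name": "add",
    "description": "Add two numbers and return the sum.",
    "parameters": {
        "type": "object",
        "properties": {
            "a": {"type": "number", "description": "First operand"},
            "b": {"type": "number", "description": "Second operand"},
        },
        "required": ["a", "b"],
    },
}


def build_request(deployment: str, user_query: str, functions: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Assemble the keyword arguments for a chat completion with function calling."""
    return {
        "engine": deployment,  # name of the Azure OpenAI deployment
        "messages": [{"role": "user", "content": user_query}],
        "functions": functions,
        "function_call": "auto",  # let the model decide whether to suggest a function
    }


request = build_request("my-gpt-deployment", "How much is 5 plus 2?", [ADD_FUNCTION])
print(json.dumps(request, indent=2))

# With the pre-1.0 openai package configured for Azure, the call would presumably be:
# response = openai.ChatCompletion.create(**request)
```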

With that simple interaction, we send a prompt to the model, along with our functions. We set the function selection mode to automatic. For the sake of brevity, I print the model's response directly, but you should of course use proper exception handling. The following response is returned:

The model suggests that the add function should be called. Notice how the numbers 5 and 2 were captured and suggested as function arguments. Next, you have to evaluate the answer and perform some validation checks to make sure that the model did not hallucinate (that is, invent a function you did not provide) and that it did a good job with the parameters. I will skip that part because that's not the point I want to make.
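For readers who want a starting point, that validation step could be sketched as follows. The shape of the assistant message (a `function_call` entry with a `name` and JSON-encoded `arguments`) follows the function-calling format of the pre-1.0 `openai` package. The registry, the argument names `a` and `b`, and the `dispatch` helper are assumptions made for this illustration.

```python
import json
import operator
from typing import Any, Dict

# Hypothetical registry mapping the function names we exposed to real callables.
REGISTRY = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply": operator.mul,
    "divide": operator.truediv,
}


def dispatch(message: Dict[str, Any]) -> float:
    """Validate the function call suggested by the model, then execute it."""
    call = message.get("function_call")
    if not call:
        raise ValueError("The model did not suggest a function")
    name = call.get("name")
    if name not in REGISTRY:
        # Guards against hallucinated functions such as a square root we never provided.
        raise ValueError(f"Unknown function suggested by the model: {name!r}")
    try:
        args = json.loads(call.get("arguments") or "")
    except json.JSONDecodeError as exc:
        raise ValueError("Function arguments are not valid JSON") from exc
    if not isinstance(args, dict) or set(args) != {"a", "b"}:
        raise ValueError("Expected exactly the arguments 'a' and 'b'")
    for value in args.values():
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError("Arguments must be numbers")
    try:
        return REGISTRY[name](args["a"], args["b"])
    except ZeroDivisionError as exc:
        raise ValueError("Division by zero") from exc


suggested = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "add", "arguments": '{"a": 5, "b": 2}'},
}
print(dispatch(suggested))  # 7
```

Rejecting anything that is not in the registry keeps the application in control: the model only suggests, and the code decides what actually runs.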

So far so good, but I asked a very simple question. What about the following prompt:

The model returns this:

which can be seen as a hallucination in our context because I did not provide the model with any square root function. That's where prompt engineering comes into play. You can add messages to influence the model's behavior. If I send more explicit messages such as:

The model's response will simply look like this:

We simply added a system prompt to influence the model's behavior. Similarly, with that system prompt in place, a user query such as "Can you tell me about planet Earth" would result in the following response:

While it may look simple enough, it can be challenging to get the perfect behavior. For example, the following prompt:

would cause the model to return a single function (divide). It wouldn't chain the divide and multiply functions, although it knows about both. So, we'd have a sort of false positive, since it would suggest calling the divide function only, which would not entirely satisfy the user query. Now that you've got the basics, let's see a slightly more advanced and realistic scenario.

Beyond a mere calculator

In this more realistic scenario, I'll pretend that I want to let the model decide whether a batch of purchase orders is subject to approval or not. It will call either the sendBatchToApproval function or the authorizeBatch one. I have the following basic data set (truncated):

| BATCHID | POID | AMOUNT |

| 1 | 2023-01 | 100 |

| 1 | 2023-02 | 50 |

| 1 | ... | ... |

I want the model to evaluate the data and to suggest the appropriate function. I have defined both functions in Python (not important here), and I'm sending them to the AI using the following snippet:

Now, with a little bit of prompt engineering, I get the results I want:

Notice that I added a system prompt to state what is expected, but I also sent the first three rows of the dataset and told the model what the expected answer is by adding another prompt targeting the assistant role. Then, I injected the actual dataset. I checked up front that the first three rows were not subject to approval, to make sure I used the right function in my prompt.
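One plausible way to lay out such a prompt is sketched below. The wording of the system prompt, the CSV serialization, the `batchId` argument, and the last data row are all assumptions made for illustration. Only the two sample rows, the column names, and the two function names come from the scenario above, and the third sample row is left out because it is truncated in the data set.

```python
import csv
import io
import json
from typing import Any, Dict, List, Tuple

Row = Tuple[int, str, int]
HEADER = ["BATCHID", "POID", "AMOUNT"]


def rows_to_csv(rows: List[Row]) -> str:
    """Serialize purchase order rows as CSV text, header included."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(HEADER)
    writer.writerows(rows)
    return buffer.getvalue()


def build_batch_messages(sample_rows: List[Row], sample_decision: str, batch_rows: List[Row]) -> List[Dict[str, Any]]:
    """System prompt, one sample shot answered with a function call, then the real batch."""
    return [
        {"role": "system", "content": (
            "You decide whether a batch of purchase orders requires approval. "
            "Always answer by calling sendBatchToApproval or authorizeBatch."
        )},
        {"role": "user", "content": rows_to_csv(sample_rows)},
        {"role": "assistant", "content": None, "function_call": {
            "name": sample_decision,
            "arguments": json.dumps({"batchId": sample_rows[0][0]}),  # hypothetical argument
        }},
        {"role": "user", "content": rows_to_csv(batch_rows)},
    ]


sample = [(1, "2023-01", 100), (1, "2023-02", 50)]
batch = sample + [(1, "2023-99", 999999)]  # invented row, for illustration only
messages = build_batch_messages(sample, "authorizeBatch", batch)
print(messages[1]["content"])
```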

Azure OpenAI's answer is as follows:

It understood what was expected. The entire dataset was indeed subject to approval, while the first three rows used in my prompt were not. This opens the door to many scenarios, even more so with unstructured data.

Keeping the conversation context

Every call to the ChatCompletion method will cause a new conversation to start, which means that the context does not survive API calls. To be clear, if I run the following two API calls:

these will be two different conversations, and GPT will ask me for more context about my second question. It is up to you to preserve the context across API calls. The only way to do this is to re-send both the questions and the answers in the next API call, as shown below:
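The bookkeeping involved can be sketched with a small helper class. The `Conversation` class is hypothetical, and `fake_send` stands in for the real chat completion call so that the example runs offline. In a real application, `send` would forward the messages to Azure OpenAI and return the assistant's reply.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


class Conversation:
    """Keeps the full message history and re-sends it with every question."""

    def __init__(self, send: Callable[[List[Message]], str], system_prompt: Optional[str] = None):
        self._send = send
        self.messages: List[Message] = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def ask(self, question: str) -> str:
        self.messages.append({"role": "user", "content": question})
        answer = self._send(list(self.messages))  # the whole history goes out each time
        self.messages.append({"role": "assistant", "content": answer})
        return answer


received_sizes: List[int] = []


def fake_send(messages: List[Message]) -> str:
    """Stand-in for the API call: records how many messages it received."""
    received_sizes.append(len(messages))
    return f"reply #{len(received_sizes)}"


chat = Conversation(fake_send)
chat.ask("first question")
chat.ask("follow-up question")
print(received_sizes)  # [1, 3]
```

The second call carries three messages: the first question, its answer, and the follow-up.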

Like this, GPT knows about the context and replies with the following answer:

because I sent the answer of the first API call as input to my second call. However, remember that the maximum number of tokens per API call includes both the chat history and the completion. So, you cannot really have very lengthy conversations. You should only use a conversation when you want to involve GPT in a multi-step scenario. To keep track of the overall token consumption, you can inspect the value of the total_tokens attribute as follows:
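A minimal sketch of such a check, using a hand-written response dictionary in place of a real API response. The `usage` block with `prompt_tokens`, `completion_tokens`, and `total_tokens` mirrors what chat completion responses report. The helper name and the sample numbers are hypothetical, and the estimate ignores the few tokens of per-message overhead.

```python
from typing import Any, Dict


def fits_in_context(
    last_response: Dict[str, Any],
    model_limit: int,
    new_prompt_tokens: int,
    max_completion_tokens: int,
) -> bool:
    """Estimate whether the next call stays within the model's token limit.

    The history re-sent next time is roughly what the last call consumed in
    total (its prompt plus its completion), to which we add the new question
    and the room reserved for the next completion.
    """
    used_so_far = last_response["usage"]["total_tokens"]
    return used_so_far + new_prompt_tokens + max_completion_tokens <= model_limit


# Hypothetical response, trimmed to the part that matters here.
last_response = {"usage": {"prompt_tokens": 57, "completion_tokens": 17, "total_tokens": 74}}

print(last_response["usage"]["total_tokens"])  # 74
print(fits_in_context(last_response, model_limit=4000, new_prompt_tokens=20, max_completion_tokens=500))  # True
```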

You should ideally keep track of this before attempting a new API call since going beyond the token limit will result in a failure. Let's now see how Cognitive Search can be used together with Azure OpenAI.

Combining Azure OpenAI and Cognitive Search together

One of the new features of Azure Cognitive Search is the so-called vector search. We discussed text embeddings earlier in this post, and it turns out that we can use OpenAI to generate the embeddings and send them to Azure Cognitive Search, which serves as a vector database against which we can run vector-based search queries. Today, text embeddings are only usable with the push model. In other words, Azure Cognitive Search's indexer feature does not generate embeddings when crawling documents.

The vector search engine is based on Hierarchical Navigable Small World (HNSW) graphs, an algorithm that takes a vectorized query as input and finds its approximate nearest neighbors. In simpler terms, this is a high-performance algorithm for vector search. Fortunately, there is no need to know exactly how this algorithm works internally.

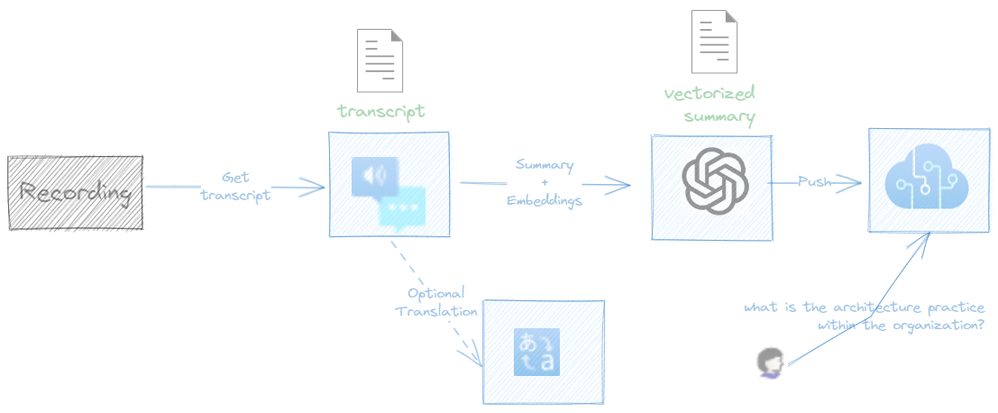

The combination of OpenAI and Azure Cognitive Search opens up many possible use cases. For example, we can think of scenarios like the following one:

We spend countless hours in meetings, and sometimes (not always :)), important information is shared during them. What if we recorded them all, generated transcripts (optionally translated into different languages), had the transcripts summarized by GPT, and pushed everything to Cognitive Search? This would let us run search queries such as "Tell me what you know about the architectural practices of the organization". You can use Azure Cognitive Search as a vector database and Azure OpenAI as a way to craft and vectorize smarter queries, to refine search results, or to suggest functions to call according to the results. Again, this does not only target applications with end users, but also machine-to-machine automation processes that can leverage both services to make better decisions.

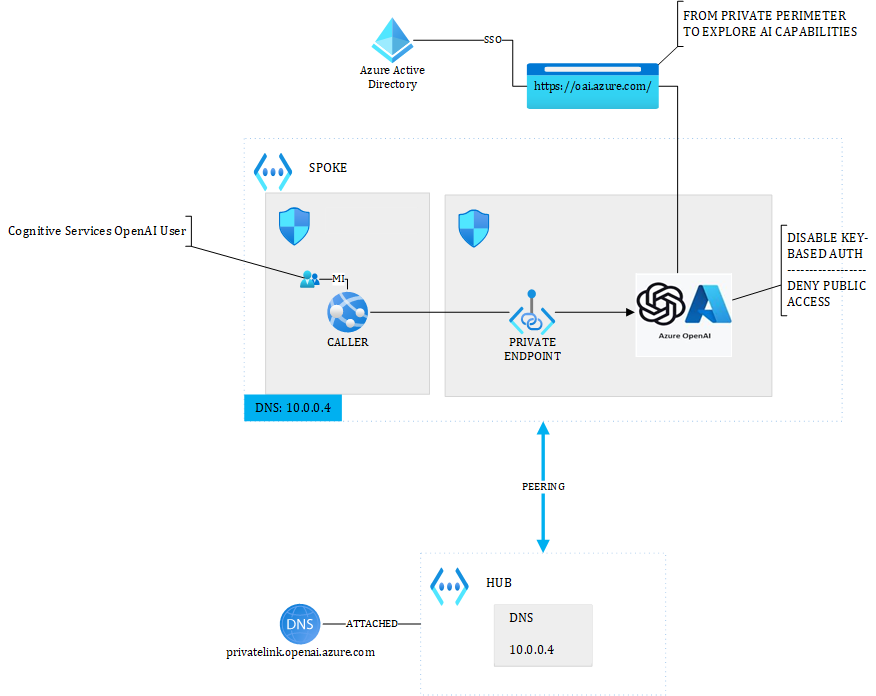

Architecture blueprint for Azure OpenAI

As I mentioned in the introduction, Microsoft brought OpenAI into Azure so that we can integrate it with our assets and meet our enterprise security standards. It should come as no surprise that the following diagram shows a possible blueprint for using Azure OpenAI inside your organization:

where we deny public access and integrate Azure OpenAI into our Hub & Spoke topology. We also make sure to disable key-based authentication by setting the disableLocalAuth property to true. Doing so forces Azure OpenAI consumers to get an access token from Azure Active Directory to consume the service. In the above example, the caller is an Azure Web App with managed identity enabled, to which I granted the Cognitive Services OpenAI User role. The code hosted on that web app must request a token from Azure Active Directory by leveraging its managed identity.

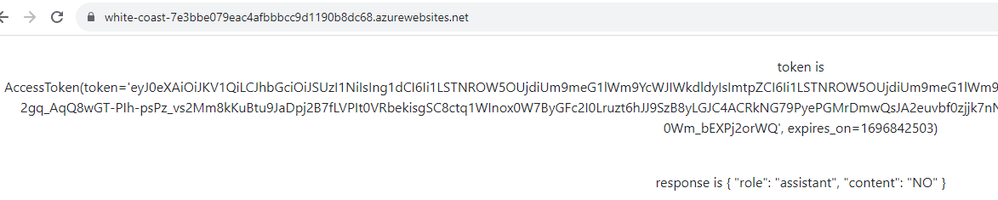

Here is an example of a Python web app. I have hard-coded things for the sake of simplicity:
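The token acquisition part alone could look like the sketch below. It is not the full web app. It assumes the `azure-identity` package is installed and chains a managed identity credential with an Azure CLI credential as a local fallback (the choice of the second credential is an assumption). The helper names are hypothetical, and the import is done lazily so that the rest of the snippet runs even without the package.

```python
from typing import Dict

# Scope used to request Azure AD tokens for Cognitive Services resources.
COGNITIVE_SERVICES_SCOPE = "https://cognitiveservices.azure.com/.default"


def get_access_token() -> str:
    """Get an Azure AD token via managed identity, falling back to the local Azure CLI login."""
    # Imported here so the module loads even where azure-identity is not installed.
    from azure.identity import (
        AzureCliCredential,
        ChainedTokenCredential,
        ManagedIdentityCredential,
    )

    credential = ChainedTokenCredential(ManagedIdentityCredential(), AzureCliCredential())
    return credential.get_token(COGNITIVE_SERVICES_SCOPE).token


def build_auth_headers(access_token: str) -> Dict[str, str]:
    """Build the HTTP headers for a token-authenticated (keyless) request."""
    return {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": "application/json",
    }


# Example with a placeholder token; in the web app you would call get_access_token().
print(build_auth_headers("<token>")["Authorization"])  # Bearer <token>
```

Credentials are tried in the order given, so the managed identity is used when the code runs in Azure and the developer's own login is used locally.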

The important part is the use of the ChainedTokenCredential class, which allows you to get an access token using the managed identity that's associated with the web app. The cool thing about this class is that it also allows you to debug your code locally, where the system will fall back on your own identity (which must also be granted the required permissions). After deployment to Azure and assignment of the required permissions, we can indeed see this code in action:

I hope this post brought some clarity about both older and more modern NLP techniques and the types of scenarios that this technology enables.