This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Following our recent update on the Azure OpenAI Service with new fine-tunable models, we’re thrilled to announce that fine-tuning is now available for GPT-3.5-Turbo, Babbage-002, and Davinci-002 in the Azure Machine Learning model catalog.

Fine-tuning OpenAI models lets you customize and optimize them for specific tasks and applications. It can lead to improved performance, cost efficiency, reduced latency, and tailored outputs. Whether you're enhancing customer service automation, generating content, supporting auto-completion, enabling seamless translation, or simplifying text summarization, fine-tuning can be a game-changer. Fine-tuning becomes more effective when used alongside other methods like prompt engineering and information retrieval.

You can train Azure OpenAI models using either Azure Machine Learning or Azure OpenAI Service. We offer supervised fine-tuning, where users provide examples (prompt/completion or conversational chat, depending on the model) to retrain the model. This blog specifically explores the fine-tuning capabilities available within the Azure Machine Learning model catalog.

In this blog we’ll talk about...

- What is the new collection of OpenAI models with fine-tuning capability in Azure Machine Learning model catalog.

- How do we expose fine-tuning of OpenAI models in Azure Machine Learning.

- How Azure Machine Learning enhances fine-tuning for an enriched user experience.

- How to fine-tune and evaluate Azure OpenAI models in model catalog.

- How to deploy the fine-tuned models into Azure OpenAI Service.

- Fine-tuning best practices.

What is the new collection of OpenAI models with fine-tuning capability in Azure Machine Learning model catalog

We are adding two new base inference models (Babbage-002 and Davinci-002) and fine-tuning capabilities for three models (Babbage-002, Davinci-002, and GPT-3.5-Turbo) to the Azure OpenAI models collection in the model catalog.

GPT-3.5-Turbo is the most capable and cost-effective model in the GPT-3.5 family. It is optimized primarily for chat applications but is versatile enough for various other tasks. Babbage-002 and Davinci-002 are smaller models that can understand and generate natural language and code, although they lack instruction-following capabilities. Babbage-002 replaces the deprecated Ada and Babbage models, and Davinci-002 replaces the deprecated Curie and Davinci models.

The Babbage-002 and Davinci-002 models support a maximum of 16,384 input tokens at inference time, with training data up to September 2021. The GPT-3.5-Turbo model supports a maximum of 4,097 input tokens at inference time, also with training data up to September 2021.

How do we expose the fine-tuning of Azure OpenAI models in Azure Machine Learning

The model catalog (preview) within Azure Machine Learning studio serves as the initial hub for exploring different collections of foundational models. Model catalog offers a collection of Azure OpenAI Service models, a wide range of state-of-the-art open-source models curated by AzureML, a Hugging Face Hub Community Partner collection, a collection of Meta’s Llama 2 large language models (LLMs), and a diverse array of curated advanced open-source vision models.

The Azure OpenAI models collection is available exclusively on Azure. These models enable customers to access prompt engineering, fine-tuning, evaluation, and deployment capabilities for large language models available in Azure OpenAI Service.

Unlock the full potential of Azure Machine Learning with a structured fine-tuning journey. Begin by selecting your preferred base model from the model catalog, select the fine-tuning task, and provide the necessary training and validation data. Azure Machine Learning simplifies the process, offering fully managed compute resources and hyperparameter configuration. After fine-tuning, assess your model's metrics and deploy it to the Azure OpenAI Service.

Pricing:

We offer fully managed fine-tuning services, removing the need for users to manage compute resources.

The price depends on the model selected; please see the AOAI Service Pricing Page for up-to-date information on model pricing. There is no additional charge to use Azure Machine Learning. However, in addition to Azure OpenAI usage and compute, you will incur separate charges for other Azure services consumed, including but not limited to Azure Blob Storage, Azure Key Vault, Azure Container Registry, and Azure Application Insights. To learn more about how to manage budgets, costs, and quota for Azure Machine Learning, see here.

How Azure Machine Learning enhances fine-tuning for an enriched user experience

Azure Machine Learning provides built-in optimizations like DeepSpeed and ORT (ONNX Runtime), which speed up fine-tuning, and LoRA (Low-Rank Adaptation of Large Language Models), which greatly reduces memory and compute requirements for fine-tuning.

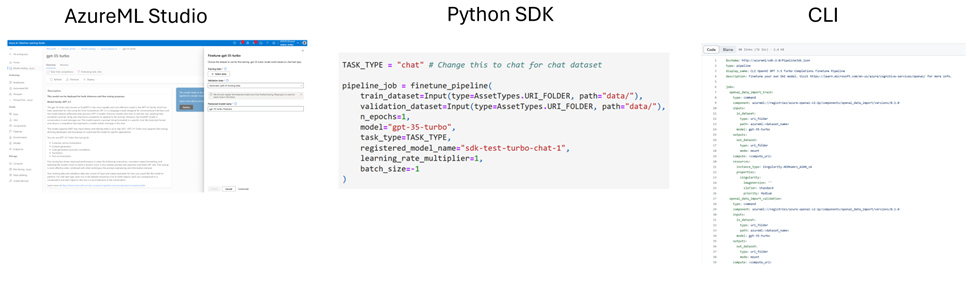

Azure Machine Learning streamlines experiment management with native LLMOps capabilities for experienced data scientists, providing tools to organize and find the best-suited model. It caters to advanced developers by offering enhanced data versioning and pipelining capabilities through fine-tuning. Collaboration, reproducibility, and efficiency are fostered in model development. Moreover, Azure Machine Learning supports both no-code and code-based experiences through SDK and CLI, empowering advanced data scientists to integrate it into custom workflows and applications.

How to fine-tune and evaluate these models in Azure Machine Learning Studio

To improve model performance in your workload, you might want to fine-tune the model using your own training data. You can fine-tune these models by using either the Azure Machine Learning studio or the code-based samples. In the studio, open the fine-tune settings by selecting the Finetune button on the model card, then provide the training and validation datasets.

Dataset Preparation:

Your training data and validation data sets consist of input and output examples for how you would like the model to perform. Make sure all your training examples follow the expected format for inference. To fine-tune models effectively, ensure a balanced and diverse dataset. This involves maintaining data balance, including various scenarios, and periodically refining training data to align with real-world expectations, ultimately leading to more accurate and balanced model responses.

For models with the completions task type (Babbage-002 and Davinci-002), the training and validation data you use must be formatted as a JSON Lines (JSONL) document in which each line represents a single prompt-completion pair.
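As a rough illustration of the prompt-completion layout described above, the following sketch writes and checks a small JSONL document. The key names `prompt` and `completion` follow the common OpenAI convention and are an assumption here, as are the sample records and the helper names `to_jsonl` and `validate_completion_jsonl`; check the model card for the exact schema your model expects.

```python
import json

# Hypothetical sample records; replace with data from your own task.
examples = [
    {"prompt": "Translate to French: Hello ->", "completion": " Bonjour"},
    {"prompt": "Translate to French: Thank you ->", "completion": " Merci"},
]


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"


def validate_completion_jsonl(text):
    """Return (line_number, problem) tuples for lines that are not
    prompt-completion pairs. Key names are assumed, not confirmed."""
    problems = []
    for i, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            obj = json.loads(line)
        except json.JSONDecodeError:
            problems.append((i, "not valid JSON"))
            continue
        for key in ("prompt", "completion"):
            if not isinstance(obj, dict) or not isinstance(obj.get(key), str):
                problems.append((i, f"missing or non-string '{key}'"))
    return problems


jsonl_text = to_jsonl(examples)
print(validate_completion_jsonl(jsonl_text))
```

Running a check like this before uploading can catch malformed lines early, which is cheaper than discovering them after a fine-tuning job has started.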

For models with the chat task type (GPT-3.5-Turbo), each row in the dataset should be a list of JSON objects. Each row corresponds to a conversation, and each object in the row is a turn (utterance) in the conversation.
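The chat layout can be sketched in a similar way. This example assumes the OpenAI-style convention in which the list of turns sits under a `messages` key and each turn has a `role` and a `content` field; those names, the allowed roles, and the helpers `make_conversation` and `check_conversation` are assumptions for illustration, so verify the exact schema against the model card.

```python
import json

# Assumed role names, following the common OpenAI chat convention.
ALLOWED_ROLES = ("system", "user", "assistant")


def make_conversation(system, turns):
    """Build one conversation row from a system message and
    a list of (user_text, assistant_text) pairs."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in turns:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    return {"messages": messages}


def check_conversation(row):
    """Return a list of problems found in one conversation row (empty if none)."""
    messages = row.get("messages") if isinstance(row, dict) else None
    if not isinstance(messages, list) or not messages:
        return ["row has no non-empty 'messages' list"]
    problems = []
    for i, turn in enumerate(messages):
        if not isinstance(turn, dict):
            problems.append(f"turn {i} is not an object")
            continue
        if turn.get("role") not in ALLOWED_ROLES:
            problems.append(f"turn {i} has unknown role")
        if not isinstance(turn.get("content"), str):
            problems.append(f"turn {i} has non-string content")
    if not any(isinstance(t, dict) and t.get("role") == "assistant" for t in messages):
        problems.append("no assistant turn to learn from")
    return problems


row = make_conversation(
    "You are a concise support assistant.",
    [("How do I reset my password?", "Open Settings, then choose Reset password.")],
)
line = json.dumps(row)  # one line of the JSONL training file
print(check_conversation(json.loads(line)))
```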

Hyperparameter tuning:

Hyperparameter tuning matters for LLMs: it can improve performance, save resources, and adapt the model to your tasks and datasets for real-world use. You can customize fine-tuning parameters such as n_epochs, batch size, and learning rate multiplier.
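To get a feel for how n_epochs and batch size interact, the sketch below estimates the number of optimizer steps for a run. It assumes each epoch is one full pass over the data and that a final partial batch still counts as a step; the `FineTuneParams` class, its default values, and `optimizer_steps` are hypothetical and do not reflect the service's actual defaults or API.

```python
import math
from dataclasses import dataclass


@dataclass
class FineTuneParams:
    # Hypothetical defaults for illustration only, not the service defaults.
    n_epochs: int = 3
    batch_size: int = 8
    learning_rate_multiplier: float = 1.0

    def validate(self):
        """Reject values that cannot describe a meaningful training run."""
        if self.n_epochs < 1:
            raise ValueError("n_epochs must be at least 1")
        if self.batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        if self.learning_rate_multiplier <= 0:
            raise ValueError("learning_rate_multiplier must be positive")


def optimizer_steps(n_examples, params):
    """Estimated steps: batches per epoch (rounded up) times number of epochs."""
    params.validate()
    return math.ceil(n_examples / params.batch_size) * params.n_epochs


params = FineTuneParams(n_epochs=3, batch_size=8)
print(optimizer_steps(100, params))
```

Under these assumptions, doubling the batch size roughly halves the step count, which is one reason the learning rate multiplier is often revisited when the batch size changes.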

Evaluating your fine-tuned model:

We offer a set of training metrics that are computed throughout the training process. These metrics include training loss, training token accuracy, validation loss, and validation token accuracy. These statistics serve as valuable indicators to ensure the smooth progression of training. Specifically, the training loss should decrease over time, while the training token accuracy should exhibit an upward trend, reflecting improved performance.
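The trend check described above can be sketched as follows. This is a simplified illustration rather than how the service computes its metrics: `token_accuracy` assumes accuracy means the fraction of positions where the predicted token matches the reference, and `trend` and `training_looks_healthy` are hypothetical helpers that compare the first and second halves of a metric series.

```python
def token_accuracy(predicted, reference):
    """Fraction of positions where the predicted token equals the reference token."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("sequences must be non-empty and of equal length")
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(reference)


def trend(values):
    """Mean of the second half minus mean of the first half.
    Negative means the series is decreasing overall."""
    if len(values) < 2:
        raise ValueError("need at least two values to measure a trend")
    mid = len(values) // 2
    first, second = values[:mid], values[mid:]
    return sum(second) / len(second) - sum(first) / len(first)


def training_looks_healthy(train_loss, train_token_accuracy):
    """True when loss trends down and token accuracy trends up."""
    return trend(train_loss) < 0 and trend(train_token_accuracy) > 0


# Hypothetical metric values logged at four points during training.
loss = [2.1, 1.7, 1.4, 1.2]
accuracy = [0.55, 0.62, 0.68, 0.71]
print(training_looks_healthy(loss, accuracy))
```

Comparing halves rather than consecutive points makes the check less sensitive to step-to-step noise, at the cost of missing short-lived regressions.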

How to deploy the fine-tuned models in Azure Machine Learning Studio

After you have finished fine-tuning an Azure OpenAI model, you can find the registered model in the Models list under the name you gave it. Select the Deploy button to deploy the model to the Azure OpenAI Service, and provide an OpenAI resource and a deployment name. You can go to the Endpoints section in your workspace to find the models deployed to Azure OpenAI Service.

Fine-tuning best practices

When conducting fine-tuning on OpenAI models, it is important to adhere to best practices to ensure optimal outcomes while upholding ethical standards.

- Define Clear Objectives: Clearly define your fine-tuning objectives, whether it's improving task performance or adapting to domain-specific data.

- Start Small and Scale Gradually: Start with a small number of training examples, around 50 or 100, and expand as necessary if you see improvements. If no progress occurs, reassess the task setup or data structure before scaling. Keep in mind that quality matters more than quantity, and even a small set of high-quality examples can lead to successful fine-tuning results.

- Prioritize Quality Training Data: Use high-quality training data that is well-labeled and closely aligned with the task. Conduct essential data preprocessing and handle outliers to optimize model performance.

- Use Consistent System Messages: Maintain uniformity by employing the same system message during both fine-tuning and deployment.

- Align Training Data: Ensure that your training data closely resembles real user queries in terms of style and content.

- Establish a Baseline: Establish a baseline for comparison. Generate samples from both the base model and the fine-tuned model, and conduct a formal side-by-side comparison.

- Iterate on Hyperparameters: Begin with the default hyperparameters. Increase the number of epochs by 1 or 2 if the model is not aligning closely with the training data, or decrease it by 1 or 2 if the model's outputs are less diverse than expected.

- Explore Other Techniques: Experiment with prompt crafting, RAG (Retrieval Augmented Generation) and few-shot learning before fine-tuning to quickly establish a baseline and optimize resource usage.

- Cost-Efficiency with Smaller Models: Consider training smaller models to achieve the desired accuracy at a lower cost.

- Iterate and Collaborate: Fine-tune your model iteratively, starting with a cautious approach and refining it based on evaluation results. Collaborate closely with domain experts and stakeholders to refine fine-tuning objectives and validate model outputs.
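The "start small" advice in the list above can be sketched as a reproducible split: hold out a validation set, then draw a small training set from what remains. The function name `small_start_split`, the default of 50 training examples, and the 20% validation fraction are illustrative assumptions, not recommendations from the service.

```python
import random


def small_start_split(examples, n_train=50, validation_fraction=0.2, seed=0):
    """Shuffle deterministically, hold out a validation set,
    and return a small training subset drawn from the remainder."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * validation_fraction))
    validation = shuffled[:n_val]
    pool = shuffled[n_val:]
    return pool[:n_train], validation


# Hypothetical dataset of 500 example IDs.
dataset = list(range(500))
train, validation = small_start_split(dataset)
print(len(train), len(validation))
```

Keeping the validation set fixed while growing `n_train` across runs makes it easier to tell whether additional examples are actually helping.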

Want to learn more?

- Get started with fine-tuning Azure OpenAI models in Azure Machine Learning

- Explore SDK and CLI examples for Azure OpenAI models in the azureml-examples GitHub repo

- Azure OpenAI Service fine-tuning tutorial

- Learn more about Azure AI Content Safety