This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

We are excited to announce the general availability of Azure AI Content Safety, a new service that helps you detect and filter harmful user-generated and AI-generated content in your applications and services. Content Safety includes text and image detection to find content that is offensive, risky, or undesirable, such as profanity, adult content, gore, violence, hate speech, and more. You can also use our interactive Azure AI Content Safety Studio to view, explore, and try out sample code for detecting harmful content across different modalities.

Content Safety is Paramount in Today's World

The impact of harmful content on platforms extends far beyond user dissatisfaction. It can damage a brand's image, erode user trust, undermine long-term financial stability, and expose the platform to legal liability.

It is crucial to consider more than human-generated content, especially as AI-generated content becomes more prevalent. AI-generated outputs need to be accurate, reliable, and free of harmful or inappropriate material. Content safety protects users from misinformation and potential harm, upholds ethical standards, and builds trust in AI technologies. By focusing on content safety, we can create a safer digital environment that promotes responsible use of AI and safeguards the well-being of individuals and society as a whole.

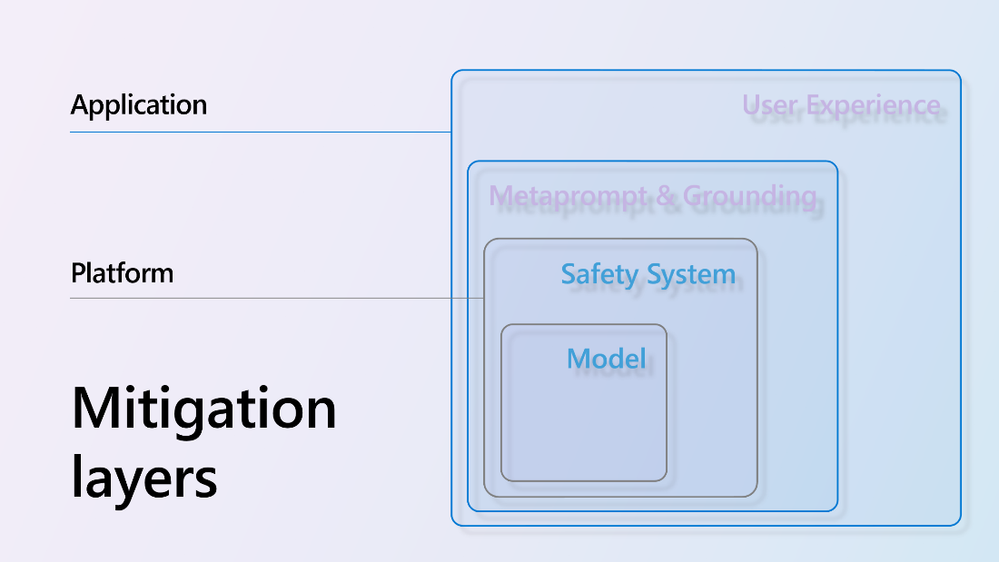

For these reasons, the Microsoft Responsible AI team is building a multi-layered system of robust safety models and technologies.

Explore the essential components of Azure AI Content Safety

Azure AI Content Safety has evolved into a robust tool for online safety in the digital age. Because it handles multiple content categories, languages, and threats, and moderates both text and visual content, it can help businesses redefine their approach to online safety. Here is a deeper dive into its key features:

- Multilingual Proficiency: In today's globalized digital ecosystem, content is produced in countless languages. Azure AI Content Safety can moderate content across multiple languages, helping create a safer environment that respects regional nuances and cultural sensitivities.

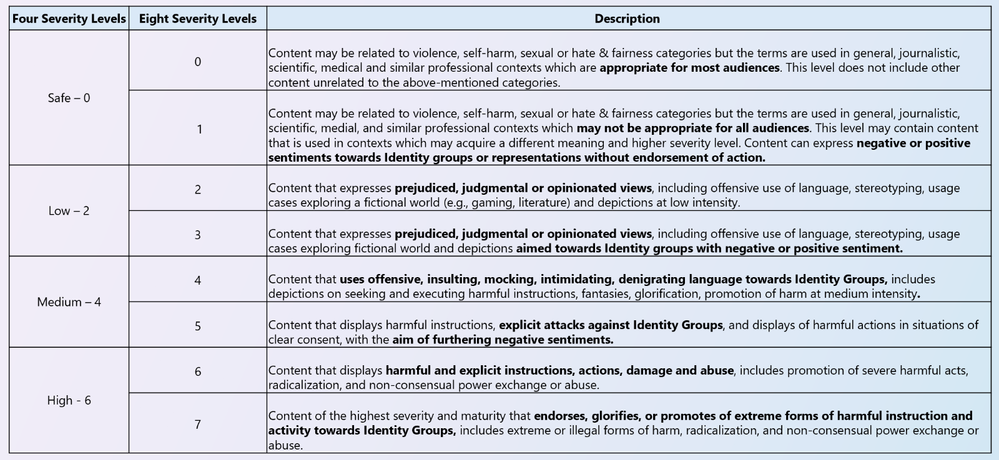

- Severity Indication: Our content safety product offers a 'Severity' metric that indicates how severe a piece of content is, on a scale from 0 to 7. To suit different needs, it offers both four-level granularity for quick testing and eight-level granularity for advanced analysis. This flexibility lets businesses quickly assess the threat posed by specific content, develop appropriate strategies to address it, and take informed, proactive measures to protect the safety and integrity of their digital environment.

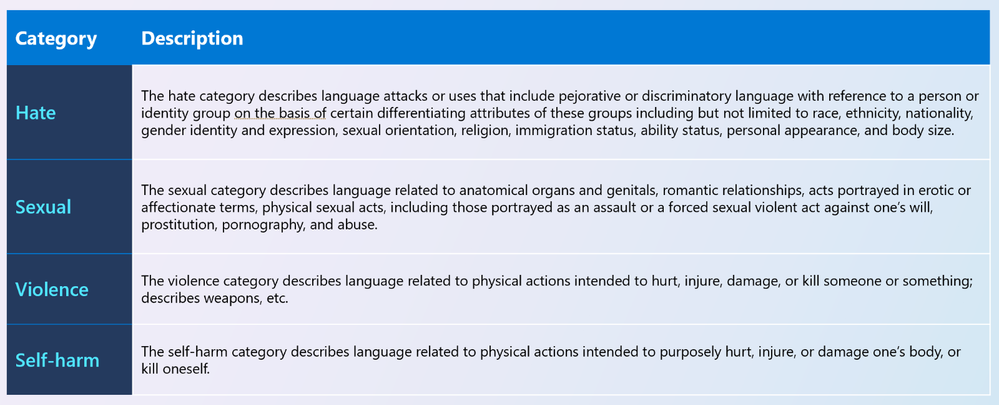

- Multi-Category Filtering: Azure AI Content Safety can identify and categorize harmful content across several critical domains:

  - Hate: Content that promotes discrimination, prejudice, or animosity toward individuals or groups based on race, religion, gender, or other identity-defining characteristics.

  - Violence: Content displaying or advocating physical harm, threats, or violent actions against oneself or others.

  - Self-Harm: Material that depicts, glorifies, or suggests acts of self-injury or suicide.

  - Sexual: Explicit or suggestive content, including, but not limited to, nudity and intimate media.

- Text and image detection: Digital communication relies heavily on visuals as well as text. Azure AI Content Safety's image features use advanced AI algorithms to scan, analyze, and moderate visual content, providing comprehensive safety coverage across both modalities.
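
To make the severity scale and harm categories concrete, here is a minimal Python sketch of how an application might act on the per-category severities the service returns. It assumes that the four-level output uses the values 0, 2, 4, and 6, and that the eight-level scale (0 to 7) collapses onto them pairwise; it also assumes the category names are spelled `Hate`, `SelfHarm`, `Sexual`, and `Violence`. The helpers `to_four_levels` and `apply_policy`, the `Decision` type, and the threshold values are hypothetical illustrations, not part of the service or its SDK.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Harm categories described above, spelled as assumed for the REST API.
CATEGORIES = ("Hate", "SelfHarm", "Sexual", "Violence")


def to_four_levels(severity: int) -> int:
    """Collapse an eight-level severity (0-7) onto the four-level scale (0, 2, 4, 6).

    Assumption: each pair of adjacent levels maps to the lower, even value.
    """
    if not 0 <= severity <= 7:
        raise ValueError(f"severity must be in 0..7, got {severity}")
    return severity - severity % 2


@dataclass
class Decision:
    allowed: bool
    # Categories whose severity met or exceeded the configured threshold.
    violations: dict[str, int] = field(default_factory=dict)


def apply_policy(severities: dict[str, int],
                 thresholds: dict[str, int],
                 default_threshold: int = 4) -> Decision:
    """Hypothetical policy: block when any category reaches its threshold."""
    violations = {}
    for category, severity in severities.items():
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        if severity >= thresholds.get(category, default_threshold):
            violations[category] = severity
    return Decision(allowed=not violations, violations=violations)


# Usage: a stricter limit for self-harm, the default limit (4) elsewhere.
decision = apply_policy(
    {"Hate": 0, "SelfHarm": 2, "Sexual": 0, "Violence": 4},
    thresholds={"SelfHarm": 2},
)
print(decision.allowed, decision.violations)  # False {'SelfHarm': 2, 'Violence': 4}
```

Per-category thresholds let an application be stricter about one harm (here, self-harm) without over-blocking the others; the right values depend on your audience and should come from your own testing.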

Our Customers

South Australia Department of Education

Earlier this year, South Australia's Department of Education embraced generative AI in classrooms. However, the responsible implementation of this technology was a major concern. Simon Chapman, the department's director of digital architecture, highlighted the need to safeguard students from potentially harmful content generated by large language models trained on unfiltered internet data. Now, after conducting a pilot of EdChat, an AI-powered chatbot, involving 1,500 students and 150 teachers across 8 secondary schools, the department is evaluating the results. Azure AI Content Safety has received positive feedback for blocking inappropriate input and filtering harmful responses. This capability has enabled educators to focus on the educational benefits of EdChat.

"We wouldn't have been able to proceed at this pace without having the content safety service in there from day one…It’s a must-have."

– Simon Chapman, the department's director of digital architecture

Shell

Shell has developed a generative AI platform called Shell E, which allows teams to build and deploy applications based on large language models. The platform helps employees access and use company knowledge more efficiently, with use cases ranging from drafting and summarizing content to code generation and knowledge management.

“Azure AI Content Safety plays a pivotal role in Shell E platform governance by enabling text and image generation while restricting inappropriate or harmful responses.”

– Siva Chamarti, senior technical manager AI Systems, Shell

Get started with Azure AI Content Safety

Azure AI Content Safety is designed to be easy to use and offers several ways to integrate its capabilities into your workflows.

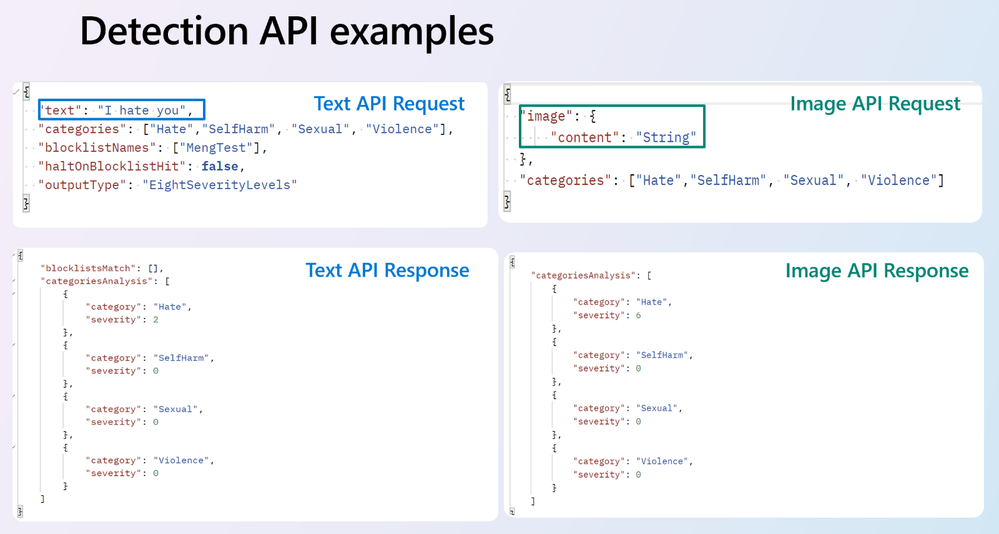

API/SDK Integration

With the Azure AI Content Safety API/SDK, you can integrate content safety checks into your applications or platforms. Send your content for analysis and receive a response indicating its severity level in each harm category. The SDK streamlines integration and exposes additional features and functionality. Refer to the quickstart documentation to try out the API/SDK.
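
As a rough illustration of the send-and-receive round trip, here is a minimal sketch that uses only the Python standard library. The `/contentsafety/text:analyze` path, the `2023-10-01` api-version, the `Ocp-Apim-Subscription-Key` header, the `categories`/`outputType` request fields, and the `categoriesAnalysis` response field reflect our understanding of the GA REST API and are assumptions to verify against the API reference. The function names and the example endpoint are hypothetical. For production code, the official SDK is likely the better choice.

```python
from __future__ import annotations

import json
import urllib.request

# Assumed GA REST API version; check the API reference for the current value.
API_VERSION = "2023-10-01"


def build_text_request(endpoint: str, key: str, text: str,
                       output_type: str = "FourSeverityLevels") -> urllib.request.Request:
    """Build (but do not send) a POST request asking the service to analyze `text`."""
    url = f"{endpoint.rstrip('/')}/contentsafety/text:analyze?api-version={API_VERSION}"
    body = {
        "text": text,
        "categories": ["Hate", "SelfHarm", "Sexual", "Violence"],
        "outputType": output_type,  # assumed alternative: "EightSeverityLevels" (0-7)
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )


def severities_from_response(payload: dict) -> dict[str, int]:
    """Flatten the assumed `categoriesAnalysis` array into {category: severity}."""
    return {item["category"]: item["severity"]
            for item in payload.get("categoriesAnalysis", [])}


def analyze_text(endpoint: str, key: str, text: str) -> dict[str, int]:
    """Send the request and return per-category severities (needs network access)."""
    request = build_text_request(endpoint, key, text)
    with urllib.request.urlopen(request, timeout=10) as response:
        return severities_from_response(json.load(response))


# Illustrative only: build a request and parse a hand-written payload of the assumed shape.
req = build_text_request("https://<your-resource>.cognitiveservices.azure.com", "<key>", "Sample text")
print(req.full_url)
print(severities_from_response({"categoriesAnalysis": [{"category": "Hate", "severity": 0}]}))  # {'Hate': 0}
```

Keeping request construction separate from `analyze_text` makes the request shape easy to inspect and test without calling the service; the resulting severities can then feed whatever blocking policy your application uses.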

Azure AI Content Safety Studio

For a more interactive experience, Azure AI Content Safety Studio offers a comprehensive web-based interface for rapid content safety testing. Its monitoring dashboard lets you track and manage content safety standards, and its user-friendly, customizable environment helps you maintain content safety efficiently.

Whether you choose to leverage the API/SDK integration for automated content analysis or utilize the Content Safety Studio for a more hands-on approach, Azure AI Content Safety offers a flexible and user-centric solution to meet your content safety needs.

Learn more

Azure AI Content Safety is a powerful tool for flagging content in industries such as Media & Entertainment, and in any other field that requires Safety & Security or Digital Content Management. We eagerly anticipate seeing your innovative implementations!

- ACOM page: https://aka.ms/acs-acom

- Documentation: https://aka.ms/acs-doc

- Studio: https://aka.ms/acsstudio

- API reference: https://aka.ms/acs-api