This post has been republished via RSS; it originally appeared at: Healthcare and Life Sciences Blog articles.

In the realm of biomedical research and healthcare analytics, one common task is selecting specific patient cohorts from larger datasets. These cohorts often serve as the foundation for studies, clinical trials, and population analyses. The ability to construct patient cohorts accurately and efficiently is crucial for gaining insights, conducting research, and improving patient care.

Several characteristics of the healthcare field make cohort generation more challenging. Many datasets have restricted access and, in some cases, even their data schemas are not available to researchers. A dataset might also contain Protected Health Information (PHI), so each request and its results require thorough validation. In addition, low-code/no-code users make up a significant share of the researchers selecting patient cohorts, so we can’t assume they are able to write or validate queries even when data schemas can be shared. Today, it can take hours of phone calls or emails between a researcher and a data custodian just to check whether a dataset contains the data required to support the researcher’s study.

With the recent advances in natural language processing offered by Large Language Models (LLMs) such as GPT-4, there is potential to empower researchers to query datasets independently using natural language (NL), reducing reliance on manual translation and on data custodians, and thereby increasing the overall efficiency of data retrieval. The focus of our exploratory work was to evaluate how the cohort selection experience might be improved by adding LLM-powered steps that clarify data requirements. In this article, we describe the flow and the specific design that emerged from our effort to model an LLM-powered cohort builder.

Low-code user use case

Throughout our work we focused on the researcher experience and used the following assumptions:

- The researcher has domain knowledge and defines the cohort requirements in natural language.

- The researcher is a low-code/no-code user.

- The researcher does not have direct access to the data and may not know the dataset structure (for example, the number of tables or the table schemas). The cohort-building backend (the System) is responsible for final query validation and for executing the actual query on the data.

Initial experiment: from natural language to runnable query

Code generation is one of the best-known applications of LLMs, so we first attempted to generate SQL queries directly from natural language requests. Our expectation was to obtain a query that could be executed directly on a database. However, even with strict instructions and examples in the prompt, we encountered several problems with this approach:

- Fake columns: an additional field that is not present in the table schema consistently appeared in the WHERE clause.

- The JOIN structure was often inadequate.

- Logical operators, such as AND, OR and NOT, were applied incorrectly in some cases.

A researcher could fix these issues if the LLM were used in copilot mode and the researcher were familiar with the dataset structure, but in our use case we cannot rely on that. Additionally, the generated string containing the SQL query must be parsed and validated again before it runs on a real database, at the very least to make sure that no malicious code has been injected.
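As a minimal sketch of that last check (not the System described in this post), the Python snippet below compiles an LLM-generated query against an empty, in-memory SQLite copy of a schema instead of the real data. A fake column fails with "no such column", an authorizer callback rejects anything other than reads, and Python's sqlite3 module refuses to run more than one statement per call. The schema, table, and column names are hypothetical, and SQLite stands in for whatever engine the target system actually uses.

```python
import sqlite3

# Hypothetical schema used only for illustration; a real system would load its own.
SCHEMA = """
CREATE TABLE patients (patient_id INTEGER PRIMARY KEY, gender TEXT, age INTEGER);
CREATE TABLE medications (patient_id INTEGER, drug TEXT);
"""

# Authorizer action codes that a read-only query legitimately needs.
READ_ONLY_ACTIONS = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ, sqlite3.SQLITE_FUNCTION}


def validate_generated_sql(sql: str) -> tuple[bool, str]:
    """Compile `sql` against an empty copy of the schema without touching real data."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)

        def read_only(action, arg1, arg2, db_name, trigger):
            # Deny writes, schema changes, PRAGMAs, ATTACH and everything else.
            return sqlite3.SQLITE_OK if action in READ_ONLY_ACTIONS else sqlite3.SQLITE_DENY

        conn.set_authorizer(read_only)
        # EXPLAIN forces compilation (name resolution and authorization)
        # without running the query itself.
        conn.execute("EXPLAIN " + sql)
        return True, "ok"
    except (sqlite3.Error, sqlite3.Warning) as exc:
        # Unknown columns/tables, denied actions, or more than one statement.
        return False, str(exc)
    finally:
        conn.close()


print(validate_generated_sql("SELECT patient_id FROM patients WHERE smoking_status = 'yes'"))
```

A check like this catches schema and safety problems, but not semantic ones such as a wrong JOIN or a misplaced OR: a query that compiles is not necessarily the query the researcher meant.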

Multi-step approach: introducing the request card

To address the limitations encountered in the initial experiment, we developed a machine-friendly structure, the request card, that (a) limits the variability of fields and (b) preserves the logic of the original request. To create the card, we used a two-step, LLM-powered approach that mirrors human reasoning:

- Extract inclusion and exclusion criteria from a natural language request.

- Generate a request card from the inclusion and exclusion criteria produced in the previous step, requiring the model to use a predefined dictionary of “concept keys”.

The interactive process keeps the user in the loop to validate the generated request, which is still human-readable. Once the researcher is satisfied with the request card, it is passed on for further processing: (i) mapping the concept keys to the data table schema, (ii) validating and aligning values if required, and (iii) converting the result into a schema- and technology-dependent query (such as SQL) specific to the target system. Because the request card format preserves the logical context (discussed in detail below), converting the card is a simple task that requires neither an LLM nor researcher validation.

This approach allows the researcher to validate that the card accurately reflects the criteria, and to refine it if needed, without having to understand SQL syntax. It also shifts the responsibility for writing and validating queries from low-code users to the System. The suggested cohort selection flow is presented in Fig. 1.

Figure 1: Multi-step cohort selection flow. The researcher types in a cohort description in natural language. The request is transformed into eligibility criteria lists that the researcher can validate. The criteria lists are then transformed into a request card representing the request logic in disjunctive normal form. Once approved by the researcher, the request card is passed to a module that converts it into a SQL query. Researcher validation is not expected at this stage; the cohort-building tool is responsible for validation. When the query is executed, its results are presented to the researcher for cohort size validation.
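To illustrate why the final conversion needs no LLM, here is a minimal, hypothetical sketch that turns a request card into a parameterized SQL query. It assumes a single flat patients table, a made-up mapping from concept keys to column names, and values written as (operator, value) pairs drawn from a whitelisted set of operators. A real System would resolve keys against each target schema, and multi-valued fields such as medications would typically need subqueries rather than a plain column comparison.

```python
from typing import Any

# Hypothetical concept-key -> column mapping for one flat table; in practice the
# System would derive this from the target dataset's schema.
COLUMN_MAP = {"gender": "gender", "age": "age_years", "drug": "drug_name"}
ALLOWED_OPS = {"=", "!=", ">", ">=", "<", "<="}

# A card is a list of {"include": {...}, "exclude": {...}} groups (an OR of ANDs);
# each value is assumed to be an (operator, value) pair.
Card = list[dict[str, dict[str, tuple[str, Any]]]]


def card_to_sql(card: Card, table: str = "patients") -> tuple[str, list[Any]]:
    """Deterministically translate a DNF request card into a parameterized SELECT."""
    disjuncts: list[str] = []
    params: list[Any] = []
    for group in card:
        terms: list[str] = []
        for section, negate in (("include", False), ("exclude", True)):
            for key, (op, value) in group.get(section, {}).items():
                if key not in COLUMN_MAP:
                    raise KeyError(f"concept key {key!r} is not mapped for this dataset")
                if op not in ALLOWED_OPS:
                    raise ValueError(f"unsupported operator {op!r}")
                term = f"({COLUMN_MAP[key]} {op} ?)"
                # Include members are ANDed as-is; exclude members become AND NOT.
                terms.append(f"NOT {term}" if negate else term)
                params.append(value)  # values are bound, never spliced into SQL
        if not terms:
            raise ValueError("empty include/exclude group")
        disjuncts.append("(" + " AND ".join(terms) + ")")
    if not disjuncts:
        raise ValueError("empty request card")
    return f"SELECT * FROM {table} WHERE " + " OR ".join(disjuncts), params


card = [{"include": {"gender": ("=", "woman"), "age": (">", 20)},
         "exclude": {"drug": ("=", "heparin")}}]
sql, params = card_to_sql(card)
print(sql)
# SELECT * FROM patients WHERE ((gender = ?) AND (age_years > ?) AND NOT (drug_name = ?))
print(params)  # ['woman', 20, 'heparin']
```

Binding values as parameters, rather than formatting them into the string, keeps user-supplied values from ever being interpreted as SQL.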

Step 1: From natural language to criteria lists

The goal of the first step is to structure the researcher’s request by splitting it into separate inclusion and exclusion lists. Eligibility criteria lists are an integral part of clinical trial descriptions, and this format is well known in the healthcare and biomedical fields. Example request-response pairs, with responses produced by the GPT-4 model, are presented in the next section.

Figure 2: Transforming Natural Language request into eligibility criteria.
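A fixed layout for the Step 1 output also makes it easy to process. As a sketch, assuming the model is instructed to answer with an "Inclusion criteria:" section and an "Exclusion criteria:" section, each followed by "-" bullets (the layout used in the examples in the next section), the response can be parsed into two lists for display, editing, and the Step 2 prompt. The dataclass and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CriteriaLists:
    inclusion: list[str] = field(default_factory=list)
    exclusion: list[str] = field(default_factory=list)


def parse_criteria(text: str) -> CriteriaLists:
    """Parse 'Inclusion criteria:' / 'Exclusion criteria:' sections of '-' bullets."""
    result = CriteriaLists()
    current = None  # the list that bullets are currently appended to
    for raw_line in text.splitlines():
        line = raw_line.strip()
        header = line.lower()
        if header.startswith("inclusion criteria"):
            current = result.inclusion
        elif header.startswith("exclusion criteria"):
            current = result.exclusion
        elif line.startswith("-") and current is not None:
            item = line[1:].strip()
            if item:
                current.append(item)
    return result


response = """Inclusion criteria:
- have diabetes or hypertension
- older than 50
Exclusion criteria:
"""
lists = parse_criteria(response)
print(lists.inclusion)  # ['have diabetes or hypertension', 'older than 50']
print(lists.exclusion)  # []
```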

Step 2: From criteria lists to request card

A crucial challenge in transitioning between steps was maintaining the logical context of the original natural language request. To overcome this, we employed Disjunctive Normal Form (DNF): an OR of AND-clauses in which NOT is applied only to individual conditions. It is well known that any propositional logical statement can be converted into an equivalent DNF (see Logic and Declarative Language by M. Downward, or any other textbook on mathematical logic). By representing the request in DNF, we preserved its logical context and ensured that the generated queries reflected the intended criteria.
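For readers who want to see the conversion itself, here is a minimal Python sketch of the textbook procedure: push negations down to individual conditions with De Morgan's laws, then distribute AND over OR. The nested-tuple encoding is an assumption made for this illustration. In the approach described here the LLM writes the DNF card directly, so a routine like this is more likely to help with preparing reference cards or tests. Note that distribution can grow the formula exponentially in the worst case.

```python
from itertools import product

# Assumed encoding: ("and", a, b, ...), ("or", a, b, ...), ("not", a);
# any other value (here a string) is an atomic condition such as "age > 50".


def to_nnf(expr, negate=False):
    """Push negations down to atoms (negation normal form) using De Morgan's laws."""
    if isinstance(expr, tuple) and expr[0] == "not":
        return to_nnf(expr[1], not negate)
    if isinstance(expr, tuple) and expr[0] in ("and", "or"):
        op = expr[0]
        if negate:
            op = "or" if op == "and" else "and"
        return (op, *(to_nnf(e, negate) for e in expr[1:]))
    return ("not", expr) if negate else expr


def to_dnf(expr):
    """Return a list of conjunctions (lists of literals) whose OR equals expr."""
    expr = to_nnf(expr)
    if isinstance(expr, tuple) and expr[0] == "or":
        return [conj for e in expr[1:] for conj in to_dnf(e)]
    if isinstance(expr, tuple) and expr[0] == "and":
        # Distribute AND over OR: pick one conjunction from each operand's DNF.
        parts = [to_dnf(e) for e in expr[1:]]
        return [[lit for conj in combo for lit in conj] for combo in product(*parts)]
    return [[expr]]  # a literal: an atom or ("not", atom)


example_2 = ("and", ("or", "condition = diabetes", "condition = hypertension"), "age > 50")
print(to_dnf(example_2))
# [['condition = diabetes', 'age > 50'], ['condition = hypertension', 'age > 50']]
```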

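The implementation of DNF may vary, but for simplicity let’s assume that a [key: value] pair represents an expression stating that a field (key) has an exact value, without going into the details of how comparison operators are implemented. With that assumption, the request card has the following format and logical representation:

[{"include": {key_1: value_1, key_2: value_2, …}, "exclude": {key_3: value_3, …}}, {"include": {…}, "exclude": {…}}, …]

((key_1 = value_1) AND (key_2 = value_2) AND … AND NOT (key_3 = value_3) AND NOT …) OR (…) OR …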

In this structure, all members of the "include" group are combined with AND, all members of the "exclude" group are combined with AND NOT, and each further {"include": {…}, "exclude": {…}} pair is appended with OR.

For example:

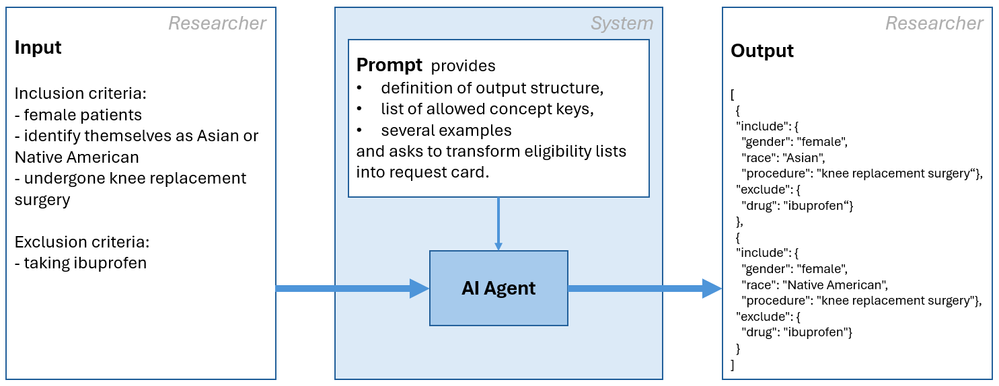
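The same semantics can be written as a small evaluator. This is a hypothetical sketch, not part of the described System: it checks a single patient record (a dict) against a card under the exact-value assumption above, treats a set-valued field such as a list of conditions as matching when it contains the value, and uses made-up keys and values.

```python
def holds(record: dict, key: str, value) -> bool:
    """Exact-value test; for set/list-valued fields, test membership instead."""
    actual = record.get(key)
    if isinstance(actual, (set, frozenset, list, tuple)):
        return value in actual
    return actual == value


def matches(record: dict, card: list[dict]) -> bool:
    """OR over groups; within a group, AND over include and AND NOT over exclude."""
    return any(
        all(holds(record, k, v) for k, v in group.get("include", {}).items())
        and not any(holds(record, k, v) for k, v in group.get("exclude", {}).items())
        for group in card
    )


# Hypothetical two-group card and patient records.
card = [
    {"include": {"gender": "woman", "condition": "diabetes"}, "exclude": {"drug": "heparin"}},
    {"include": {"condition": "hypertension"}, "exclude": {}},
]
print(matches({"gender": "woman", "condition": {"diabetes"}, "drug": {"metformin"}}, card))  # True
print(matches({"gender": "woman", "condition": {"diabetes"}, "drug": {"heparin"}}, card))  # False
print(matches({"gender": "man", "condition": {"hypertension"}, "drug": set()}, card))  # True
```

Writing "AND NOT x1 AND NOT x2" as "not any(...)" is just De Morgan's law applied to the exclude group.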

To limit the variability of fields in the DNF, we specified a list of allowed fields in our prompt. The prompt was based on the work of Cliff Wong et al. (Scaling Clinical Trial Matching Using Large Language Models: A Case Study in Oncology. Proceedings of the 8th Machine Learning for Healthcare Conference, PMLR 219). To illustrate the transformation of a human query into DNF, let's consider a couple of examples. Assume we have the following list of allowed keys: gender, race, age, drug, condition, procedure.

Figure 3: Transforming criteria lists into Disjunctive Normal Form.

Example 1

Human Query: "Find all women over 20 years of age who are not taking heparin."

Applying the DNF transformation, the query can be represented as follows:

(gender = "woman") AND (age > 20) AND NOT (drug = "heparin")

In the transformed query, the inclusion criteria (women and age > 20) are combined using the logical AND operator, while the exclusion criterion (taking heparin) is negated using the logical NOT operator and attached with AND.
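How the AND NOT part maps to SQL depends on the schema, which is one reason the conversion is left to the System. As an illustration only, assume a hypothetical schema in which medications live in a separate table with one row per patient and drug, plus some toy rows. A row-level translation of NOT (drug = "heparin") then keeps a woman who takes heparin and another drug, and the inner JOIN drops women with no medication records at all; a NOT EXISTS subquery expresses the intended patient-level exclusion.

```python
import sqlite3

# Hypothetical schema and toy data: one row per patient, one row per prescription.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE patients (patient_id INTEGER PRIMARY KEY, gender TEXT, age INTEGER);
CREATE TABLE medications (patient_id INTEGER, drug TEXT);
INSERT INTO patients VALUES
  (1, 'woman', 34), (2, 'woman', 45), (3, 'woman', 19), (4, 'man', 50), (5, 'woman', 60);
INSERT INTO medications VALUES
  (1, 'aspirin'), (2, 'heparin'), (2, 'aspirin'), (4, 'heparin');
""")

# Row-level translation: patient 2 slips in through her aspirin row, and
# patient 5 (no medication rows at all) is lost by the inner JOIN.
naive_sql = """
SELECT DISTINCT p.patient_id FROM patients p
JOIN medications m ON m.patient_id = p.patient_id
WHERE p.gender = 'woman' AND p.age > 20 AND NOT (m.drug = 'heparin')
"""

# Patient-level translation of AND NOT: exclude anyone with any heparin record.
patient_level_sql = """
SELECT p.patient_id FROM patients p
WHERE p.gender = ? AND p.age > ?
  AND NOT EXISTS (
    SELECT 1 FROM medications m WHERE m.patient_id = p.patient_id AND m.drug = ?
  )
"""

naive_ids = sorted(row[0] for row in conn.execute(naive_sql))
patient_level_ids = sorted(
    row[0] for row in conn.execute(patient_level_sql, ("woman", 20, "heparin"))
)
print(naive_ids)  # [1, 2]
print(patient_level_ids)  # [1, 5]
conn.close()
```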

Eligibility lists and request card generated by GPT-4 model

| Request | Criteria lists | Request card |
| --- | --- | --- |
| Find all women over 20 years of age who are not taking heparin. | Inclusion criteria: women over 20 years of age. Exclusion criteria: taking heparin. | |

Example 2

Human Query: "Retrieve all patients who have diabetes or hypertension and are older than 50."

Applying the DNF transformation, the query can be represented as follows:

((age > 50) AND (condition = "diabetes"))

OR

((age > 50) AND (condition = "hypertension"))

In this example, the two conditions are alternatives: the logical OR operator allows the selection of patients with either diabetes or hypertension. Because the age criterion applies to both alternatives, it is repeated in each conjunction and combined with the condition criterion using the logical AND operator.
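Because the DNF must be logically equivalent to the request, a rewrite over a handful of atomic conditions can be checked exhaustively. The sketch below, with hypothetical function names, compares a reading of the Example 2 request with its DNF over all 2^3 truth assignments, and shows that a precedence slip of the kind mentioned earlier (diabetes OR (hypertension AND age > 50)) is caught.

```python
from itertools import product


def request(older_than_50: bool, diabetes: bool, hypertension: bool) -> bool:
    # "have diabetes or hypertension and are older than 50"
    return (diabetes or hypertension) and older_than_50


def dnf(older_than_50: bool, diabetes: bool, hypertension: bool) -> bool:
    # ((age > 50) AND (condition = "diabetes")) OR ((age > 50) AND (condition = "hypertension"))
    return (older_than_50 and diabetes) or (older_than_50 and hypertension)


def misparsed(older_than_50: bool, diabetes: bool, hypertension: bool) -> bool:
    # A precedence slip: diabetes OR (hypertension AND age > 50)
    return diabetes or (hypertension and older_than_50)


def equivalent(f, g, n_atoms: int = 3) -> bool:
    """Compare two Boolean functions on every truth assignment of their atoms."""
    return all(f(*values) == g(*values) for values in product([False, True], repeat=n_atoms))


print(equivalent(request, dnf))  # True
print(equivalent(request, misparsed))  # False
```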

Eligibility lists and request card generated by GPT-4 model

| Request | Criteria lists | Request card |
| --- | --- | --- |
| Retrieve all patients who have diabetes or hypertension and are older than 50. | Inclusion criteria: have diabetes or hypertension; older than 50. Exclusion criteria: none. | |

Thoughts on validation and prompt evaluation

Our approach provides several points where human or system validation can be performed. The main goal of human validation of the request card is to confirm that the card matches the intent of the natural language request. At this checkpoint the researcher can also make changes or refinements if needed.

We observed cases where keys not on the allowed list were injected into the request card; see the key ‘location’ in Example 3 below. We still consider this request card a correct description of the researcher’s request. It is passed to a module (not powered by LLMs) responsible for key validation and for query generation based on the correspondence between keys and the data schema, with no researcher involvement. Keeping the request card aligned with the researcher’s request, rather than with a particular data schema, can be very useful when searching across multiple datasets with different schemas.

Example 3

Request card created by GPT-4 model; the key location doesn’t belong to the allowed list of keys

| Request | Criteria lists | Request card |
| --- | --- | --- |
| Find all women over 60 years of age undergone knee replacement in Overlake Hospital | Inclusion criteria: women; over 60 years of age; undergone knee replacement; in Overlake Hospital. | |
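A minimal sketch of that key check, using hypothetical names and a hypothetical rendering of the Example 3 request as a card: keys are compared both with the allowed list from the prompt and with the columns a particular dataset exposes. A key such as location can then be flagged as outside the dictionary yet still be served by a dataset that happens to record the facility, which is one way the card-first design helps when searching across datasets with different schemas.

```python
# Allowed concept keys, as listed earlier in this post.
ALLOWED_KEYS = {"gender", "race", "age", "drug", "condition", "procedure"}


def card_keys(card: list[dict]) -> set[str]:
    """All concept keys used in any include or exclude group of a card."""
    return {
        key
        for group in card
        for section in ("include", "exclude")
        for key in group.get(section, {})
    }


def route_keys(card: list[dict], dataset_columns: dict[str, str]) -> dict[str, list[str]]:
    """Report keys outside the prompt dictionary and keys a given dataset cannot map."""
    keys = card_keys(card)
    return {
        "outside_dictionary": sorted(keys - ALLOWED_KEYS),
        "unmapped_in_dataset": sorted(keys - set(dataset_columns)),
    }


# Hypothetical card for Example 3; values are illustrative strings.
example_3 = [{
    "include": {"gender": "woman", "age": "> 60", "procedure": "knee replacement",
                "location": "Overlake Hospital"},
    "exclude": {},
}]

# Two hypothetical datasets with different key-to-column mappings.
dataset_a = {"gender": "sex", "age": "age_years", "procedure": "proc_name", "location": "facility"}
dataset_b = {"gender": "sex", "age": "age_years", "procedure": "proc_name"}

print(route_keys(example_3, dataset_a))
# {'outside_dictionary': ['location'], 'unmapped_in_dataset': []}
print(route_keys(example_3, dataset_b))
# {'outside_dictionary': ['location'], 'unmapped_in_dataset': ['location']}
```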

The last validation point, when the cohort size is returned, allows the researcher to review the cohort size and its sufficiency for research purposes, and to iterate through the previous steps if needed.

We do not present evaluation results for the prompts here, since this is early exploratory work. Proper evaluation should be performed before using them in production settings. One possible approach would be to create a test set of requests with corresponding manually structured request cards and use it to compute precision and recall. The definition of a match in this case might vary (for example, exact vs. fuzzy) and will require careful consideration. Other factors to consider include variability in the list of allowed keys and whether to evaluate each step or only the final request card.
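One way to make that concrete, as a sketch only: flatten each card into (section, key, value) items, count a match when the same key has the same normalized value in the same include or exclude section, and micro-average precision and recall over a test set. Lowercasing and trimming values is a deliberately loose, hypothetical notion of match, and flattening ignores which OR group an item belongs to; both are the kind of choices that need careful consideration. The cards below are made up for illustration.

```python
def card_items(card: list[dict]) -> set[tuple[str, str, str]]:
    """Flatten a card into (section, key, normalized value) items; OR groups are ignored."""
    return {
        (section, key, str(value).strip().lower())
        for group in card
        for section in ("include", "exclude")
        for key, value in group.get(section, {}).items()
    }


def micro_precision_recall(pairs: list[tuple[list[dict], list[dict]]]) -> tuple[float, float]:
    """Micro-averaged precision and recall over (predicted card, reference card) pairs."""
    tp = fp = fn = 0
    for predicted, reference in pairs:
        p, r = card_items(predicted), card_items(reference)
        tp += len(p & r)  # items in both cards
        fp += len(p - r)  # predicted items absent from the reference
        fn += len(r - p)  # reference items the prediction missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical reference card (manually structured) and model prediction for Example 1.
reference = [{"include": {"gender": "woman", "age": "> 20"}, "exclude": {"drug": "heparin"}}]
predicted = [{"include": {"gender": "Woman", "age": "> 20", "smoker": "no"}, "exclude": {}}]
print(micro_precision_recall([(predicted, reference)]))  # (0.6666666666666666, 0.6666666666666666)
```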

Acknowledgements

We are grateful to Christopher Gooley for giving us access to infrastructure that made experimenting with LLMs fast and simple, to Jinpeng LV for sharing knowledge on prompt engineering and to Cliff Wong for discussions on request card format.