This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Create a Simple Speech REST API with Azure AI Speech Services

Explore speech recognition and speech synthesis with Azure AI Services. In this tutorial, you will learn how to create your own simple Speech REST API using Azure AI Speech together with either Azure OpenAI Service or the OpenAI API. Along the way, you will see how speech synthesis on Azure can serve as a building block for your own voice-enabled products.

Overview of the Demo REST API

The Speech API we are going to build has three simple endpoints. Each one handles a GET request and calls either Azure AI Speech Service or the OpenAI API. First, a recording of your own voice is sent to Azure AI Speech Service, which returns a transcript of what you said. Next, that transcript is sent to the OpenAI Chat Completions API, which returns a response. Finally, the response is sent to Azure AI Speech Service, which returns synthesized speech in a voice and accent of your choice, for example one that matches your region.
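Put another way, the three endpoints form a pipeline in which each stage’s output becomes the next stage’s input. A minimal sketch of that data flow follows, assuming each stage is wrapped in an async function. The names runVoicePipeline, speechToText, chat and textToSpeech are hypothetical and do not come from the tutorial’s repository.

```javascript
// Hypothetical sketch: the three stages are injected as async functions,
// so the pipeline itself does not depend on any Azure or OpenAI package.
async function runVoicePipeline(audio, { speechToText, chat, textToSpeech }) {
  // 1. Azure AI Speech: recorded audio -> transcript
  const transcript = await speechToText(audio)
  // 2. OpenAI Chat Completions: transcript -> reply text
  const reply = await chat(transcript)
  // 3. Azure AI Speech: reply text -> synthesized audio
  const speech = await textToSpeech(reply)
  return { transcript, reply, speech }
}

module.exports = { runVoicePipeline }
```

In the tutorial itself, each stage sits behind its own endpoint instead, which makes it easy to test the stages one at a time.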

Prerequisites

- Node.js 16 or later (a Long Term Support, LTS, release), which includes npm

- Visual Studio Code

- An OpenAI Platform account, if you don’t have access to Azure OpenAI Service

- An active Azure subscription

- Git installed, with your account configured

- A Postman account

- The Postman Agent installed, or the Postman extension for Visual Studio Code

- A voice recorder like Audacity

Create an Azure AI Speech Resource

First things first, we need to create an Azure AI Speech resource, which is where we will get our API keys from. To do this, open the Azure portal in your browser.

- In the search bar, type in Speech Service. Once the page loads, click the Create button. You will then be redirected to the Create Speech Services page.

- Choose a resource group or create a new one, then pick a region or leave the default, and make a note of the region you use. Type in a name for your service, choose your pricing tier, and click Review + create. Wait for the resource to deploy successfully, then click Go to resource.

- Now we are going to fetch our API keys. In the left pane, click Keys and Endpoint.

- Copy either of the two API keys and paste it into your notepad, or otherwise keep a note of it.

Get Your OpenAI API Keys

If you don’t have an OpenAI API key yet, head over to your OpenAI account portal and generate one. Note that you must have enough credits in your account to use the service. Alternatively, if you have access to Azure OpenAI Service, you can follow this guide on how to find your API keys after creating your own instance of the service.

Installing necessary libraries

This tutorial uses JavaScript and Node.js, so the following libraries are installed with the Node Package Manager (npm). If you would like to use another programming language, see the links in the Learn More section at the end of this tutorial.

- After creating a new project folder, open the terminal in VS Code. You can either clone this repository, https://github.com/tiprock-network/azureSpeechAPI, or code along.

- Let’s start by creating the necessary folders. In your VS Code editor, create the folders controllers, public, routes and speech_files. Inside the public folder, add a new subfolder named synthesized.

- Now run these commands in your terminal, one line at a time, to initialize the new project and install the necessary libraries.

npm init -y
npm i express dotenv
npm i request openai
npm i microsoft-cognitiveservices-speech-sdk
npm i nodemon -D

These commands install Express, our web framework; dotenv, which loads our environment variables; the request and openai libraries; and microsoft-cognitiveservices-speech-sdk. nodemon is installed as a development dependency so that the Express server restarts automatically when files change, instead of us restarting it manually.

- If you cloned the repository, you only need to run npm install, and all of these dependencies will be installed.

- If you are coding along, open the package.json file that was created and replace the scripts section with the code below.

"scripts": {
  "start": "node app",
  "dev": "nodemon app"
},

- Now create the app.js and .env files in the root folder.

Create your Voice Data

Open your favorite voice recording app; in this case I am using Audacity. Record a few short voice messages, each between 5 and 20 seconds long, saying something you want feedback on. Export them as .wav files into the speech_files folder, and give each file a short, relevant name based on what you recorded, e.g. greeting, food, etc.

In case you cloned the repository, there are a few .wav files pre-recorded and you can utilize them in this project.
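Because the speech-to-text step reads these recordings as WAV input, it can help to check them before calling the service. The following sketch, assuming standard RIFF/WAVE files such as the ones Audacity exports, reads each file’s header with Node’s built-in fs module and flags recordings outside the suggested 5 to 20 second range. wavInfo, isGoodLength and checkRecordings are hypothetical helpers, not part of the tutorial’s repository.

```javascript
const fs = require('fs')
const path = require('path')

// Parse the RIFF/WAVE header and return format details plus duration in seconds.
function wavInfo(buf) {
  if (buf.length < 12 || buf.toString('ascii', 0, 4) !== 'RIFF' || buf.toString('ascii', 8, 12) !== 'WAVE') {
    throw new Error('Not a RIFF/WAVE file')
  }
  let offset = 12
  let fmt = null
  let dataSize = null
  // Walk the chunks until the "data" chunk; skip anything else (e.g. LIST metadata).
  while (offset + 8 <= buf.length) {
    const id = buf.toString('ascii', offset, offset + 4)
    const size = buf.readUInt32LE(offset + 4)
    const body = offset + 8
    if (id === 'fmt ') {
      fmt = {
        audioFormat: buf.readUInt16LE(body), // 1 = PCM
        channels: buf.readUInt16LE(body + 2),
        sampleRate: buf.readUInt32LE(body + 4),
        byteRate: buf.readUInt32LE(body + 8), // bytes of audio per second
        bitsPerSample: buf.readUInt16LE(body + 14),
      }
    } else if (id === 'data') {
      dataSize = size
      break
    }
    offset = body + size + (size % 2) // chunks are padded to an even length
  }
  if (!fmt || dataSize === null) throw new Error('Missing fmt or data chunk')
  return { ...fmt, durationSeconds: dataSize / fmt.byteRate }
}

// True when the recording length falls inside the suggested range.
function isGoodLength(info, minSeconds = 5, maxSeconds = 20) {
  return info.durationSeconds >= minSeconds && info.durationSeconds <= maxSeconds
}

// Check every .wav file in a folder and report its length.
function checkRecordings(dir) {
  return fs.readdirSync(dir)
    .filter((name) => name.toLowerCase().endsWith('.wav'))
    .map((name) => {
      const info = wavInfo(fs.readFileSync(path.join(dir, name)))
      return { name, seconds: info.durationSeconds, ok: isGoodLength(info) }
    })
}

module.exports = { wavInfo, isGoodLength, checkRecordings }
```

From the project root, calling checkRecordings('speech_files') returns one entry per recording, with ok set to false for any clip that is too short or too long.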

Create REST API using Express.js

- Open app.js and add the following code to create an express server.

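const express = require('express')
const dotenv = require('dotenv')

// Load the variables from .env into process.env
dotenv.config()

const app = express()

// Serve files in public/ (including public/synthesized) as static assets
app.use(express.static('public'))

// Mount the speech routes under /api
app.use('/api', require('./routes/speechRoute'))

const PORT = process.env.PORT || 5005
app.listen(PORT, () => console.log(`Service running on port - ${PORT}...`))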

- Open .env and add the following variables. Remember to add the region that you chose as shown below.

PORT=5001
API_KEY_SPEECH=add speech service api key here
API_SPEECH_REGION=eastus
API_KEY_OPENAI=add openai api key here
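A missing or placeholder value in .env usually shows up only as an authentication error on the first request. As an optional safeguard, the hypothetical helper below lists the required variables that are unset or still hold an “add ... here” placeholder, so the server can fail at startup instead. PORT is left out because app.js falls back to 5005 when it is not set.

```javascript
// Variables the tutorial's .env defines for the Speech and OpenAI services.
const REQUIRED_ENV = ['API_KEY_SPEECH', 'API_SPEECH_REGION', 'API_KEY_OPENAI']

// Return the names that are unset, blank, or still hold an "add ... here" placeholder.
function missingEnv(env = process.env, names = REQUIRED_ENV) {
  return names.filter((name) => {
    const value = (env[name] || '').trim()
    return value === '' || /^add .* here$/i.test(value)
  })
}

// Throw a readable error so the server fails at startup rather than on the first request.
function assertEnv(env = process.env) {
  const missing = missingEnv(env)
  if (missing.length > 0) {
    throw new Error(`Missing or placeholder values in .env: ${missing.join(', ')}`)
  }
}

module.exports = { missingEnv, assertEnv }
```

For example, if this code lives in a hypothetical checkEnv.js, app.js could call require('./checkEnv').assertEnv() right after dotenv.config().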

- Next, create the routes. In the routes folder, create the file speechRoute.js and add the following code, which maps each GET endpoint to its controller.

const express = require('express')
const router = express.Router()

// Controllers; the paths must match the file names exactly on case-sensitive systems
const speech2text = require('../controllers/speechtotextController')
const textcompletion = require('../controllers/chatCompletionController')
const text2speech = require('../controllers/texttospeechController')

// Map each GET endpoint to its controller
router.get('/voicespeech', speech2text)
router.get('/completetext', textcompletion)
router.get('/talk', text2speech)

module.exports = router

- In the controllers folder, create three files named chatCompletionController.js, speechtotextController.js and texttospeechController.js, and add the following code to each, respectively.

Code for chat completion API with OpenAI

Code for Speech to Text using Azure AI Speech Service Node.js Library

Note that you can choose from a wide variety of languages and accents; for US English, for example, you would use en-US. The example below uses English (Kenya), en-KE, to recognize a Kenyan accent. For the full list of supported languages, check out Language and voice support for the Speech service.

Code for Text to Speech using Azure AI Speech Service Node.js Library

Test Your API

Let’s head over to the terminal and start the app in development mode by running npm run dev, as shown below.

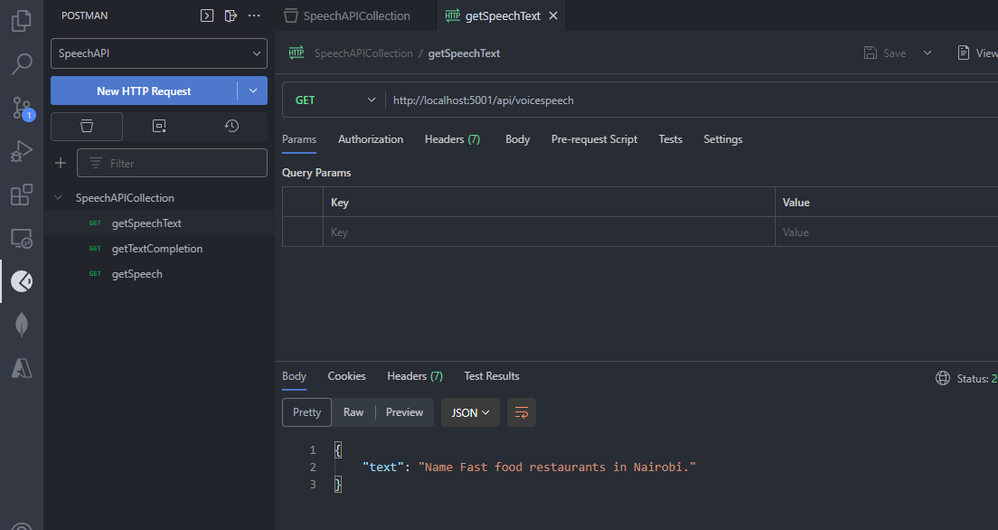

After the “Service running on port - 5001...” message appears in the terminal, open the Postman extension by clicking the Postman icon in the left pane of VS Code, and let’s test our endpoints. To test an endpoint, create a new workspace, start a new collection, and then add a new request. Note that you will be prompted to log in to your account whether you are using the desktop client or the Visual Studio Code extension.

Notice in the image that my endpoint, http://localhost:5001/api/voicespeech, returns the transcript of the .wav file whose path I set in the speechtotextController.js file.

You can change this path to any of the .wav files you recorded or, if you cloned the repository, to any of the included audio files.

Getting Chat Completion from OpenAI API

The next endpoint, http://localhost:5001/api/completetext, gets a chat completion or chat response from OpenAI API.

To get completions and chat completions from Azure OpenAI Service instead, read the documentation for the Azure OpenAI client library for JavaScript.

Get Voice Data Generated by Azure AI Speech Synthesis

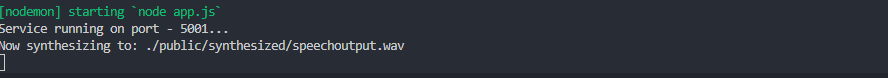

Run a similar GET request against the endpoint http://localhost:5001/api/talk. When the request completes with a 200 OK response code, the following response body should be returned.

Your VS Code terminal should also show output similar to the following.

Open the new speechoutput.wav file in the public/synthesized folder and listen to the speech generated from the chat completion.
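Once all three endpoints respond in Postman, you may also want a repeatable check from the command line. The sketch below uses only Node’s built-in http module (so it also works on Node 16, which has no global fetch) to call the three endpoints from speechRoute.js in order and collect each status code and body. getJson and smokeTest are hypothetical names, and the default base URL assumes PORT=5001.

```javascript
const http = require('http')

// GET a URL and resolve with the status code and body (parsed as JSON when possible).
function getJson(url) {
  return new Promise((resolve, reject) => {
    http.get(url, (res) => {
      let raw = ''
      res.setEncoding('utf8')
      res.on('data', (chunk) => { raw += chunk })
      res.on('end', () => {
        let body = raw
        try {
          body = JSON.parse(raw)
        } catch {
          // Not JSON: keep the raw text.
        }
        resolve({ status: res.statusCode, body })
      })
    }).on('error', reject)
  })
}

// Call the tutorial's three endpoints in order and collect the results.
// Against the real server, each call reaches Azure AI Speech or OpenAI and uses real quota.
async function smokeTest(baseUrl = 'http://localhost:5001/api') {
  const results = []
  for (const endpoint of ['voicespeech', 'completetext', 'talk']) {
    const { status, body } = await getJson(`${baseUrl}/${endpoint}`)
    results.push({ endpoint, status, body })
  }
  return results
}

module.exports = { getJson, smokeTest }
```

For example, with npm run dev running in one terminal, run node -e "require('./smoke').smokeTest().then(console.log)" in another, assuming the code above is saved as a hypothetical smoke.js in the project root.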

Congratulations, you have just created your very own simple Speech REST API! You can now extend it and use Azure AI Speech for a range of other tasks.

Learn More

Explore how to do the same in other programming languages.

Azure OpenAI Service REST API reference.

Learn how to work with the GPT-35-Turbo and GPT-4 models.

Get started using GPT-35-Turbo and GPT-4 with Azure OpenAI Service.