This post has been republished via RSS; it originally appeared at Microsoft Tech Community - Latest Blogs.

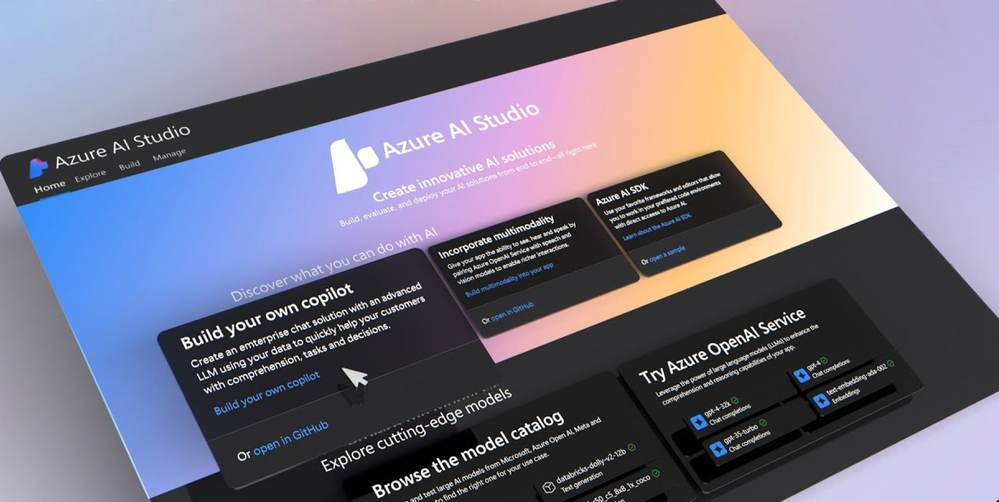

Build, test, deploy, and monitor your generative AI apps at scale from one place with Azure AI Studio. Access models in the Azure OpenAI service, models from Meta, NVIDIA and Microsoft Research, as well as hundreds of open-source models. Integrate your own data across multiple data sets to ground your model, which is made easier through direct integration with OneLake in Microsoft Fabric. OneLake uses shortcuts to let you bring in virtualized data sets across your data estate without having to move them.

Use Azure AI Studio for full lifecycle development, from a unified playground for prompt engineering to pre-built Azure AI skills for building multi-modal applications using language, vision, and speech, as well as Search, which includes hybrid search with semantic ranking for more precise information retrieval. Test your AI applications for quality and safety with built-in evaluation, use the prompt flow tool for custom orchestration, and apply overarching controls with Responsible AI content filters for safety. Seth Juarez, Principal Program Manager for Azure AI, gives you an overview of Azure AI Studio.

Build a multi-modal copilot app powered by retrieval-augmented generation.

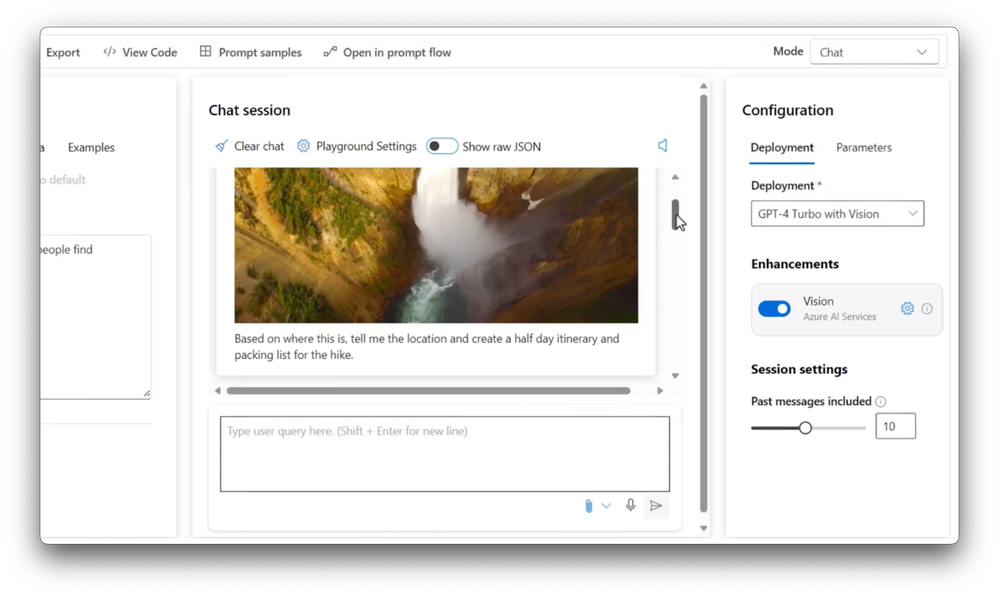

Incorporate images, text, speech and videos with Azure AI Studio.

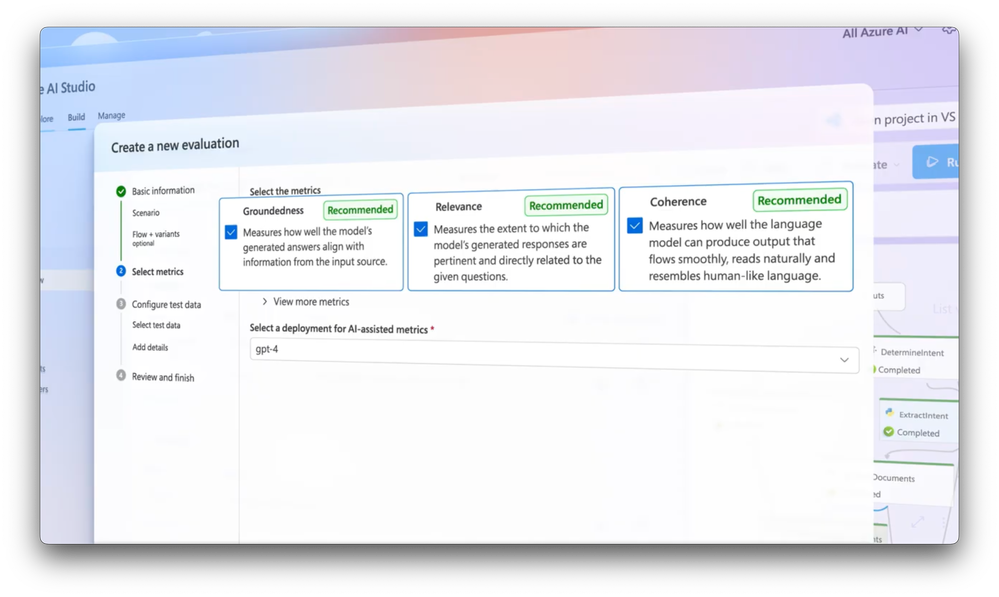

Assess prompt flows prior to putting code into production, with built-in evaluation tools.

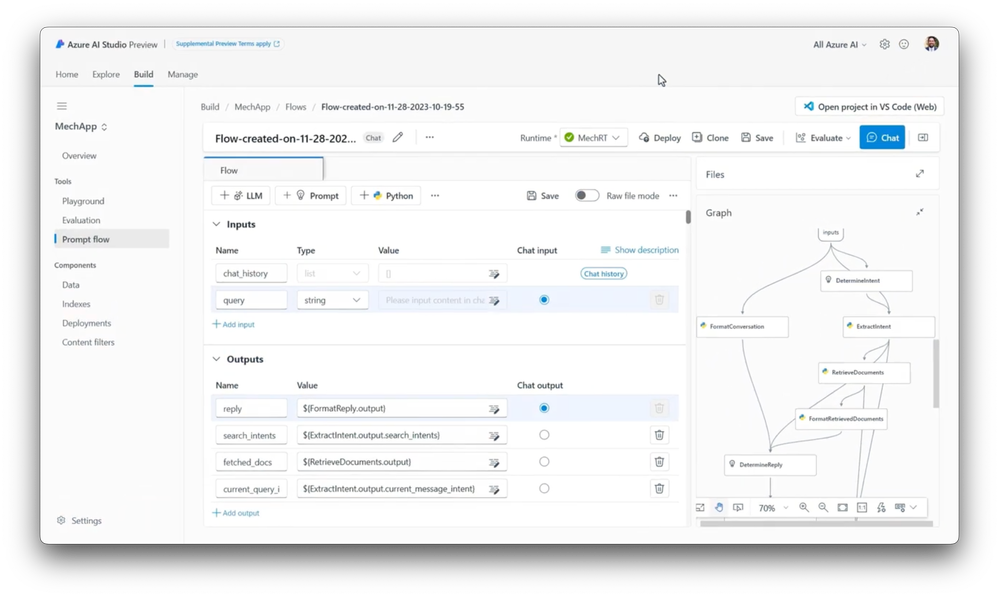

Guide the behavior of your application to better fit your use case with prompt flow. Check out Azure AI Studio.

Apply content filters to monitor quality and safety of AI app responses.

Watch our video here:

QUICK LINKS:

00:00 — Build your own copilots in Azure AI Studio

01:52 — Copilot app running as a chatbot

03:53 — Retrieval augmented generation grounded on your data

04:54 — Experiment with prompts: Multi-modality

06:47 — Advanced capabilities: Prompt flow

08:58 — Ensure quality and safety of responses

10:09 — Wrap up

Link References

Start using Azure AI Studio today at https://ai.azure.com

Check out our QuickStart guides at https://aka.ms/LearnAIStudio

Unfamiliar with Microsoft Mechanics?

Microsoft Mechanics is Microsoft’s official video series for IT. You can watch and share valuable content and demos of current and upcoming tech from the people who build it at Microsoft.

- Subscribe to our YouTube: https://www.youtube.com/c/MicrosoftMechanicsSeries

- Talk with other IT Pros, join us on the Microsoft Tech Community: https://techcommunity.microsoft.com/t5/microsoft-mechanics-blog/bg-p/MicrosoftMechanicsBlog

- Watch or listen from anywhere, subscribe to our podcast: https://microsoftmechanics.libsyn.com/podcast

Keep getting this insider knowledge, join us on social:

- Follow us on Twitter: https://twitter.com/MSFTMechanics

- Share knowledge on LinkedIn: https://www.linkedin.com/company/microsoft-mechanics/

- Enjoy us on Instagram: https://www.instagram.com/msftmechanics/

- Loosen up with us on TikTok: https://www.tiktok.com/@msftmechanics

Video Transcript:

-Powerful copilot experiences using GPT, and other generative AI models are fast becoming an expected part of everyday app experiences. Microsoft itself now has copilots available for its most popular workloads from Copilot in Bing to Microsoft 365 app experiences, GitHub for code generation, security investigation and response, and much more. They provide an intelligent natural language interface to the underlying data of your apps.

-And now with Azure AI Studio, you have a unified platform to build and deploy copilots of your own all from one place. The model catalog uniquely gives you access to cutting-edge models in the Azure OpenAI service, and from across the ecosystem with models from Meta, NVIDIA and Microsoft Research, as well as hundreds of open-source models.

-You can integrate your own data across multiple different data sets to ground your model, which is made easier through direct integration with OneLake in Microsoft Fabric, which uses shortcuts to let you bring in virtualized datasets across your data estate without you having to move them.

-And importantly, Azure AI Studio gives you everything you need for full lifecycle development from a unified playground for prompt engineering, pre-built Azure AI skills to build multi-modal applications, using language, vision, and speech, as well as Search.

-With options including hybrid with semantic ranking for more precise information retrieval, built-in evaluation to test your AI applications for quality and safety, a prompt flow tool for custom orchestration, as well as overarching controls with Responsible AI content classifications for safety and more. One integrated studio lets you explore, build, test, deploy and even monitor your generative AI apps at scale. Let me show you an example of this in action.

-Here, I’ve got a simple copilot app running as a chatbot as part of a retail site. As I submit a prompt, you can see that it not only gives me a detailed answer, but that the answer is partly derived from the data it’s retrieved from my catalog data and corresponding product manuals, as you’ll see in the response with its numbered citations.

-Next, let me walk through how you’d use Azure AI Studio to build something like this. First, you’ll find it at ai.azure.com, and by selecting build your own copilot, you can kick off the experience. From there, you can choose an existing project, or create a new one, which I’ll do in this case, and give it a name.

-Next, you’re prompted to choose an existing Azure AI resource with the underlying services to run your app or you can create a new one. To save time, I’ll choose an existing one and create a project which takes you directly to the Playground. And if I go to settings, you can see the underlying services already set up, and notice this is also a shared workspace for me to work together with my designated team on the app.

-That said, let’s move on to deployments. I have a few models already deployed, and creating a new deployment is easy. First off, you’ll pick your model, and there are dozens of model options to choose from. Then once you confirm your choice and name it, you can kick off the deployment. That said, let’s navigate back to the Azure AI Studio Playground which gives you a natural language interface to do prompt experimentation with your deployed models.

-In this case we’re using the GPT-4 model. And the first thing I can do is customize the default system message to offer the model more context on its purpose. To help you get started you can use our prompt samples of pre-built system messages. These get appended to the user prompt to guide generated responses. For my outdoor retail app, I’m going to choose the Hiking Recommendations Chatbot, and notice how my system message changes with specific instructions to the large language model.
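As a rough illustration of how a system message and a user prompt travel together to a chat model, here is a minimal sketch. It assumes the common role/content message shape used by chat completion style APIs; the `build_messages` helper and the system message text are hypothetical and are not the Hiking Recommendations Chatbot sample from the Playground.

```python
# Illustrative only: the role/content shape follows the common chat
# convention. The system message text is a made-up example, not the
# built-in Playground sample.
def build_messages(system_message: str, user_prompt: str) -> list[dict[str, str]]:
    """Pair a system message with the user's prompt for a chat model."""
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": user_prompt},
    ]


SYSTEM_MESSAGE = (
    "You are a hiking recommendations assistant for an outdoor retail site. "
    "Recommend gear only from the provided product catalog."
)

messages = build_messages(SYSTEM_MESSAGE, "Which hiking shoes should I get?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the system message separate from the user prompt makes it easy to swap in a different sample without touching the rest of the app.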

-Next, from here, we can focus on bringing in data from our product catalog to ground our model while also ensuring that the most relevant grounding data is presented based on the user prompt.

-For that, I’ll go to add your data and add a source. In my case I have a few options to bring in data, including OneLake in Microsoft Fabric. And by the way we index the data for you when you bring it in. In fact, I’ve already got my data loaded and indexed using an instance of Azure AI Search.

-So, I’m going to select Azure AI Search and the hybrid search option, which will retrieve information using both keyword search, for when users want specific information, and vector search, to better understand user intent. By default, this is combined with the semantic search re-ranker, to make sure that the best information is curated to ground our model.
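To make the idea of combining keyword and vector results more concrete, here is a small sketch of reciprocal rank fusion, one common way to merge two ranked lists into one. It is an illustration under assumptions, not a description of what the service does internally: the document IDs are invented, `k=60` is just a conventional constant, and the semantic re-ranking step mentioned above is not shown.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one fused ranking.

    Each document earns 1 / (k + rank) from every list it appears in, so
    documents ranked well by both retrievers rise to the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for a stable result.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword query and a vector query.
keyword_hits = ["summit-boots", "trailwalker-shoes", "camp-stove"]
vector_hits = ["trailwalker-shoes", "ridge-sandals", "summit-boots"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

In this toy data, the document that ranks near the top of both lists ends up first, even though neither retriever alone agrees on the full ordering.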

-Together these options provide more accurate responses during retrieval augmented generation. And by the way, your data is not used to train the large language model, it’s only used to temporarily ground it. So now let’s go back to the Playground to experiment with prompts. I’ll prompt the model with “Which hiking shoes should I get?”
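The grounding step can be pictured as pasting retrieved passages into the prompt at request time, which is why the data never needs to be part of model training. The sketch below assumes a hypothetical `build_grounded_prompt` helper and invented passage text; the numbered source labels mirror the numbered citations seen in the chatbot response.

```python
def build_grounded_prompt(question: str, passages: list[dict[str, str]]) -> str:
    """Build a prompt that asks the model to answer only from numbered sources."""
    lines = [
        "Answer using only the sources below and cite them as [n].",
        "",
        "Sources:",
    ]
    for number, passage in enumerate(passages, start=1):
        lines.append(f"[{number}] {passage['title']}: {passage['text']}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


# Hypothetical retrieved passages; the descriptions are placeholders.
retrieved = [
    {"title": "TrailWalker Hiking Shoes", "text": "Placeholder catalog description."},
    {"title": "Product manual", "text": "Placeholder care instructions."},
]

prompt = build_grounded_prompt("Which hiking shoes should I get?", retrieved)
print(prompt)
```

Because the passages exist only inside this one request, removing or updating a source changes the next answer without retraining anything.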

-And while it says it can’t provide a specific recommendation, with our retrieval augmented generation pattern enabled, TrailWalker Hiking shoes appear at the top of the list with a summary of why those shoes could be a good fit for me, based on the grounding information it was able to reason over. That said, beyond text-based inputs, one of Azure AI Studio’s core strengths is support for multi-modality which helps you to craft dynamic applications incorporating images, text, speech, and even videos. For example, I can generate images for my marketing and retail site.

-Here, I’ve changed the deployment to a DALL-E 3 model and the mode to images. I’ve already submitted a prompt to generate a brand logo, which you’ll see on the left. And you’ll see a few other images for hiking shoes that I can use in future campaigns. And it gets even more exciting, the GPT-4 Turbo with Vision model combined with Azure AI Vision Video Retrieval lets you provide video as an input for generative AI responses. In this test, for example, I’ve enabled the Azure AI Vision enhancement.

-To save a little time, I’ve also uploaded a 30-second video. I’ll submit my prompt with “Based on where this is, tell me the location and create a half day itinerary and packing list for the hike.” That takes a moment to generate. There it is. And I can play the video from here. And the response below the video tells me the location is Yellowstone National Park at the Lower Falls of the Yellowstone River. It also provides a tailored itinerary with detailed timings, and a full packing list with equipment recommendations for my hike!

-So, I just showed you how you can build a multi-modal copilot app, powered by retrieval-augmented generation right out of the box. Let’s move on to some of the more advanced capabilities. For more control over the orchestration itself to better fit your use case, you can use prompt flow.

-For that, right from the Playground, I can choose open in prompt flow. To get the best results, this lets you define a process that starts with the user prompt. And each of these nodes can be edited and customized using your own code and logic. In fact, I’ll click through the main steps. Up front, we are determining the user’s intent by analyzing their natural language input. That in turn gets extracted and transformed into a query which is then used to retrieve information to augment the prompt. Then that information is formatted and presented to the LLM.
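The sequence of nodes described above (determine intent, build a query, retrieve, format for the LLM) can be sketched as a chain of plain functions. This is only an analogy for how such a flow is structured: every function name here is hypothetical, the catalog text is made-up sample data, intent detection is a naive keyword match, and the final LLM call is left out.

```python
# Hypothetical stand-ins for flow nodes; none of these names come from
# Azure AI Studio. The catalog text is made-up sample data.
CATALOG = {
    "shoes": ["TrailWalker Hiking Shoes: sample description for illustration"],
    "tents": ["Example Tent: sample description for illustration"],
}


def detect_intent(user_prompt: str) -> str:
    """Node 1: guess what the user is asking about (naive keyword match)."""
    text = user_prompt.lower()
    for category in CATALOG:
        if category in text:
            return category
    return "general"


def build_query(intent: str) -> str:
    """Node 2: turn the intent into a retrieval query."""
    return intent


def retrieve(query: str) -> list[str]:
    """Node 3: look up grounding passages for the query."""
    return CATALOG.get(query, [])


def format_prompt(user_prompt: str, passages: list[str]) -> str:
    """Node 4: format the retrieved information for the LLM."""
    context = "\n".join(f"- {p}" for p in passages) or "- (no matching data)"
    return f"Context:\n{context}\n\nQuestion: {user_prompt}"


def run_flow(user_prompt: str) -> str:
    """Chain the nodes; the final LLM call is intentionally left out."""
    intent = detect_intent(user_prompt)
    query = build_query(intent)
    passages = retrieve(query)
    return format_prompt(user_prompt, passages)


print(run_flow("Which hiking shoes should I get?"))
```

Keeping each step as its own small function is what makes it possible to edit or replace a single node without rewriting the rest of the chain.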

-And we’re not done yet. As the reply is generated, we can specify the format of the outputted response to be more consumable. And again, we can edit all these steps if we want. Now, because LLMs are non-deterministic in nature, even minor changes can yield very different results. And that’s where our built-in evaluation tools can help you to evaluate how well the experience works prior to putting the code into production.

-For that, we can use the built-in evaluation tools to assess prompt flows. I’ll set up one for question-and-answer pairs, and select my flow to evaluate. And here, I can test variations with any node in the prompt flow and see how that impacts the outputs across several different metrics, including groundedness, relevance, coherence, fluency, and more. For example, I’ve created two variants of the system message to test as part of the prompt flow. The first variant uses the simple default system message.

-The second variant is quite a bit more verbose with details for things like grounding, tone, and response quality. I’ve also tested these two variants using the same dataset, which comprises several user prompts designed to create a realistic and fair comparison. To save time, I’ve already run the evaluation to compare the two, so what you’re seeing here is the output with both variants.
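Comparing two variants over the same dataset comes down to scoring every response on each metric and then averaging per variant. The sketch below shows only that aggregation step. The scores are invented placeholders on an assumed 1 to 5 scale, not results from the video, and the variant names are hypothetical.

```python
from statistics import mean

METRICS = ("groundedness", "relevance", "coherence", "fluency")


def summarize(rows: list[dict[str, int]]) -> dict[str, float]:
    """Average each metric across all evaluated prompts for one variant."""
    return {m: round(float(mean(row[m] for row in rows)), 2) for m in METRICS}


# Placeholder per-prompt scores (assumed 1-5 scale); NOT real evaluation output.
variant_scores = {
    "default_system_message": [
        {"groundedness": 3, "relevance": 4, "coherence": 4, "fluency": 4},
        {"groundedness": 4, "relevance": 3, "coherence": 4, "fluency": 5},
    ],
    "detailed_system_message": [
        {"groundedness": 4, "relevance": 4, "coherence": 5, "fluency": 5},
        {"groundedness": 5, "relevance": 4, "coherence": 4, "fluency": 5},
    ],
}

summary = {name: summarize(rows) for name, rows in variant_scores.items()}
for name, averages in summary.items():
    print(name, averages)
```

Running both variants against the same prompts is what keeps the comparison fair, since any difference in the averages then comes from the system message rather than from the questions.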

-On the left side are the results from the more detailed system message, which you’ll see has slightly better metrics across the board compared to the one on the right with the simpler system message. And I can go even further to help ensure both the quality and safety of responses. Using Azure AI Content Classifications, I can apply content filters to my deployed application. This enables me to create custom thresholds to filter out harmful content from both the user input as well as the output of my application.
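Custom thresholds for input and output can be thought of as two small policies applied to severity scores. The sketch below assumes that some classifier has already produced an integer severity per category; the `FilterPolicy` class, the category names, and the numeric scale are all hypothetical and are not the service's actual configuration schema.

```python
from dataclasses import dataclass


@dataclass
class FilterPolicy:
    """Block content whose severity meets or exceeds a per-category threshold."""

    thresholds: dict[str, int]

    def blocked_categories(self, severities: dict[str, int]) -> list[str]:
        """Return the categories that trip this policy, in sorted order."""
        return sorted(
            category
            for category, severity in severities.items()
            if category in self.thresholds and severity >= self.thresholds[category]
        )


# Hypothetical policies: stricter on what the app says than on what users type.
input_policy = FilterPolicy({"hate": 2, "violence": 4})
output_policy = FilterPolicy({"hate": 2, "violence": 2})

# Hypothetical severities for one piece of text.
severities = {"hate": 0, "violence": 3}

print("input blocked for:", input_policy.blocked_categories(severities))
print("output blocked for:", output_policy.blocked_categories(severities))
```

Using separate policies for each direction lets the same text pass as user input while still being withheld if the application were about to return it.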

-Then with everything tested and ready, you can deploy your app to an endpoint. For that, from the prompt flow, you’ll hit the deploy button, which adds an entry into deployments. If I click into this one, inside consume, I can see the code that I need to use this in my app with all of the configurations set and I can even choose between different languages. So, in just a few clicks you can integrate what you’ve built into a new or existing application.
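For a sense of what calling a deployed endpoint from an app might involve, here is a minimal sketch that only builds the HTTP request using the Python standard library. The URL, the API key, and the `question` payload field are placeholders and assumptions; the code shown in the consume view for your own deployment is the authoritative version.

```python
import json
import urllib.request


def build_request(endpoint_url: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request with bearer-token authorization."""
    body = json.dumps(payload).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(endpoint_url, data=body, headers=headers, method="POST")


request = build_request(
    "https://example.invalid/score",  # placeholder, not a real endpoint
    "YOUR_API_KEY",  # placeholder credential
    {"question": "Which hiking shoes should I get?"},  # assumed input name
)

# Sending is left commented out so the sketch runs without network access:
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read()))
print(request.get_method(), request.full_url)
```

Separating request construction from sending makes it straightforward to unit test the payload and headers before pointing the app at a live deployment.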

-Finally, if you are a more sophisticated user with lots of experience working with large language models, there’s one more thing. Using our model-as-a-service capabilities, we will also give you the ability to fine-tune LLMs to customize their existing behavior. But this is an advanced scenario that requires in-house data science expertise to do correctly.

-So that was a quick overview of Azure AI Studio, which gives you one unified platform to explore, build, test and deploy your generative AI apps at scale. You can start using Azure AI Studio today at ai.azure.com. To learn more, check out our QuickStart guides at aka.ms/LearnAIStudio. Be sure to subscribe to Microsoft Mechanics for the latest AI updates, and thanks for watching.