This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

This post was written by Martin Bald, Senior Manager Developer Community from Wallaroo.AI, a Microsoft startup partner focusing on operationalizing AI. In this blog post, the first in a series of five, we’ll dive into best practices for productionizing ML models.

Introduction

Implemented correctly, model packaging helps streamline bringing ML models into production across various deployment environments, such as cloud, multi-cloud/multi-region cloud, edge devices, and on-premises.

ML Model Packaging and the Production Process

The process of deploying machine learning models can take weeks or even months. As a Data Scientist or ML Engineer, you are an expert in ML, yet you may have experienced the frustration of stalling at the final hurdle, deploying models into production, despite a smooth pre-production stage of model testing and experimentation.

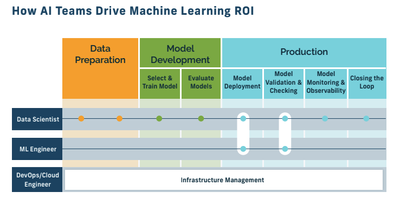

The image below depicts how production should be part and parcel of the entire end-to-end ML process rather than an afterthought. That is easier said than done, though, because developing and deploying ML models is not a trivial task.

Fig 1.

It involves many roles, such as Data Scientists, ML Engineers, MLOps engineers, and DevOps engineers. It also requires a number of steps, such as data preprocessing, feature engineering, model training, evaluation, optimization, and inference. Moreover, each step may require different tools, libraries, frameworks, and environments. This makes the ML model development and deployment process complex, error-prone, and hard to reproduce. One way to address this challenge is to use ML model packaging.

What is ML Model Packaging

ML model packaging is the process of bundling all the necessary components of an ML model into a single package that can be easily distributed and deployed. A package may include the model itself, the dependencies and configurations required to run it, and the metadata that describes its properties and performance.
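To make the idea concrete, here is a minimal sketch of a package as a single archive. It assumes a simple, hypothetical layout (a `model.bin` file plus a `manifest.json` holding dependencies, configuration, and metadata); `build_model_package` is an illustrative name, and real tools such as MLflow define their own package formats.

```python
import hashlib
import io
import json
import zipfile


def build_model_package(model_bytes: bytes, dependencies: dict, config: dict, metadata: dict) -> bytes:
    """Bundle a serialized model and its manifest into one in-memory zip archive.

    The layout (model.bin + manifest.json) is a hypothetical example, not a standard.
    """
    manifest = {
        "model_file": "model.bin",
        "model_sha256": hashlib.sha256(model_bytes).hexdigest(),  # lets consumers verify integrity
        "dependencies": dependencies,  # pinned versions needed to run the model
        "config": config,              # runtime settings, e.g. input shape
        "metadata": metadata,          # descriptive properties and performance figures
    }
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("model.bin", model_bytes)
        archive.writestr("manifest.json", json.dumps(manifest, indent=2, sort_keys=True))
    return buffer.getvalue()


if __name__ == "__main__":
    # Illustrative inputs only; the version pin and shape are made up
    package = build_model_package(
        b"fake-model-weights",
        dependencies={"onnxruntime": "1.16.0"},
        config={"input_shape": [1, 3, 480, 640]},
        metadata={"name": "mobilenet", "task": "object-detection"},
    )
    with zipfile.ZipFile(io.BytesIO(package)) as archive:
        print(sorted(archive.namelist()))  # ['manifest.json', 'model.bin']
```

Keeping the checksum in the manifest means whoever deploys the package can confirm the model file is exactly the one that was tested.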

By using ML model packaging, we can ensure that the ML model is consistent, portable, scalable, and reproducible across different platforms and environments.
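Reproducibility can be checked mechanically. The sketch below is an illustration rather than any particular tool's approach: it derives a deterministic fingerprint from the model bytes and the pinned dependencies, so two packages built from the same inputs get the same fingerprint, while any change to the model or a version pin changes it. `package_fingerprint` is a hypothetical helper.

```python
import hashlib
import json


def package_fingerprint(model_bytes: bytes, dependencies: dict) -> str:
    """Return a SHA-256 fingerprint covering the model and its pinned dependencies."""
    digest = hashlib.sha256()
    # Length prefix keeps the boundary between model bytes and dependency data unambiguous
    digest.update(len(model_bytes).to_bytes(8, "big"))
    digest.update(model_bytes)
    # sort_keys makes the encoding independent of dict insertion order
    digest.update(json.dumps(dependencies, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


if __name__ == "__main__":
    a = package_fingerprint(b"weights", {"onnxruntime": "1.16.0", "numpy": "1.26.0"})
    b = package_fingerprint(b"weights", {"numpy": "1.26.0", "onnxruntime": "1.16.0"})
    c = package_fingerprint(b"weights", {"numpy": "1.26.1", "onnxruntime": "1.16.0"})
    print(a == b)  # True: same inputs, same fingerprint
    print(a == c)  # False: a changed version pin changes the fingerprint
```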

There are different ways to implement ML model packaging. Some of the common methods are:

- Using containerization technologies, such as Docker, to create isolated and lightweight environments that contain all the dependencies and configurations needed to run the ML model, with orchestration platforms such as Kubernetes running those containers at scale.

- Using serialization formats, such as pickle (Python-specific) or ONNX (framework- and language-neutral), to store the ML model in a file that can be reloaded later and, in ONNX's case, executed by different frameworks and languages.

- Using standardization protocols, such as PMML or PFA, to represent the ML model in a platform-independent and interoperable way that can be exchanged and executed by different systems.

- Using specialized tools or platforms, such as MLflow or Seldon Core, to automate the ML model packaging process and provide additional features such as versioning, tracking, serving, etc.
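As a small illustration of the serialization route, the sketch below round-trips a toy model object through Python's standard `pickle` module. The `ThresholdModel` class is hypothetical. Note that pickle files can only be loaded from Python, and unpickling can execute arbitrary code, so only load packages from trusted sources; a cross-framework format such as ONNX avoids the first limitation.

```python
import pickle
from dataclasses import dataclass


@dataclass
class ThresholdModel:
    """Toy 'model': predicts 1 when the weighted sum of features exceeds a threshold."""
    weights: list
    threshold: float

    def predict(self, features: list) -> int:
        score = sum(w * x for w, x in zip(self.weights, features))
        return int(score > self.threshold)


if __name__ == "__main__":
    model = ThresholdModel(weights=[0.5, 1.5], threshold=1.0)
    blob = pickle.dumps(model)           # serialize to bytes (could be written to a file)
    restored = pickle.loads(blob)        # only unpickle data from trusted sources
    print(restored == model)             # True: dataclass equality compares fields
    print(restored.predict([1.0, 1.0]))  # 1, since 0.5 + 1.5 = 2.0 > 1.0
```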

Benefits of ML Model Packaging

The importance of investing the time to package your ML models can’t be overstated, as it sets you up for a smooth transition to production. ML model packaging offers a number of benefits, including:

- Simplifying the ML model development and deployment process by reducing the complexity and variability of the components involved.

- Improving the quality and reliability of the ML model by ensuring that it is tested and validated in a standardized way.

- Enhancing collaboration and communication among the different stakeholders of the ML model, such as Data Scientists, ML Engineers, and other roles.

- Facilitating reuse and sharing of the ML model by making it easy for other applications and services to access and consume.

- Enabling the monitoring and management of the ML model by providing metrics and feedback on its performance and behavior.

Deploying ML Packaged Models To Production

Once your ML models are packaged, the next step is to deploy them to production. For this article, we will use a retail store example: product monitoring for cashierless checkout, where computer vision (CV) models are deployed to edge environments such as cameras and checkouts. We will emulate the edge device using a Wallaroo.AI inference server running on Azure.

The first step for deployment is to upload our model into our Azure cluster, as shown in the code below.

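```python
import wallaroo
from wallaroo.framework import Framework

wl = wallaroo.Client()  # connect to the Wallaroo instance
model_name = 'mobilenet'
# Upload the ONNX model, then configure its input field and batching behavior
mobilenet_model = wl.upload_model(model_name, "models/mobilenet.pt.onnx", framework=Framework.ONNX) \
    .configure(tensor_fields=['tensor'], batch_config="single")
```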

Next, we configure the hardware we want to use for deployment. The deployment configuration lets us specify resources such as the CPU architecture (ARM or x86) and the number of CPUs and/or GPUs needed. In the example below, the emulated edge device uses one replica with 1 CPU and 1 GiB of memory.

```python
# One replica with 1 CPU and 1 GiB of memory to emulate a small edge device
deployment_config = wallaroo.DeploymentConfigBuilder() \
    .replica_count(1) \
    .cpus(1) \
    .memory("1Gi") \
    .build()
```
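The `"1Gi"` value follows the Kubernetes resource-quantity convention, where the `Gi` suffix means gibibytes (2^30 bytes) rather than gigabytes (10^9 bytes). The hypothetical helper below converts a few common suffixes to bytes so the difference is visible; it is a simplified sketch, not a full parser of the Kubernetes quantity grammar.

```python
# Binary (power-of-two) and decimal suffixes used in Kubernetes-style quantities
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def quantity_to_bytes(quantity: str) -> int:
    """Convert a simple memory quantity such as '1Gi' or '512M' to a byte count.

    Simplified: handles an integer followed by an optional suffix only.
    """
    # Check two-character suffixes first so 'Gi' is not mistaken for 'G'
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * _SUFFIXES[suffix]
    return int(quantity)  # no suffix: plain bytes


if __name__ == "__main__":
    print(quantity_to_bytes("1Gi"))  # 1073741824
    print(quantity_to_bytes("1G"))   # 1000000000
```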

Then we can build our pipeline around the model and deploy it with this configuration.

```python
pipeline_name = 'retail-inv-tracker-edge'

# Build a pipeline with the uploaded model as its single step, then deploy it
pipeline = wl.build_pipeline(pipeline_name) \
    .add_model_step(mobilenet_model) \
    .deploy(deployment_config=deployment_config)
```

With our environment configured and the pipeline deployed, we can test it by running a sample image through the pipeline; in our case, an image of products on a store shelf.

Fig 2.

Next, we convert this image into the format the model expects, resized to 640x480 pixels.

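```python
import utils  # helper module that accompanies the tutorial (not part of the Wallaroo SDK)

width, height = 640, 480
# Load the image, resize it to 640x480, and wrap it in a DataFrame for inference
dfImage, resizedImage = utils.loadImageAndConvertToDataframe('data/images/input/example/dairy_bottles.png', width, height)
```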
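The tutorial's `utils.loadImageAndConvertToDataframe` helper hides the preprocessing details. As a rough, hypothetical illustration of the kind of work such a helper typically does (its actual implementation may differ), the stdlib-only sketch below resizes an RGB pixel grid with nearest-neighbor sampling, scales values to [0, 1], and reorders them into channels-first (C, H, W) layout, a common input layout for ONNX vision models, before the result would be wrapped in a DataFrame.

```python
def resize_nearest(pixels, width, height):
    """Nearest-neighbor resize of an image given as rows of (r, g, b) tuples."""
    src_h, src_w = len(pixels), len(pixels[0])
    return [
        [pixels[y * src_h // height][x * src_w // width] for x in range(width)]
        for y in range(height)
    ]


def to_chw_tensor(pixels):
    """Convert rows of 0-255 (r, g, b) tuples to a nested [C][H][W] list of floats in [0, 1]."""
    return [
        [[px[c] / 255.0 for px in row] for row in pixels]
        for c in range(3)
    ]


if __name__ == "__main__":
    # A tiny 2x2 stand-in for a real photo; the tutorial resizes to 640x480
    image = [[(255, 0, 0), (0, 255, 0)],
             [(0, 0, 255), (255, 255, 255)]]
    tensor = to_chw_tensor(resize_nearest(image, width=4, height=4))
    print(len(tensor), len(tensor[0]), len(tensor[0][0]))  # 3 4 4
```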

Then we run our inference.

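```python
import time

startTime = time.time()
infResults = pipeline.infer(dfImage)  # send the DataFrame to the deployed pipeline
endTime = time.time()

infResults  # in a notebook, this displays the returned results
```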
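The code above measures latency with `time.time()`, which reads the system wall clock and can shift if that clock is adjusted. For measuring short intervals, Python's `time.perf_counter()`, a high-resolution clock intended for that purpose, is usually the better choice. The sketch below wraps any callable with it; `timed` and `fake_infer` are hypothetical helpers, and in the tutorial the callable would be `pipeline.infer`.

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed_seconds) measured with time.perf_counter()."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def fake_infer(x):
    """Stand-in for pipeline.infer(dfImage)."""
    time.sleep(0.01)  # simulate inference work
    return x * 2


if __name__ == "__main__":
    result, seconds = timed(fake_infer, 21)
    print(result)  # 42
    print(f"{seconds:.3f} s")
```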

We can then examine the returned results (partial view below).

Fig 3.

Finally, we can use the output above to draw the inferences on top of the product image and examine the results.

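```python
# Placeholder value; the division by 1e9 below assumes the model time is in nanoseconds
elapsed = 1.0

results = {
    'model_name': model_name,
    'pipeline_name': pipeline_name,
    'width': width,
    'height': height,
    'image': resizedImage,
    'inf-results': infResults,
    'confidence-target': 0.50,                     # confidence threshold for drawn detections
    'inference-time': (endTime - startTime),       # wall-clock time of pipeline.infer(), in seconds
    'onnx-time': int(elapsed) / 1e+9,
    'classes_file': "models/coco_classes.pickle",  # COCO class labels
    'color': 'BLUE'
}

# Draw the detected objects onto the resized image
image = utils.drawDetectedObjectsFromInference(results)
```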

This produces an image like the one below, with the products on the shelf identified at an average confidence of 72.5% across the detected objects.

Fig 4.
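Assuming the reported average is the mean score of the detections that clear the confidence target (0.50 in the `results` dictionary), the calculation looks like the sketch below. The (label, confidence) format, the `filter_and_average` name, and the sample scores are hypothetical and made up for illustration; they are not the article's results, and the tutorial's drawing helper may compute its statistics differently.

```python
def filter_and_average(detections, confidence_target=0.50):
    """Keep detections at or above the confidence target and return (kept, average_confidence).

    detections: list of (label, confidence) pairs, a hypothetical simplified format.
    """
    kept = [(label, conf) for label, conf in detections if conf >= confidence_target]
    if not kept:
        return kept, 0.0
    return kept, sum(conf for _, conf in kept) / len(kept)


if __name__ == "__main__":
    sample = [("bottle", 0.9), ("bottle", 0.6), ("cup", 0.3)]  # made-up scores
    kept, avg = filter_and_average(sample)
    print(len(kept), round(avg, 2))  # 2 0.75
```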

Now we have a model in production, ready to serve inferences. We can get the inference endpoint URL from the pipeline with the following call:

```python
pipeline.url()
```

This returns the URL of the pipeline's inference endpoint.
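Once you have the URL, client applications can send inference requests to it over HTTP. The stdlib sketch below only builds such a request without sending it; the endpoint URL, the bearer-token header, and the JSON payload shape are all placeholder assumptions, and the exact content type and authentication your Wallaroo deployment expects may differ, so check its documentation before adapting this.

```python
import json
import urllib.request


def build_inference_request(endpoint_url: str, token: str, records: list) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for an inference endpoint.

    Header values and payload shape are assumptions for illustration.
    """
    body = json.dumps(records).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # hypothetical auth scheme
        },
    )


if __name__ == "__main__":
    # Placeholder URL and token; substitute the value returned by pipeline.url()
    req = build_inference_request("https://example.com/infer", "TOKEN", [{"tensor": [[0.0, 0.1]]}])
    print(req.get_method(), req.full_url)  # POST https://example.com/infer
    # urllib.request.urlopen(req) would actually send it
```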

If we are deploying our packaged model to a cloud cluster, then we are done at this point: we are live in production with a working endpoint in the cloud. However, for this example we want to deploy and run our model on the in-store edge device(s), which in our case may be a small processing system in the back of a store.

Publishing the model to an edge environment is just as quick and easy as the cloud deployment above. Let’s go through this additional step.

Deploying our model to the edge device involves using the “publish” command to push it to a preconfigured container registry. Because we packaged our model in the pre-production phase, this step is straightforward.

The output of the “publish” command contains the location of the Docker container image and Helm chart that we can use for edge deployment. From there, we can add a device, which also produces a device-specific token used to send data back to the Ops Center.

First, we run the “publish” command using the code below.

```python
# Publish the pipeline to the preconfigured container registry
pub = pipeline.publish()
```

Next, we add our in-store edge device. In this case, our device is ‘camera-1’ in a store. If we were deploying to devices in multiple stores, we would add each store's device in the same way.

```python
# Register the edge device; this also produces its device-specific token
pub.add_edge("camera-1")
```
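For a multi-store rollout, one approach is to register each device under its own name. The sketch below assumes a hypothetical `store-<n>-camera-1` naming scheme and takes the registration function as a parameter (with the Wallaroo SDK that would be `pub.add_edge`); a stand-in function is used so the sketch runs without a Wallaroo instance.

```python
def register_store_devices(register, store_numbers, device="camera-1"):
    """Call register(name) once per store and return {name: registration result}.

    The 'store-<n>-<device>' naming scheme is a hypothetical convention.
    """
    registrations = {}
    for store in store_numbers:
        name = f"store-{store}-{device}"
        registrations[name] = register(name)
    return registrations


if __name__ == "__main__":
    # Stand-in for pub.add_edge, which would register the device with Wallaroo
    def fake_add_edge(name):
        return f"registered {name}"

    for name, result in register_store_devices(fake_add_edge, [101, 102]).items():
        print(name, "->", result)
```

Passing the registration function in keeps the rollout logic testable without a live deployment.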

Finally, we retrieve the pipeline logs to check the inference details from the deployed edge devices.

```python
pipeline.logs()
```

Fig 5.

Conclusion

We have seen that ML model packaging is a useful technique that can help streamline the ML model development and deployment process while also improving its quality and efficiency.

By using ML model packaging, you can create robust and reliable ML models that can be easily distributed and deployed across different platforms and environments. We saw this in the retail store example, where we deployed our model to the cloud and to an in-store edge environment and ran inferences for on-shelf product detection in a few short steps.

The next blog post in this series will cover testing our models in production using A/B testing and shadow deployment.

If you want to try the steps in these blog posts yourself, you can access the tutorials at this link and use the free inference servers available on the Azure Marketplace. Alternatively, you can download the free Wallaroo.AI Community Edition and use it with GitHub Codespaces.

Wallaroo.AI helps AI teams go from prototype to real-world results with incredible efficiency, flexibility, and ease - in the cloud, multi-cloud and at the edge.