This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Context

Azure OpenAI Service’s Provisioned Throughput Units (known as “PTUs”) have been all the rage over the past few months. Every enterprise customer has been wanting to get their hands on their own slice of Azure Open AI Service. With PTUs, they can run their GenAI workloads in production at scale with predictable latency and without having to worry about noisy neighbors. Customers of all sizes and from all verticals have been developing groundbreaking applications, usually starting with the Pay-as-you-go (PayGo) flavor of Azure Open AI. When the time comes to deploy an enterprise-grade application to production however, most rely on reserving capacity with PTUs. These are deployed within your own Azure Subscription and allow you to enjoy unencumbered access to the latest models from Open AI such as GPT-4 Turbo. Because PTUs are available 24/7 throughout the month for use, customers need to shift the paradigm of utilizing tokens into utilizing time when considering cost. With this shift often comes the challenge of knowing how to right-size their PTUs.

To aid in that exercise, Microsoft provides tools such as the PTU calculator within the AI Studio experience. These tools, however, make assumptions such as PTUs being able to handle peak load. While this could be a valid approach in many cases, it’s only one way of thinking about choosing the right size for a deployment. Customers often need to consider more variables, including sophisticated architectures to get the best return on their investment.

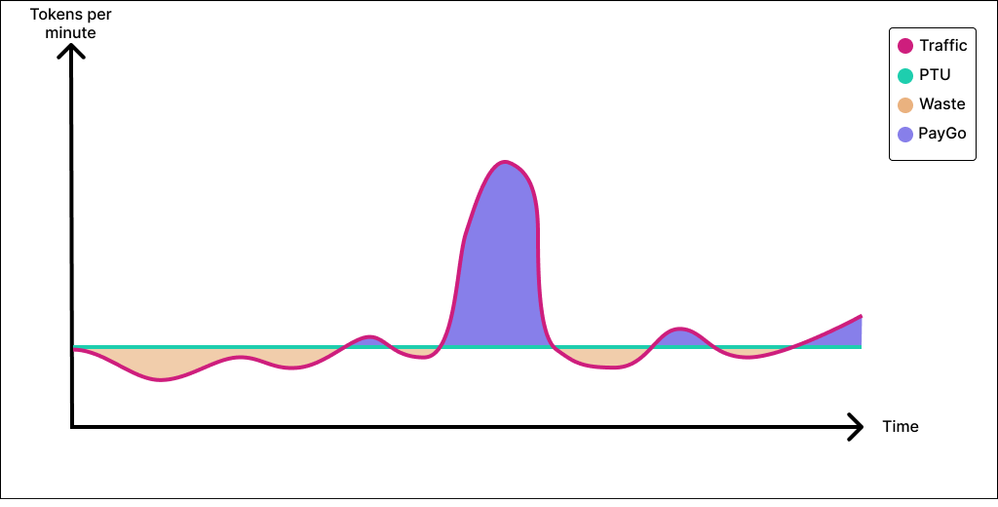

One pattern that we have seen emerge is the spillover, or bursting, pattern. With this pattern, you do not provision PTUs for peak traffic. Instead, you define the amount of PTU serviced traffic that the business can agree upon, and you route the overflow to a PayGo deployment. For example, your business may decide that it’s acceptable to have 90% of the traffic serviced by the PTU deployment with a known latency and to have 10% of overflow traffic serviced with unpredictable performance through a PayGo deployment. I’ll go into detail below on when to invoke this pattern more precisely, but if you are looking for a technical solution to implement this, you may check out this post: Enable GPT failover with Azure OpenAI and Azure API Management - Microsoft Community Hub .The twist is that depending on the profile of your application, this 10% degraded performance can save you north of 50% in unused PTU cost.

If as you’re reading this, you have found yourself in this predicament, you have come to the right place. In this blog post, we try to convey the message that PTUs done right are not necessarily expensive by characterizing customer’s scenarios anecdotally. The three application scenarios we will review are known as: The Unicorn, The No-Brainer, and The Problem Child.

The Unicorn

We will go quickly over the unicorn since nobody has ever seen it and it might not even exist. But just in case, the Unicorn application sends/receives token on a perfectly steady basis, weekdays, weekends, daytime, nighttime. If you ever have one of those, PTU makes perfect sense, you get maximum value and leave no crumb on the table. And if your throughput is meaningful in addition to being constant, you will likely also save lots of money compared to a PayGo deployment, in addition from reaping the predictable and low latency that comes with PTUs.

The No-Brainer

Next up is our No-Brainer application. The No-Brainer application profile has mild peaks and valleys. The application sends a constant baseline of tokens to the model, but perhaps there are a couple of peak hours during the day where the application sends a little more. In this case, you sure could provision your PTU deployment to cover the valley traffic and send anything extra to a PayGo deployment. However, in the No-Brainer application, the distance between our peak and valley is minimal, and, in this case, the juice might now be worth the squeeze. Do we want to add complexity to our application? Do we want to invest the engineering time and effort to add routing logic? Do we want to introduce possibly- degraded service to our application and perhaps not even be able to provision a lesser amount of PTUs increments? Again, it all comes down the distance between your peaks and valleys. If those are close, purchase enough PTU to cover for peak. No brainer.

The Problem Child

The Problem Child is that application where the traffic is bursty in nature and the variance in throughput is high. Perhaps the end of the quarter is near, and the company is behind on revenue, so every seller is hitting their sales copilot hard for a couple days in an attempt to bridge the gap to quota. How do we best cover the Problem Child with PTUs?

Option 1: Provision for peak

As we discussed above, our first inclination could be to provision for peak and that is also what most calculators will assume that you want to do so that you can cover all demand conservatively. In this instance, you maximize user experience because 100% of your traffic is covered by your PTU deployment and there is no such thing as degraded service. Everyone gets the same latency for the same request every time. However, this is the costly way to manage this application. If you cannot use your PTU deployment outside peak time, you are leaving PTU value on the table. Some customers are lucky enough to have both real-time and batch use cases. In this case, the real-time use cases utilize the PTU deployment during business hours; during downtime, the customer is then free to utilize the deployment for the batch inferencing use cases and still reap the PTU value. Other customers operate on several time zones and when one team goes offline for the day, somewhere 8 hours behind, another team comes online, and the application maintains a steady stream of tokens to the PTU deployment. But for a lot of customers, there isn’t a way to use the PTU deployment outside of peak time and provisioning for peak might not always be the soundest business decision. It depends on budgets, UX constraints and importantly, how narrow, tall and frequent the peak is.

Option 2: Provision for baseline

In option 2, the business is amenable to a trade-off. With this tradeoff, we bring our Azure Open AI cost significantly down at the expense of “some” user experience. And the hard part is to determine how much of the user experience we are willing to sacrifice and at what monetary gain. The idea here is to evaluate the application on a PayGo deployment and see how it performs. We can consider this to be our degraded user experience. If it so happens that our peaks are tall, narrow and rare, and if we are willing to say that it’s acceptable for a small slice of our traffic to experience degraded performance during peak time, then it is highly conceivable that sacrificing 5% of your requests by sending them to a Paygo deployment could yield, 30%, 40% maybe 50% savings compared to the option 1 and provisioning for peak.

The recognized savings will be a function of the area in green below.

Conclusion

PTUs can be perceived as expensive. But this could be that provisioning for peak is assumed. And this could be the best way to go if your business is such that you want to always ensure minimum latency and the best possible user experience. However, if you are willing to combine PTU with a little bit of PayGo (if your application profile lends itself to it), you could realize significant savings and reinvest the leftovers in your next GenAI project on Microsoft Azure... and also buy me a latte.

Much appreciation to my co-author Kit Papandrew