This post has been republished via RSS; it originally appeared at Microsoft Tech Community - Latest Blogs.

Hi!

It’s time to go back to AI and .NET, so today’s post is a small demo on how to run an LLM (large language model; this demo uses Phi-2) locally, and how to interact with the model using Semantic Kernel.

LM Studio

I’ve tested several products and libraries to run LLMs locally, and LM Studio is in my top 3. LM Studio is a desktop application that allows you to run open-source models locally on your computer. You can use LM Studio to discover, download, and chat with models from Hugging Face, or create your own custom models. LM Studio also lets you run a local inference server that mimics the OpenAI API, so you can use any model with your favourite tools and frameworks. LM Studio is available for Mac, Windows, and Linux, and you can download it from their website.

Source: https://github.com/lmstudio-ai

Running a local server with LM Studio

Here are the steps to run a local server with LM Studio:

- Launch LM Studio and search for an LLM from Hugging Face using the search bar. You can filter the models by compatibility, popularity, or quantization level.

- Select a model and click Download. You can also view the model card for more information about the model.

- Once the model is downloaded, go to the Local Server section and select the model from the drop-down menu. You can also adjust the server settings and parameters as you wish.

- Click Start Server to run the model on your local machine. You will see a URL that you can use to access the server from your browser or other applications. The server is compatible with the OpenAI API, so you can use the same code and format for your requests and responses.

- To stop the server, click Stop Server. You can also delete the model from your machine if you don’t need it anymore.
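Because the server speaks the OpenAI chat completions format, the steps above can be smoke-tested from a small .NET console app before bringing in any framework. The following is a minimal sketch, not part of the original sample. It assumes the server is listening on `http://localhost:1234`, that it answers with whichever model is currently loaded (so no `model` field is sent), and that the response follows the usual OpenAI shape (`choices[0].message.content`). The helper names `BuildRequestJson` and `ExtractReply` are hypothetical.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;

// Assumption: the LM Studio local server is running on port 1234.
const string endpoint = "http://localhost:1234/v1/chat/completions";

using var http = new HttpClient();
var body = new StringContent(
    BuildRequestJson("You are a helpful assistant.", "hi, who are you?"),
    Encoding.UTF8,
    "application/json");

using var response = await http.PostAsync(endpoint, body);
response.EnsureSuccessStatusCode();
Console.WriteLine(ExtractReply(await response.Content.ReadAsStringAsync()));

// Builds an OpenAI-style chat request with one system and one user message.
static string BuildRequestJson(string system, string user) =>
    JsonSerializer.Serialize(new
    {
        messages = new[]
        {
            new { role = "system", content = system },
            new { role = "user", content = user },
        },
        temperature = 0.7,
        stream = false,
    });

// Reads choices[0].message.content from an OpenAI-style response body.
static string ExtractReply(string json)
{
    using var doc = JsonDocument.Parse(json);
    return doc.RootElement
        .GetProperty("choices")[0]
        .GetProperty("message")
        .GetProperty("content")
        .GetString() ?? string.Empty;
}
```

If the call fails with a connection error, the server is most likely not started or is listening on a different port than the one assumed here.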

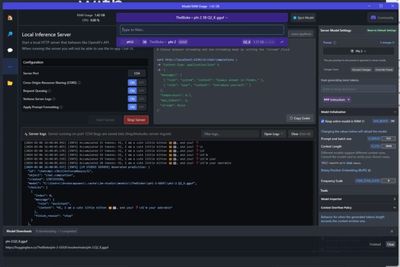

The following image shows the Phi-2 model running locally in a server on port 1234:

SLM: Phi-2

And hey, Phi-2, what is Phi-2?

Phi-2 is a small language model (SLM) developed by Microsoft Research that has 2.7 billion parameters and demonstrates outstanding reasoning and language understanding capabilities. It was trained on a mix of synthetic and web datasets for natural language processing and coding. It achieves state-of-the-art performance among base language models with fewer than 13 billion parameters and matches or outperforms models up to 25x larger on complex benchmarks. We can use Phi-2 to generate text or code, or chat with it, using Azure AI Studio or the Hugging Face platform. 😊

Here are some additional resources related to Phi-2:

- Phi-2: The surprising power of small language models. https://www.microsoft.com/en-us/research/blog/phi-2-the-surprising-power-of-small-language-models/

- Microsoft/phi-2 · Hugging Face. https://huggingface.co/microsoft/phi-2

Semantic Kernel and Custom LLMs

There is an amazing sample on how to create your own LLM service class to be used in Semantic Kernel. You can view the sample here: https://github.com/microsoft/semantic-kernel/blob/3451a4ebbc9db0d049f48804c12791c681a326cb/dotnet/samples/KernelSyntaxExamples/Example16_CustomLLM.cs

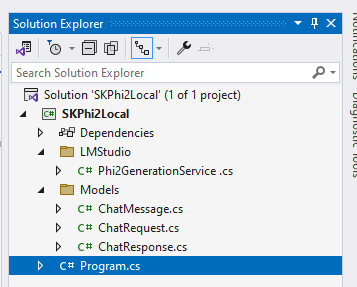

Based on that sample, I created a custom one that interacts with the Phi-2 model running in my local server with LM Studio. The following image shows the simple test project, which includes:

- LM Studio folder, with the Phi-2 Generation Service for Chat and Text Generation.

- Model folder to manage the request / response JSON messages.

- A main program with literally 10 lines of code.
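To make the shape of such a service concrete, here is a minimal sketch of a chat service that forwards the chat history to an OpenAI-compatible endpoint. It is not the code from the repository: the class name `LocalChatService` is hypothetical, it assumes the Semantic Kernel 1.x `IChatCompletionService` interface, it uses anonymous objects and `JsonElement` instead of dedicated request / response model classes, and it does not implement real streaming.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Net.Http;
using System.Net.Http.Json;
using System.Runtime.CompilerServices;
using System.Text.Json;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

// Hypothetical stand-in for a custom chat service; not the repository's class.
public sealed class LocalChatService : IChatCompletionService
{
    private static readonly HttpClient Http = new();

    // Assumption: full URL of the OpenAI-compatible chat endpoint.
    public string ModelUrl { get; set; } = "http://localhost:1234/v1/chat/completions";

    public IReadOnlyDictionary<string, object?> Attributes { get; } =
        new Dictionary<string, object?>();

    public async Task<IReadOnlyList<ChatMessageContent>> GetChatMessageContentsAsync(
        ChatHistory chatHistory,
        PromptExecutionSettings? executionSettings = null,
        Kernel? kernel = null,
        CancellationToken cancellationToken = default)
    {
        // Map the Semantic Kernel history to OpenAI-style role/content pairs.
        var request = new
        {
            messages = chatHistory.Select(m => new { role = m.Role.Label, content = m.Content }),
            stream = false,
        };

        using var response = await Http.PostAsJsonAsync(ModelUrl, request, cancellationToken);
        response.EnsureSuccessStatusCode();

        var json = await response.Content.ReadFromJsonAsync<JsonElement>(
            cancellationToken: cancellationToken);
        var reply = json.GetProperty("choices")[0]
            .GetProperty("message")
            .GetProperty("content")
            .GetString();

        return new List<ChatMessageContent> { new(AuthorRole.Assistant, reply) };
    }

    public async IAsyncEnumerable<StreamingChatMessageContent> GetStreamingChatMessageContentsAsync(
        ChatHistory chatHistory,
        PromptExecutionSettings? executionSettings = null,
        Kernel? kernel = null,
        [EnumeratorCancellation] CancellationToken cancellationToken = default)
    {
        // Simplification: the full reply is emitted as a single chunk.
        var messages = await GetChatMessageContentsAsync(
            chatHistory, executionSettings, kernel, cancellationToken);
        foreach (var message in messages)
        {
            yield return new StreamingChatMessageContent(message.Role, message.Content);
        }
    }
}
```

A class like this can be registered in the kernel builder in the same way as any other `IChatCompletionService` implementation. Dedicated model classes for the JSON messages become worthwhile once you need more of the response than the reply text.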

This is the sample code of the main program. As you can see, it is very simple: it points the service at the local server, registers it in the kernel, and runs a short chat.

```csharp
using Microsoft.Extensions.DependencyInjection;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using SKPhi2Local.LMStudio;

// Phi-2 in LM Studio
var phi2 = new Phi2GenerationService();
phi2.ModelUrl = "http://localhost:1234/v1/chat/completions";

// semantic kernel builder
var builder = Kernel.CreateBuilder();
builder.Services.AddKeyedSingleton<IChatCompletionService>("phi2Chat", phi2);
var kernel = builder.Build();

// init chat
var chat = kernel.GetRequiredService<IChatCompletionService>();
var history = new ChatHistory();
history.AddSystemMessage("You are a useful assistant that replies using a funny style and emojis. Your name is Goku.");
history.AddUserMessage("hi, who are you?");

// print response
var result = await chat.GetChatMessageContentsAsync(history);
Console.WriteLine(result[^1].Content);
```

You can check the complete solution in this public repository on GitHub: https://aka.ms/repo-skcustomllm01

Best,

Bruno