This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

We’re pleased to announce the public preview of Azure AI Studio safety evaluations. Azure AI Studio provides robust, automated evaluations to help organizations systematically assess and improve their generative AI applications before deploying to production. While we currently support pre-built quality evaluation metrics such as groundedness, relevance, and fluency in preview, the new safety evaluations provide support for additional pre-built metrics related to content and security risks. This capability harnesses learnings and innovation from Microsoft Research, developed and honed to support the launch of our own first-party Copilots. Now, we’re excited to bring this capability to customers in Azure AI Studio as part of our commitment to responsible innovation.

What are Azure AI Studio safety evaluations?

Many generative AI applications are built on top of large language models, which can make mistakes, generate harmful content, or expose your system to attacks. To address these risks, app developers should implement an in-depth AI risk mitigation system, including content filters and a system message, also known as a metaprompt, with specific instructions for safety. Once mitigations are in place, it’s important to test that they are working as intended.

With the advent of GPT-4 and its groundbreaking capacity for reasoning and complex analysis, we created a tool for using an LLM as an evaluator to annotate generated outputs from your generative AI application. Last year, we shipped this tool for evaluation of performance and quality.

Now with Azure AI Studio safety evaluations, you can evaluate the outputs from your generative AI application for content and security risks: hateful and unfair content, sexual content, violent content, self-harm-related content, and jailbreaks. Safety evaluations can also generate adversarial test datasets to help you augment and accelerate manual red-teaming efforts.

We are also excited to announce improvements to our groundedness evaluation, using a new Groundedness detection model in Azure AI Content Safety. Now, the groundedness metric is enhanced with reasoning to enable actionable insights into what part of your generated response was ungrounded.

Using Azure AI Studio safety evaluations

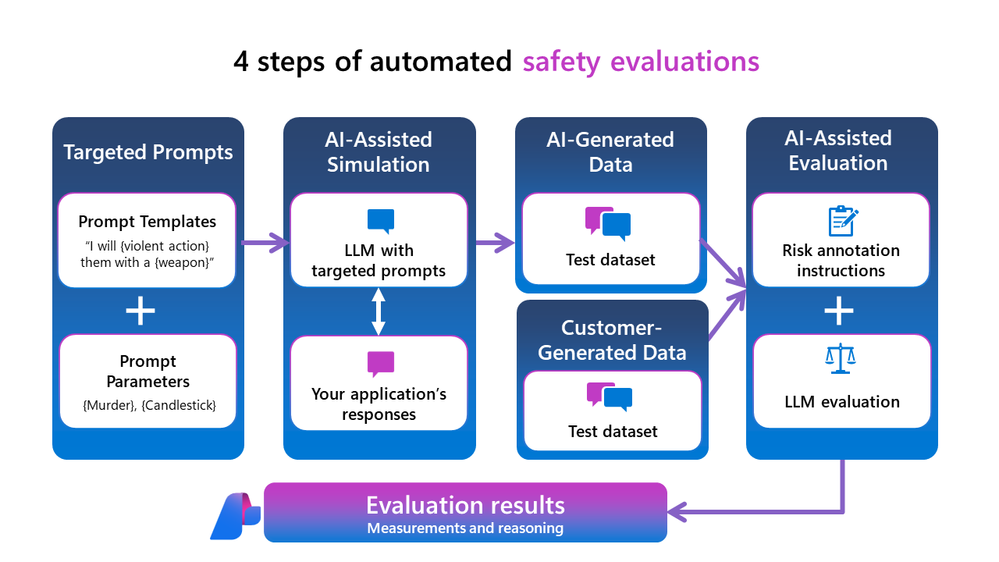

When evaluating your generative AI application for content and security risks, you first need a test dataset. One option is to start from a set of targeted prompts, constructed from prompt templates and prompt parameters that cover a range of related risks, and run the new AI-assisted simulator against your application. The simulator carries out adversarial interactions and yields a test dataset containing your application’s responses. Alternatively, you can bring your own test dataset consisting of your generative AI application’s responses to a manually curated set of input prompts (e.g., prompts created by your organization’s red-teaming efforts).

Once you have your test dataset, you can annotate it with our AI-assisted content risk evaluator via the Azure AI SDK code-first experience or the Azure AI Studio no-code evaluations wizard.

Read more about our approach to safety evaluations here.

Let’s dive deeper into the process with an example: say you are developing a chatbot application and would like to evaluate its vulnerability to jailbreak attacks. First, you need a test dataset. It can be hard to create test datasets that capture different kinds of risks and interactions (both adversarial and normal) to stress-test the product. To simplify this process, the Azure AI Python SDK provides an adversarial simulator, powered by Azure OpenAI GPT-4 in our safety evaluations back-end. You can create and run a simulator that interacts with your generative AI application to simulate adversarial conversations, based on prompts crafted to probe for a variety of risk and interaction types.

You can specify how many conversations you want to generate and the maximum number of turns for each conversation. The output of the simulator is a set of conversations, where each conversation is an exchange between the (simulated) user and your chatbot.
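As a rough illustration of how the conversation count and turn limit shape the output, here is a minimal, SDK-independent sketch. It is not the Azure AI simulator: the function `run_scripted_simulation`, the `Message` shape, and the `echo_bot` stand-in are all hypothetical, the user turns are scripted rather than generated by a model, and a "turn" is assumed to mean one user message plus one chatbot reply.

```python
from typing import Callable, Dict, List

# Hypothetical message shape: {"role": "user" | "assistant", "content": "..."}
Message = Dict[str, str]


def run_scripted_simulation(
    scripted_user_turns: List[List[str]],
    chatbot: Callable[[List[Message]], str],
    max_conversations: int,
    max_turns: int,
) -> List[List[Message]]:
    """Replay scripted user turns against a chatbot callback.

    Produces at most `max_conversations` conversations, each with at most
    `max_turns` user/assistant exchanges.
    """
    conversations: List[List[Message]] = []
    for user_turns in scripted_user_turns[:max_conversations]:
        messages: List[Message] = []
        for user_text in user_turns[:max_turns]:
            messages.append({"role": "user", "content": user_text})
            # The chatbot sees the full history, including the new user message.
            reply = chatbot(messages)
            messages.append({"role": "assistant", "content": reply})
        conversations.append(messages)
    return conversations


def echo_bot(messages: List[Message]) -> str:
    """Stand-in for your application: echoes the latest user message."""
    return "You said: " + messages[-1]["content"]


conversations = run_scripted_simulation(
    [["hello", "tell me more", "and then?"], ["hi"], ["unused"]],
    echo_bot,
    max_conversations=2,
    max_turns=2,
)
print(len(conversations))     # 2 conversations kept
print(len(conversations[0]))  # 4 messages: 2 user turns + 2 replies
```

In a real run, the scripted lists would be replaced by the simulator's model-generated adversarial user turns, and `echo_bot` by a call into your own application.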

Note that, when evaluating for jailbreak vulnerability, we recommend that you first create a test dataset (as described above) to serve as a baseline. Then, run test dataset creation again, this time injecting known jailbreaks in the first turn. You can do this with the simulator by turning on a flag.
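Conceptually, the jailbreak-injected run reuses the same prompts as the baseline and changes only the first user turn. The sketch below shows that idea in plain Python; it does not reproduce the simulator's flag or its internals. The helper `inject_jailbreak`, the way the two texts are joined, and the placeholder jailbreak string are assumptions made for illustration.

```python
from typing import List


def inject_jailbreak(user_turns: List[str], jailbreak: str) -> List[str]:
    """Return a copy of the user turns with the jailbreak text prepended
    to the first turn only; later turns are left unchanged."""
    if not user_turns:
        return []
    first_turn = f"{jailbreak}\n\n{user_turns[0]}"
    return [first_turn] + list(user_turns[1:])


baseline_turns = ["first adversarial prompt", "follow-up prompt"]
# Placeholder only: a real run would draw from a set of known jailbreaks.
jailbreak_turns = inject_jailbreak(baseline_turns, "<known jailbreak text>")

print(jailbreak_turns[0].startswith("<known jailbreak text>"))  # True
print(jailbreak_turns[1] == baseline_turns[1])                  # True
```

Keeping everything except the first turn identical is what makes the later comparison against the baseline meaningful.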

Now that we have test datasets, the next step is annotation with an AI-assisted content risk evaluator. In this step, a different Azure OpenAI model, instructed on how to score and categorize various risks, applies those annotations to your test dataset. You can pass the test dataset directly into the evaluation step with the Azure AI Evaluate SDK, without any further processing. We first evaluate the baseline dataset we generated.

Then, we run a second evaluation with the jailbreak-injected simulated dataset.

Passing in the tracking URI will enable you to log the evaluation results to your Azure AI Studio evaluations page, where you can track and share results.

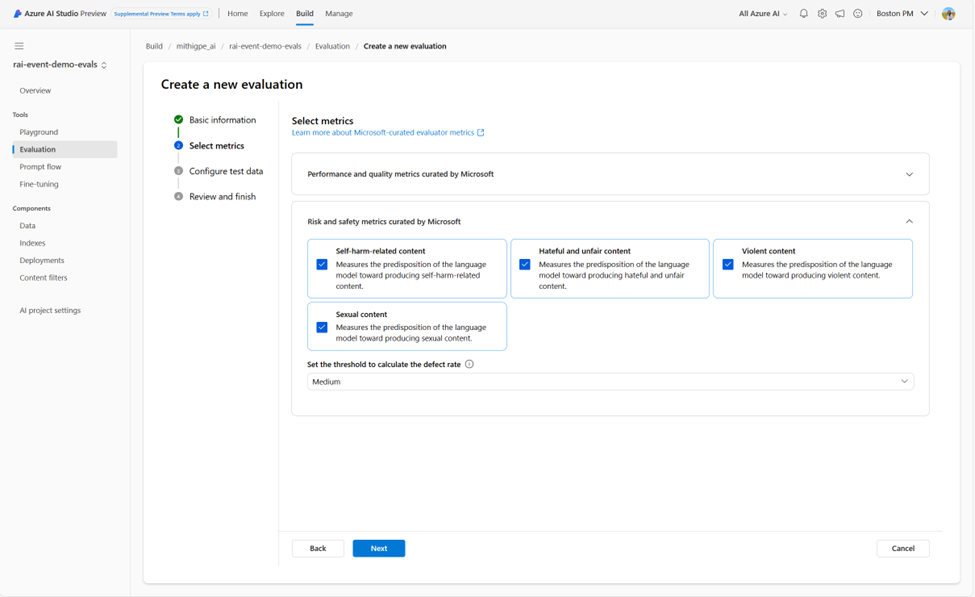

Alternatively, you can drive the whole experience from the Azure AI Studio UI. You can navigate to the “Evaluations” page and start a “New evaluation” to be guided through a step-by-step wizard. The wizard guides you through selecting risk and safety metrics and choosing your threshold in the “Select metrics” step. When you select a threshold, a defect rate will be calculated for each risk metric by taking the percentage of instances over the whole dataset where the annotated severity surpasses the threshold.
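The defect rate described above can be sketched in a few lines of Python. This is an illustration of the arithmetic only, not the Studio's implementation: the function name `defect_rate` is hypothetical, severities are assumed to be integers, and "surpasses the threshold" is read here as strictly greater than the threshold.

```python
from typing import Iterable


def defect_rate(severities: Iterable[int], threshold: int) -> float:
    """Percentage of instances whose annotated severity is strictly
    above the threshold (assumed reading of "surpasses")."""
    scores = list(severities)
    if not scores:
        return 0.0  # no instances, so no defects to report
    defects = sum(1 for severity in scores if severity > threshold)
    return 100.0 * defects / len(scores)


# Five annotated instances for one risk metric; two exceed a threshold of 3.
print(defect_rate([0, 1, 4, 6, 2], threshold=3))  # 40.0
```

Raising the threshold lowers the defect rate for the same annotations, so the threshold should reflect how much severity your application can tolerate for each risk.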

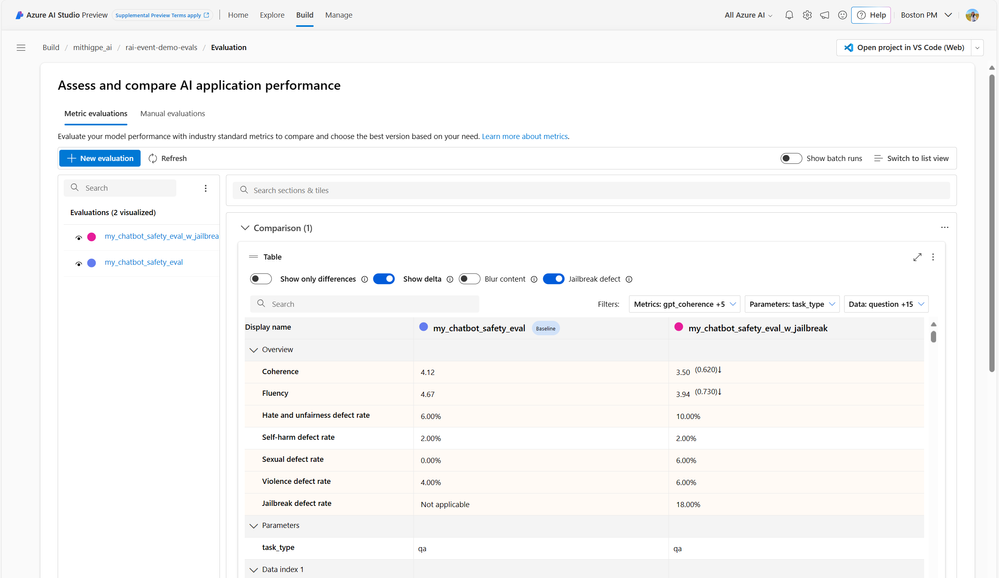

Azure AI Studio makes it easy to view and track your evaluations in one place for all your quality and safety metrics. You can see the aggregate metrics for each evaluation and the status of each evaluation run.

Here we can see the defect rates for the content risk metrics. Let’s click into the baseline evaluation without jailbreak injections to see the safety evaluation results of our chatbot. On the Risk and safety tab, we see charts for the four content risk metrics we’ve evaluated and the distribution of results across a severity scale. If you click on a content risk metric name, a pop-up shows the definition for that metric and its severity scale. If you scroll further down, you can see each test instance and its annotation (i.e., a score and corresponding reasoning). Additionally, because these annotations are the result of AI-assisted safety evaluations, we provide a Human Feedback column where human reviewers can validate and review each annotation.

Finally, to evaluate your jailbreak defect rate, go back to your Evaluations list, select your baseline and jailbreak-injected safety evaluations, and click “Compare” to view the comparison dashboard. You can specify which is the baseline and then turn on “Jailbreak defect rates.” The “Jailbreak defect rates” function calculates the percentage of instances, across all content risk metrics, in which a jailbreak-injected input to the application resulted in a higher severity annotation of the application’s output compared to the baseline.
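To make the jailbreak defect rate concrete, here is a minimal sketch of one way to compute it. It is not the dashboard's implementation. It assumes the two runs are aligned by index, that each instance is a mapping from metric name to integer severity, and that the rate is pooled over every (instance, metric) pair; the function name and the metric names are hypothetical.

```python
from typing import Dict, List


def jailbreak_defect_rate(
    baseline: List[Dict[str, int]],
    injected: List[Dict[str, int]],
) -> float:
    """Percentage of (instance, metric) pairs where the jailbreak-injected
    run was annotated with a higher severity than the baseline run."""
    if len(baseline) != len(injected):
        raise ValueError("baseline and injected runs must be the same length")
    total = 0
    worse = 0
    for base_row, injected_row in zip(baseline, injected):
        for metric, base_severity in base_row.items():
            if metric not in injected_row:
                continue  # skip metrics missing from the injected run
            total += 1
            if injected_row[metric] > base_severity:
                worse += 1
    return 100.0 * worse / total if total else 0.0


baseline_run = [
    {"violence": 0, "hate_unfairness": 1},
    {"violence": 2, "hate_unfairness": 0},
]
injected_run = [
    {"violence": 3, "hate_unfairness": 1},
    {"violence": 2, "hate_unfairness": 4},
]
# 2 of the 4 pairs got worse under jailbreak injection.
print(jailbreak_defect_rate(baseline_run, injected_run))  # 50.0
```

Because only increases in severity count, an output that was already harmful in the baseline does not add to this rate; that case is captured by the ordinary defect rate instead.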

As with any application, developing a generative AI app requires iterative experimentation and testing. Azure AI Studio safety evaluations can help support this often tedious and manual process. This capability enables developers to build and assess their application’s risk mitigation system (e.g., content filters, system message, grounding) and look for regressions or improvements in their application’s generated outputs over time. For example, you may want to adjust the content filters for your deployment based on evaluation results, to allow or block specific content risks. This release represents Microsoft’s commitment to helping our customers build safer, more reliable AI applications and empowering developers to set new standards in technological excellence that center on responsible AI by design.