This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

The Azure AI Responsible AI team is happy to announce the Public Preview of the ‘Risks & safety monitoring’ feature in Azure OpenAI Service. Microsoft is committed to ensuring that the development and deployment of AI systems are safe, secure, and trustworthy, and provides a set of tools to help make this possible. In addition to detecting and mitigating harmful content in near real time, risks & safety monitoring gives a clearer view of how content filter mitigations perform on real customer traffic and provides insights into potentially abusive end-users. With the risks & safety monitoring feature, customers can:

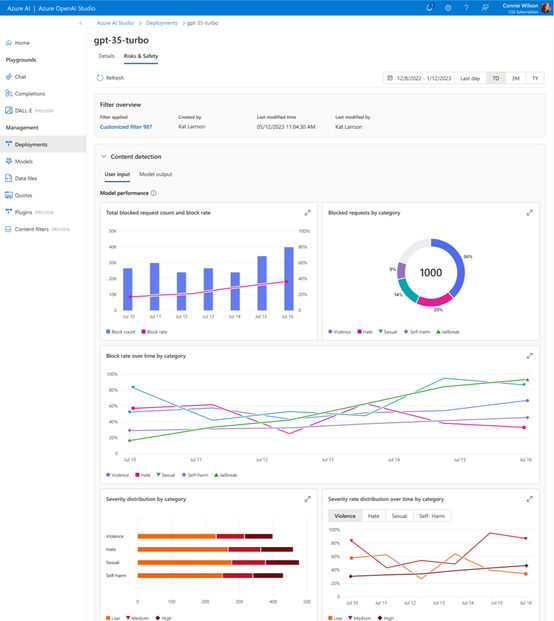

- Visualize the volume and ratio of user inputs and model outputs blocked by the content filters, with a detailed breakdown by severity and category. Developers or model owners can use this data to understand harmful-request trends over time and to inform adjustments to content filter configurations, blocklists, and application design.

- Assess whether the service is being abused by any end-users through “potentially abusive user detection”, which analyzes user behavior and the harmful requests sent to the model and generates a report for follow-up action.

Content detection insights:

As a developer or model owner, you need to meet the requirements for building a responsible app and ensure that end-users use it responsibly. Applying content filters helps mitigate the risk of generating inappropriate content. However, without monitoring, it is hard to know how the content filter performs on production traffic. The content filter configuration also needs to be balanced against the end-user experience so that it does not negatively affect benign usage. Continuously monitoring the key harmful-content metrics helps you keep track of the current status and make adjustments.

Customers can visualize key harmful content analysis metrics, including:

- Total blocked request count and block rate

- Blocked requests distribution by category

- Block rate over time by category

- Severity distribution by category

- Blocklist blocked request count and rate

Using these monitoring insights, customers can adjust content filter configurations, blocklists, and even the application design to serve specific business needs and Responsible AI principles.
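Azure OpenAI Studio computes these metrics for you. As a rough illustration of the arithmetic behind them, here is a minimal Python sketch over a hypothetical per-request record (`FilterEvent`). The record shape, the field names, and the choice of total requests as the denominator for every rate are assumptions, not the service's actual log schema or formula.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class FilterEvent:
    """Hypothetical per-request record; NOT an actual Azure OpenAI log schema."""
    day: str                         # e.g. "2024-03-01"
    blocked: bool                    # True if the content filter blocked the request
    category: Optional[str] = None   # harm category that triggered the block, if any
    severity: Optional[str] = None   # severity level of the flagged content, if any
    by_blocklist: bool = False       # True if a blocklist match caused the block


def summarize(events):
    """Compute the dashboard-style metrics listed above (assumed definitions)."""
    total = len(events)
    blocked = [e for e in events if e.blocked]
    # Denominator for per-day rates: all requests on that day (an assumption).
    per_day_total = Counter(e.day for e in events)
    per_day_cat = Counter((e.day, e.category) for e in blocked if e.category)
    blocklist_hits = sum(1 for e in blocked if e.by_blocklist)
    return {
        "blocked_count": len(blocked),
        "block_rate": len(blocked) / total if total else 0.0,
        "blocked_by_category": Counter(e.category for e in blocked if e.category),
        "block_rate_by_day_category": {
            key: n / per_day_total[key[0]] for key, n in per_day_cat.items()
        },
        "severity_by_category": Counter(
            (e.category, e.severity) for e in blocked if e.category and e.severity
        ),
        "blocklist_count": blocklist_hits,
        "blocklist_rate": blocklist_hits / total if total else 0.0,
    }
```

For example, four requests, three of them blocked and one of those by a blocklist, give a `block_rate` of 0.75 and a `blocklist_rate` of 0.25.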

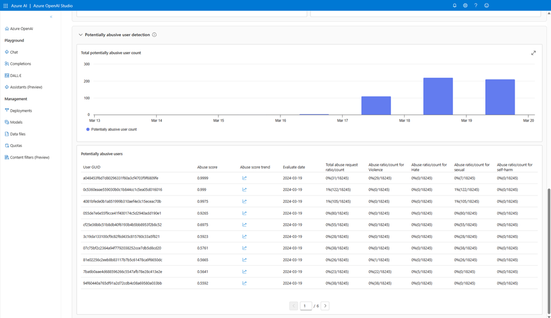

Potentially abusive user detection insights:

In addition to content-level monitoring insights, ‘potentially abusive user detection’ gives enterprise customers better visibility into potentially abusive end-users who repeatedly behave abusively or send harmful requests to the model.

‘Potentially abusive user detection’ analyzes requests sent to Azure OpenAI Service models that include ‘user’ field information. If any content from a user is flagged as harmful, the system combines that signal with the user's request behavior to judge whether the user is potentially abusive. A summarized report is then made available in Azure OpenAI Studio for follow-up action.
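Detection only works for requests that carry the `user` field. The sketch below shows where that field sits in a chat completions request body, built with Python's standard `urllib.request` against the Azure OpenAI REST endpoint pattern. The resource name, deployment name, key, and the `api_version` default are placeholders to replace with values valid for your resource; the function only builds the request and sends nothing.

```python
import json
import urllib.request


def build_chat_request(resource, deployment, api_key, messages, user_id,
                       api_version="2024-02-01"):
    """Build (but do not send) an Azure OpenAI chat completions request.

    resource, deployment, api_key, and api_version are placeholders; check
    the Azure OpenAI REST reference for versions your resource supports.
    """
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/chat/completions?api-version={api_version}")
    body = {
        "messages": messages,
        # Stable, non-sensitive end-user identifier; per-user analysis
        # relies on this field being present.
        "user": user_id,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
```

To actually send it, pass the returned object to `urllib.request.urlopen`.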

The potentially abusive user detection report consists of two major parts:

- The total potentially abusive user count over time, which shows customers the trend of service abuse.

- (Coming soon) A breakdown analysis of potentially abusive end-users, with each user's analyzed score and a breakdown of their harmful requests by category.

After reviewing the potentially abusive user detection report, developers or model owners can take response actions according to their service's code of conduct, helping ensure the responsible use of both LLM-based applications and the underlying foundation model.

Note:

- To enable this analysis, customers need to send “userGUID” information in the “user” field of their Azure OpenAI API calls. Customers should not include sensitive personal data in this field.

- The potentially abusive user detection feature is not the same as the abuse monitoring function in Azure OpenAI Service. The former analyzes abuse at the “user” level using the “user” field sent by customers; the latter analyzes abuse at the Azure OpenAI Service resource level.

- The potentially abusive user detection feature uses data in transit, and the analysis is fully automated using large language models.
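Because the “user” field should not carry personal data, one option is to send a stable pseudonym instead of an email address or account name. This minimal sketch is an assumption, not a documented Azure OpenAI requirement: it derives a GUID-shaped value from an internal ID with HMAC-SHA256 and a server-side secret, so the same user always maps to the same value while the raw ID stays in your application. The helper name `pseudonymous_user_guid` is hypothetical.

```python
import hashlib
import hmac
import uuid


def pseudonymous_user_guid(internal_user_id: str, secret_key: bytes) -> str:
    """Hypothetical helper: map an internal user ID to a stable GUID-shaped pseudonym.

    The same (ID, key) pair always yields the same value, so per-user
    analysis still works, but the raw ID never appears in the output.
    """
    digest = hmac.new(secret_key, internal_user_id.encode("utf-8"),
                      hashlib.sha256).digest()
    # Use the first 16 bytes (128 bits) of the MAC as the GUID's value.
    return str(uuid.UUID(bytes=digest[:16]))
```

The result is formatted like a GUID but is not a version-tagged RFC 4122 UUID. Rotating the secret key assigns every user a new identifier, which breaks continuity of per-user history.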

Technical highlights:

Potentially abusive user ranking and abuse report generation

- The abusive score for each user is calculated as a weighted combination of all annotation results across all harmful categories and all channels, including small machine learning models, large language model prompts, and others.

- Then either a threshold on the user-level abusive score is applied to the user list, or the top N users are taken as the final set of abusive users.

- An abuse report is generated with detailed analysis of user behavior and harmful request statistics, and is then visualized in Azure OpenAI Studio.

- Model administrators can take action based on the abuse report, considering both user behavior and request statistics.
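The post does not publish the actual weights, channels, or cut-off values. Assuming the “weighted combination” is a weighted sum of per-annotation scores, the scoring and selection steps above can be sketched as follows; the annotation tuple layout, the `(category, channel)` weight keys, and the function names are all hypothetical.

```python
import heapq
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical annotation: (user_id, harm_category, channel, score in [0, 1]).
Annotation = Tuple[str, str, str, float]


def abusive_scores(annotations: Iterable[Annotation],
                   weights: Dict[Tuple[str, str], float]) -> Dict[str, float]:
    """Per-user score as a weighted SUM of annotation scores (an assumption).

    weights maps (category, channel) to a weight; unweighted pairs count as 0.
    """
    scores: Dict[str, float] = defaultdict(float)
    for user, category, channel, value in annotations:
        scores[user] += weights.get((category, channel), 0.0) * value
    return dict(scores)


def select_abusive_users(scores: Dict[str, float],
                         threshold: Optional[float] = None,
                         top_n: Optional[int] = None) -> List[str]:
    """Apply either a score threshold or a top-N cut; highest score first."""
    if (threshold is None) == (top_n is None):
        raise ValueError("pass exactly one of threshold or top_n")
    if threshold is not None:
        picked = [u for u, s in scores.items() if s >= threshold]
        return sorted(picked, key=lambda u: scores[u], reverse=True)
    return heapq.nlargest(top_n, scores, key=scores.get)
```

Requiring exactly one of `threshold` or `top_n` mirrors the either/or choice described above.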

Try it out!

Follow this document to try out the new features, which are now available in the following regions:

- East US

- Switzerland North

- France Central

- Sweden Central

- Canada East