This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Context

The Azure OpenAI Service provides REST API access to OpenAI's advanced language models, including GPT-4, GPT-4 Turbo with Vision, GPT-3.5-Turbo, and the series of Embeddings models. These state-of-the-art models are highly adaptable and can be tailored to a variety of tasks such as generating content, summarizing information, interpreting images, enhancing semantic search, and converting natural language into code.

To fit everyone's different needs and technical requirements, the Azure OpenAI Service can be accessed through several interfaces: REST APIs for direct integration, a Python SDK for those who prefer working in a Python environment, and the Azure OpenAI Studio, which offers a user-friendly web-based interface for interacting with the models. This flexibility ensures that developers and businesses can leverage the power of OpenAI's language models in the way that best suits their project needs.

Developers using the Python SDK usually follow these steps:

- Obtain a unique key and endpoint from Azure.

- Configure these credentials as environment variables, ensuring they're readily available for the application.

- Implement the code snippet analogous to the one below to instantiate the Azure OpenAI client:

      import os
      from openai import AzureOpenAI

      # Read the endpoint and key from environment variables
      client = AzureOpenAI(
          azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
          api_key=os.getenv("AZURE_OPENAI_API_KEY"),
          api_version="2024-02-01",
      )

- Define a usage scenario that aligns with project objectives, such as chat, content completion, or assistant functionalities.

When developing a function app or web app, it's standard practice to store sensitive data such as keys in the local.settings.json file for local testing. When deploying to a cloud environment, these values are typically managed via app configuration settings. For enhanced security, engineers use Azure Key Vault to store secrets and access them from within their applications.

However, even with these security measures in place, vulnerabilities can still arise. Common risks include the accidental hardcoding of sensitive information within source code or configuration files, improper storage of secrets in unsecured locations, or inadequate rotation and revocation practices, potentially leading to extended periods of exposure.

These challenges highlight the difficulty developers face in managing the critical security components, such as secrets, credentials, certificates, and keys. Azure managed identities offer a robust solution, removing the burden from developers by automating the secure management of these elements.

Azure OpenAI can be used in many cloud-based scenarios, such as Azure Functions, App Services, or even Jupyter notebooks running on Databricks. In this tutorial, we will guide you through the creation of an Azure Function that establishes a secure connection to the OpenAI service using managed identities.

Preparation

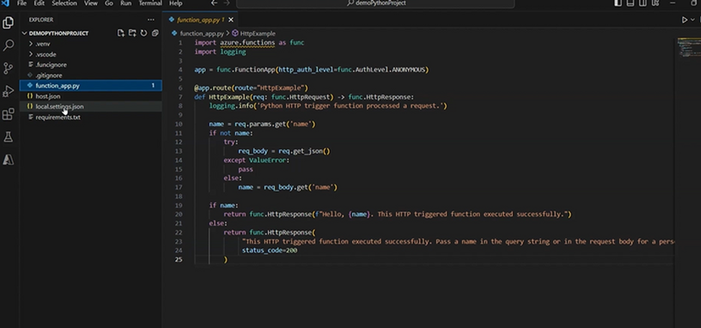

Begin by creating a basic Azure Function named process_image and deploying it to Azure. For comprehensive guidance, consult the documentation provided here. The function requires the HTTP trigger and the V2 programming model. The project should be organized like this:

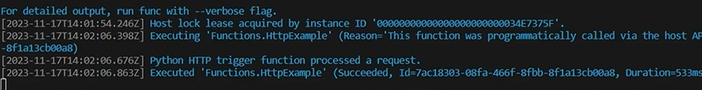

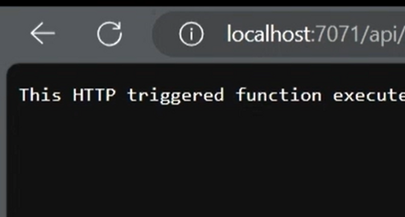

Test your function with Postman or a web browser to confirm that it works and that your environment is ready.

Next, create an Azure OpenAI resource and a model deployment by following the instructions in the provided reference. Giving the deployment the same name as the model makes it easier to identify.

Once both the deployment and the Azure OpenAI resource have been configured, make sure to record the deployment name and the OpenAI resource name for future reference.

Although this tutorial utilizes the GPT-4 vision model, the steps are equally applicable to any text-based model you may choose to implement.

Configuration

Let's proceed with configuring a Managed Identity. As previously discussed, developers can securely store secrets in Azure Key Vault or App Settings, but services still need a way to authenticate to Azure Key Vault to retrieve them. Managed Identities solve this by providing an automatically managed identity in Microsoft Entra ID, which applications can use to authenticate to any resource that supports Microsoft Entra authentication. Applications can then obtain Microsoft Entra tokens without managing sensitive credentials directly.

Managed identities come in two types, System-assigned and User-assigned:

- System-assigned. Some Azure resources let you enable a managed identity directly on the resource. When you enable a system-assigned managed identity, Microsoft Entra ID creates a service principal of a special type for the identity. The service principal is tied to the lifecycle of that Azure resource. When the Azure resource is deleted, Azure automatically deletes the service principal for you. By design, only that Azure resource can use this identity to get tokens from Microsoft Entra ID. You grant the managed identity access to one or more services. The name of the system-assigned service principal is always the same as the name of the Azure resource it is created for. For a deployment slot, the name of its system-assigned identity is <app-name>/slots/<slot-name>.

- User-assigned. You can also create a managed identity as a separate Azure resource. You can create a user-assigned managed identity and assign it to one or more Azure Resources. When you enable a user-assigned managed identity, Microsoft Entra ID creates a service principal, managed independently of the resources that use it. User-assigned identities can be used by multiple resources. You grant managed identity access to one or more services.
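In code, the two types differ mainly in how the credential is constructed. The following is a minimal sketch, assuming the `azure-identity` package; the helper names `credential_kwargs` and `make_credential` are hypothetical, and the client ID would be that of your own user-assigned identity.

```python
from typing import Optional


def credential_kwargs(client_id: Optional[str] = None) -> dict:
    """Keyword arguments for ManagedIdentityCredential.

    With no client ID the credential uses the system-assigned identity;
    a client ID selects one specific user-assigned identity.
    """
    return {"client_id": client_id} if client_id else {}


def make_credential(client_id: Optional[str] = None):
    # Imported lazily so this module loads even where azure-identity
    # is not installed (pip install azure-identity).
    from azure.identity import ManagedIdentityCredential

    return ManagedIdentityCredential(**credential_kwargs(client_id))
```

Calling `make_credential()` targets the system-assigned identity used in the rest of this guide; passing a client ID would switch to a user-assigned one without changing any other code.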

You need "Owner" permissions at the appropriate scope (your resource group or subscription) to create the required resources and manage role assignments.

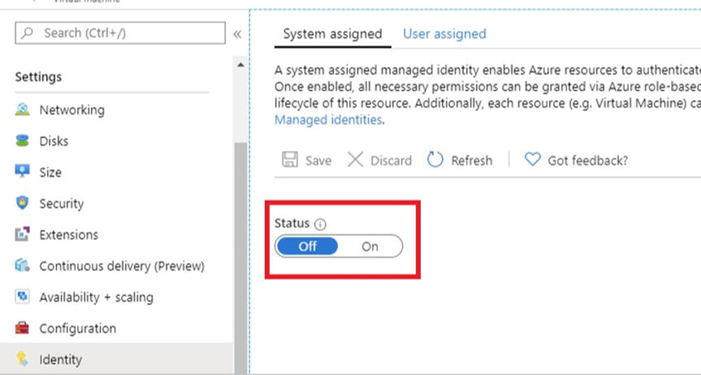

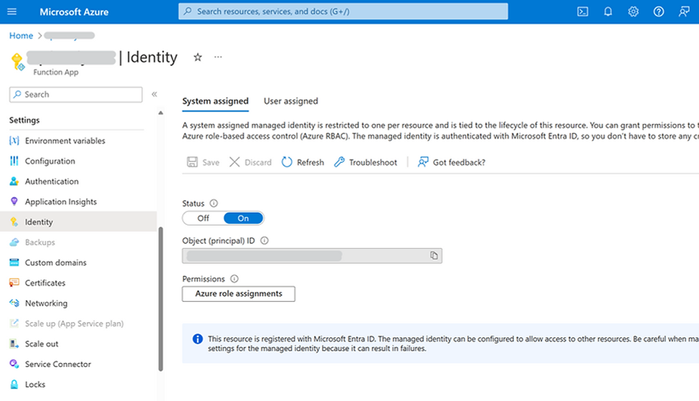

This guide shows how to create a system-assigned managed identity. Enabling a system-assigned managed identity is a one-click experience. You can do it when you create your function app or in the properties of an existing function app. Go to your function app and, in the left-hand menu, select Identity under the Settings section.

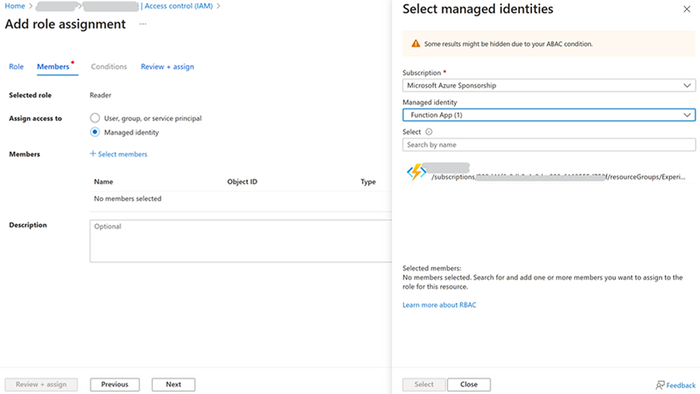

With managed identities for Azure resources, your application can get access tokens to authenticate to resources that use Microsoft Entra authentication. To make this work, grant the identity of your Function App access to the target resource, which is Azure OpenAI in this case. We assign the Reader role to the managed identity at the scope of the resource. Note that Reader is a control-plane role; if inference calls are rejected with a permission error, assign the Cognitive Services OpenAI User role, which grants data-plane access to the models.

Next comes the crucial step: creating a token to access the service. Install the azure-identity package in your virtual environment, import the library, and use it in your Azure Function to create a token.
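A minimal sketch of this step, assuming a recent `azure-identity` release (installed with `pip install azure-identity`) that provides `get_bearer_token_provider`. The helper name `make_token_provider` is hypothetical, and the scope string is the one commonly documented for Azure AI services.

```python
# Scope for Azure AI services, including Azure OpenAI.
COGNITIVE_SERVICES_SCOPE = "https://cognitiveservices.azure.com/.default"


def make_token_provider():
    """Return a callable that yields a Microsoft Entra token on demand."""
    # Imported lazily so the module loads even without azure-identity installed.
    from azure.identity import DefaultAzureCredential, get_bearer_token_provider

    # DefaultAzureCredential uses the managed identity when running in Azure
    # and falls back to developer credentials (for example Azure CLI) locally.
    credential = DefaultAzureCredential()
    return get_bearer_token_provider(credential, COGNITIVE_SERVICES_SCOPE)
```

No token is requested until the returned provider is actually called, so it can be created once and reused across invocations.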

Next, install the openai Python package and import it in the same manner.

Add two environment variables to your local.settings.json: one holding your endpoint URL and one, GPT4V_DEPLOYMENT, holding your deployment name. Then initialize your OpenAI client, authenticating with the token rather than an API key.
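The client setup could look roughly like the sketch below, assuming version 1.x of the `openai` package. `GPT4V_ENDPOINT` is a hypothetical variable name chosen for illustration, `load_settings` and `make_client` are hypothetical helpers, and the API version is carried over from the key-based snippet earlier in this post, so it may need adjusting for your model.

```python
import os
from typing import Callable, Mapping, Optional, Tuple


def load_settings(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (endpoint, deployment) read from the environment.

    GPT4V_ENDPOINT is a hypothetical variable name used for illustration.
    A missing variable raises KeyError right away instead of failing later.
    """
    env = os.environ if env is None else env
    return env["GPT4V_ENDPOINT"], env["GPT4V_DEPLOYMENT"]


def make_client(endpoint: str, token_provider: Callable[[], str],
                api_version: str = "2024-02-01"):
    # Imported lazily so the module loads even without openai installed.
    from openai import AzureOpenAI

    # The token provider takes the place of api_key, so no secret is needed.
    return AzureOpenAI(
        azure_endpoint=endpoint,
        azure_ad_token_provider=token_provider,
        api_version=api_version,
    )
```

The deployment name is not passed to the client itself; it is supplied later as the `model` argument of each request.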

You may have noticed that we still use the endpoint and the deployment name, but we don't need any secrets in the code anymore, which is a great advantage.

Sample Scenario

As an example, we'll build a simple service that examines an input image according to the user's instructions. To do this, we read the image from the HTTP request, store it in temporary storage (this step is necessary to create a valid data URL), and send the image to OpenAI.
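The core of such a service can be sketched as follows. This is an illustration under assumptions, not the original implementation: all function names are hypothetical, `client` is expected to be an `AzureOpenAI` instance, the `max_tokens` value is arbitrary, and wiring into the HTTP trigger is left out.

```python
import base64
import mimetypes
import os
import tempfile


def save_to_temp(image_bytes: bytes, filename: str) -> str:
    """Write the uploaded bytes to the temp directory and return the path."""
    suffix = os.path.splitext(filename)[1] or ".png"
    with tempfile.NamedTemporaryFile(suffix=suffix, delete=False) as tmp:
        tmp.write(image_bytes)
        return tmp.name


def to_data_url(path: str) -> str:
    """Encode an image file as a base64 data URL."""
    mime_type, _ = mimetypes.guess_type(path)
    with open(path, "rb") as image_file:
        encoded = base64.b64encode(image_file.read()).decode("ascii")
    return f"data:{mime_type or 'application/octet-stream'};base64,{encoded}"


def build_messages(instructions: str, data_url: str) -> list:
    """Chat payload with one text part and one image part."""
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": instructions},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }]


def analyze_image(client, deployment: str, instructions: str, path: str) -> str:
    """Send the image and instructions to the deployment; return the reply."""
    response = client.chat.completions.create(
        model=deployment,
        messages=build_messages(instructions, to_data_url(path)),
        max_tokens=500,  # assumed limit; tune for your scenario
    )
    return response.choices[0].message.content
```

Inside the function, the request body would be passed to `save_to_temp`, and the returned path to `analyze_image` together with the user's instructions. Removing the temporary file afterwards keeps the function's storage clean.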

Conclusion

This tutorial has demonstrated an alternative way to connect to Azure OpenAI that does not require sharing keys and is therefore better suited to production environments. The approach has several advantages. With managed identities, you don't have to handle credentials; in fact, the credentials are not even accessible to you. You can also use managed identities to authenticate to any resource that supports Microsoft Entra authentication, including your own applications. Finally, managed identities are free to use, which matters if you have multiple applications that use OpenAI. I hope this helps you.