This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Ingesting AWS Commercial and GovCloud data into Azure Government Sentinel

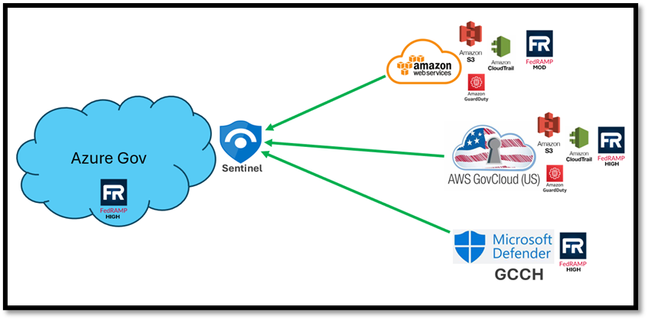

This post focuses on how to ingest AWS Commercial and AWS GovCloud data into a Microsoft Sentinel workspace in Azure Government.

The following diagram gives a high-level view of the architecture we walk through in this part of the blog series.

Overview of ingestion architecture

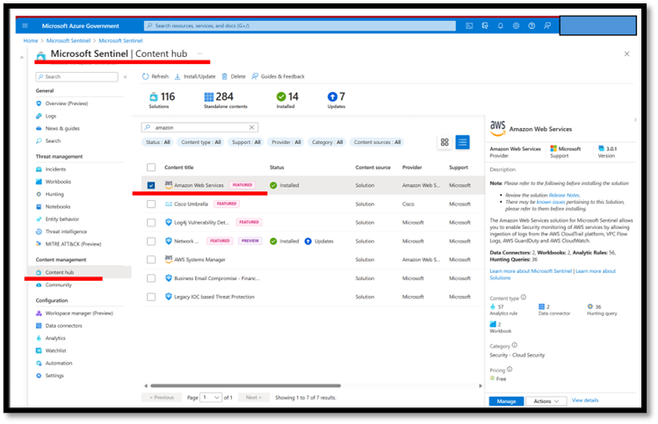

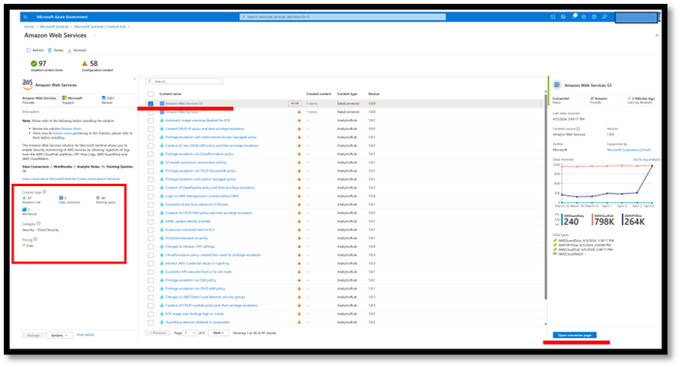

Installing the Solution in Sentinel from the Content Hub

Microsoft Sentinel’s Content Hub in Azure Government includes a solution for AWS. This solution bundles the data connector with relevant analytics (rules), workbooks (dashboards), and hunting queries into a single package. To make this content available in your Sentinel workspace, first navigate to Content Hub and install the solution. Once the solution is installed, its content is available for use and a “Manage” button appears for the solution.

Sentinel Content Hub

When you click the “Manage” button, the solution page opens, allowing you to manage the various components of the solution.

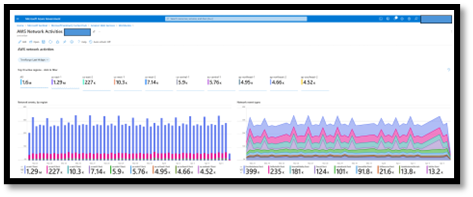

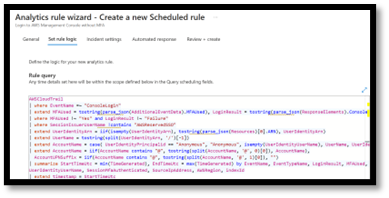

In addition to the data connector, the solution includes analytics rules, workbooks, and hunting queries. These components help you ingest the data into the platform and quickly get insights from it, reducing time to value. Here is an example of one of the workbooks focused on VPC data, along with the underlying KQL logic of one of the out-of-the-box analytics rules.

Examples of AWS workbook and analytic query

Installing the Connector

On the main AWS solution page in Content Hub, select the connector and open the connector page.

AWS Solution Page

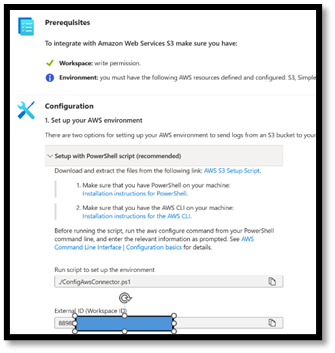

This opens the connector page, which describes the connector, the prerequisites for configuring it, and step-by-step guidance for completing the configuration.

AWS Connector configuration

This connector includes a PowerShell script to help automate the configuration process. To get started, download the included PowerShell script.

When running the script, use Windows PowerShell rather than PowerShell 7.x. For details, see Differences between Windows PowerShell and PowerShell 7.x.

Make note of the Log Analytics workspace ID; you will need it when running the ConfigureAwsConnector.ps1 script. To further simplify this process, an AWS CloudFormation template is in development. That information will be added to this blog series upon release.

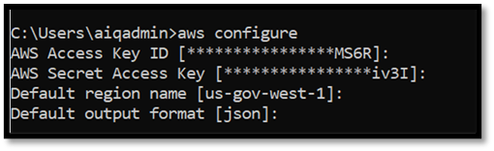

The configuration script requires the AWS CLI to create the connections in AWS. To set it up, follow Amazon’s AWS CLI installation instructions.

After installing the AWS CLI, run the aws configure command with the access key for your AWS IAM user and your default region. The configuration script will confirm these settings when it runs. If this process returns any errors, check the *_accessKeys.csv file that you downloaded from AWS when you set up the access key, and make sure the region is correct. In the AWS Console, the region displays as US-Gov-West, but you must enter it as us-gov-west-1 when you run the aws configure command, or the script will throw an error.
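To catch the region mismatch before the script runs, you can use a small check like the following. This is a minimal Python sketch and not part of the Microsoft script. It assumes the AWS CLI keeps its settings in an INI-style config file (~/.aws/config by default), with a [default] section and [profile NAME] sections. It also hard-codes only the two AWS GovCloud (US) region codes. The names normalize_region and configured_region are hypothetical helpers introduced for illustration.

```python
import configparser
from typing import Optional

# Hypothetical helper mapping: console-style labels -> CLI region codes.
# Only the two AWS GovCloud (US) regions are covered.
GOVCLOUD_REGIONS = {
    "us-gov-west": "us-gov-west-1",
    "us-gov-east": "us-gov-east-1",
}


def normalize_region(label: str) -> str:
    """Convert a label such as 'US-Gov-West' to a CLI region code such as 'us-gov-west-1'."""
    key = label.strip().lower()
    if key in GOVCLOUD_REGIONS.values():
        return key  # already a valid region code
    if key in GOVCLOUD_REGIONS:
        return GOVCLOUD_REGIONS[key]
    raise ValueError(f"Unrecognized AWS GovCloud region label: {label!r}")


def configured_region(config_text: str, profile: str = "default") -> Optional[str]:
    """Return the region set for a profile in the text of an AWS CLI config file."""
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    # The default profile is [default]; named profiles are [profile NAME].
    section = "default" if profile == "default" else f"profile {profile}"
    if not parser.has_section(section):
        return None
    return parser.get(section, "region", fallback=None)


if __name__ == "__main__":
    sample = "[default]\nregion = US-Gov-West\noutput = json\n"
    region = configured_region(sample)
    print(region)                    # the console-style label that was entered
    print(normalize_region(region))  # the region code aws configure expects
```

To check your real settings, pass the contents of your own config file to configured_region. If normalize_region raises an error, the value is not one of the two GovCloud region codes.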

Verified AWS access configuration

Next, run the ConfigureAwsConnector.ps1 script. Depending on your organization’s policies, you may not be able to run it until you use Set-ExecutionPolicy to change the Windows PowerShell execution policy so that unsigned scripts can run. You can check your current policies by running:

PS C:\> Get-ExecutionPolicy -List

If you need to change the policy, run this command to allow unsigned scripts to run:

PS C:\> Set-ExecutionPolicy -ExecutionPolicy Unrestricted

Once you have finished setting up the connections, set the execution policy back to its previous value. For more information about setting execution policies, see the Execution Policies page in the PowerShell documentation.

Now that you have:

- Set up the AWS CLI

- Set up the AWS IAM user and access key

- Downloaded the ConfigureAwsConnector.ps1 script

- Set the PowerShell execution policy, if required

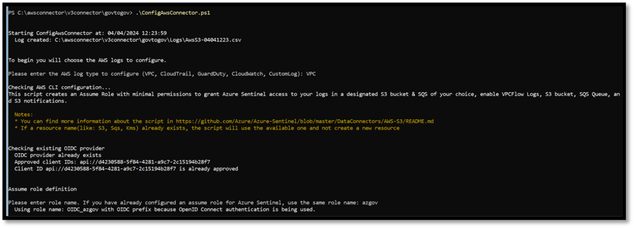

You are ready to run the ConfigureAwsConnector.ps1 script. In this example, we are connecting VPC Flow Logs.

The script asks which type of log you want to set up and which AWS role to set up for the OpenID Connect (OIDC) connection.

ConfigureAwsConnector script

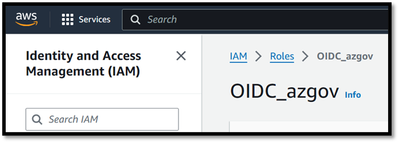

If you’re running the script again for additional log types and want to reuse the same role, enter the role name with the OIDC_ prefix that the script added the first time it ran. In the example above, the role entered was azgov, which created the role OIDC_azgov in AWS.

OIDC Role in AWS IAM

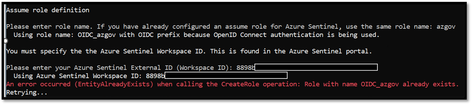

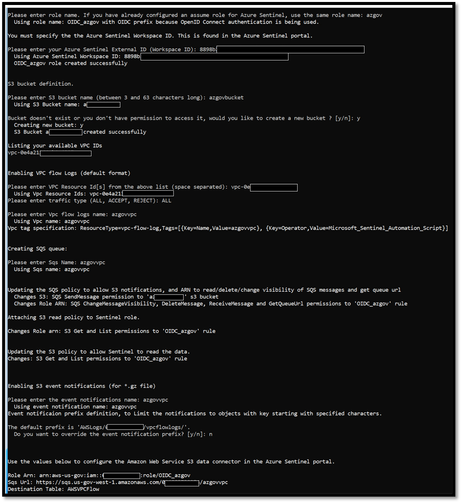

On subsequent runs of the ConfigureAwsConnector.ps1 script, enter OIDC_azgov for the assume role definition. If the role name does not match, the script returns an error.

Assume Role Error
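This naming rule is easy to trip over, so here is a tiny Python sketch of it. It encodes only the behavior described in this post, where the script prefixes the role name you enter with OIDC_. It is not taken from the script itself. The names role_name_for_rerun and check_existing_role are hypothetical. The list of existing role names could come from the IAM console or from the aws iam list-roles command.

```python
from typing import Iterable

OIDC_PREFIX = "OIDC_"  # prefix the script adds on the first run, per this walkthrough


def role_name_for_rerun(first_run_name: str) -> str:
    """Return the assume role name to enter when rerunning the script."""
    if first_run_name.startswith(OIDC_PREFIX):
        return first_run_name  # already the full IAM role name
    return OIDC_PREFIX + first_run_name


def check_existing_role(first_run_name: str, existing_roles: Iterable[str]) -> str:
    """Return the rerun name, or raise if no IAM role with that name exists."""
    candidate = role_name_for_rerun(first_run_name)
    if candidate not in set(existing_roles):
        # Entering a name that doesn't exist is what leads to the assume role error.
        raise LookupError(f"No IAM role named {candidate!r}; expect an assume role error")
    return candidate


if __name__ == "__main__":
    print(role_name_for_rerun("azgov"))  # OIDC_azgov
```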

After you enter the assume role definition name, the script asks for the ID of the Log Analytics workspace where Sentinel is installed. It also asks for AWS configuration information such as the S3 bucket, SQS queue, VPC ID(s), and Event Notification name. For the Event Notification, I accepted the default prefix.

ConfigureAwsConnector script

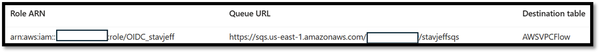

When the script finishes, it displays the connection data required on the Sentinel connector configuration page. Save these values:

Role Arn: arn:aws-us-gov:iam::##########:role/OIDC_azgov

Sqs Url: https://sqs.us-gov-west-1.amazonaws.com/###########/azgovvpc

Destination Table: AWSVPCFlow

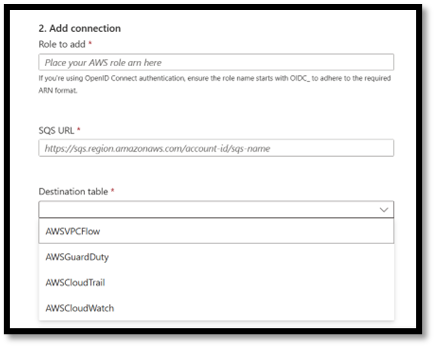
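If you script your setup, you may prefer to capture these three values programmatically rather than copying them by hand. The following Python sketch assumes the script prints them as Key: Value lines, exactly as shown above. parse_script_summary is a hypothetical helper. The set of destination tables reflects the AWS S3 connector’s tables as we understand them (AWSCloudTrail, AWSGuardDuty, AWSVPCFlow, AWSCloudWatch), so check the connector page for the current set.

```python
from typing import Dict

REQUIRED_KEYS = ("Role Arn", "Sqs Url", "Destination Table")
# Assumed set of destination tables offered by the AWS S3 connector.
KNOWN_TABLES = {"AWSCloudTrail", "AWSGuardDuty", "AWSVPCFlow", "AWSCloudWatch"}


def parse_script_summary(text: str) -> Dict[str, str]:
    """Extract Role Arn, Sqs Url, and Destination Table from the script output."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        # Split on the first colon only; ARNs and URLs contain more colons.
        key, sep, value = line.partition(":")
        if sep and key.strip() in REQUIRED_KEYS:
            values[key.strip()] = value.strip()
    missing = [k for k in REQUIRED_KEYS if k not in values]
    if missing:
        raise ValueError(f"Missing values in script output: {missing}")
    if values["Destination Table"] not in KNOWN_TABLES:
        raise ValueError(f"Unexpected destination table: {values['Destination Table']!r}")
    return values


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID, not a real one.
    output = (
        "Role Arn: arn:aws-us-gov:iam::123456789012:role/OIDC_azgov\n"
        "Sqs Url: https://sqs.us-gov-west-1.amazonaws.com/123456789012/azgovvpc\n"
        "Destination Table: AWSVPCFlow\n"
    )
    for key, value in parse_script_summary(output).items():
        print(f"{key} -> {value}")
```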

On the AWS connector page in Sentinel, enter the Role ARN and SQS URL you saved, and select the table that corresponds to the log type, in this case the AWSVPCFlow table.

Sentinel AWS connector configuration

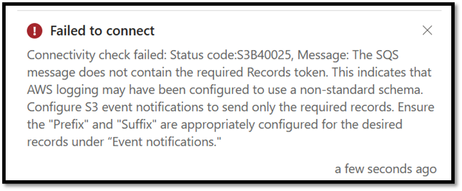

Then select Connect. The first time you connect, you may see an error (ID25).

Error Message

This is caused by a test message that the new SQS queue sends on first connection. The test message does not contain the record the connector expects, so the connector throws the error. Microsoft is working on either clarifying the error message or suppressing the error. When you click Connect a second time, the SQS queue sends a correctly formatted message and the connection succeeds.
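To see why the first attempt can fail, it helps to compare the two kinds of message. When S3 event notifications are first configured, Amazon S3 publishes a test message whose Event field is s3:TestEvent and which has no Records array. A real notification instead carries a Records list that points at the new log object. The following Python sketch shows that distinction. It is an illustration only, not the connector’s actual logic. It assumes S3 delivers notifications straight to SQS rather than wrapping them in an SNS envelope. The names classify_notification and object_keys are hypothetical.

```python
import json
from typing import List
from urllib.parse import unquote_plus


def classify_notification(body: str) -> str:
    """Label an SQS message body as 'test', 'records', or 'unknown'."""
    message = json.loads(body)
    if message.get("Event") == "s3:TestEvent":
        return "test"  # sent when the notification is set up; references no log file
    records = message.get("Records")
    if isinstance(records, list) and records:
        return "records"
    return "unknown"


def object_keys(body: str) -> List[str]:
    """Return the S3 object keys referenced by a notification (empty for test events)."""
    message = json.loads(body)
    # Keys in S3 event notifications are URL-encoded, so decode them.
    return [unquote_plus(r["s3"]["object"]["key"]) for r in message.get("Records", [])]


if __name__ == "__main__":
    test_event = json.dumps({"Service": "Amazon S3", "Event": "s3:TestEvent", "Bucket": "example-bucket"})
    print(classify_notification(test_event), object_keys(test_event))
```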

Connected VPC Flow Logs

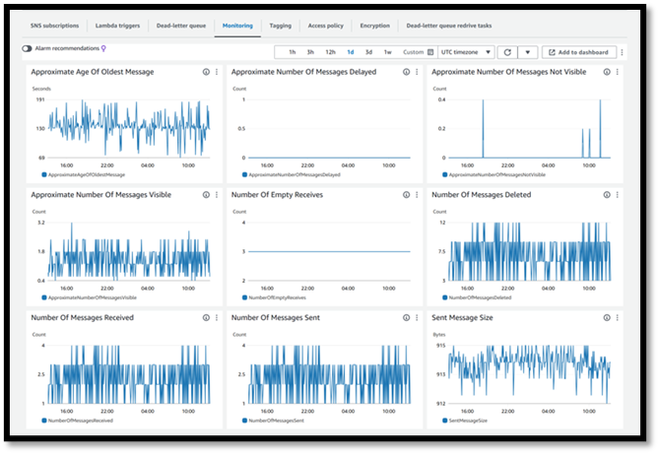

You can repeat this process for every other AWS log you want to bring into Sentinel. If you bring in all the logs from AWS and AWS GovCloud, your connector page will look similar to the following. This example uses the same OIDC role for the AWS Commercial logs and different OIDC roles for AWS GovCloud.

Connected AWS logs from AWS & AWS GovCloud
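When you mix AWS Commercial and AWS GovCloud connections, a common slip is pairing a role from one partition with a queue in the other. GovCloud ARNs use the aws-us-gov partition and commercial ARNs use aws, as the Role Arn in the script output shows. The following Python sketch checks a Role ARN and SQS URL pair for that mismatch. It also flags a role and queue in different accounts, because in this walkthrough the script creates both in the same account. It assumes queue URLs of the form https://sqs.REGION.amazonaws.com/ACCOUNT/QUEUE and ignores other partitions such as aws-cn. The helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import List
from urllib.parse import urlparse


@dataclass
class Connection:
    partition: str
    role_account: str
    role_name: str
    queue_region: str
    queue_account: str
    queue_name: str


def parse_connection(role_arn: str, sqs_url: str) -> Connection:
    """Split a Role ARN and an SQS queue URL into their parts."""
    # ARN layout: arn:PARTITION:iam::ACCOUNT:role/NAME (IAM ARNs have an empty region).
    parts = role_arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "iam" or not parts[5].startswith("role/"):
        raise ValueError(f"Not an IAM role ARN: {role_arn!r}")
    partition, role_account, resource = parts[1], parts[4], parts[5]
    role_name = resource.rsplit("/", 1)[-1]  # drop any IAM path

    url = urlparse(sqs_url)
    host = url.netloc.split(".")          # e.g. ["sqs", "us-gov-west-1", "amazonaws", "com"]
    path = url.path.strip("/").split("/")  # e.g. ["ACCOUNT", "QUEUE"]
    if url.scheme != "https" or len(host) < 4 or host[0] != "sqs" or len(path) != 2:
        raise ValueError(f"Unexpected SQS queue URL: {sqs_url!r}")
    return Connection(partition, role_account, role_name, host[1], path[0], path[1])


def consistency_problems(conn: Connection) -> List[str]:
    """Return human-readable problems; an empty list means the pair looks consistent."""
    problems = []
    expected = "aws-us-gov" if conn.queue_region.startswith("us-gov-") else "aws"
    if conn.partition != expected:
        problems.append(f"role partition {conn.partition!r} does not match queue region {conn.queue_region!r}")
    if conn.role_account != conn.queue_account:
        problems.append("role and queue are in different accounts")
    return problems


if __name__ == "__main__":
    # Placeholder account ID; role and queue names come from the example in this post.
    gov = parse_connection(
        "arn:aws-us-gov:iam::123456789012:role/OIDC_azgov",
        "https://sqs.us-gov-west-1.amazonaws.com/123456789012/azgovvpc",
    )
    print(consistency_problems(gov))
```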

For Microsoft’s instructions, see Connect Microsoft Sentinel to Amazon Web Services to ingest AWS service log data on the Microsoft Sentinel documentation site.

Verifying and querying AWS data

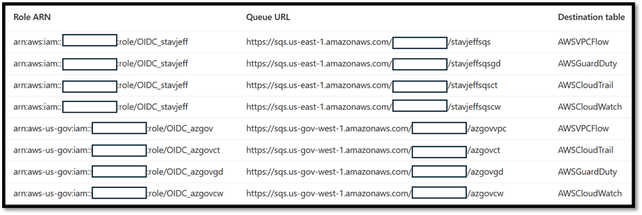

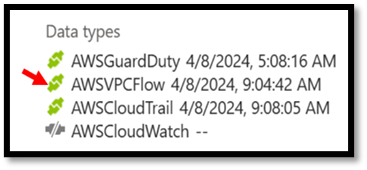

A few minutes after you make the connection(s), the connection status changes to green and data appears in the graph on the connector page.

Sentinel AWS Connection

If you don’t see data, try refreshing the page. You can also verify the AWS side of the connection: check the SQS queue and confirm that messages are flowing through it. Here is an example of the SQS queue that our VPC Flow Logs are using.
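The SQS monitoring page is the easiest place to look. If you prefer a scriptable check, the queue’s approximate message counts give a quick snapshot. The Python sketch below reads the ApproximateNumberOfMessages attribute (messages waiting) and the ApproximateNumberOfMessagesNotVisible attribute (messages received but not yet deleted). It works with any client that has a boto3-style get_queue_attributes method. With boto3 installed, you would pass boto3.client("sqs", region_name="us-gov-west-1"). StaticSqsClient is a hypothetical stand-in that lets the code run without AWS credentials. Sentinel consumes messages as it ingests them, so low counts can be normal, and the monitoring graphs remain the better way to confirm flow over time.

```python
from typing import Any, Dict

ATTRIBUTES = ["ApproximateNumberOfMessages", "ApproximateNumberOfMessagesNotVisible"]


def queue_depth(sqs_client: Any, queue_url: str) -> Dict[str, int]:
    """Return approximate visible and in-flight message counts for a queue."""
    response = sqs_client.get_queue_attributes(QueueUrl=queue_url, AttributeNames=ATTRIBUTES)
    attrs = response.get("Attributes", {})
    # SQS returns attribute values as strings, so convert them to integers.
    return {name: int(attrs.get(name, "0")) for name in ATTRIBUTES}


class StaticSqsClient:
    """Hypothetical offline stand-in that mimics the shape of a boto3 SQS response."""

    def __init__(self, visible: int, in_flight: int) -> None:
        self._attrs = {
            "ApproximateNumberOfMessages": str(visible),
            "ApproximateNumberOfMessagesNotVisible": str(in_flight),
        }

    def get_queue_attributes(self, QueueUrl: str, AttributeNames: list) -> dict:
        return {"Attributes": {k: v for k, v in self._attrs.items() if k in AttributeNames}}


if __name__ == "__main__":
    url = "https://sqs.us-gov-west-1.amazonaws.com/123456789012/azgovvpc"  # placeholder account
    print(queue_depth(StaticSqsClient(visible=3, in_flight=1), url))
```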

AWS SQS queue monitoring page

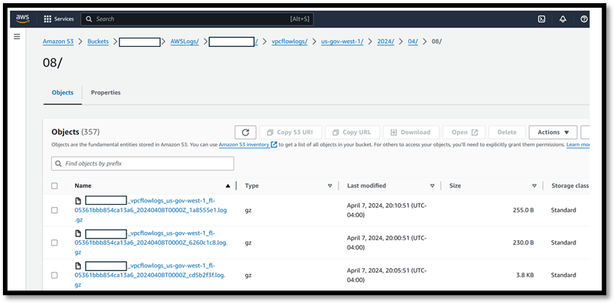

You can also look in the S3 bucket and verify that you are seeing log files. Here is an example of the same VPCFlow logs in the S3 bucket.
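With many flow log files, counting objects per day can be quicker than browsing the bucket. The Python sketch below counts files for a list of object keys. It assumes the default S3 layout for VPC flow logs, without Hive-compatible prefixes or hourly partitions: AWSLogs/ACCOUNT/vpcflowlogs/REGION/YYYY/MM/DD/FILE.log.gz, optionally under a bucket prefix. The keys could come from the S3 console or, with boto3, from the list_objects_v2 paginator. files_per_day is a hypothetical helper, and it skips keys in any other layout.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Tuple

# Assumed default layout; an optional bucket prefix may precede AWSLogs/.
KEY_PATTERN = re.compile(
    r"AWSLogs/(?P<account>\d{12})/vpcflowlogs/(?P<region>[a-z0-9-]+)/"
    r"(?P<year>\d{4})/(?P<month>\d{2})/(?P<day>\d{2})/[^/]+\.log\.gz$"
)


def files_per_day(keys: Iterable[str]) -> Dict[Tuple[str, str], int]:
    """Count flow log files per (region, YYYY-MM-DD); keys that don't match are ignored."""
    counts: Counter = Counter()
    for key in keys:
        match = KEY_PATTERN.search(key)
        if match:
            day = f"{match['year']}-{match['month']}-{match['day']}"
            counts[(match["region"], day)] += 1
    return dict(counts)


if __name__ == "__main__":
    # Hypothetical keys with a placeholder account ID.
    sample_keys = [
        "AWSLogs/123456789012/vpcflowlogs/us-gov-west-1/2024/01/15/a.log.gz",
        "AWSLogs/123456789012/vpcflowlogs/us-gov-west-1/2024/01/15/b.log.gz",
        "notes/readme.txt",
    ]
    print(files_per_day(sample_keys))
```

A day with zero files for a region you expect to see is a good hint to check the flow log configuration on the AWS side.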

AWS S3 bucket log data

Once you’ve verified that data is arriving in Sentinel, here are a couple of quick ways to look at it in Log Analytics.

On the connector page, click the data type to open Log Analytics, which shows whether there is data in the AWSVPCFlow table.

Verifying AWS data flowing into log analytics

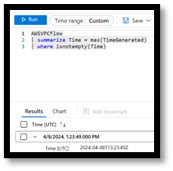

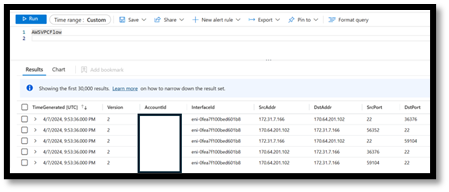

This is a great way to check that there is data in the table, but it doesn’t show you what that data is. To take a quick look at the data itself, query the table(s). Here are examples for the AWSVPCFlow and AWSGuardDuty tables.

Data in log tables

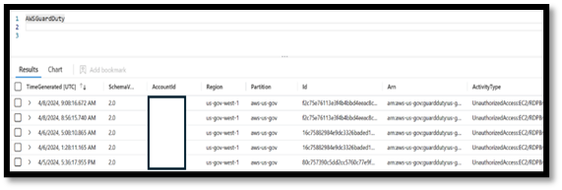

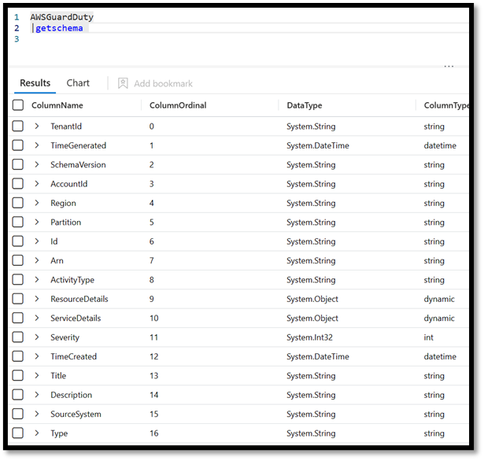
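The Python sketch below makes the raw records less opaque. It splits one VPC flow log record in AWS’s default (version 2) format into named fields, roughly the information that ends up in the AWSVPCFlow table. It assumes the default 14-field format, because custom formats add or reorder fields. The Sentinel table also uses its own column names, so use the schema view to map fields to columns. parse_flow_record is a hypothetical helper, and the sample record is illustrative. A - in a record marks a field with no value.

```python
from typing import Dict, Optional, Union

# Field order of the default (version 2) VPC flow log format.
DEFAULT_FIELDS = [
    "version", "account-id", "interface-id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log-status",
]
INT_FIELDS = {"version", "srcport", "dstport", "protocol", "packets", "bytes", "start", "end"}

Value = Optional[Union[int, str]]


def parse_flow_record(line: str) -> Dict[str, Value]:
    """Map one space-separated default-format record to named fields."""
    values = line.split()
    if len(values) != len(DEFAULT_FIELDS):
        raise ValueError(f"Expected {len(DEFAULT_FIELDS)} fields, got {len(values)}")
    record: Dict[str, Value] = {}
    for name, raw in zip(DEFAULT_FIELDS, values):
        if raw == "-":
            record[name] = None  # no value, e.g. in NODATA or SKIPDATA records
        elif name in INT_FIELDS:
            # Ports, IANA protocol number (6 = TCP), counters, and Unix timestamps.
            record[name] = int(raw)
        else:
            record[name] = raw
    return record


if __name__ == "__main__":
    sample = ("2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
              "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK")
    rec = parse_flow_record(sample)
    print(rec["srcaddr"], "->", rec["dstaddr"], rec["dstport"], rec["action"])
```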

Another query that can help you understand your AWS data uses the getschema operator in Log Analytics (for example, AWSVPCFlow | getschema). Here are examples of the schema for the AWSVPCFlow and AWSGuardDuty tables.

AWS tables Schema

Summary

In this part of the series, we walked through setting up the CLI and scripts required to establish the connection from AWS into Sentinel, verifying that data is flowing, and querying that data. We encourage you to explore the workbooks, analytics, and hunting queries that are installed with the AWS solution. To learn more about these concepts, here are some helpful links:

- Visualize your data using workbooks in Microsoft Sentinel | Microsoft Learn

- Detect threats with analytics rule templates in Microsoft Sentinel | Microsoft Learn

- Hunting capabilities in Microsoft Sentinel | Microsoft Learn

In the following parts of this series, we’ll walk through the same process for ingesting AWS and AWS GovCloud data into Azure Commercial. We’ll also show how to use the new CloudFormation template that is in development, and finally cover ingesting GCP data into Microsoft Sentinel.