This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Recent developments in AI require ever more computing power to train models. During training, a model learns from data how to perform specific tasks. Recent models like GPT, which have billions of parameters, require memory-intensive processing and power-intensive training, which in turn requires massive infrastructure. Thus, AI model training has two main components: data and compute. In this post, I explain training by drawing simple comparisons with human learning, discuss why the cloud can be a perfect fit for training AI models, and outline some future directions that I believe will be important.

Inferencing and training

Let's start by briefly comparing the training of AI models with the human learning process. A major difference is that the training of current models is massive and relatively fast, whereas human learning is a much more diverse and incremental process that spans a long period of time. Consider the development of a child's brain: the learning process includes observations and discoveries across a wide range of fields over many years.

AI models are trained on massive datasets, through which they learn to perform specific tasks. The training dataset contains broad knowledge, which enables the models to exhibit behaviour that can be described as common sense. The objective of this base training is to instil such common sense, so that the model shows human-like behaviour based on general, shared knowledge. In contrast, the human brain undergoes a different kind of training by nature, where the subjects are diverse and the environment is constantly changing. Each day, we learn from a multitude of small observations across various fields.

This comparison leads to two key concepts: multi-modality and model refinement.

Multi-modality & Domain-specific Finetuning

Because the brain is complex, we are not sure exactly how different pieces of information correlate within it. Take a simple example: how does reading books improve math skills? We don't know the exact correlation between them, but we do know that one exists. Skills or insights gained in one area can be beneficial when performing tasks in another. This underscores the importance of multi-modality as a direction, since it enhances a model's ability to reason about an answer. Reasoning is also related to the common sense mentioned earlier. A model can exhibit better behaviour when it is trained on diverse tasks from different domains. Human learning, as noted above, is a slow, incremental process across various fields over a long period, so we can draw some parallels between multi-modal, multi-domain training and human learning across different fields.

Optimized Fine-tuning

As trained models should be adapted for production use, model refinement is becoming an increasingly important topic. Today, model refinement is achieved through a process called fine-tuning. Through fine-tuning, the model is adapted to specific domains by changing/reconfiguring its parameters. To make it easier to understand, let's consider an example of human learning. Imagine an exam period where you have exams on different subjects, with one week between each exam. Ideally, you should focus your preparation on the subject of the next exam each week. At the exam, this enables you to answer questions related to that subject more effectively. Fine-tuning is similar in some respects, as the model is fine-tuned specifically for a domain to perform tasks better within that domain. When you fine-tune your model on physics datasets, it is expected to answer physics-related questions better. Similarly, when you fine-tune on Azure Q&A, you can expect your model to answer questions in the Azure context more accurately.

Here we are talking about customizing the model: it is refined by adjusting its parameters, so the base model does not need to be retrained from scratch. Note, however, that fine-tuning, although lighter than full training, is still a compute- and cost-intensive process.

A significant improvement in this area came with LoRA (Low-Rank Adaptation). The idea is to keep the base model's weights frozen and train only a small set of additional low-rank parameters, so that only a very small fraction of the parameters (<1%) is trained. This requires much less memory than full fine-tuning. The added parameters, known as LoRAs, act as adapters associated with a task. The resulting models with adapters can be viewed as experts that are effective in their respective tasks, and they are referred to as LoRA Experts.

LoRA: Low-Rank Adaptation of Large Language Models - Microsoft Research
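To make the "<1%" figure more tangible, here is a minimal NumPy sketch of a single linear layer with a LoRA adapter. The layer size, the rank, and the names `W`, `A`, `B`, and `forward` are my own assumptions for illustration; this is not the implementation of any particular library, only the low-rank idea in its simplest form.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer size and adapter rank, chosen only for illustration.
d_out, d_in, rank = 512, 512, 2

# Frozen base weight: it is never updated during fine-tuning.
W = rng.standard_normal((d_out, d_in))

# Trainable low-rank factors. B starts at zero, so the adapter
# contributes nothing before any training has happened.
A = rng.standard_normal((rank, d_in)) * 0.01
B = np.zeros((d_out, rank))

def forward(x, W, A, B, scale=1.0):
    """Base layer output plus the low-rank correction x @ (B @ A).T."""
    return x @ W.T + scale * (x @ A.T) @ B.T

x = rng.standard_normal((4, d_in))
y = forward(x, W, A, B)

# Only A and B would be trained; W stays fixed.
base_params = W.size
lora_params = A.size + B.size
trainable_fraction = lora_params / base_params
print(f"trainable fraction: {trainable_fraction:.2%}")
```

With these assumed sizes, the adapter holds 2,048 parameters against 262,144 in the frozen weight, which is below one percent. In a real model the fraction depends on the chosen rank and on which layers receive adapters.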

To draw an analogy with the exam period example mentioned earlier, imagine you have one exam each week on a different subject. For each exam, you need specific knowledge of that subject, so ideally you prepare the week before in order to understand and solve the exam problems related to it. I see many similarities with the concept of LoRA here. Using different LoRA adapters provides expert models that can be used for task- or domain-specific applications. Based on a user query, the appropriate LoRA adapter can be selected from a library. This allows the use of an expert model (LoRA Expert) for the task, where it is expected to perform well. The analogy here is a person who has prepared for a week for a biology exam and then takes the biology exam.
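Selecting an adapter from a library based on the user query could look roughly like the sketch below. The adapter names, the keyword sets, and the simple keyword-overlap rule are all hypothetical; a production router would more likely use embeddings or a classifier, which I leave out to keep the idea visible.

```python
import re

# Hypothetical adapter library: adapter id -> trigger keywords.
ADAPTER_LIBRARY = {
    "lora-physics": {"force", "energy", "quantum", "velocity"},
    "lora-biology": {"cell", "gene", "protein", "enzyme"},
    "lora-azure-qa": {"azure", "subscription", "vm", "blob"},
}

def select_adapter(query: str, default: str = "base-model") -> str:
    """Return the adapter whose keywords overlap most with the query.

    Falls back to the plain base model when no keyword matches.
    """
    words = set(re.findall(r"[a-z]+", query.lower()))
    best_id, best_hits = default, 0
    for adapter_id, keywords in ADAPTER_LIBRARY.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_id, best_hits = adapter_id, hits
    return best_id

print(select_adapter("How does an enzyme bind to a protein?"))
print(select_adapter("What is the weather today?"))
```

The first query matches two biology keywords and is routed to the biology adapter, while the second matches nothing and stays on the base model.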

Training LoRA models is very energy-efficient, as it reduces the number of trainable parameters. This introduces a new concept: centralized training for the base model (like GPT) and decentralized training for the Expert models (LoRA adapters).

The initial large-scale training required will be done centrally to generate a base model. This base model will then be used with relatively small refinements, known as LoRA adapters, in a decentralized manner. This approach is logical by nature because the fine-tuning of the model is mostly done for production, where the model is expected to serve a specific domain that requires domain-specific adaptation. This does not necessarily require large-scale centralized training.

Earlier, we discussed multi-modality and training across different domains. I don't want to delve more deeply into LoRA here, but I would like to highlight a recent work on the multi-task adaptation of LoRA, which adds only ~2.5% more parameters to the model. See [2311.11501] MultiLoRA: Democratizing LoRA for Better Multi-Task Learning (arxiv.org)

Transparent checkpointing

During training, things can go wrong. Ideally, you don't want the training to have to restart, as this may not be possible due to time and cost constraints. Transparent checkpointing is a promising step forward. It allows checkpoints to be set during the model training process. If an error occurs during training, you can revert to the previous checkpoint without needing to restart from the beginning. This is a significant cost-saving improvement and also makes it easier to swap models at the hardware level. Since the model state can be saved, training can be paused and another model, along with its state, can be loaded. This offers a lot of flexibility, especially in the cloud. I believe that in the future, it might even provide interesting insights into training itself, as it allows observation of the saved states at different training milestones.
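Transparent checkpointing happens below the application, so the training code itself does not need to change. To illustrate only the save-and-resume behaviour described above, the following sketch does the same thing at the application level, which is a different and simpler technique. The file format, the function names, and the toy "weight" update are assumptions made purely for illustration.

```python
import json
import os
import tempfile

def save_checkpoint(path, state):
    # Write to a temporary file and rename it, so a crash in the middle
    # of a write cannot leave a corrupted checkpoint behind.
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)

def load_checkpoint(path):
    # Start from scratch when no checkpoint exists yet.
    if not os.path.exists(path):
        return {"step": 0, "weight": 0.0}
    with open(path) as f:
        return json.load(f)

def train(path, total_steps, checkpoint_every, fail_at=None):
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated failure at step {step}")
        state["weight"] += 0.1          # stand-in for one training update
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state

ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
try:
    train(ckpt, total_steps=100, checkpoint_every=10, fail_at=57)
except RuntimeError:
    pass                                 # the first run crashes on purpose

resumed_from = load_checkpoint(ckpt)["step"]
final = train(ckpt, total_steps=100, checkpoint_every=10)
print(resumed_from, final["step"])
```

The simulated crash at step 57 loses only the work done since the checkpoint at step 50, and the second call continues from there instead of from step 0.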

- It has been demonstrated as a GPU-adapted version of CRIU (Checkpoint/Restore In Userspace) for Linux. With CRIU, it is possible to freeze a running container and save its CPU-side state.

- Checkpointing acts as a kind of insurance, since no complete training restart is needed. Still, a decision has to be made on how frequently to set checkpoints. This is a trade-off between the cost of more frequent checkpoints and the cost of the work lost when training fails.
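The trade-off in the last point can be made concrete with a classic first-order model that is not part of the original talk: Young's approximation, which estimates the best interval as the square root of twice the checkpoint cost times the mean time between failures. The numbers below (a 60-second checkpoint and one failure per day on average) are hypothetical.

```python
import math

def overhead_fraction(interval_s, checkpoint_cost_s, mtbf_s):
    """Approximate fraction of time lost to checkpoints plus rework.

    First term: time spent writing checkpoints.
    Second term: on average half an interval of work is lost per failure.
    """
    return checkpoint_cost_s / interval_s + interval_s / (2 * mtbf_s)

def young_interval(checkpoint_cost_s, mtbf_s):
    """Young's first-order estimate of the optimal checkpoint interval."""
    return math.sqrt(2 * checkpoint_cost_s * mtbf_s)

# Hypothetical values: 60 s per checkpoint, one failure per day on average.
cost, mtbf = 60.0, 24 * 3600.0
best = young_interval(cost, mtbf)

for interval in (best / 4, best, best * 4):
    loss = overhead_fraction(interval, cost, mtbf)
    print(f"interval {interval / 60:6.1f} min -> overhead {loss:.2%}")
```

Under these assumptions the estimate lands a little under an hour. Checkpointing much more often wastes time on writes, and checkpointing much less often wastes time on repeated work after a failure.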

Why checkpoint optimization for large model training matters? - MS Learn

Energy Consumption

The topic of energy consumption and the efficiency of AI systems is very important. To create a sustainable platform, the underlying hardware must be efficient in the first place. Two key aspects to consider are the efficiency and the utilization of the infrastructure: the hardware should be inherently efficient, and it should also be used efficiently.

The benefits of training AI models on Azure Cloud Infrastructure include scalability. We are talking about multiple GPUs that do not operate on the same board, or even in the same rack. They are spread out because of power consumption and heat. The infrastructure has a global scheduler across one global hardware fleet of CPUs and GPUs. Thus, it can scale from modest training sessions up to an OpenAI supercomputing system.

Before the Azure AI infrastructure was announced, it was expected that the infrastructure would be mostly used for training. However, unexpectedly, the trend appears to be different, showing a comparable utilization of the platform for both training and inference. Inference is an ongoing process that also requires a significant amount of power.

The power consumption is often correlated with the complexity of the task. It is not easy to measure. As of today, we can track the input and output tokens of the model. You can think of tokens as pieces of words, where approximately 750 words are represented by ~1000 tokens. Pricing is also based on the number of tokens. When we consider this, each prompt can lead to a task with varying complexity. Energy usage is highly correlated with complexity, as a more complex prompt will require more memory and hardware resources. As energy becomes more of a critical aspect and even a limitation, assessing the prompt in terms of complexity might help in finding the most efficient hardware fit and provide insights about the required power.
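The word-to-token rule of thumb above translates into a quick estimate like the following. The ratio comes from the text, while the prices per 1,000 tokens are placeholders that I made up; actual pricing depends on the model and the provider.

```python
# Rule of thumb from the text: ~750 words correspond to ~1000 tokens.
TOKENS_PER_WORD = 1000 / 750

def estimate_tokens(word_count: int) -> int:
    """Rough token estimate for English text of the given length."""
    return round(word_count * TOKENS_PER_WORD)

def estimate_cost(prompt_words, completion_words,
                  price_in_per_1k, price_out_per_1k):
    """Approximate cost when input and output tokens are priced separately."""
    prompt_tokens = estimate_tokens(prompt_words)
    completion_tokens = estimate_tokens(completion_words)
    return (prompt_tokens / 1000 * price_in_per_1k
            + completion_tokens / 1000 * price_out_per_1k)

# Hypothetical prices per 1,000 tokens, for illustration only.
print(estimate_tokens(750))
print(estimate_cost(750, 375, price_in_per_1k=0.5, price_out_per_1k=1.5))
```

A real tokenizer gives exact counts, so this estimate is only useful for back-of-the-envelope planning.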

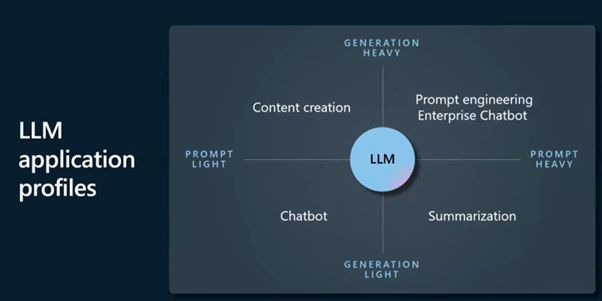

LLM application profiles

Currently, an interesting approach is the use of LLM application profiles. With LLM application profiles, users can be categorized according to the complexity of their queries. This enables matching the complexity of the query with the required or more specialized compute power. The categorization covers four different use cases, depending on whether the user query requires more prompt-oriented or more generation-oriented processing: Content Creation, Prompt Engineering, Summarization, and Chatbot.
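One way to picture the four profiles is as quadrants of prompt size versus generation size. The mapping below (for example, Summarization as a heavy prompt with a light generation) is my reading of the categorization, and the token thresholds are arbitrary assumptions, so treat this as a sketch rather than the definition used in the talk.

```python
def classify_profile(prompt_tokens: int, generated_tokens: int,
                     prompt_threshold: int = 1000,
                     generation_threshold: int = 300) -> str:
    """Place a request in one of four assumed application profiles.

    The thresholds are hypothetical and would need tuning on real traffic.
    """
    heavy_prompt = prompt_tokens >= prompt_threshold
    heavy_generation = generated_tokens >= generation_threshold

    if heavy_prompt and heavy_generation:
        return "Prompt Engineering"
    if heavy_prompt:
        return "Summarization"
    if heavy_generation:
        return "Content Creation"
    return "Chatbot"

print(classify_profile(prompt_tokens=4000, generated_tokens=150))
print(classify_profile(prompt_tokens=80, generated_tokens=60))
```

Once a request has a profile, a scheduler could send prompt-heavy work to hardware that is strong in compute and generation-heavy work to hardware that is strong in memory bandwidth.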

An optimization related to this is Splitwise. Splitwise introduces the concept of phase splitting, which separates the prompt-computation phase from the token-generation phase. This is intended to make optimal use of the available hardware and to allow the phases to run in parallel. See Splitwise improves GPU usage by splitting LLM inference phases - Microsoft Research

Project Forge

Recent work like Project Forge has shown that using specialized GPUs and accelerators can be both possible and optimal. Forge is a resource manager that can schedule across GPUs and accelerators. It has a global scheduler on one global hardware fleet of CPUs and GPUs. The GPUs are clustered with a high-bandwidth network (InfiniBand) to provide good connectivity. The hypervisors sit one layer above them. The networks are tuned and maintained for high utilization and efficiency.

Models will be available via AI frameworks like ONNX and DeepSpeed (for training) and via Azure OpenAI, on top of custom kernels and different kernel libraries. This gives users the opportunity to use different and specialized accelerators, such as NVIDIA GPUs, AMD GPUs, and Maia.

What runs ChatGPT? Inside Microsoft's AI supercomputer | Featuring Mark Russinovich

What to learn?

You don’t want your kids to see harmful content or to go to locations where they might witness non-exemplary behaviour. The same applies to AI models. When a model is exposed to harmful elements or biased content during its training, it might also reflect that in its learned behaviour. You should keep your model away from the toxic junk on the internet.

Even if you protect your kid or your model from harmful content, a bad actor or malicious user can still attempt to interact with it for their own purposes. A recent article on Crescendo describes an interesting case of a 'jailbreak', where a user tries to convince or fool the model into producing harmful information. This reported behaviour shows how complex it can be to draw the lines.

What to unlearn? (forget)

Above, we talked mostly about training and learning. But what about forgetting? We can refer to this as 'unlearning' by AI models. The idea is to apply a process after training that allows a model to unlearn information associated with content that needs to be removed from the training data. I think this is promising, as it can be used to unlearn harmful or biased content. Furthermore, it can be used to protect intellectual property (IP).

The question here is: while the model unlearns certain content, can it still perform as well as before on tasks that are unrelated to that content? The paper referenced below presents promising results, reporting that performance on benchmarks remains comparable.

Who's Harry Potter? Making LLMs forget - Microsoft Research

A second, related question arises: how big does the model need to be to continue performing the task effectively? For further insights, see TinyStories: How Small Can Language Models Be and Still Speak Coherent English? - Microsoft Research

Unlearning the information, characters, events, and items related to a person or event, while retaining the valuable and helpful parts of what the model has learned, allows the model to continue performing its tasks effectively.

Today, we often view models as black boxes that we cannot fully understand because of their complexity. Explaining the reasoning behind a model's output and making it interpretable is the focus of the research direction known as Explainable AI. I think that the research directions discussed above (new fine-tuning adapters, forgetting capabilities, multi-modality, and the ideal model size) will help us gain some insights and unlock some of the mysteries of how models learn efficiently to perform their tasks. In parallel, this also unlocks new possibilities in GPU usage.

All the images in this post are AI-generated by Copilot Designer.