This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

In the first part of this 2-part series, we simulated the Capital One breach using the CloudGoat scenario cloud_breach_s3. In this second part, we will analyze the logs generated by the simulation and see how to hunt for some of the attacker techniques in AWS data sources onboarded to Azure Sentinel. We will also walk through how to ingest the relevant data sources, develop detection or hunting queries using Kusto Query Language (KQL), and use the Azure Sentinel incident workflow and investigation features.

Identify Threat Hunting opportunities in AWS Logs

If you have not seen it already, read our blog post Identifying Threat Hunting opportunities in your data to understand potential threat hunting approaches across your data sources.

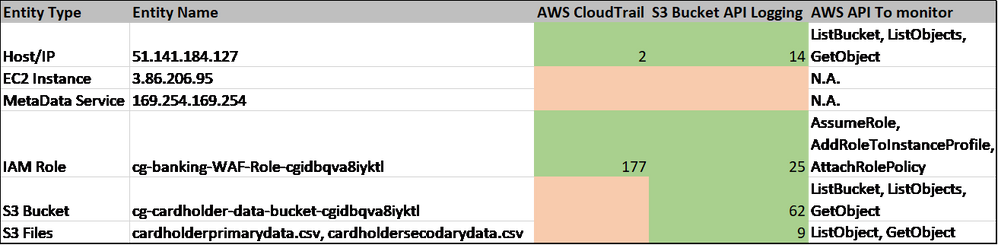

The goal of this blog is to walk through an attack simulation, identify which activities show up in logs, and convert them into detection or hunting queries that provide proactive protection in the future. In the first part of this blog we performed the attack simulation and therefore know the suspicious entities involved. We can start by mapping those entities to relevant data types and then investigate further. AWS has several data types that capture information related to API and network activity, but in this case we are limiting our investigation to AWS CloudTrail and S3 bucket API logging, which monitors file-level operations. These are the primary logging data sources and provide the best coverage of all activity. We also enabled GuardDuty during the simulation to see whether any of its built-in alerts would trigger.

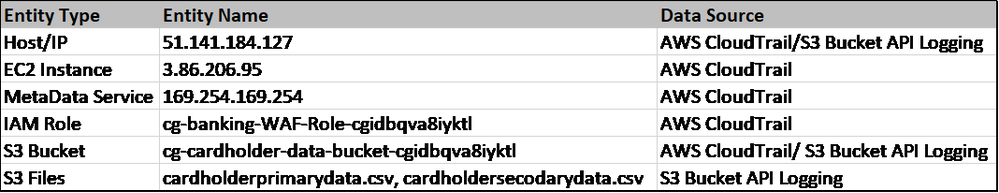

The table below maps the entities involved in the attack to potential data sources.

Server Access Logging vs Object Level Logging

To monitor file-level operations in S3 buckets, there are 2 types of logs available: server access logs and S3 object-level API logs. For incident response and detection purposes, S3 object-level logging is recommended because it includes more services, comes in a detailed and structured log format, and promises timely and reliable log delivery. The blog AWS S3 Logjam: Server Access Logging vs. Object-Level Logging is a recommended read; it goes deeper into the differences between the two log formats and the pros and cons of each.

Enable S3 Object Level Logging

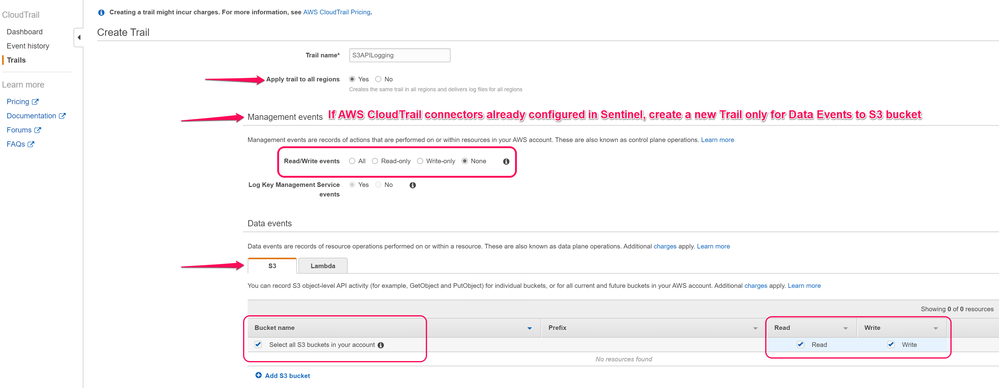

To enable S3 object-level logging, you need to create a new CloudTrail trail with an S3 bucket as its destination.

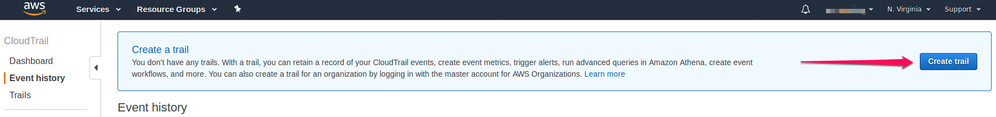

Navigate to the CloudTrail Console. Go to Event history and in Create a trail select Create trail.

In Trails, enter a trail name and select Apply trail to all regions to cover buckets from all regions. In the same window, navigate to the S3 tab and select all S3 buckets in your account with Read and Write selected, as shown in the screenshot below. This enables logging for all existing as well as newly created buckets. You also have the option to enable object-level logging selectively on specific existing buckets.

Refer to the AWS guidance: How Do I Enable Object-Level Logging for an S3 Bucket with AWS CloudTrail Data Events?
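The console steps above can also be scripted. The following is a minimal sketch, not part of the original walkthrough, that builds the data-event selector CloudTrail uses for S3 object-level logging on all buckets and optionally applies it with the boto3 `put_event_selectors` call. The trail name passed to `apply_selector` is a placeholder you would supply, and applying the selector assumes boto3 is installed and AWS credentials are configured.

```python
def build_s3_data_event_selector(read_write_type="All"):
    """Return a CloudTrail event selector that logs S3 object-level (data) events."""
    if read_write_type not in ("All", "ReadOnly", "WriteOnly"):
        raise ValueError("read_write_type must be All, ReadOnly or WriteOnly")
    return {
        "ReadWriteType": read_write_type,
        "IncludeManagementEvents": True,
        "DataResources": [
            # The "arn:aws:s3" prefix covers objects in all buckets of the account,
            # including buckets created later.
            {"Type": "AWS::S3::Object", "Values": ["arn:aws:s3"]}
        ],
    }


def apply_selector(trail_name, selector):
    """Apply the selector to an existing trail (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    client = boto3.client("cloudtrail")
    return client.put_event_selectors(
        TrailName=trail_name, EventSelectors=[selector]
    )


selector = build_s3_data_event_selector()
print(selector["ReadWriteType"], selector["DataResources"][0]["Type"])
```

Calling `apply_selector("<your-trail-name>", selector)` would then configure an existing trail; restricting `Values` to specific bucket ARNs corresponds to the selective per-bucket option mentioned above.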

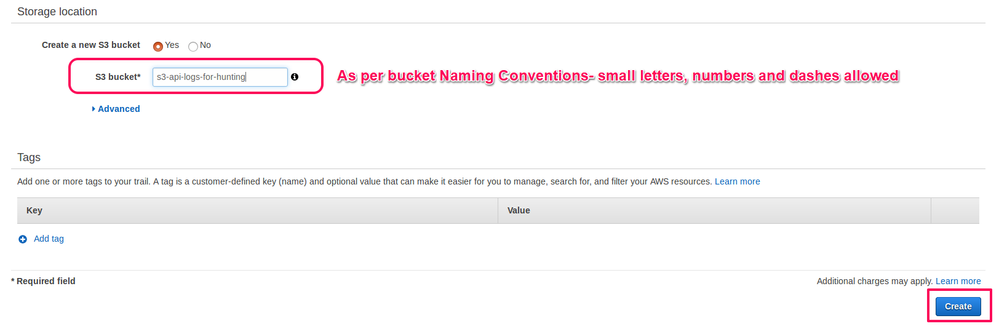

Follow the Amazon S3 Bucket Naming Requirements as specified in AWS documentation.

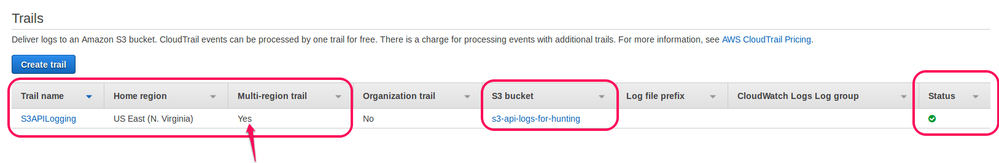

After creating the trail, make sure its status shows green and Multi-region trail shows Yes, as in the screenshot below.

Ingest S3 Logs to Azure Sentinel via Logstash

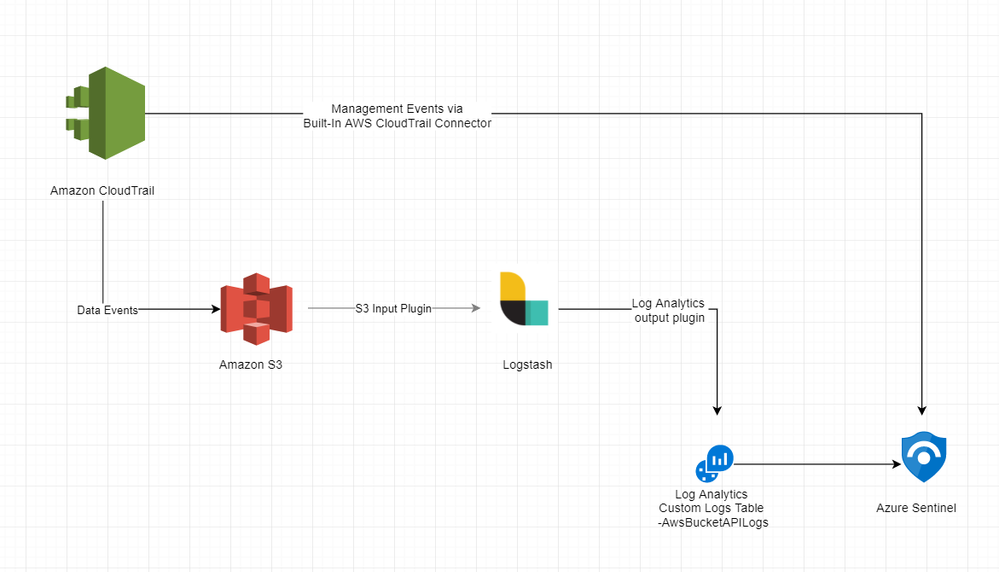

Currently, you can use the AWS connector to stream your AWS CloudTrail management events into Azure Sentinel by following the instructions at Connect Azure Sentinel to AWS CloudTrail. The connector ingests management events only and does not support data events logged to an S3 bucket. While our Product Engineering team works on adding support for other services and improving connector coverage, Azure Sentinel offers multiple options for ingesting custom logs from such unsupported sources. You can read about them in our Tech Community blog post, Azure Sentinel: Creating Custom Connectors.

For this article, we are going to use Logstash, which has an S3 input plugin and a Log Analytics output plugin available. Please check the Azure Sentinel GitHub wiki page Ingest Custom Logs using Logstash for detailed Logstash installation steps and a sample configuration file for sending logs to Azure Sentinel. To parse JSON logs from an S3 bucket, you can use the configuration file available in the Azure Sentinel GitHub repository under the Parsers section: input-aws_s3-output-loganalytics.conf
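To make the parsing step more concrete: CloudTrail delivers gzip-compressed JSON files to S3, each holding a top-level `Records` array, and the pipeline has to split that array into individual events before sending them on. The sketch below illustrates that idea in Python rather than Logstash configuration. It is not the published configuration file; the output column names and the sample event are illustrative assumptions.

```python
import gzip
import json


def iter_cloudtrail_records(gz_bytes):
    """Yield individual events from one gzip-compressed CloudTrail log file."""
    document = json.loads(gzip.decompress(gz_bytes))
    for record in document.get("Records", []):
        yield record


def flatten_s3_event(record):
    """Pick out the fields most useful for hunting (column names are illustrative)."""
    params = record.get("requestParameters") or {}
    return {
        "EventTime": record.get("eventTime"),
        "EventName": record.get("eventName"),
        "SourceIpAddress": record.get("sourceIPAddress"),
        "BucketName": params.get("bucketName"),
        "ObjectKey": params.get("key"),
    }


# Made-up sample event standing in for a file fetched from the trail's bucket.
sample = {
    "Records": [
        {
            "eventTime": "2019-11-19T10:00:00Z",
            "eventName": "GetObject",
            "sourceIPAddress": "203.0.113.10",
            "requestParameters": {"bucketName": "example-bucket", "key": "data.csv"},
        }
    ]
}
blob = gzip.compress(json.dumps(sample).encode("utf-8"))
rows = [flatten_s3_event(r) for r in iter_cloudtrail_records(blob)]
print(rows[0]["EventName"], rows[0]["BucketName"])
```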

The diagram below shows how data ingestion takes place.

Writing Detection/Hunting Queries for TTPs

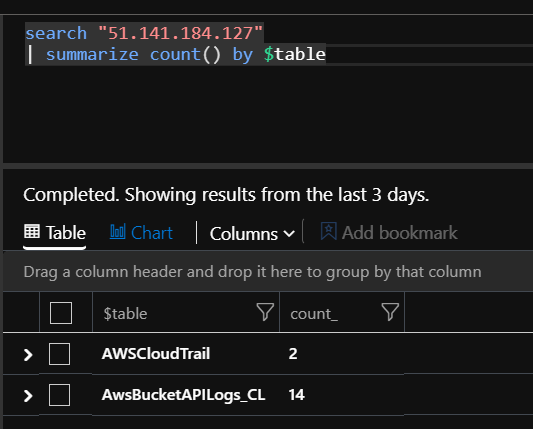

Now that we have identified the entities and ingested the relevant data sources, let’s look at what logs are available for investigation. You can start with free-text search queries for each entity using the KQL ‘search’ operator.

For example:

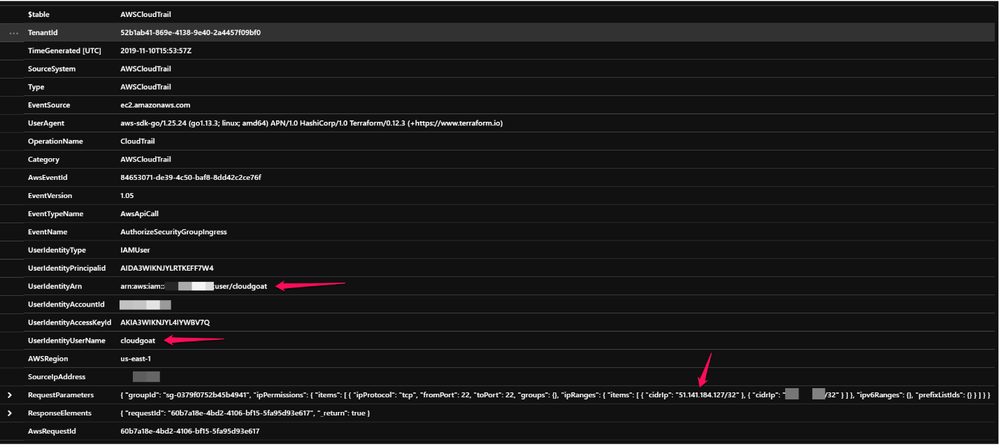

You can also look at sample events to identify key attributable columns such as UserIdentity, IP address, S3 bucket name and so on. The screenshot below shows a sample event from the AWSCloudTrail connector in which the UserIdentity Cloudgoat adds the IP addresses that were whitelisted at resource deployment.

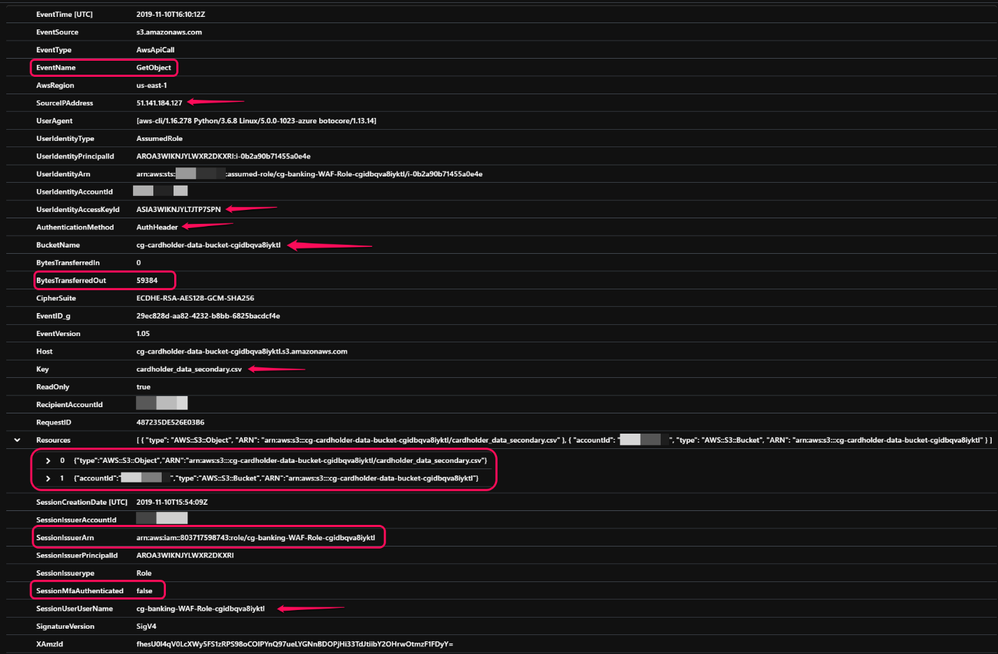

Another event shows a sample GetObject API call made to the S3 bucket to download resources from it. You can use the AWSS3BucketAPILogsParser we have published on GitHub, which normalizes column names to better align with the CloudTrail event schema. Follow the parser notes to create a function, then use the query below to retrieve a sample event.

After completing queries for each of the entities from the table, we can populate the entity-to-data-source mapping table below, where green indicates the entity is present in the data type and red indicates it is not. The number indicates the count of events. Also add any event types that are interesting from a monitoring or alerting perspective.

Detection and Hunting Queries

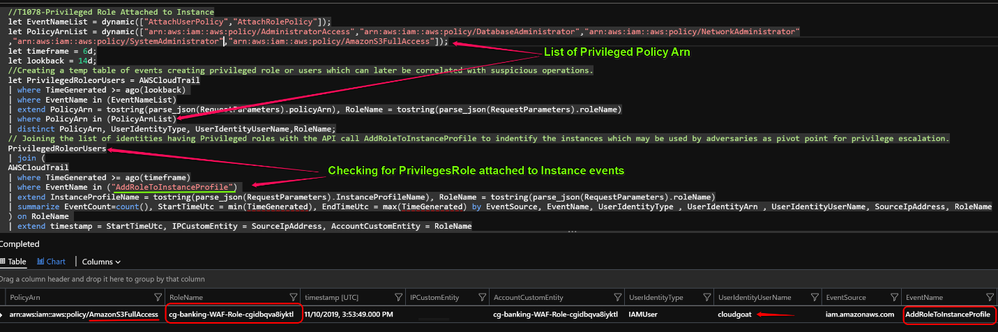

T1078: Privileged role attached to Instance

This technique maps back to the initial vulnerable resources associated with the scenario deployed by CloudGoat.

GitHub Query Link: This query looks for a privileged role being attached to an existing instance or to a new instance at deployment. An adversary may use such an instance to escalate from normal user privileges to administrative privileges. There may be legitimate use cases, such as cross-account access for administrative activity. In those cases, whitelisting combinations of the invoking UserIdentity and RoleName based on historical analysis will reduce false positives.

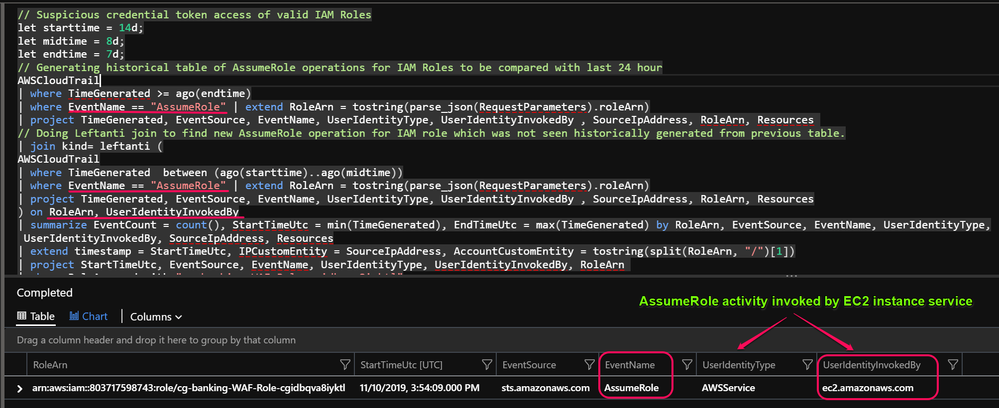

T1078: Suspicious credential token access of valid IAM Roles

This log is the result of the second command from the scenario cheat sheet, which requested temporary credentials via a curl request.

GitHub Query Link: This query looks for AWS STS AssumeRole API operations on a RoleArn (Role Amazon Resource Name) that the UserIdentity has not historically been seen invoking.

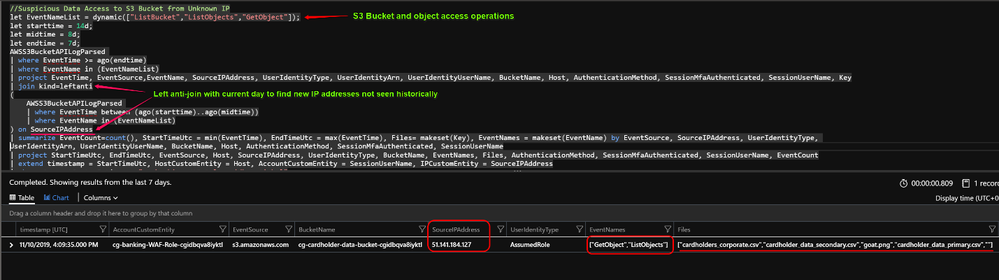

T1530: Suspicious Data Access to S3 Bucket from Unknown IP address

This technique is the result of the third and last command executed from the scenario cheat sheet, which uses the IAM role credentials retrieved in the previous steps to list buckets and retrieve object contents.

GitHub Query: This query looks for any access originating from a source IP address that has not historically been seen accessing the bucket or downloading files from it via the AWS S3 API.
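The "not seen historically" logic behind this hunt can be sketched outside KQL as a simple baseline comparison. The Python below is an illustration of the idea only, not the published query: it assumes events have already been reduced to dictionaries with hypothetical `BucketName` and `SourceIpAddress` keys, and the sample data is made up.

```python
def find_new_source_ips(baseline_events, recent_events):
    """Return recent events whose (bucket, source IP) pair is absent from the baseline."""
    known = {(e["BucketName"], e["SourceIpAddress"]) for e in baseline_events}
    return [
        e
        for e in recent_events
        if (e["BucketName"], e["SourceIpAddress"]) not in known
    ]


# Made-up sample data: one address with history, one first-time address.
baseline = [
    {"BucketName": "example-bucket", "SourceIpAddress": "198.51.100.7"},
]
recent = [
    {"BucketName": "example-bucket", "SourceIpAddress": "198.51.100.7"},
    {"BucketName": "example-bucket", "SourceIpAddress": "203.0.113.50"},
]

suspicious = find_new_source_ips(baseline, recent)
print([e["SourceIpAddress"] for e in suspicious])
```

The same comparison applies to the AssumeRole hunt by keying on the UserIdentity and RoleArn pair instead. The length of the baseline window is a tuning choice: a longer window reduces false positives from infrequent but legitimate clients.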

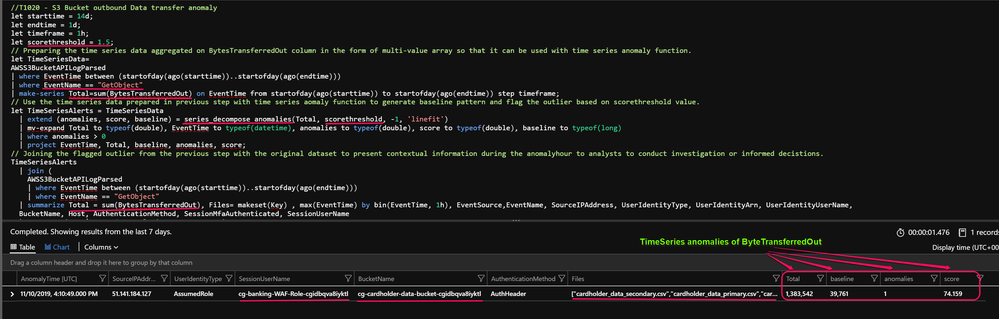

T1020: S3 Bucket outbound Data transfer anomaly

This technique is the result of the s3 sync command executed from the scenario cheat sheet to retrieve object contents. The detection identifies an anomalous spike in data transfer from an S3 bucket based on GetObject API calls and the BytesTransferredOut field.

GitHub Query: The query leverages KQL's built-in anomaly detection algorithms to find large deviations from baseline patterns. A sudden increase in the amount of data transferred from S3 buckets can be an indication of a data exfiltration attempt.
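To illustrate what "large deviation from baseline" means here, the sketch below flags spikes in a series of per-hour outbound byte totals. It deliberately swaps in a much simpler technique, a modified z-score based on the median absolute deviation, in place of KQL's built-in time series functions, so it should be read as an approximation of the idea rather than the published query. The threshold of 3.5 and the sample numbers are assumptions.

```python
from statistics import median


def find_transfer_spikes(hourly_bytes, threshold=3.5):
    """Return indexes of hours whose outbound bytes spike far above the median.

    Uses the modified z-score 0.6745 * (x - median) / MAD and flags only
    upward deviations, since exfiltration shows up as an increase.
    """
    center = median(hourly_bytes)
    mad = median([abs(value - center) for value in hourly_bytes])
    if mad == 0:
        # No spread in the baseline: treat anything above the median as a spike.
        return [i for i, value in enumerate(hourly_bytes) if value > center]
    return [
        i
        for i, value in enumerate(hourly_bytes)
        if 0.6745 * (value - center) / mad > threshold
    ]


# Made-up hourly totals of bytes transferred out by GetObject calls.
hourly = [120, 130, 110, 125, 118, 9000, 122]
spikes = find_transfer_spikes(hourly)
print(spikes)
```

A median-based score is used because a single large transfer would inflate a mean and standard deviation computed over the same window and could mask itself.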

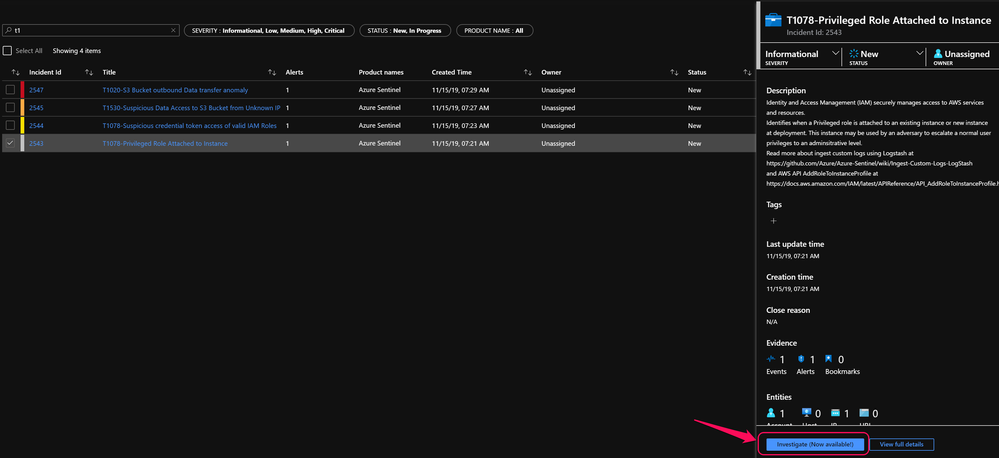

Azure Sentinel Incident Workflow

You can follow the tutorial Create custom analytic rules to build rules based on the queries from the steps above. When scheduling the alerts, we run the analytics on historical data already ingested in Sentinel so that incidents are triggered.

From the incidents page, you will see related incidents.

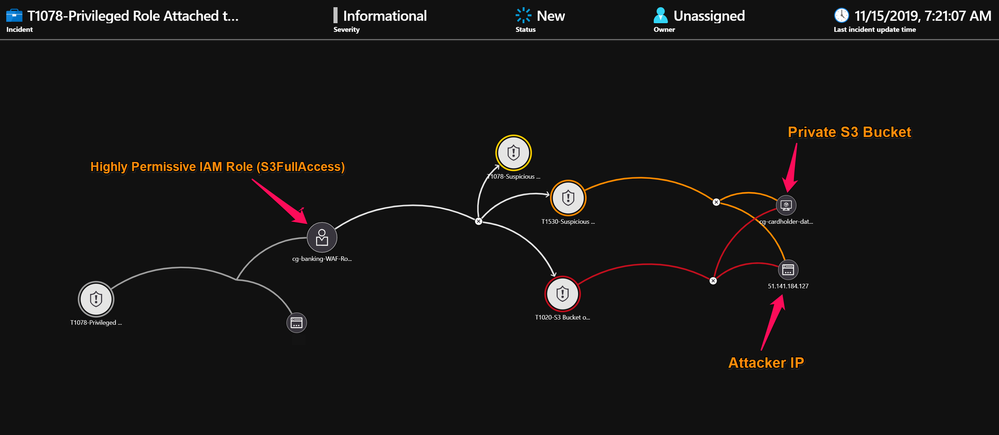

Clicking Investigate opens the graph view for the incident. You can then right-click on entities to show related alerts, and repeat the same for alerts to show additional related entities.

You will then see an entity relationship graph similar to the one below.

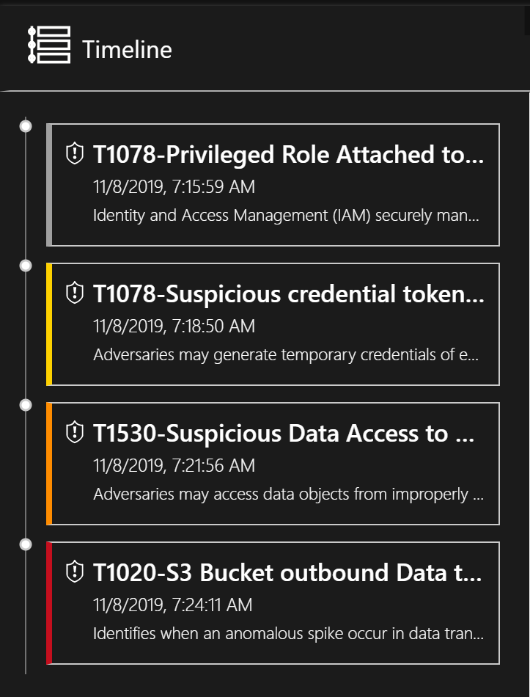

You can also see the Timeline view of the triggered alerts.

You can read more about investigating incidents in Tutorial: Investigate incidents with Azure Sentinel.

Conclusion

Adversary simulation and vulnerable-by-design labs are great sources of interesting data against which a defender can write new detections or validate existing ones. In this 2-part series, we demonstrated how CloudGoat can simulate an attack scenario by deploying vulnerable resources and conducting the attacks. While conducting the simulation, we enabled the relevant log data types, ingested them into Azure Sentinel and analyzed the events to correlate the attack activity. Although there is currently no built-in connector support for some data types, such as AWS S3 bucket API logs, we can ingest these as custom log sources via other methods such as the Logstash method described in this article.

Once we have the relevant data sources onboarded to Azure Sentinel, we can start mapping published resources to MITRE ATT&CK Techniques and turn them into detection or hunting queries. In the real world, separating interesting events from normal behavior can be challenging for analysts. Developing and maintaining detections or hunting queries mapped to MITRE ATT&CK Techniques can therefore help you detect attacks at earlier stages.

If you are looking for a similar list of security alerts on Azure storage accounts, be sure to check Advanced Threat Protection for Storage. Advanced Threat Protection for Storage provides an additional layer of security intelligence that detects unusual and potentially harmful attempts to access or exploit storage accounts.

Feel free to submit your own queries to the public Azure Sentinel GitHub for review and eventual inclusion in the Azure Sentinel user interface.

If you have additional feedback, you can reach out to me via Twitter. Happy Hunting.

Note: Also read more about the EC2 Instance Metadata Service v2 (IMDSv2), published yesterday, on the 19th, on the AWS Security Blog. IMDSv2 adds new “belt and suspenders” protections such as restricting IAM roles and using local firewall rules to restrict access to the IMDS.

References

- Hunting for Capital One Breach TTPs in AWS logs using Azure Sentinel - Part I

- Logging Data Events with the AWS Management Console

- Azure Log Analytics output plugin for Logstash

https://github.com/yokawasa/logstash-output-azure_loganalytics

- AWS CLI – S3 API Command References

https://docs.aws.amazon.com/cli/latest/reference/s3api/index.html#cli-aws-s3api

- Logstash S3 input plugin

https://www.elastic.co/guide/en/logstash/current/plugins-inputs-s3.html

- CloudTrail Log Event Reference

https://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-event-reference.html

- AWS S3 Logjam: Server Access Logging vs. Object-Level Logging

https://www.netskope.com/blog/aws-s3-logjam-server-access-logging-vs-object-level-logging

- AWS S3 Operations on Objects – Get Object

https://docs.aws.amazon.com/AmazonS3/latest/API/RESTObjectGET.html

- List of Security alerts for Advanced Threat Protection for Storage

- Azure Sentinel Pull Request : Logstash Parser, Sentinel hunting queries for AWS Cloudtrail and S3BucketAPILogs

https://github.com/Azure/Azure-Sentinel/commit/083781f95c18e56a990df6751b04e6746e5bbba5

- Add defense in depth against open firewalls, reverse proxies, and SSRF vulnerabilities with enhancements to the EC2 Instance Metadata Service