This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

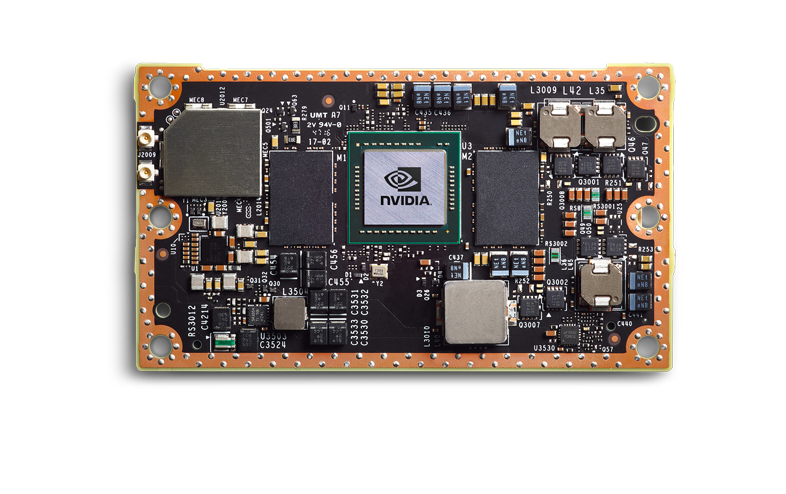

Jetson TX2

NVIDIA describes the Jetson TX2 as its fastest, most power-efficient embedded AI computing device. This 7.5-watt supercomputer on a module brings true AI computing to the edge. It's built around an NVIDIA Pascal™-family GPU and comes with 8GB of memory and 59.7GB/s of memory bandwidth. It features a variety of standard hardware interfaces that make it easy to integrate into a wide range of products and form factors.

Jetson Developer Kit Academic Discount

Are you affiliated with an educational institution? If so, you're eligible for a significant discount on the Jetson TX2 Developer Kit. As long as you have a valid email address from an accredited university, you will be sent a one-time-use code to order the Jetson TX2 Developer Kit.

Jetson TX2 Developer Kit

The Jetson TX2 Developer Kit gives you a fast, easy way to develop hardware and software for the Jetson TX2 AI supercomputer on a module. It exposes the hardware capabilities and interfaces of the module and is supported by NVIDIA JetPack.

To apply for the education discount, select your location at https://developer.nvidia.com/embedded/buy/jetson-edu

Getting started with your Jetson board

The following is a guest post by Spyros Garyfallos, a Senior Software Engineer at Microsoft.

We will get started by exploring a simple program that detects human faces in the camera input and renders the camera feed with bounding boxes around them. This post was originally published at https://havedatawilltrain.com/

The example I will use is based on Haar cascades and is one of the simplest ways to get started with object detection on the edge.

Azure IoT Edge is a fully managed service built on Azure IoT Hub. It lets you deploy your cloud workloads (artificial intelligence, Azure and third-party services, or your own business logic) to run on Internet of Things (IoT) edge devices via standard containers. By moving certain workloads to the edge of the network, your devices spend less time communicating with the cloud, react more quickly to local changes, and operate reliably even during extended offline periods.

This code has no dependency on the Jetson platform; it depends only on OpenCV.

I do all of my coding in a remote development setup that uses containers. This way you can experiment with all the code samples yourself without having to set up any runtime dependencies on your device.

Here is how I set up my device and my remote development environment with VSCode.

Start by cloning the example code. After cloning, you need to build and run the container that we'll be using to run our code.

Clone the example repo

git clone https://github.com/paloukari/jetson-detectors

cd jetson-detectors

To build and run the development container

sudo docker build . -f ./docker/Dockerfile.cpu -t object-detection-cpu

sudo docker run --rm --runtime nvidia --privileged -ti -e DISPLAY=$DISPLAY -v "$PWD":/src -p 32001:22 object-detection-cpu

The --privileged flag gives the container access to all host devices. Alternatively, you can expose only the camera with --device /dev/video0.

Here's the code we'll be running. Simply open the cpudetector.py file in VSCode and hit F5, or just run: python3 src/cpudetector.py. In both cases you'll need to set up X forwarding. See Step 2: X forwarding for how to do this.

~23 FPS with OpenCV

We get about 23 FPS. Use tegrastats to see what's happening on the GPU:

We're interested in the GR3D_FREQ values. They show that this code runs only on the device's CPU cores, at more than 75% utilization per core, while GPU utilization stays at 0%.
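Reading these numbers by eye works, but a small parser makes it easy to log CPU and GPU load alongside the FPS. The following is a minimal Python sketch, assuming the common tegrastats line layout with a `CPU [...]` block of `load%@MHz` entries and a `GR3D_FREQ load%@MHz` field. The sample line is illustrative rather than captured from this run, and the exact layout can differ between JetPack releases.

```python
import re

# Illustrative tegrastats line (hypothetical values; the layout varies by JetPack release).
SAMPLE = (
    "RAM 2203/7852MB (lfb 1x4MB) SWAP 0/3926MB (cached 0MB) "
    "CPU [78%@2035,off,off,76%@2035,80%@2035,77%@2035] "
    "EMC_FREQ 4%@1866 GR3D_FREQ 0%@114 APE 150"
)


def parse_tegrastats(line):
    """Return (per-core CPU loads in %, GPU load in %) from one tegrastats line.

    Cores reported as 'off' are skipped; the GPU load is None if GR3D_FREQ is missing.
    """
    cpu_loads = []
    cpu_match = re.search(r"CPU \[([^\]]*)\]", line)
    if cpu_match:
        for core in cpu_match.group(1).split(","):
            # An active core looks like '78%@2035' (load % @ frequency in MHz).
            core_match = re.match(r"(\d+)%@", core)
            if core_match:
                cpu_loads.append(int(core_match.group(1)))
    gpu_match = re.search(r"GR3D_FREQ (\d+)%", line)
    gpu_load = int(gpu_match.group(1)) if gpu_match else None
    return cpu_loads, gpu_load


cpu, gpu = parse_tegrastats(SAMPLE)
print("CPU per core:", cpu, "GPU:", gpu)
```

One way to use it is to pipe the output of tegrastats into a script that calls parse_tegrastats on each line.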

Next up: going deep

Haar cascades work well, but what about detecting more kinds of objects at once? For this, we need Deep Neural Networks. From now on, we'll use a different container to run the code.

To build and run the GPU accelerated container

sudo docker build . -f ./docker/Dockerfile.gpu -t object-detection-gpu

sudo docker run --rm --runtime nvidia --privileged -ti -e DISPLAY=$DISPLAY -v "$PWD":/src -p 32001:22 object-detection-gpu

WARNING: This build takes a few hours to complete on a TX2, mainly because we build the Protobuf library to improve model-loading performance. To reduce the build time, you can build the same container on an x64 workstation.

In the first attempt, we'll be using the official TensorFlow pre-trained networks. The code we'll be running is here.

When you run python3 src/gpudetector.py --model-name ssd_inception_v2_coco, the code will try to download the specified model into the /models folder and start the object detection much as we did before. The default value of --model-name is ssd_inception_v2_coco, so you can omit it.
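The command-line behaviour described above, a --model-name option that defaults to ssd_inception_v2_coco plus a --trt-optimize switch, can be sketched with argparse. This is not the repository's actual code: the MODEL_ARCHIVES mapping and the model_url helper are hypothetical, and the only archive name in the mapping is the one this post downloads later for DeepStream.

```python
import argparse

# Hypothetical name-to-archive mapping; only this archive appears in the post.
MODEL_ARCHIVES = {
    "ssd_inception_v2_coco": "ssd_inception_v2_coco_2017_11_17",
}
BASE_URL = "http://download.tensorflow.org/models/object_detection/"


def build_parser():
    """Command-line options mirroring the ones described in the post."""
    parser = argparse.ArgumentParser(description="Object detection demo (sketch)")
    parser.add_argument("--model-name", default="ssd_inception_v2_coco",
                        choices=sorted(MODEL_ARCHIVES))
    parser.add_argument("--trt-optimize", action="store_true",
                        help="convert the model with TensorRT and cache the result")
    return parser


def model_url(model_name):
    """Return the download URL of the .tar.gz archive for a known model name."""
    return BASE_URL + MODEL_ARCHIVES[model_name] + ".tar.gz"


# Parse an explicit argument list so the example runs without a real command line.
args = build_parser().parse_args(["--trt-optimize"])
print(args.model_name, args.trt_optimize, model_url(args.model_name))
```

In a real script you would call parse_args() with no arguments so it reads sys.argv.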

This model has been trained to detect 90 classes (you can see the details in the downloaded pipeline.config file). Our performance plummeted to ~8 FPS.
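If you want to check the class count without opening the file, a regular expression over the protobuf text format is enough for a quick look. This is a shortcut sketch, assuming the file contains a single `num_classes: N` entry as SSD configs typically do; a robust tool would parse the file with the TensorFlow Object Detection API's protobuf definitions instead. The EXCERPT string is a hypothetical, heavily trimmed example.

```python
import re


def read_num_classes(config_text):
    """Return the first num_classes value in a pipeline.config text, or None."""
    match = re.search(r"\bnum_classes:\s*(\d+)", config_text)
    return int(match.group(1)) if match else None


# Hypothetical, trimmed excerpt in the protobuf text format used by pipeline.config.
EXCERPT = """
model {
  ssd {
    num_classes: 90
    image_resizer {
      fixed_shape_resizer { height: 300 width: 300 }
    }
  }
}
"""

print(read_num_classes(EXCERPT))
```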

Run python3 src/gpudetector.py

~8 FPS with the TensorFlow SSD Inception V2 COCO model

What happened? We're now running inference on the GPU, and a single inference round trip takes more time. We're also moving a lot of data from the camera to RAM and from there to the GPU, which carries an obvious performance penalty.
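Converting the measured frame rates into a per-frame time budget makes the slowdown concrete: at ~8 FPS each frame takes nearly three times as long as it did with Haar cascades at ~23 FPS.

```python
def frame_time_ms(fps):
    """Milliseconds available to process one frame at the given frame rate."""
    return 1000.0 / fps


# Approximate frame rates measured earlier in this post.
MEASURED_FPS = {"Haar cascades (CPU)": 23, "SSD Inception V2 (TensorFlow)": 8}

for label, fps in MEASURED_FPS.items():
    print(f"{label}: {fps} FPS -> {frame_time_ms(fps):.1f} ms per frame")
```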

What we can do is start by optimizing the inference. We'll use TensorRT to speed up inference. Run the same file as before, but now with the --trt-optimize flag. This flag converts the specified TensorFlow model to a TensorRT-optimized model and saves it to a local file for the next run.
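The "convert once, reuse the saved file" behaviour of --trt-optimize follows a common caching pattern. Here is a minimal sketch of that pattern, assuming a hypothetical convert callable that stands in for the actual TensorFlow-to-TensorRT conversion (which this sketch does not perform) and returns the optimized model as bytes.

```python
import os
import tempfile


def load_or_convert(cache_path, convert):
    """Return serialized model bytes, running the expensive conversion only once.

    `convert` is a hypothetical callable standing in for the TensorRT conversion.
    """
    if os.path.exists(cache_path):
        with open(cache_path, "rb") as f:
            return f.read()
    data = convert()
    partial_path = cache_path + ".partial"
    with open(partial_path, "wb") as f:
        f.write(data)
    os.replace(partial_path, cache_path)  # publish the cache only after a complete write
    return data


calls = []


def fake_convert():
    """Placeholder conversion that records how often it runs."""
    calls.append(1)
    return b"optimized-graph"


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "model_trt.pb")
    first = load_or_convert(path, fake_convert)   # converts and saves
    second = load_or_convert(path, fake_convert)  # loads the saved file
    print(first == second, len(calls))
```

Writing to a temporary file and renaming it afterwards means an interrupted conversion does not leave a truncated file that a later run would mistake for a valid cache.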

Run python3 src/gpudetector.py --trt-optimize:

~15 FPS with TensorRT optimization

Better, but still far from perfect. We can tell by watching the GPU utilization in the background: it periodically drops to 0%. This happens because the code executes sequentially. In other words, for each frame we have to wait to get the bits from the camera, create an in-memory copy, push it to the GPU, perform the inference, and render the original frame with the scoring results.
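A back-of-the-envelope model explains the idle gaps. In a sequential loop every frame pays for all stages in turn, so throughput is roughly 1000 / (sum of stage times in ms). If each stage runs on its own thread, the stages overlap and throughput approaches 1000 / (slowest stage time), while each frame still passes through every stage. The stage durations below are hypothetical, chosen only to illustrate the formulas, and the model ignores contention between threads for CPU cores and memory bandwidth.

```python
# Hypothetical per-stage durations in milliseconds (illustrative, not measured).
STAGES_MS = {"capture": 10.0, "copy_to_gpu": 5.0, "inference": 30.0, "render": 10.0}


def sequential_fps(stages_ms):
    """Each frame runs all stages back to back."""
    return 1000.0 / sum(stages_ms.values())


def pipelined_fps(stages_ms):
    """One thread per stage: the slowest stage bounds the steady-state rate."""
    return 1000.0 / max(stages_ms.values())


def gpu_busy_fraction(stages_ms):
    """Share of each sequential iteration during which the GPU is running inference."""
    return stages_ms["inference"] / sum(stages_ms.values())


print(f"sequential: {sequential_fps(STAGES_MS):.1f} FPS")
print(f"pipelined:  {pipelined_fps(STAGES_MS):.1f} FPS")
print(f"GPU busy in the sequential loop: {gpu_busy_fraction(STAGES_MS):.0%}")
```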

We can turn this sequential execution into an asynchronous, parallel version: one dedicated thread reads the data from the camera, one runs inference on the GPU, and one renders the results.
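Here is a minimal sketch of that three-thread structure using Python's threading and queue modules. It is not the code in gpudetectorasync.py: the camera, inference, and rendering stages are simulated with time.sleep, and the frame ids and detection strings are placeholders.

```python
import queue
import threading
import time


def camera(out_q, n_frames):
    """Stands in for the camera reader thread."""
    for frame_id in range(n_frames):
        time.sleep(0.001)              # simulated capture time
        out_q.put(frame_id)
    out_q.put(None)                    # sentinel: no more frames


def inference(in_q, out_q):
    """Stands in for the GPU inference thread."""
    while (frame := in_q.get()) is not None:
        time.sleep(0.002)              # simulated inference time
        out_q.put((frame, f"detections for frame {frame}"))
    out_q.put(None)                    # pass the sentinel downstream


def render(in_q, rendered):
    """Stands in for the rendering thread."""
    while (item := in_q.get()) is not None:
        rendered.append(item)


def run_pipeline(n_frames=20, maxsize=4):
    """Run the three stages concurrently and return what was rendered."""
    frames_q = queue.Queue(maxsize=maxsize)   # bounded: a slow stage applies back-pressure
    results_q = queue.Queue(maxsize=maxsize)
    rendered = []
    threads = [
        threading.Thread(target=camera, args=(frames_q, n_frames)),
        threading.Thread(target=inference, args=(frames_q, results_q)),
        threading.Thread(target=render, args=(results_q, rendered)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return rendered


print(len(run_pipeline()))
```

The bounded queues make a slow inference stage eventually block the camera thread. A live camera application would more likely drop stale frames instead of blocking, trading completeness for lower latency.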

To test this version, run python3 src/gpudetectorasync.py --trt-optimize

~40 FPS with async TensorRT

By parallelizing the expensive stages, we've achieved better performance than in the first OpenCV example. The trade-off is that we've introduced a slight delay between the current frame and its corresponding inference result. More precisely, because inference now runs on an independent thread, the observed FPS no longer matches the number of inferences per second.
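One common way to decouple rendering from inference is for the render loop to overlay whatever result is most recent instead of waiting for the result of the current frame. We assume this approach for illustration; it is not necessarily what gpudetectorasync.py does. The sketch shows a thread-safe holder for the latest result and how to measure how many frames old the overlaid detections are.

```python
import threading


class LatestResult:
    """Thread-safe holder for the most recent inference result."""

    def __init__(self):
        self._lock = threading.Lock()
        self._frame_id = None
        self._detections = None

    def publish(self, frame_id, detections):
        """Called by the inference thread whenever a result is ready."""
        with self._lock:
            self._frame_id = frame_id
            self._detections = detections

    def read(self):
        """Called by the render thread; returns immediately, never waits for inference."""
        with self._lock:
            return self._frame_id, self._detections


def lag_in_frames(current_frame_id, result_frame_id):
    """How many frames old the overlaid detections are (None if no result yet)."""
    if result_frame_id is None:
        return None
    return current_frame_id - result_frame_id


slot = LatestResult()
slot.publish(frame_id=7, detections=["person"])
result_id, detections = slot.read()
print(lag_in_frames(10, result_id), detections)
```

Because the renderer draws every camera frame while inference publishes less often, the displayed FPS and the inferences per second diverge, and the lag value makes the resulting delay visible.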

What we have achieved: We've explored different ways of improving the performance of the typical pedagogic object detection while-loop.

Previously, in the process of increasing the observed detector frames per second (FPS), we saw how to optimize the model with TensorRT and, at the same time, replace the simple synchronous while-loop implementation with an asynchronous multi-threaded one.

We noticed the increased FPS and the introduced trade-offs: the increased inference latency and the increased CPU and GPU utilization. Reading the web camera input frame-by-frame and pushing the data to the GPU for inference can be very challenging.

Now let's try a DeepStream object detector on the Jetson TX2

The data generated by a web camera is streaming data. By definition, data streams are continuous sources of data; in other words, sources that you cannot pause in any way. One strategy for processing data streams is to record the data and run an offline processing pipeline; this is called batch processing.

In our case we are interested in minimizing the latency of inference. A more appropriate strategy then would be a real time stream processing pipeline. The DeepStream SDK offers different hardware accelerated plugins to compose different real time stream processing pipelines.

NVIDIA also offers pre-built containers for running a containerized DeepStream application. Unfortunately, at the time of this blog post, the containers had some missing dependencies. For this reason, this application is run directly on the TX2 device.

DeepStream Object Detector application setup

To run a custom DeepStream object detector pipeline with the SSD Inception V2 COCO model on a TX2, run the following commands.

I'm using JetPack 4.2.1

Step 1: Get the model.

This command downloads the same model we used in the Python implementation and extracts it into /tmp.

wget -qO- http://download.tensorflow.org/models/object_detection/ssd_inception_v2_coco_2017_11_17.tar.gz | tar xvz -C /tmp
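The same download can also be done from Python, for example inside a setup script. This is a minimal sketch of an equivalent to the wget | tar pipeline above using only the standard library; fetch_and_extract is a hypothetical helper name. It streams the archive instead of saving it to disk first, and it applies the standard library's "data" extraction filter when the running Python version provides it.

```python
import tarfile
import urllib.request

MODEL_URL = ("http://download.tensorflow.org/models/object_detection/"
             "ssd_inception_v2_coco_2017_11_17.tar.gz")


def fetch_and_extract(url, dest_dir):
    """Stream a .tar.gz from `url` and extract it into `dest_dir`."""
    with urllib.request.urlopen(url) as response:
        # Mode "r|gz" reads the archive as a forward-only stream, like tar xz on a pipe.
        with tarfile.open(fileobj=response, mode="r|gz") as archive:
            if hasattr(tarfile, "data_filter"):
                # Rejects absolute paths, '..' entries and other unsafe members.
                archive.extractall(dest_dir, filter="data")
            else:
                archive.extractall(dest_dir)
```

For example, fetch_and_extract(MODEL_URL, "/tmp") should produce the same /tmp/ssd_inception_v2_coco_2017_11_17 folder as the command above.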

Step 2: Optimize the model with TensorRT

This command will convert this downloaded frozen graph to a UFF MetaGraph model.

python3 /usr/lib/python3.6/dist-packages/uff/bin/convert_to_uff.py \
    /tmp/ssd_inception_v2_coco_2017_11_17/frozen_inference_graph.pb -O NMS \
    -p /usr/src/tensorrt/samples/sampleUffSSD/config.py \
    -o /tmp/sample_ssd_relu6.uff

The generated UFF file is here: /tmp/sample_ssd_relu6.uff.

Step 3: Compile the custom object detector application

This will build the nvdsinfer_custom_impl sample that comes with the SDK.

cd /opt/nvidia/deepstream/deepstream-4.0/sources/objectDetector_SSD

CUDA_VER=10.0 make -C nvdsinfer_custom_impl

We need to copy the UFF MetaGraph along with the label names file into this folder.

cp /usr/src/tensorrt/data/ssd/ssd_coco_labels.txt .

cp /tmp/sample_ssd_relu6.uff .

Step 4: Edit the application configuration file to use your camera

Located in the same directory, the file deepstream_app_config_ssd.txt contains information about the DeepStream pipeline components, including the input source. The original example has been configured to use a static file as source.

[source0]
enable=1
#Type - 1=CameraV4L2 2=URI 3=MultiURI
type=3
num-sources=1
uri=file://../../samples/streams/sample_1080p_h264.mp4
gpu-id=0
cudadec-memtype=0

If you want to use a USB camera, make a copy of this file and change the above section to:

[source0]
enable=1
#Type - 1=CameraV4L2 2=URI 3=MultiURI
type=1
camera-width=1280
camera-height=720
camera-fps-n=30
camera-fps-d=1
camera-v4l2-dev-node=1

Save this as deepstream_app_config_ssd_USB.txt.

The TX2 developer kit comes with an onboard CSI camera. If you want to use the embedded CSI camera, change the source to:

[source0]
enable=1
#Type - 1=CameraV4L2 2=URI 3=MultiURI 4=RTSP 5=CSI
type=5
camera-width=1280
camera-height=720
camera-fps-n=30
camera-fps-d=1

Save this file as deepstream_app_config_ssd_CSI.txt.

Both cameras are configured to run at 30 FPS with 1280x720 resolution.
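If you switch between sources often, the copies can also be generated from the original file. This is a sketch, assuming the DeepStream config parses as an INI file (sections, key=value pairs, full-line # comments) with no duplicate keys; with_camera_source and write_variant are hypothetical helper names, and Python's configparser discards comment lines such as the #Type legend when it writes the file back.

```python
import configparser
import io

# [source0] settings from the USB and CSI variants above.
CAMERA_SOURCES = {
    "usb": {"enable": "1", "type": "1", "camera-width": "1280", "camera-height": "720",
            "camera-fps-n": "30", "camera-fps-d": "1", "camera-v4l2-dev-node": "1"},
    "csi": {"enable": "1", "type": "5", "camera-width": "1280", "camera-height": "720",
            "camera-fps-n": "30", "camera-fps-d": "1"},
}


def with_camera_source(config_text, camera):
    """Return config_text with its [source0] section replaced by the camera settings."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key names exactly as written
    parser.read_string(config_text)
    if not parser.has_section("source0"):
        parser.add_section("source0")
    # Clear the section in place so it keeps its position in the file.
    for key in list(parser["source0"]):
        parser.remove_option("source0", key)
    for key, value in CAMERA_SOURCES[camera].items():
        parser.set("source0", key, value)
    out = io.StringIO()
    parser.write(out, space_around_delimiters=False)  # keep the key=value style
    return out.getvalue()


def write_variant(src_path, dst_path, camera):
    """Write a camera-specific copy of a DeepStream app config file."""
    with open(src_path) as f:
        text = f.read()
    with open(dst_path, "w") as f:
        f.write(with_camera_source(text, camera))
```

For example, write_variant("deepstream_app_config_ssd.txt", "deepstream_app_config_ssd_CSI.txt", "csi") writes the CSI variant next to the original.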

Execute the application

To execute the Object Detection application using the USB camera, run:

deepstream-app -c deepstream_app_config_ssd_USB.txt

DeepStream detector @16 FPS with the USB Camera

The application prints the FPS in the terminal: ~16 FPS. This result is very similar to the TensorRT Python implementation, where we achieved 15 FPS in a simple Python while-loop.

So what's the fuss about DeepStream?

On closer inspection, there is a substantial difference. The tegrastats output shows a semi-utilized GPU (~50%) and an underutilized CPU (~25%).

The USB camera throughput is the obvious bottleneck in this pipeline. The Jetson TX2 development kit comes with an onboard 5 MP fixed-focus MIPI CSI camera out of the box.

The Camera Serial Interface (CSI) is a specification of the Mobile Industry Processor Interface (MIPI) Alliance. It defines an interface between a camera and a host processor. This means the CSI camera can move frame data to the GPU faster than a camera attached to the USB port.
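A quick calculation suggests why a USB camera can become the bottleneck at this resolution. Assuming a typical USB 2.0 webcam sending uncompressed YUYV frames at 2 bytes per pixel (both assumptions, since the post does not name the camera or its pixel format), 1280x720 at 30 FPS needs roughly 442 Mbit/s. That is close to USB 2.0's 480 Mbit/s nominal signaling rate, and the throughput actually usable for video is well below that figure, so such a camera typically has to compress frames or lower its frame rate.

```python
# Assumptions: uncompressed YUYV (YUV 4:2:2) at 2 bytes per pixel over USB 2.0.
WIDTH, HEIGHT, FPS, BYTES_PER_PIXEL = 1280, 720, 30, 2
USB2_NOMINAL_MBIT_S = 480  # High-Speed signaling rate; usable throughput is lower


def raw_stream_mbit_s(width, height, fps, bytes_per_pixel):
    """Megabits per second needed to move uncompressed frames."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


rate = raw_stream_mbit_s(WIDTH, HEIGHT, FPS, BYTES_PER_PIXEL)
print(f"{rate:.1f} Mbit/s needed vs {USB2_NOMINAL_MBIT_S} Mbit/s nominal USB 2.0")
```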

Let's try the CSI camera.

deepstream-app -c deepstream_app_config_ssd_CSI.txt

DeepStream detector @24 FPS with the CSI Camera

The average performance now is ~24 FPS. Note that the theoretical maximum we can get is 30 FPS, since this is the camera frame rate.

We can see an obvious increase in system utilization:

Let's try running both applications side by side:

The GPU is now operating at full capacity.

The observed frame rates are ~12 FPS for each application. Apparently, the maximum capacity of this GPU is ~24 inferences per second for this setup.
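The reasoning behind that capacity estimate can be written as a simple check: if splitting the work across two pipelines leaves the aggregate rate unchanged (2 x ~12 FPS versus ~24 FPS for one pipeline), the pipelines are competing for one saturated resource, which tegrastats identifies as the GPU. The looks_saturated helper and its 10% tolerance are assumptions made for this sketch.

```python
def looks_saturated(single_stream_fps, per_stream_fps, tolerance=0.1):
    """True if running streams side by side adds no aggregate throughput.

    A flat aggregate suggests the streams share one saturated resource.
    `tolerance` allows for measurement noise (an arbitrary 10% here).
    """
    aggregate = sum(per_stream_fps)
    return aggregate <= single_stream_fps * (1 + tolerance)


# Approximate rates observed in this post: one CSI pipeline vs two side by side.
print(looks_saturated(24, [12, 12]))
```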

Note: NVIDIA advertises that a DeepStream ResNet-based object detector application can concurrently handle 14 independent 1080p 30 FPS video streams on a TX2. Here we see that this is far from true when using an industry-standard detection model like SSD Inception V2 COCO.

What we have achieved: we've explored an optimized streaming object detection pipeline using the DeepStream SDK, and we've achieved the maximum detection throughput possible, as defined by the device's hardware limits.