This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Resume feature is available in ADF!

We have enhanced the resume capability in ADF so that you can build robust pipelines for many scenarios. With this enhancement, if one of the activities fails, you can rerun the pipeline from that failed activity. When moving data with the copy activity, you can resume the copy from the last failure point at the file level instead of starting over from the beginning. This greatly increases the resilience of your data movement solution, especially for large-scale file movement between file-based stores. The resume capability in the copy activity applies to the following file-based connectors: Amazon S3, Azure Blob, Azure Data Lake Storage Gen1, Azure Data Lake Storage Gen2, Azure File Storage, File System, FTP, Google Cloud Storage, HDFS, and SFTP.
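To make "resume at the file level" concrete, here is a minimal Python sketch of the idea, not ADF's implementation. It assumes a hypothetical checkpoint (a set of already-copied paths) that survives between runs, plus a hypothetical `copy_one` function standing in for the actual transfer. A rerun skips every file the checkpoint already records and continues from the file that failed.

```python
from typing import Callable, Iterable, List, Set


class TransientCopyError(Exception):
    """Simulated failure partway through a copy run (hypothetical)."""


def copy_with_resume(
    files: Iterable[str],
    copied: Set[str],
    copy_one: Callable[[str], None],
) -> List[str]:
    """Copy each file not yet in `copied` and return the files copied in this run.

    `copied` plays the role of a checkpoint that survives between runs,
    so a rerun skips files that reached the destination earlier.
    """
    copied_now: List[str] = []
    for path in files:
        if path in copied:
            continue  # already at the destination: skip instead of re-copying
        copy_one(path)  # may raise; earlier progress stays in `copied`
        copied.add(path)  # record success only after the file is fully copied
        copied_now.append(path)
    return copied_now


# Demo: five files, and the fourth fails on its first attempt.
files = [f"data/part-{i:03d}.csv" for i in range(5)]
fail_once = {"data/part-003.csv"}


def flaky_copy(path: str) -> None:
    """Stand-in for a real transfer; raises once for each path in `fail_once`."""
    if path in fail_once:
        fail_once.discard(path)
        raise TransientCopyError(path)


checkpoint: Set[str] = set()
try:
    copy_with_resume(files, checkpoint, flaky_copy)  # first run fails midway
except TransientCopyError:
    pass
print(sorted(checkpoint))  # the three files copied before the failure

rerun = copy_with_resume(files, checkpoint, flaky_copy)
print(rerun)  # only part-003 and part-004 are copied on the rerun
```

The key design point is that a file is recorded only after it has been fully copied. A failure therefore never marks a partial file as done, and a rerun never repeats work that already succeeded.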

Customers have built robust pipelines to move petabytes of data with this feature

Customers can benefit from this improvement when doing data lake migration, for example migrating terabytes or petabytes of data from Amazon S3 to Azure Data Lake Storage Gen2. The benefits include a higher success rate for data lake migration projects, more flexibility to control the migration process, and less time and effort spent on partitioning data or building other solutions explicitly for high resilience. Some early adopters who tried this feature in preview successfully moved petabytes of data from AWS S3 to Azure with a simple pipeline containing a single copy activity. Whenever an unexpected failure occurred during the migration, they simply reran the pipeline from the last failure point, or specified a retry count to let the copy activity retry automatically. The copy activity then continued copying the remaining files on AWS S3 without re-copying any files that had already reached the destination in the previous run.
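The combination of a retry count with file-level resume can be sketched as follows. This is a hypothetical model, not how ADF schedules retries. The `retry` parameter only loosely mirrors the retry count you can set on an activity, and `copy_one` and the file names are made up for illustration. Because every attempt shares one checkpoint, a retry picks up at the file that failed, and files that already succeeded are never attempted again.

```python
from collections import Counter
from typing import Callable, List, Set


class TransientCopyError(Exception):
    """Simulated transient failure (hypothetical)."""


def run_with_retries(
    files: List[str], copy_one: Callable[[str], None], retry: int
) -> Set[str]:
    """Copy all files, retrying up to `retry` times after a failure.

    Every attempt shares one checkpoint (`copied`), so a retry resumes from
    the last failure point instead of starting from the first file.
    """
    if retry < 0:
        raise ValueError("retry must be non-negative")
    copied: Set[str] = set()
    failures = 0
    while True:
        try:
            for path in files:
                if path not in copied:
                    copy_one(path)
                    copied.add(path)
            return copied  # every file reached the destination
        except TransientCopyError:
            failures += 1
            if failures > retry:
                raise  # retries exhausted: surface the last failure


# Demo: six files; two of them fail once each.
files = [f"s3/part-{i:03d}" for i in range(6)]
fail_once = {"s3/part-001", "s3/part-004"}
attempts: Counter = Counter()


def flaky_copy(path: str) -> None:
    """Stand-in for a real transfer that counts attempts per file."""
    attempts[path] += 1
    if path in fail_once:
        fail_once.discard(path)
        raise TransientCopyError(path)


result = run_with_retries(files, flaky_copy, retry=2)
print(sum(attempts.values()))  # 8 attempts: 6 files plus 2 retried failures
```

With two retries available, the run completes after 8 transfer attempts. Only the two files that failed are attempted twice. Restarting from the first file on each retry would have repeated every earlier success as well.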

How to resume copy from the last failure point at file level

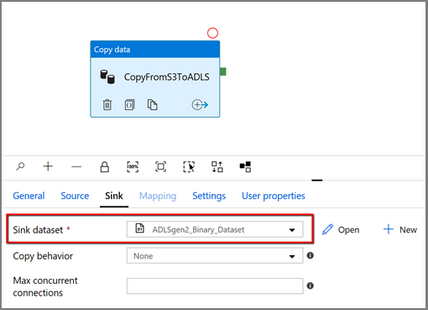

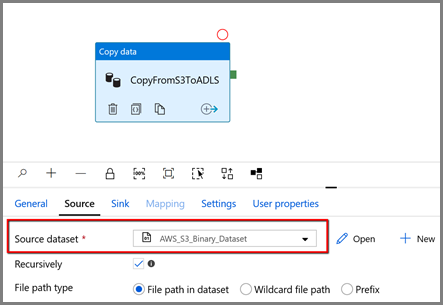

Configuration on authoring page for copy activity:

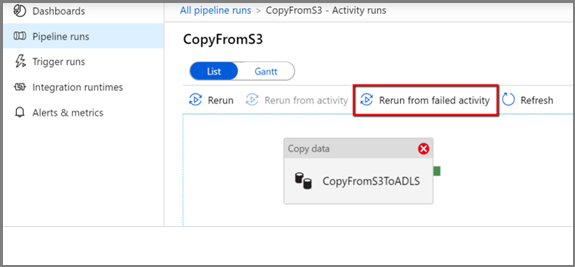

Resume from last failure on monitoring page:

Note:

When you copy data from Amazon S3, Azure Blob, Azure Data Lake Storage Gen2, or Google Cloud Storage, the copy activity can resume regardless of how many files have already been copied. For the other file-based source connectors, the copy activity currently supports resuming from only a limited number of files, usually in the range of tens of thousands, depending on the length of the file paths; files beyond this number are re-copied during reruns.
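One way to picture this limit is a checkpoint that can only hold a bounded amount of path data. The following Python sketch is a deliberately simplified, hypothetical model. The byte budget (30 bytes here, so it fits three short paths) and the idea that path length is what consumes it are assumptions made for illustration. The actual limit is internal to ADF and is described above only as tens of thousands of files, depending on path length.

```python
from typing import Callable, List, Set


class TransientCopyError(Exception):
    """Simulated failure (hypothetical)."""


class BoundedCheckpoint:
    """Remembers copied paths only until a byte budget is used up.

    The budget is a hypothetical stand-in for the connector-dependent limit;
    longer paths consume it faster, so fewer files can be remembered.
    """

    def __init__(self, budget_bytes: int) -> None:
        self.budget_bytes = budget_bytes
        self.used_bytes = 0
        self.paths: Set[str] = set()

    def record(self, path: str) -> None:
        size = len(path.encode("utf-8"))
        if self.used_bytes + size <= self.budget_bytes:
            self.paths.add(path)
            self.used_bytes += size
        # Otherwise the success is forgotten, so a rerun copies the file again.

    def __contains__(self, path: str) -> bool:
        return path in self.paths


def copy_run(
    files: List[str], checkpoint: BoundedCheckpoint, copy_one: Callable[[str], None]
) -> List[str]:
    """Copy files the checkpoint does not remember; return those copied now."""
    copied_now: List[str] = []
    for path in files:
        if path in checkpoint:
            continue
        copy_one(path)
        checkpoint.record(path)
        copied_now.append(path)
    return copied_now


# Demo: each path is 10 bytes and the budget holds three of them.
files = [f"dir/f{i}.bin" for i in range(6)]
fail_once = {"dir/f5.bin"}
delivered: List[str] = []  # every successful transfer, including repeats


def flaky_copy(path: str) -> None:
    """Stand-in transfer that fails once for each path in `fail_once`."""
    if path in fail_once:
        fail_once.discard(path)
        raise TransientCopyError(path)
    delivered.append(path)


checkpoint = BoundedCheckpoint(budget_bytes=30)
try:
    copy_run(files, checkpoint, flaky_copy)  # copies f0..f4, then f5 fails
except TransientCopyError:
    pass

rerun = copy_run(files, checkpoint, flaky_copy)
print(rerun)  # f3 and f4 are re-copied (not remembered); f5 is copied for the first time
```

In this model the rerun still finishes the job. The cost is that f3 and f4 are copied twice, because their first successful copies did not fit in the checkpoint.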

For more details, please refer to this.