This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Guest post by Noah Gift (https://noahgift.com/)

Noah Gift is the founder of Pragmatic AI Labs. He currently lectures in the MSDS program at Northwestern, the Duke MIDS Graduate Data Science Program, the Graduate Data Science program at UC Berkeley, the UC Davis Graduate School of Management MSBA program, and the UNC Charlotte Data Science Initiative. His roles include teaching and designing graduate machine learning, A.I., and Data Science courses, and consulting on Machine Learning and Cloud Architecture for students and faculty. These responsibilities include leading a multi-cloud certification initiative for students.

His most recent books are:

- Pragmatic A.I.: An introduction to Cloud-Based Machine Learning (Pearson, 2018)

- Python for DevOps (O’Reilly, 2020)

- Cloud Computing for Data Analysis

- Testing in Python

Jupyter notebooks are increasingly the hub of both Data Science and Machine Learning projects. All major vendors have some form of Jupyter integration. Some notebook tasks lean toward engineering and others toward science.

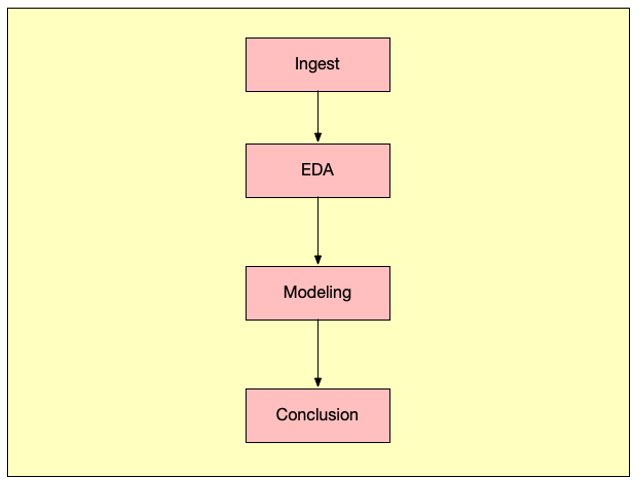

A good example of a science-focused workflow is the traditional notebook-based Data Science workflow. First, data is collected; it could come from anything from a SQL query to a CSV file hosted on GitHub.

Next, the data is explored using visualization, statistics, and unsupervised machine learning. A model may then be created, and the results are shared in a conclusion.

Data Science Notebook Workflow

This often fits very well into a markdown-based workflow where each section is a Markdown heading.
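The workflow above can be sketched as a sequence of notebook sections, one per heading. This is a minimal illustration using only the Python standard library: the section names, the inline CSV data, and the single-feature least-squares model are all assumptions made for the example, not part of the original workflow.

```python
import csv
import io
import statistics

# ## Ingest
# Hypothetical inline data standing in for a SQL query result or a CSV file.
RAW = """hours,score
1,52
2,58
3,65
4,70
5,78
"""
rows = [
    {"hours": float(r["hours"]), "score": float(r["score"])}
    for r in csv.DictReader(io.StringIO(RAW))
]

# ## EDA
# Basic descriptive statistics on each column.
hours = [r["hours"] for r in rows]
scores = [r["score"] for r in rows]
mean_hours = statistics.mean(hours)
mean_score = statistics.mean(scores)

# ## Modeling
# Ordinary least squares for one feature, computed by hand.
covariance = sum((h - mean_hours) * (s - mean_score) for h, s in zip(hours, scores))
variance = sum((h - mean_hours) ** 2 for h in hours)
slope = covariance / variance
intercept = mean_score - slope * mean_hours

# ## Conclusion
print(f"Each extra hour is associated with about {slope:.1f} more points.")
```

In a real notebook each commented heading would be its own Markdown cell, with the code beneath it in one or more code cells.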

Often that Jupyter notebook is then checked into source control. Is this notebook source code or a document?

This is an important consideration, and it is best to treat it as both.

DevOps for Jupyter Notebooks

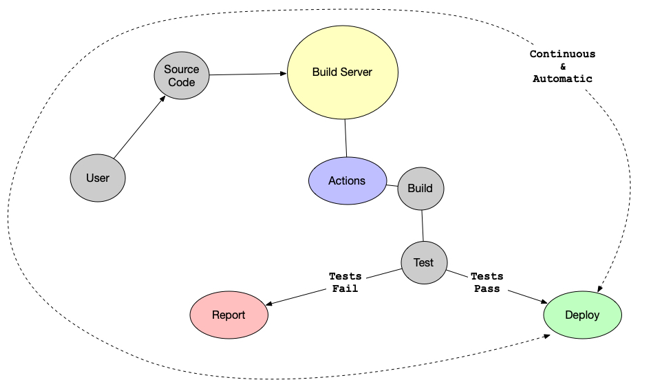

DevOps is a popular technology best practice, and it is often used in combination with Python. The center of the universe for DevOps is the build server, which enables automation. This automation includes linting, testing, reporting, building, and deploying code. This process is called continuous delivery.

DevOps Workflow

The benefits of continuous delivery are many. The code is automatically tested, and it is always in a deployable state. Automation of best practices creates a cycle of continuous improvement in a software project.
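The build-server automation described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular build server is implemented: the `run_pipeline` helper and the step names are hypothetical, and the placeholder commands stand in for real ones such as `make test`.

```python
import subprocess
import sys


def run_pipeline(steps):
    """Run (name, command) steps in order and stop at the first failure.

    Returns the names of the steps that passed and the name of the
    failing step, or None if every step passed.
    """
    completed = []
    for name, command in steps:
        result = subprocess.run(command)
        if result.returncode != 0:
            return completed, name
        completed.append(name)
    return completed, None


# Placeholder commands: one exits with status 0, the other with status 1.
# In a real project these would be commands such as ["make", "test"].
passing = [sys.executable, "-c", "raise SystemExit(0)"]
failing = [sys.executable, "-c", "raise SystemExit(1)"]

steps = [("lint", passing), ("test", failing), ("deploy", passing)]
completed, failed = run_pipeline(steps)
print("passed:", completed, "failed:", failed)
```

Stopping at the first failing step is what keeps broken code from reaching the deploy stage, which is how the project stays in a deployable state.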

A question should crop up if you are a data scientist. Isn't a Jupyter notebook source code too? Wouldn't it benefit from these same practices?

The answer is yes.

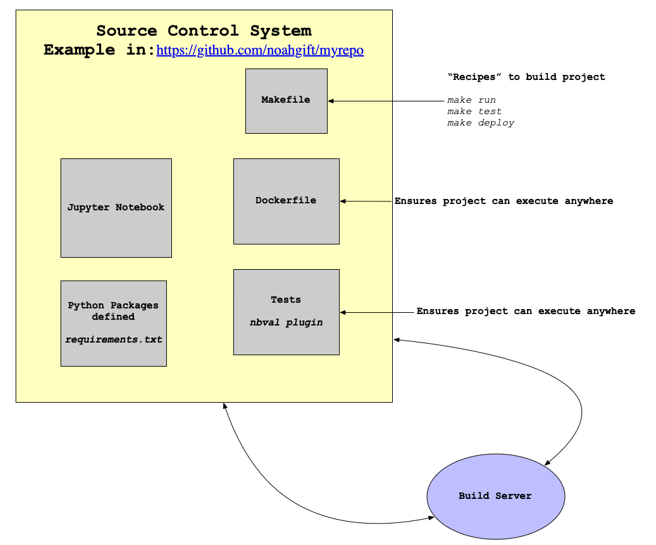

The diagram shows a proposed best-practices directory structure for a Jupyter-based project in source control.

The Makefile holds the recipes to build, run, and deploy the project via make commands such as make test. The Dockerfile holds the actual runtime logic, which makes the project truly portable.

FROM python:3.7.3-stretch

The Jupyter notebook itself can be tested via nbval, a plugin for pytest.
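To make the idea of testing notebook cells concrete, the sketch below re-runs each code cell of a notebook and compares the freshly printed output with the output stored in the file. It is a simplified stand-in that uses only the standard library, not nbval's implementation; `check_notebook` and the inline notebook are hypothetical. With nbval installed, the equivalent check is typically run as `pytest --nbval` followed by the notebook path.

```python
import contextlib
import io


def _as_text(value):
    """Notebook JSON stores text as either a string or a list of strings."""
    return value if isinstance(value, str) else "".join(value)


def check_notebook(nb):
    """Re-run code cells in order; return indexes of cells whose stdout changed."""
    namespace = {}  # shared across cells, like a notebook kernel
    mismatches = []
    for index, cell in enumerate(nb["cells"]):
        if cell["cell_type"] != "code":
            continue
        stored = "".join(
            _as_text(out["text"])
            for out in cell.get("outputs", [])
            if out.get("output_type") == "stream" and out.get("name") == "stdout"
        )
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(_as_text(cell["source"]), namespace)
        if buffer.getvalue() != stored:
            mismatches.append(index)
    return mismatches


# Hypothetical notebook in the nbformat 4 layout. The last cell's stored
# output is deliberately stale.
notebook = {
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "source": "# Ingest", "metadata": {}},
        {
            "cell_type": "code",
            "source": ["x = 2 + 2\n", "print(x)"],
            "metadata": {},
            "execution_count": 1,
            "outputs": [{"output_type": "stream", "name": "stdout", "text": ["4\n"]}],
        },
        {
            "cell_type": "code",
            "source": "print(x * 10)",
            "metadata": {},
            "execution_count": 2,
            "outputs": [{"output_type": "stream", "name": "stdout", "text": "41\n"}],
        },
    ],
}

stale_cells = check_notebook(notebook)
print("cells with changed output:", stale_cells)
```

The sketch only compares printed text. A real plugin also has to handle rich outputs, errors, and values that legitimately change between runs, such as timestamps.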

The requirements for the project are kept in a requirements.txt file.

Every time the project is changed, the build server picks up the change and runs tests on the Jupyter notebook cells themselves.

DevOps isn't just for software-only projects. DevOps is a best practice that fits well with the ethos of Data Science.

Why guess whether your notebook works, your data is reproducible, or your project can deploy?

You can read more about Python for DevOps by reading the book.

You can learn about Cloud Computing for Data Analysis, the missing semester of Data Science, by reading the book.

You can read more about Testing in Python by reading the book.

Other Resources

Microsoft Learn http://docs.microsoft.com/learn

Azure Notebooks http://notebooks.azure.com