This post has been republished via RSS; it originally appeared at: Core Infrastructure and Security Blog articles.

The Scenario

Have you ever found yourself lost in the maze of credit card options, navigating through countless comparison websites, unsure of the accuracy and timeliness of their information? I certainly have. Recently, I embarked on a quest to find the perfect credit card, one that rewards my spending habits with frequent flyer points. However, relying solely on popular comparison platforms left me questioning the reliability of their data, often overshadowed by biased advertisements.

But then, a beacon of hope emerged: the realization that all bank product information is accessible through a common API. With this revelation, I set out to craft my own solution – a personalized Copilot to guide me through the sea of credit card offerings.

Building your own Copilot allows customization so that you can tailor it to your specific needs, industry, or domain, ensuring that it provides more relevant and accurate suggestions. In addition, it is great for data privacy because when using your own data, you maintain control over its privacy and security, avoiding concerns about sharing sensitive information with third-party platforms.

Furthermore, it provides improved performance. With access to your proprietary data, the Copilot can offer insights and suggestions that are more aligned with your organization's unique challenges and objectives. Moreover, building your own Copilot can potentially provide cost savings in the long run compared to relying on external services, especially as your usage scales up.

Finally, developing your own Copilot allows you to innovate and differentiate your products or services, potentially giving you a competitive advantage in your market.

Now, you might wonder, why not utilize existing tools like Bing Chat or Gemini? While they offer convenience, their reliance on scraped data from comparison websites introduces the risk of inaccuracies and outdated information, defeating the purpose of informed decision-making.

This endeavor is not about dispensing financial advice. Instead, it's a testament to the power of leveraging your own data to create your own Copilot. Consider it a journey of exploration, driven by curiosity and a desire to learn more about AI.

Important Concepts

Before we start, let me highlight a few key concepts.

- RAG: Retrieval-augmented generation (RAG) enhances generative AI models by integrating facts from external sources, addressing limitations in LLMs. While LLMs excel at responding quickly to general prompts due to their parameterized knowledge, they lack depth for specific or current topics.

- Vector Database: A Vector Database is a structured collection of vectors, which are mathematical representations of data points in a multi-dimensional space. This type of database is often used in machine learning and data analysis tasks, where data points need to be efficiently stored, accessed, and manipulated for various computational tasks.

- Prompt: In the context of AI and natural language processing, a prompt refers to a specific input or query provided to an AI model to generate a desired output. Prompts can vary in complexity, from simple questions to more elaborate instructions, and they play a crucial role in directing the AI's behaviour and generating meaningful responses.

- LLM: A Large Language Model (LLM) is a class of AI model, such as OpenAI's GPT (Generative Pre-trained Transformer) models, that is trained on vast amounts of text data to understand and generate human-like text. LLMs are capable of tasks like language translation, text completion, summarization, and more, making them versatile tools for natural language processing applications.

The High-Level Design

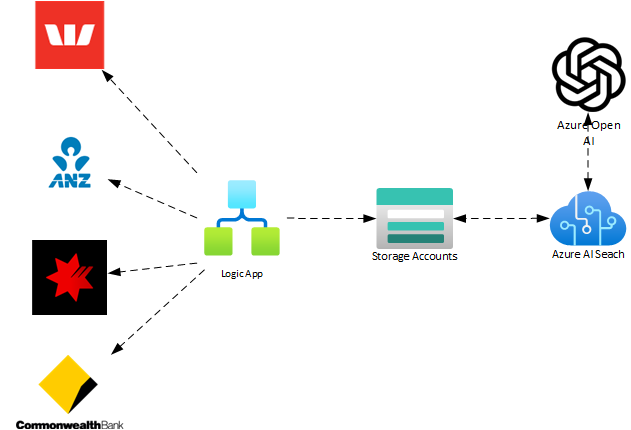

The following are the resources we will leverage for this scenario.

- Logic Apps

- Storage Account

- Azure OpenAI

- Azure AI Search

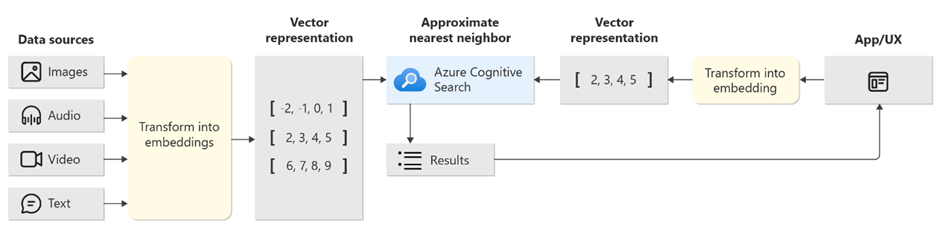

The following is a high-level diagram of what the solution looks like.

The Implementation

Now let’s break down how this can be accomplished. I will assume you already have all these resources deployed, so I will only go into the configuration details. However, you can easily deploy everything that is required with the following commands:

Note: You may have to install some Az modules if they are not already installed. Also ensure you choose unique names for your resources.

Collecting The Data

Let’s start by looking at how we can retrieve the bank product data and make it our own. This is the data that will be used to augment the response you receive back from the LLM.

Before you start:

- Make sure you enable System Managed Identity for your Logic App resource.

- Make sure you give the Logic App managed identity the Storage Blob Data Contributor role in the Storage Account, and you create a container named products.

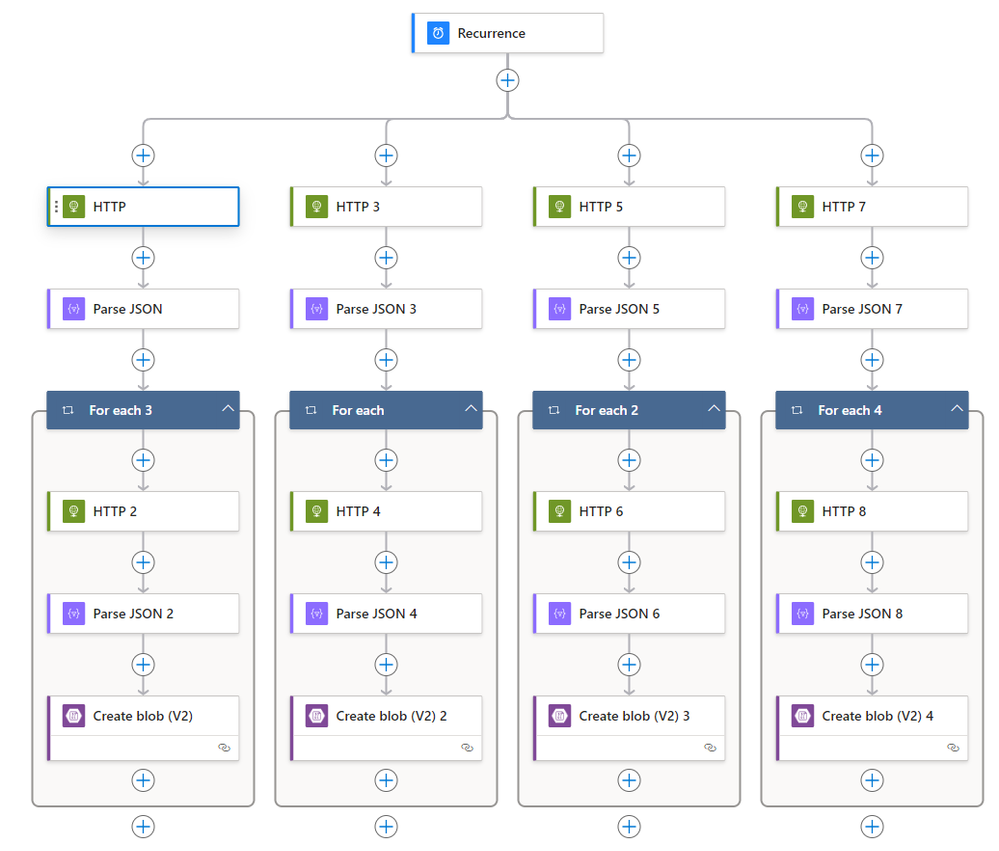

I have created a Logic App with a Recurrence Trigger of once a day.

I have also set up four parallel branches, one for each bank.

Let’s take a deeper look at the first branch. The other branches will be the same except for the URIs we are hitting.

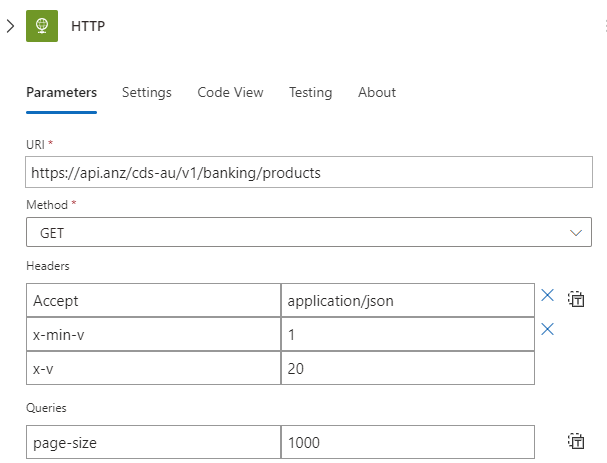

- ANZ: https://api.anz/cds-au/v1/banking/products

- Westpac: https://digital-api.westpac.com.au/cds-au/v1/banking/products/

- CBA: https://api.commbank.com.au/public/cds-au/v1/banking/products/

- NAB: https://openbank.api.nab.com.au/cds-au/v1/banking/products

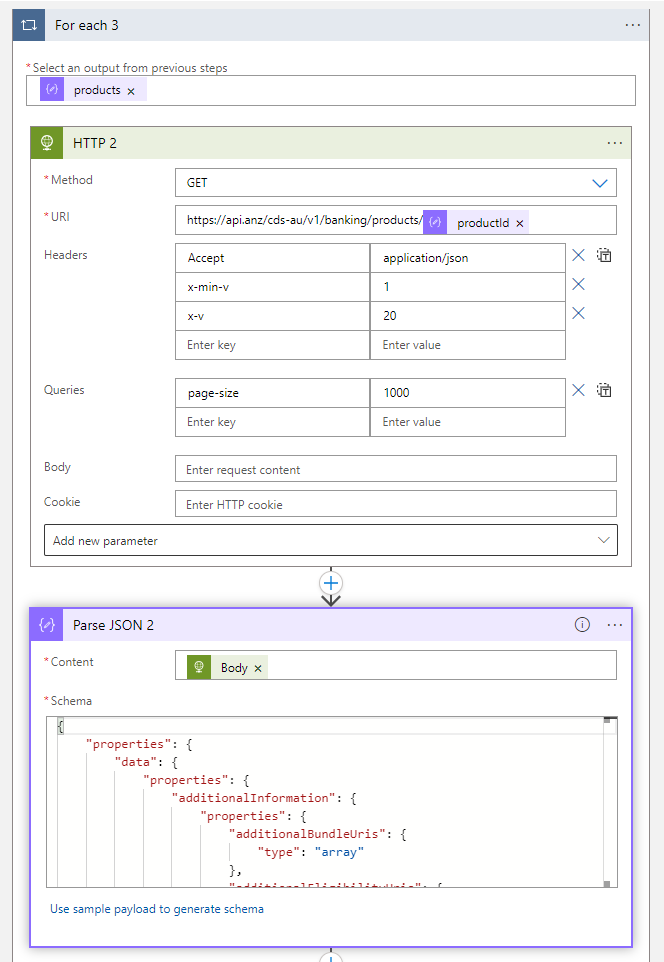

The first step is a GET request to the bank API. If you are replicating this, just enter the values as below. These are the minimum and maximum API versions and the maximum number of results to return.
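For readers who prefer code over the designer view, here is a minimal Python sketch of the same request. It assumes the Consumer Data Standards conventions of sending the API versions in the `x-v` and `x-min-v` headers and the result limit in the `page-size` query parameter. The default values used here (`3`, `1`, and `1000`) are placeholders I picked for illustration, not the values from the screenshot, and `build_products_request` is a hypothetical helper name.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Product endpoints listed in this article, one per bank.
BANK_ENDPOINTS = {
    "ANZ": "https://api.anz/cds-au/v1/banking/products",
    "Westpac": "https://digital-api.westpac.com.au/cds-au/v1/banking/products/",
    "CBA": "https://api.commbank.com.au/public/cds-au/v1/banking/products/",
    "NAB": "https://openbank.api.nab.com.au/cds-au/v1/banking/products",
}


def build_products_request(base_url, x_v="3", x_min_v="1", page_size=1000):
    """Build (but do not send) the GET request for a bank's product list.

    The version and page-size defaults are illustrative assumptions.
    """
    url = f"{base_url.rstrip('/')}?{urlencode({'page-size': page_size})}"
    headers = {
        "x-v": x_v,          # maximum API version the client accepts
        "x-min-v": x_min_v,  # minimum API version the client accepts
        "Accept": "application/json",
    }
    return Request(url, headers=headers, method="GET")


request = build_products_request(BANK_ENDPOINTS["CBA"])
print(request.get_method(), request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send the request. I left that out so the sketch runs without network access.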

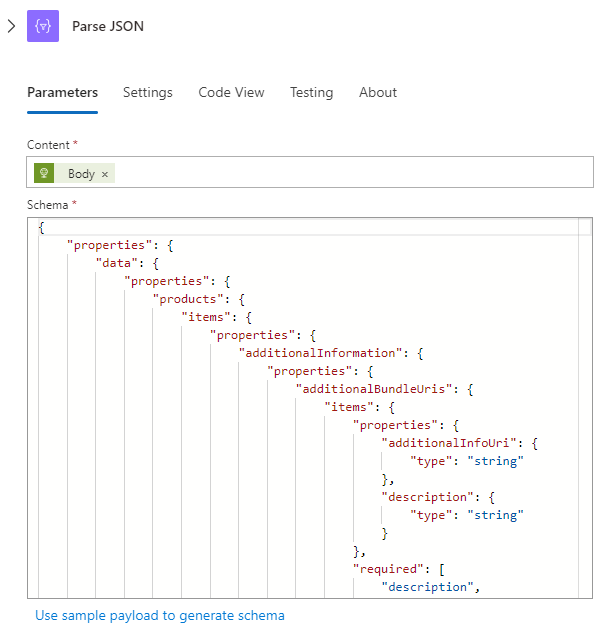

Next, we parse the JSON using the Body of the Request as input. You can get the schema from the sample responses the banks provide in their API documentation. For example, for CBA you can retrieve it from here.

Now that we have all the bank products, we iterate through each product, using the productId from the parsed JSON object to retrieve the details of that product. We then parse the JSON of each response as we did before. Again, the schema of a product can be retrieved from the bank API documentation.
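The loop above can be sketched in Python as follows. This assumes the product list response follows the Consumer Data Standards shape, with the products under `data.products` and each carrying a `productId`, and that the details live at the list URL with the ID appended. `product_detail_urls` is a hypothetical helper, and the sample payload is made up for illustration.

```python
import json
from urllib.parse import quote


def product_detail_urls(base_url, list_response_body):
    """Return one product-detail URL per product in a product-list response."""
    payload = json.loads(list_response_body)
    products = payload.get("data", {}).get("products", [])
    base = base_url.rstrip("/")
    # Skip entries without an ID rather than failing the whole run.
    return [
        f"{base}/{quote(product['productId'], safe='')}"
        for product in products
        if "productId" in product
    ]


# Made-up sample response, trimmed to the fields the loop needs.
sample_body = json.dumps(
    {"data": {"products": [{"productId": "abc-123"}, {"productId": "def-456"}]}}
)
urls = product_detail_urls("https://api.anz/cds-au/v1/banking/products", sample_body)
print(urls)
```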

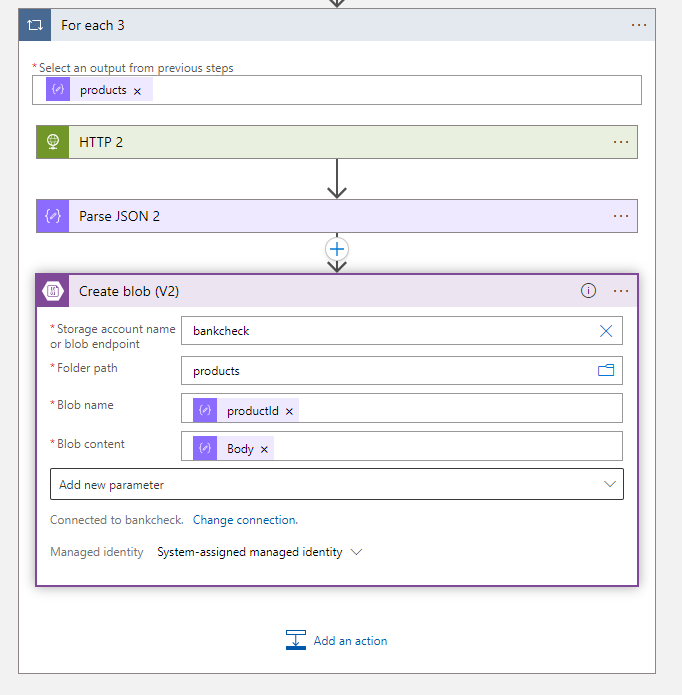

Finally, we configure where we want those documents to be stored. We are going to use the Logic App's managed identity to securely connect to the Storage Account and send those documents to a container named products.

Once that connection is established, we configure the action to create the blobs in the “products” container, name each blob with the product ID, and set the content of each blob to the product details we got from the bank.
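If you wanted to do the same storage step outside Logic Apps, a rough Python equivalent could look like the sketch below. It assumes the `azure-identity` and `azure-storage-blob` packages and a product detail response that carries its ID under `data.productId`. Both function names are hypothetical, and the account URL is something you would supply yourself.

```python
import json


def product_to_blob(product_detail):
    """Return (blob_name, blob_bytes) for one product-detail response."""
    # The blob is named after the product ID, as in the Logic App.
    blob_name = product_detail["data"]["productId"]
    blob_bytes = json.dumps(product_detail, indent=2).encode("utf-8")
    return blob_name, blob_bytes


def upload_product(account_url, product_detail):
    """Write one product document to the 'products' container."""
    # Imported lazily so product_to_blob works without the Azure SDK installed.
    from azure.identity import DefaultAzureCredential
    from azure.storage.blob import BlobServiceClient

    blob_name, blob_bytes = product_to_blob(product_detail)
    # DefaultAzureCredential picks up a managed identity when one is available.
    service = BlobServiceClient(account_url=account_url, credential=DefaultAzureCredential())
    container = service.get_container_client("products")
    container.upload_blob(name=blob_name, data=blob_bytes, overwrite=True)


# Made-up product used only to show the naming rule.
name, content = product_to_blob({"data": {"productId": "abc-123", "name": "Sample Card"}})
print(name, len(content))
```

Overwriting on upload keeps the daily run idempotent: the same product ID always maps to the same blob.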

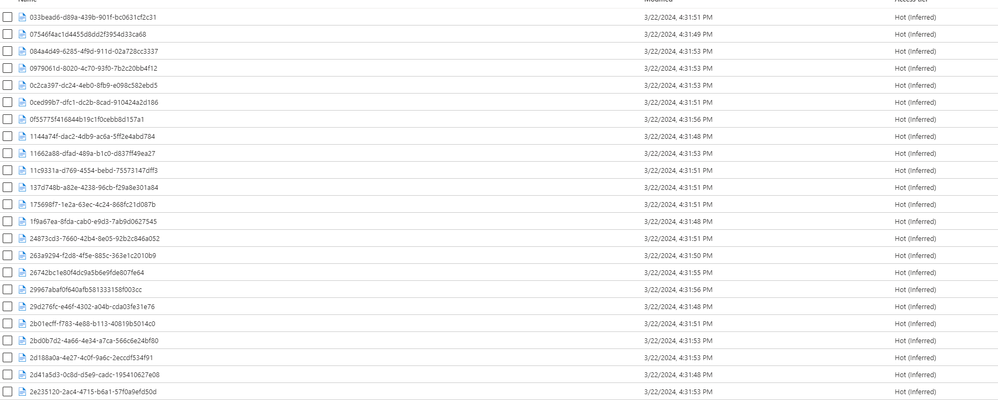

Once you trigger your Logic App workflow and it completes successfully, you will see several new blobs in the products container as per below.

Great, now we have the data to augment our responses!

Azure OpenAI Deployments

Before you start:

- Ensure you enable System Managed Identity for your Azure AI Search resource.

- Make sure you give the Azure AI Search managed identity the Cognitive Services OpenAI User role in the OpenAI resource.

- Ensure you enable System Managed Identity for your Azure OpenAI resource.

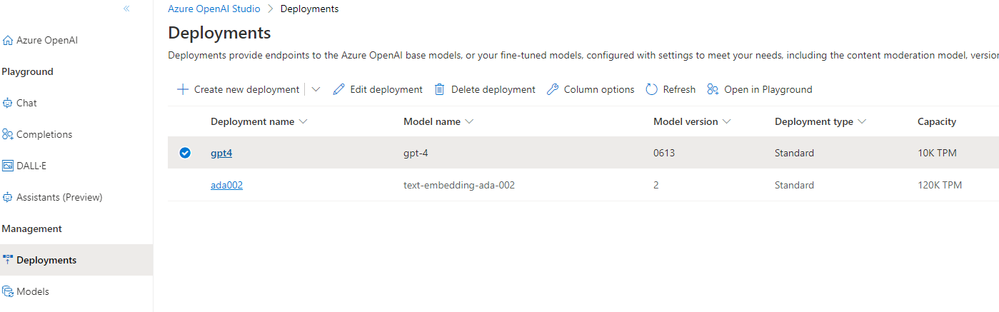

Now let’s set up Azure OpenAI with the models you need.

Open the Azure OpenAI Studio. Go to Deployments and create two new deployments. For the first one select GPT-4 and for the second one select ADA-002, as displayed below.

You are performing this step because these models will be used in the next sections.

The GPT-4 model is the LLM used to generate the responses based on our prompts and the ADA-002 model is the model used to generate the embeddings.

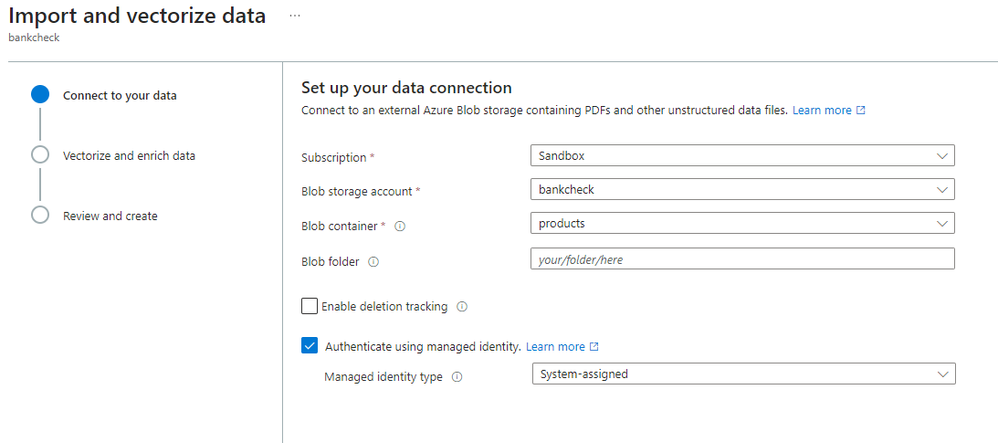

Import and Vectorize Data

Before you start:

- Make sure you give the Azure AI Search managed identity the Storage Blob Data Reader role in the Storage Account.

Now you can move to Azure AI Search, where you import and vectorize the data. Azure AI Search takes vector embeddings and uses a nearest neighbors algorithm to place similar vectors close together in an index. Internally, it creates a vector index for each vector field.
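To make the idea of nearest neighbors concrete, here is a tiny brute-force sketch using cosine similarity. It is not how Azure AI Search is implemented internally; the three-dimensional vectors and document keys are made up, whereas real embeddings have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbors(query, vectors, k=2):
    """Return the keys of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vector), key) for key, vector in vectors.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [key for _, key in scored[:k]]


# Toy index: each key stands in for an indexed document chunk.
toy_index = {
    "frequent-flyer-card": [0.9, 0.1, 0.0],
    "low-rate-card": [0.1, 0.9, 0.0],
    "home-loan": [0.0, 0.1, 0.9],
}
neighbors = nearest_neighbors([0.8, 0.2, 0.0], toy_index, k=2)
print(neighbors)
```

The query vector points in almost the same direction as the frequent flyer card, so that key comes back first.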

Doing this is very simple. Go to your Azure AI Search resource and click on Import and Vectorize Data.

Select your subscription, the storage account, container and make sure you tick the box to authenticate using the managed identity.

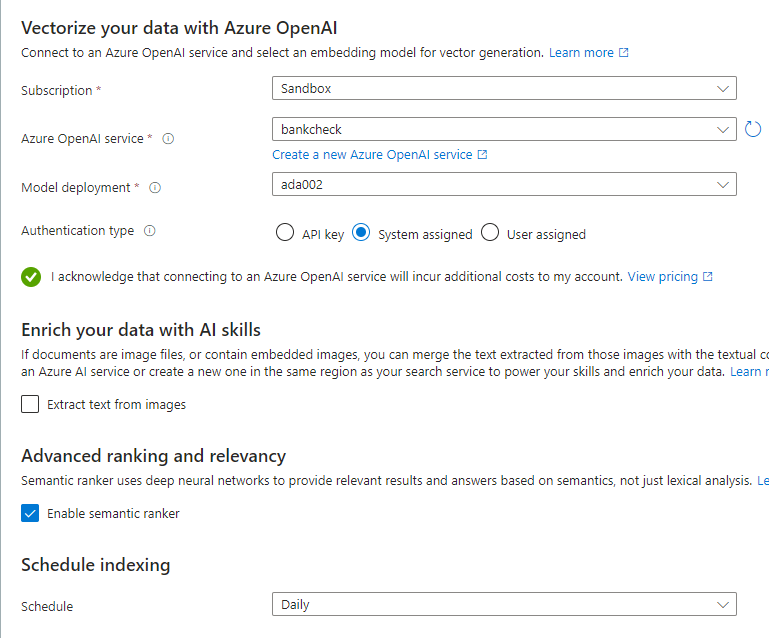

Next, select the Azure OpenAI Service, select the ADA-002 model you deployed earlier, and make sure the authentication type is managed identity, semantic ranker is enabled, and schedule indexing is set to Daily.

The ADA-002 model is designed to create embeddings that capture semantic meaning. These embeddings are stored in a vector database, which in this case is Azure AI Search itself.

The Semantic Ranker adds the following:

- A secondary ranking over an initial result set that was scored using BM25 or RRF. This secondary ranking uses multi-lingual, deep learning models adapted from Microsoft Bing to promote the most semantically relevant results.

- It extracts and returns captions and answers in the response, which you can render on a search page to improve the user's search experience.

Basically, semantic ranking improves search relevance by using language understanding to re-rank search results.
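The RRF mentioned above is Reciprocal Rank Fusion, the step that merges the keyword and vector result lists before the semantic ranker re-ranks them. Here is a minimal sketch of the idea, assuming the common constant k = 60 and made-up document IDs. It illustrates the formula only and is not the service's actual code.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one fused ranking.

    Each document scores 1 / (k + rank) for every list it appears in,
    so documents ranked well by several lists rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


keyword_results = ["card-a", "card-b", "card-c"]  # e.g. a BM25 ranking
vector_results = ["card-b", "card-d", "card-a"]   # e.g. a vector ranking
fused = reciprocal_rank_fusion([keyword_results, vector_results])
print(fused)
```

Here card-b wins because it ranks near the top of both lists, even though card-a is first in the keyword list.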

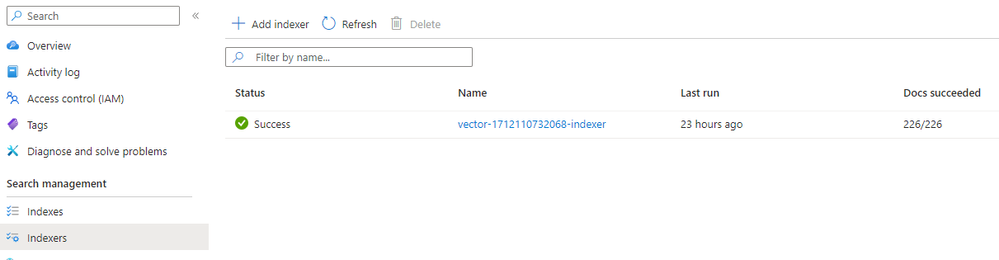

You can verify everything worked as expected by checking your indexers in Azure AI Search. The figure below shows the indexer was successfully created with 226 documents indexed.

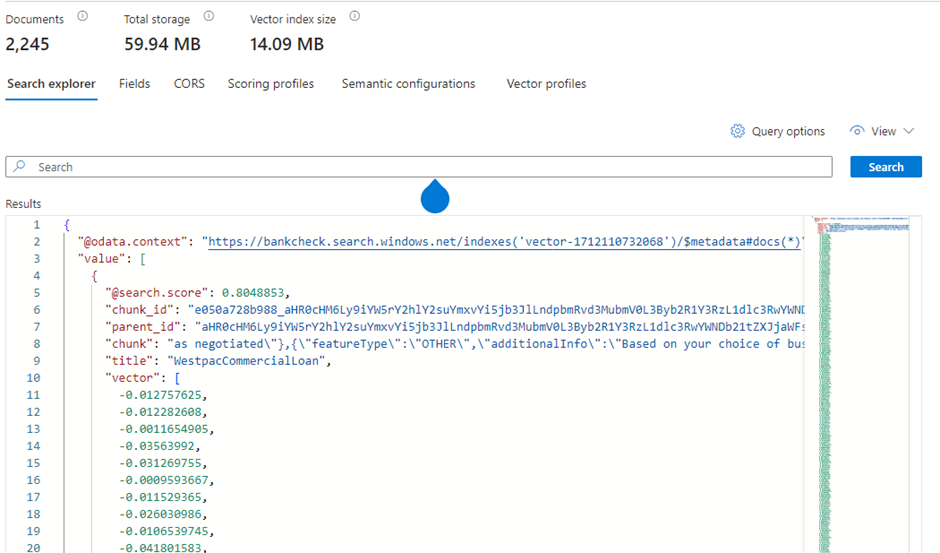

You can also visualize all vectors by selecting them and hitting the Search button. Notice that here it displays 2245 documents, because the 226 source documents are broken down into chunks and each chunk is indexed as its own document. The vector is an array of 1536 floating point values, which corresponds to the size of the embeddings produced by the ADA-002 model.
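To show why 226 documents can turn into 2245 index entries, here is a simplified sketch of fixed-size chunking with overlap. The chunk size and overlap are assumptions chosen for illustration, not necessarily what the wizard uses, and `chunk_text` is a hypothetical helper.

```python
def chunk_text(text, size=2000, overlap=500):
    """Split text into chunks of at most `size` characters.

    Consecutive chunks share `overlap` characters so that a sentence cut
    at a boundary still appears whole in one of the two chunks.
    """
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    if not text:
        return []
    step = size - overlap
    last_start = max(len(text) - overlap, 1)
    return [text[start:start + size] for start in range(0, last_start, step)]


chunks = chunk_text("abcdefghij", size=4, overlap=1)
print(chunks)
```

Each chunk is then embedded separately, which is why every entry in the index carries its own 1536-value vector.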

The Flow

From the left:

- Define your data sources.

- Deploy the models to be used.

- Transform your data into embeddings.

- Store those embeddings into a vector store.

From the right:

- Start with a user question or request (prompt).

- The prompt is transformed into an embedding so that we can run a semantic (vector) search alongside the lexical search.

- Send it to Azure AI Search to find relevant information.

- Send the top ranked search results to the LLM.

- Use the natural language understanding and reasoning capabilities of the LLM to generate a response to the initial prompt.
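The last two steps on the right-hand side can be sketched as a small function that turns the top search results into a grounded prompt. The result fields (`title`, `content`), the `[docN]` citation style, and the wording of the system message are my own assumptions for illustration. The playground builds its own prompt for you.

```python
def build_grounded_messages(question, search_results, top_n=3):
    """Combine the top-ranked search results and the user question into chat messages."""
    sources = search_results[:top_n]
    context = "\n\n".join(
        f"[doc{number}] {result['title']}\n{result['content']}"
        for number, result in enumerate(sources, start=1)
    )
    system_message = (
        "Answer using only the sources below and cite them as [docN]. "
        "If the answer is not in the sources, say you do not know.\n\n" + context
    )
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": question},
    ]


# Made-up search results, already ordered by relevance.
results = [
    {"title": "Card A", "content": "Earns frequent flyer points."},
    {"title": "Card B", "content": "Low interest rate."},
]
messages = build_grounded_messages("Which card suits a frequent flyer?", results, top_n=1)
print(messages[0]["content"])
```

The resulting list is in the shape that chat completion APIs generally expect, so the LLM sees the retrieved facts before it sees the question.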

The Prompt

Once all the previous steps have been completed, you are now able to start prompting directly from the Azure AI Studio.

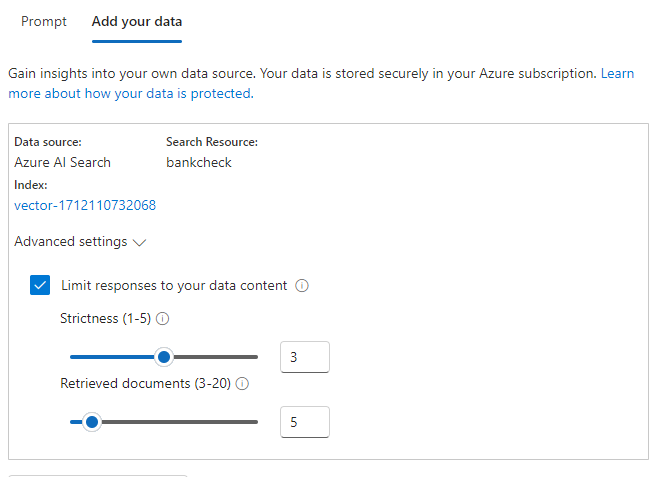

Now you should add your data source to the chat playground so that the LLM is grounded in your data and receives the extra context. This will help you get more accurate answers back.

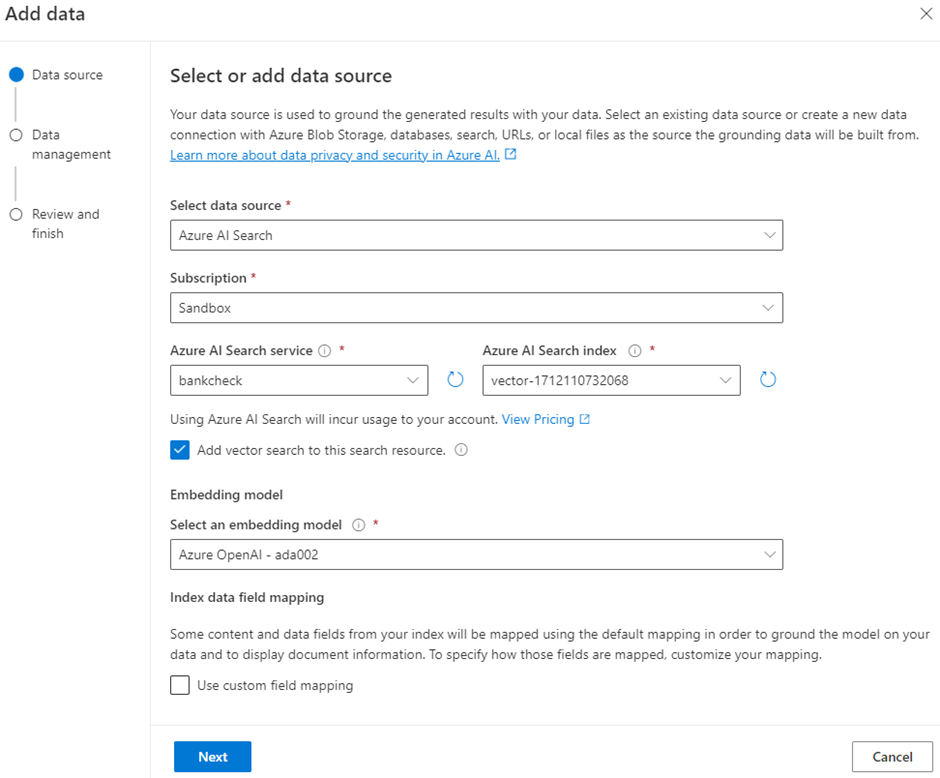

From the Chat Playground in Azure OpenAI Studio, select Add your data and then select + Add a data source.

Select Azure AI Search as your data source, then select the subscription where it is located, the Azure AI Search resource, and the index you created in a previous step. Tick the box to Add vector search to this resource and select the ADA-002 model we deployed earlier.

Now, select Hybrid + semantic as the search type and an existing semantic configuration.
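If you later move from the playground to code, the same choices map onto the `data_sources` section of a chat completions request. The sketch below reflects my understanding of the Azure OpenAI On Your Data request format, so please verify the field names against the API version you use. The endpoint, index, semantic configuration, and deployment names are placeholders, and `build_data_source` is a hypothetical helper.

```python
def build_data_source(search_endpoint, index_name, semantic_configuration, embedding_deployment):
    """Build the data source entry for an Azure AI Search index (assumed format)."""
    return {
        "type": "azure_search",
        "parameters": {
            "endpoint": search_endpoint,
            "index_name": index_name,
            # Use the Azure OpenAI resource's managed identity, as set up earlier.
            "authentication": {"type": "system_assigned_managed_identity"},
            # Corresponds to the "Hybrid + semantic" search type in the playground.
            "query_type": "vector_semantic_hybrid",
            "semantic_configuration": semantic_configuration,
            # Embedding deployment used to vectorize the user's question.
            "embedding_dependency": {
                "type": "deployment_name",
                "deployment_name": embedding_deployment,
            },
            # Restrict answers to the indexed data.
            "in_scope": True,
        },
    }


# All four values below are placeholders.
data_source = build_data_source(
    "https://my-search.search.windows.net",
    "products-index",
    "products-semantic-config",
    "ada-002",
)
request_body = {
    "messages": [{"role": "user", "content": "Which card suits a frequent flyer?"}],
    "data_sources": [data_source],
}
```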

Great! Let’s ask our first question.

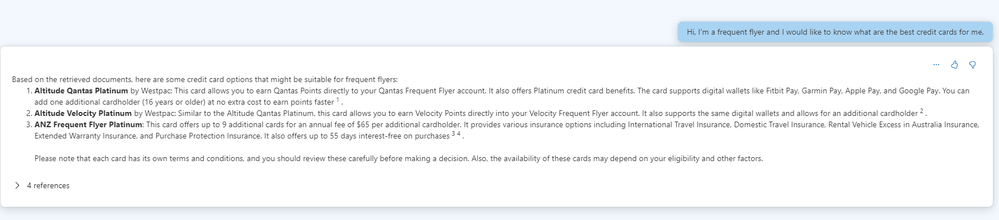

My prompt was that I fly a lot and I want to find out what credit cards are best for me.

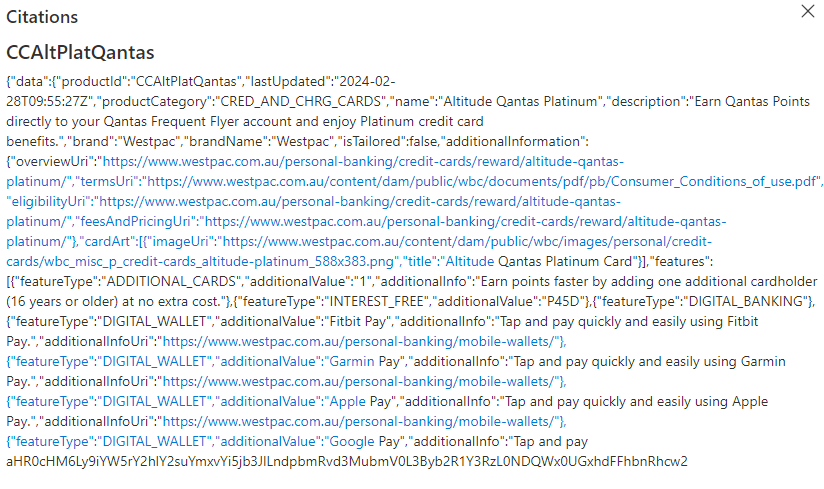

Using my data, it replied with the 3 best credit cards, and it even provided references to the data. I clicked on one of the citations and could quickly see where the data was cited from.

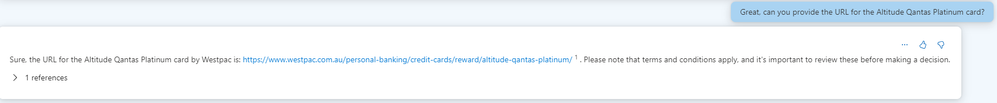

Let’s ask BankCheck Copilot to provide a link to the first card.

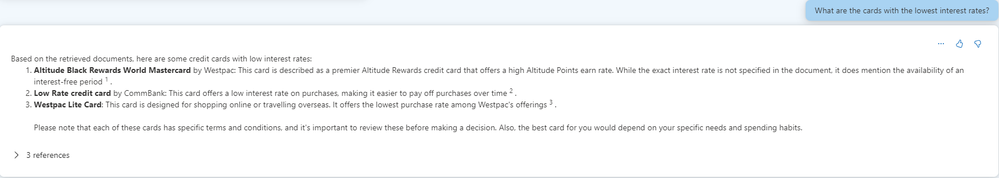

Awesome, let’s ask one more question before we wrap this up.

One thing to note is that by default we are limiting the responses to our own data content as you can see below.

Therefore, if we prompt it to, for example, write a calculator program in Python, it will not do it, as depicted below.

Conclusion

The post outlined the process of building a personalized AI Copilot using your own data, highlighting the benefits of customization, data privacy, improved performance, cost savings, and innovation. It introduced key concepts like Retrieval-augmented generation (RAG), Vector Database, AI prompts, and Large Language Models (LLMs).

I shared a specific scenario of searching for the best credit card, emphasizing the advantage of using direct bank API data over relying on potentially outdated comparison sites. The technical implementation involved using Logic Apps, Azure OpenAI, and Azure AI Search to collect, store, and analyse bank product data. The post detailed steps for data collection, vectorization, and embedding, followed by a demonstration of querying the system with specific prompts. The outcome was a Copilot that can provide accurate, personalized credit card recommendations, illustrating the power of leveraging proprietary data and AI for tailored solutions.

A special mention to Olaf Wrieden who reviewed and provided some good feedback before I released the final version of the article.

As always, I hope this was informative to you and thanks for reading.

Felipe Binotto, Cloud Solution Architect

References

Retrieval Augmented Generation (RAG)

Australia Consumer Data Standards

ChatGPT Over Your Data by LangChain

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.