This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

In this new age of collaboration, with a more refined focus on Data Engineering, Data Science Engineering, and AI Engineering, it is worth stating plainly that the already choppy “swim-lanes” of responsibility have become even more blurred.

In this short post, I want to cover a new feature in Azure Machine Learning Studio named Data Drift Detection. For this article, data drift is the change in model input data that leads to model performance degradation. It is one of the top reasons model accuracy degrades over time, so monitoring data drift helps you detect model performance issues.
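To make the definition concrete, here is a minimal sketch of one common way to quantify drift in a single numeric feature: the two-sample Kolmogorov-Smirnov (KS) statistic between a baseline sample and a target sample. This is a generic statistical technique chosen for illustration, not necessarily the metric Azure Machine Learning computes; the function name `ks_statistic` and the temperature readings are hypothetical.

```python
from __future__ import annotations

from bisect import bisect_right


def ks_statistic(baseline: list[float], target: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of the baseline and target samples.
    0.0 means identical empirical distributions; 1.0 means the two
    samples are completely separated."""
    if not baseline or not target:
        raise ValueError("both samples must be non-empty")
    b = sorted(baseline)
    t = sorted(target)
    # The supremum of |F_b(x) - F_t(x)| is attained at an observed value,
    # so it is enough to evaluate both empirical CDFs at every data point.
    gap = 0.0
    for x in b + t:
        f_b = bisect_right(b, x) / len(b)  # fraction of baseline <= x
        f_t = bisect_right(t, x) / len(t)  # fraction of target <= x
        gap = max(gap, abs(f_b - f_t))
    return gap


# Hypothetical readings for one feature (temperature).
baseline_temps = [20.1, 21.4, 19.8, 22.0, 20.7, 21.1]
same_season = [20.5, 21.0, 19.9, 21.8, 20.9, 21.3]
summer_temps = [27.2, 28.9, 26.5, 29.1, 27.8, 28.4]

print(round(ks_statistic(baseline_temps, same_season), 3))  # → 0.167
print(ks_statistic(baseline_temps, summer_temps))           # → 1.0
```

A small score means the target data still looks like the baseline; a score near 1.0, as with the summer readings, is the kind of shift a drift monitor should surface.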

Some major causes of data drift may include:

- Upstream process changes, such as a replaced sensor that changes the unit of measure being applied, for example from imperial to metric.

- Data quality issues, such as a failed deployment of a patch for a software component, or a failed sensor that always reads 0.

- Natural drift in the data, such as mean temperature changing with the seasons or deprecated interface features no longer being utilized.

- Change in relation between features, or covariate shift.
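Two of the causes above, a replaced sensor that switches units and a failed sensor stuck at 0, are easy to simulate. The sketch below is a hypothetical illustration (the helpers `summarize` and `flag_changes`, the tolerances, and the readings are all made up) of how even simple summary statistics expose these changes; it is not how Azure Machine Learning profiles features.

```python
from __future__ import annotations

from statistics import mean


def summarize(values: list[float]) -> dict[str, float]:
    """Simple per-feature profile: the mean and the fraction of exact zeros."""
    return {
        "mean": mean(values),
        "zero_fraction": sum(1 for v in values if v == 0) / len(values),
    }


def flag_changes(baseline: list[float], target: list[float],
                 mean_tolerance: float, zero_tolerance: float) -> list[str]:
    """Compare the target profile with the baseline profile and name each
    check that moved by more than its tolerance."""
    b, t = summarize(baseline), summarize(target)
    flags = []
    if abs(t["mean"] - b["mean"]) > mean_tolerance:
        flags.append("mean shift")
    if t["zero_fraction"] - b["zero_fraction"] > zero_tolerance:
        flags.append("excess zeros")
    return flags


baseline_f = [68.0, 70.5, 72.1, 69.4, 71.0]                # sensor reporting Fahrenheit
replaced_sensor = [(f - 32) * 5 / 9 for f in baseline_f]   # same readings, now Celsius
failed_sensor = [68.0, 0.0, 0.0, 0.0, 70.2]                # sensor stuck reading 0

print(flag_changes(baseline_f, replaced_sensor, mean_tolerance=5.0, zero_tolerance=0.1))
# → ['mean shift']
print(flag_changes(baseline_f, failed_sensor, mean_tolerance=5.0, zero_tolerance=0.1))
# → ['mean shift', 'excess zeros']
```

Note that the unit change leaves the shape of the data intact and only moves its location, while the failed sensor also changes the share of zeros, which is why a second, targeted check is useful.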

With dataset monitors in Azure Machine Learning studio, your organization can set up alerts that detect data drift, which helps you keep the Machine Learning models in your deployments healthy and accurate.

There are three primary scenarios for setting up dataset monitors in Azure Machine Learning:

| Scenario | Description |
|---|---|
| Monitoring a model's serving data for drift from the model's training data | Results from this scenario can be interpreted as monitoring a proxy for the model's accuracy, given that model accuracy degrades if the serving data drifts from the training data. |
| Monitoring a time series dataset for drift from a previous time period | This scenario is more general, and can be used to monitor datasets involved upstream or downstream of model building. The target dataset must have a timestamp column, while the baseline dataset can be any tabular dataset that has features in common with the target dataset. |
| Performing analysis on past data | This scenario can be used to understand historical data and inform decisions in settings for dataset monitors. |

Your target dataset requires a timestamp column. Once you have defined a baseline dataset, your target dataset (the incoming model input) is compared against it at the interval you specify, which helps your system become more proactive.
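The interval comparison described above can be sketched as follows, assuming the target data arrives as (timestamp, value) pairs for a single numeric feature and that the interval is one ISO calendar week. The function `weekly_drift` and its standardized-mean-difference score are hypothetical illustrations, not the Azure Machine Learning implementation.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date
from statistics import mean, stdev


def weekly_drift(baseline: list[float],
                 target: list[tuple[date, float]]) -> dict[tuple[int, int], float]:
    """Bucket timestamped target values by ISO (year, week) and score each
    bucket against the baseline with a standardized mean difference:
    |mean(bucket) - mean(baseline)| / stdev(baseline)."""
    base_mean = mean(baseline)
    base_sd = stdev(baseline)  # sample standard deviation; needs >= 2 values
    if base_sd == 0:
        raise ValueError("baseline has zero spread; score is undefined")
    buckets: dict[tuple[int, int], list[float]] = defaultdict(list)
    for ts, value in target:
        iso = ts.isocalendar()  # (ISO year, ISO week, ISO weekday)
        buckets[(iso[0], iso[1])].append(value)
    # Sorted so the intervals come back in chronological order.
    return {week: abs(mean(vals) - base_mean) / base_sd
            for week, vals in sorted(buckets.items())}


baseline = [20.0, 22.0, 21.0, 19.0, 23.0]  # hypothetical baseline readings
target = [
    (date(2020, 3, 2), 21.5), (date(2020, 3, 4), 20.5),   # ISO week 10
    (date(2020, 3, 9), 26.0), (date(2020, 3, 11), 27.0),  # ISO week 11
]
for week, score in weekly_drift(baseline, target).items():
    print(week, round(score, 2))
# → (2020, 10) 0.0
# → (2020, 11) 3.48
```

Scoring each interval separately is what lets a monitor point to when drift started rather than only reporting that the data has changed overall.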

Using the azureml SDK, you would run the following code to set up your Azure Machine Learning workspace, your originating Datastore, and the Dataset you wish to monitor.

Once this is established, you can build a dataset monitor that detects drift using the delta range you provide.

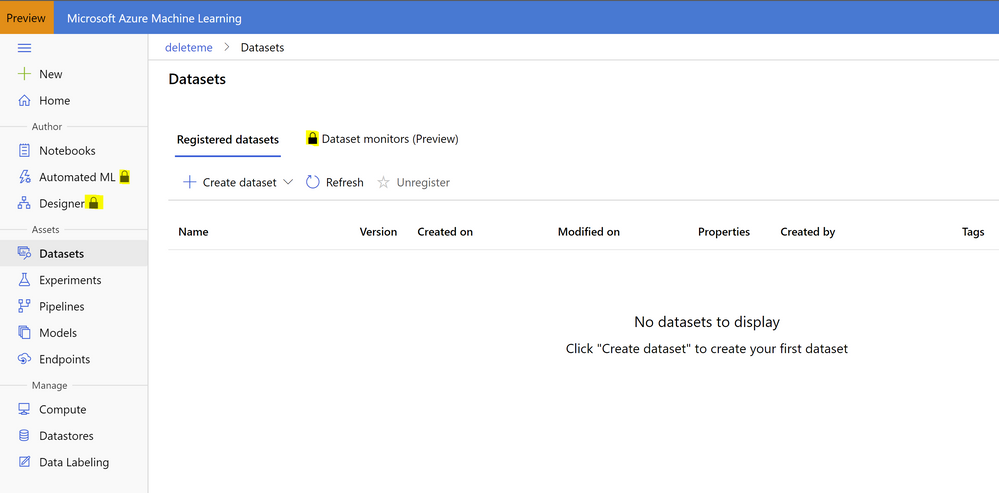

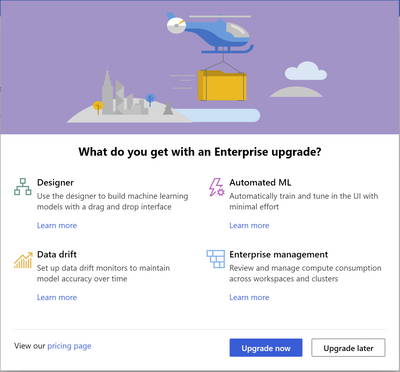
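The threshold-and-alert behavior of a dataset monitor can be sketched in plain Python, independent of Azure. In this sketch the threshold plays the role of the delta range mentioned above; the `DriftMonitor` class, its fields, the interval labels, and the scores are all hypothetical and are not part of the azureml SDK.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DriftMonitor:
    """Hypothetical monitor: receives one drift score per interval and
    records an alert whenever a score exceeds the configured threshold."""
    name: str
    threshold: float
    alerts: list[str] = field(default_factory=list)

    def observe(self, interval: str, score: float) -> bool:
        """Record a drift score; return True (and store an alert message)
        if it breaches the threshold, otherwise return False."""
        if score > self.threshold:
            self.alerts.append(
                f"[{self.name}] drift {score:.2f} exceeds {self.threshold:.2f} in {interval}"
            )
            return True
        return False


monitor = DriftMonitor(name="temperature-monitor", threshold=0.3)
for interval, score in [("2020-W10", 0.12), ("2020-W11", 0.45), ("2020-W12", 0.28)]:
    monitor.observe(interval, score)
print(monitor.alerts)
# → ['[temperature-monitor] drift 0.45 exceeds 0.30 in 2020-W11']
```

In a real deployment the alert would be routed to email or another notification channel; here it is only collected in a list to keep the example self-contained.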

Of course, you can also do all of this through Azure ML Studio (ml.azure.com) if your workspace has the Enterprise features enabled. Oops, there are locks beside them!

Not to worry: if you see a lock beside the features, just click Automated ML or Designer in the left-hand navigation pane. An upgrade modal appears, and the upgrade literally takes just a few seconds.

In the next article, we'll set up a new dataset and make sure we are collecting and monitoring it for data drift.