This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

The Azure Percept is a Microsoft Developer Kit designed to fast-track the development of AI (Artificial Intelligence) applications at the Edge.

Percept Specifications

At a high level, the Percept Developer Kit has the following specifications:

Carrier (Processor) Board:

- NXP iMX8m processor

- Trusted Platform Module (TPM) version 2.0

- Wi-Fi and Bluetooth connectivity

Vision SoM:

- Intel Movidius Myriad X (MA2085) vision processing unit (VPU)

- RGB camera sensor

Audio SoM:

- Four-microphone linear array and audio processing via XMOS Codec

- 2x buttons, 3x LEDs, Micro USB, and 3.5 mm audio jack

Percept Target Verticals

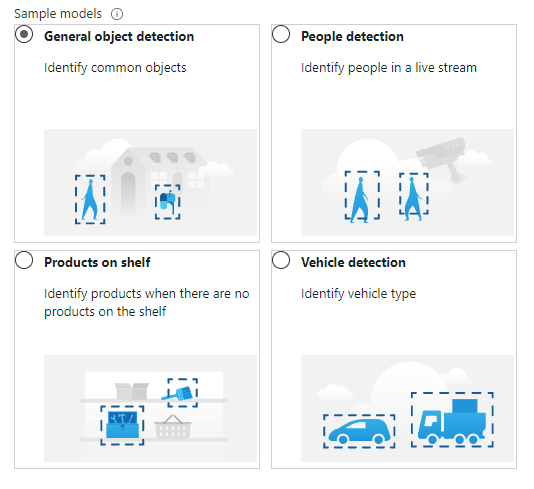

The Percept is a Developer Kit with a variety of target industries in mind. As such, Microsoft have created some pre-trained AI models aimed at those markets.

These models can be deployed to the Percept to quickly configure it to recognise objects in a range of environments, such as:

- General Object Detection

- Items on a Shelf

- Vehicle Analytics

- Keyword and Command recognition

- Anomaly Detection

Azure Percept Studio

Microsoft provide a suite of software to interact with the Percept, centred around Azure Percept Studio, an Azure-based dashboard for the Percept.

Azure Percept Studio is broken down into several main sections:

Overview:

This section gives us an overview of Percept Studio, including:

- A Getting Started Guide

- Demos & Tutorials

- Sample Applications

- Access to some Advanced Tools including Cloud and Local Development Environments as well as setup and samples for AI Security.

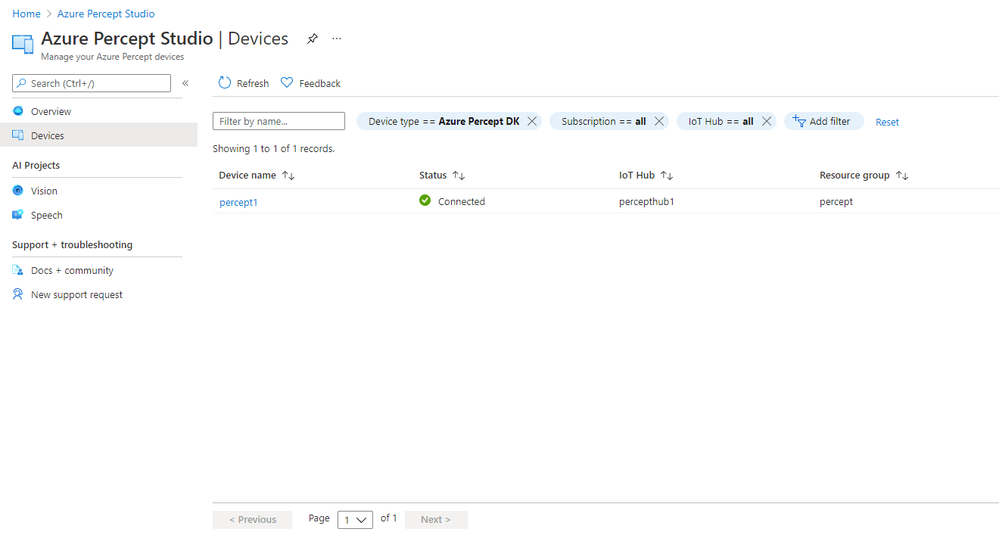

Devices:

The Devices Page gives us access to the Percept Devices we’ve registered to the solution’s IoT Hub.

We’re able to click into each registered device for information about its operations:

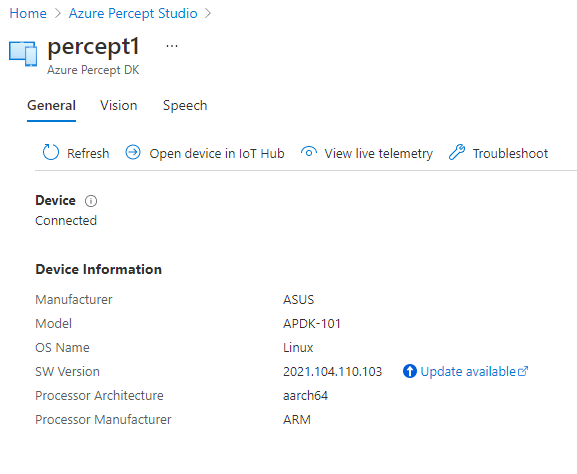

This area is broken down into:

- A General page with information about the Device Specs and Software Version

- Pages with Software Information for the Vision and Speech Modules deployed to the device, as well as links to Capture images, View the Vision Video Stream, Deploy Models and so on

- A link to open the Device directly in Azure IoT Hub

- A view of the Live Telemetry from the Percept

- Links to help if we need to Troubleshoot the Percept
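To give a feel for what can be done with the Live Telemetry once it leaves the device, here is a minimal Python sketch that filters detections by confidence. The payload shape is an assumption for illustration only: the `NEURAL_NETWORK` key, the `label`, `confidence` and `bbox` fields, the sample values and the `confident_detections` helper are all hypothetical, so check them against the messages your own device actually sends.

```python
import json

# Hypothetical sample message. The "NEURAL_NETWORK" key and the field names
# are assumptions about the payload shape, not something stated in this post.
SAMPLE_MESSAGE = """
{"NEURAL_NETWORK": [
  {"label": "person", "confidence": "0.91", "bbox": [0.10, 0.20, 0.45, 0.90]},
  {"label": "chair", "confidence": "0.42", "bbox": [0.55, 0.50, 0.80, 0.95]}
]}
"""


def confident_detections(message, threshold=0.5):
    """Return (label, confidence) pairs at or above the given threshold."""
    payload = json.loads(message)
    results = []
    # A message with no detections list simply yields an empty result.
    for detection in payload.get("NEURAL_NETWORK", []):
        # Confidence is assumed to arrive as a string, so convert it first.
        confidence = float(detection["confidence"])
        if confidence >= threshold:
            results.append((detection["label"], confidence))
    return results


if __name__ == "__main__":
    print(confident_detections(SAMPLE_MESSAGE))
```

Lowering the `threshold` argument lets weaker detections through, which can be handy when first testing a model.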

Vision:

The Vision Page allows us to create new Azure Custom Vision Projects as well as access any existing projects we’ve already created.

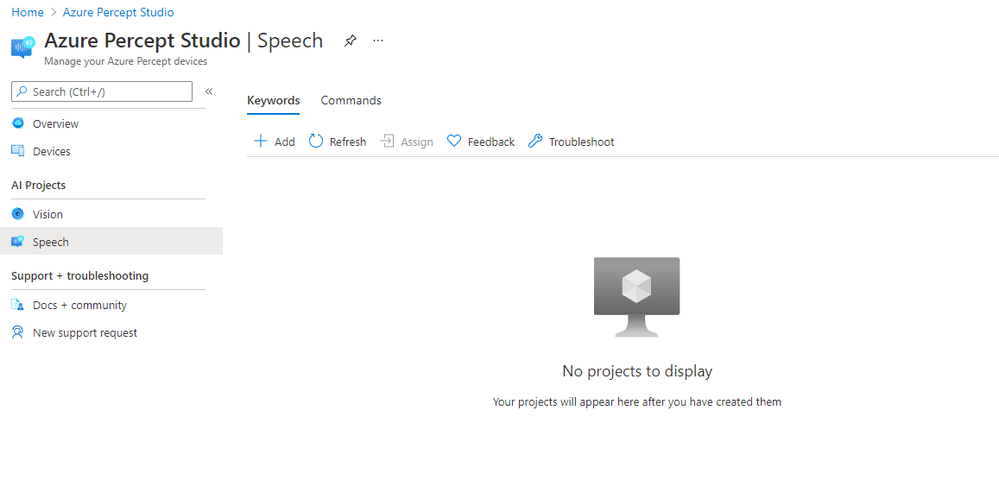

Speech:

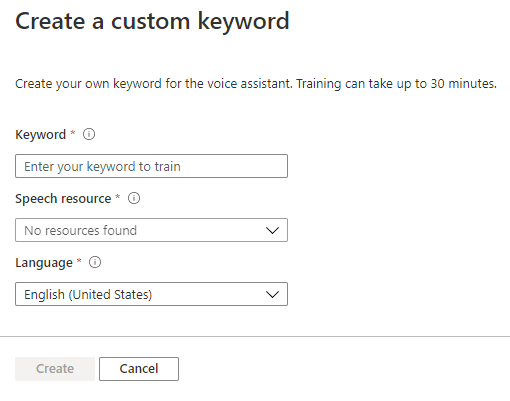

The Speech page lets us train Custom Keywords, which allow the device to be voice activated.

We can also create Custom Commands, which initiate an action we configure.

Percept Speech relies on various Azure Services including LUIS (Language Understanding Intelligent Service) and Azure Speech.

Other Resources

- Cliff Agius has created an excellent blog post about his first impressions of the Azure Percept Developer Kit. You can find that here

- Cliff Agius, Mert Yeter, John Lunn and I recorded a Percept Special IoTeaLive show covering the unboxing and first steps on the AzureishLive Twitch Channel.

- Official Azure Percept Page

- Azure Percept MS Docs

- The Azure Percept YouTube Channel has some fantastic videos about the Percept

- Olivier Bloch from the Microsoft IoT Team has hosted some great Azure Percept episodes of the IoT Show