This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Mithun Prasad, PhD, Senior Data Scientist at Microsoft

“I don’t have enough relevant data for my project!” Nearly every data scientist has uttered this sentence at least once. Developing robust machine learning models typically requires a large amount of high-quality data. Obtaining such data, and especially labelled or annotated data, can be time-consuming, tedious and expensive if we have to rely on experts. Hence, there is a compelling need to generate data for modelling in an automated or semi-automated way. In this work, we explore how OpenAI’s Generative Pre-trained Transformer 3 (GPT-3) can be used to generate data for building models that assess how credible news articles are.

GPT-3

GPT-3 is a language model that uses deep learning to generate human-like text. OpenAI introduced it in May 2020 as the successor to its previous language model (LM), GPT-2, and it is considered a substantial improvement over that model. With around 175 billion trainable parameters, compared with about 1.5 billion for the largest GPT-2, the full version of GPT-3 is one of the largest models trained so far.

Fake News Generation

In this blog post, we discuss collaborative work between Microsoft's ACE team and the Dataclair AI lab of O2 Czech Republic, where the goal is to identify fake news. Fake news is defined as a made-up story written with the intention to deceive or mislead. The usual motives for spreading such news are to mislead readers, to damage the reputation of an entity, or to gain from sensationalism. Creating a dataset for identifying credible news requires skilled annotators. Moreover, comparing proposed news articles with the original news articles is itself a daunting task, as it is highly subjective and opinionated. This is where recent advances in natural language modelling and text generation can come to the rescue. We explore how new language models such as GPT-3 can help by generating new data.

We generate fake news data using GPT-3 by providing prompts that contain a few sample fake news articles in the Czech language. Doing something like this would have been unthinkable a few years ago, but the massive advances in text generation through language models have opened the door to such experiments. As the research paper describing GPT-3 shows, GPT-3 is very good at generating high-quality news articles that human readers have difficulty identifying as computer-generated:

Source: https://arxiv.org/pdf/2005.14165.pdf

The plot also shows how text-generating models improved as they gained more parameters. GPT-3 is furthest to the right, and the plot shows how accurately people were able to distinguish generated articles from those written by humans.

Prompt Engineering

“Prompts” are a way to get the model to produce text by specifying an instruction in natural language and showing some demonstrations of how to follow the instruction well. GPT-3 has an incredible capacity to mimic writing styles. When the prompt is set up correctly, GPT-3 adheres to the example just enough to copy its underlying elements (for example, whether it includes or excludes citations) and introduces a new twist in the generated text. It is even capable of creating its own complex arguments. Thus, the output is not just a replication of pre-existing data, but a set of new and original articles from which a downstream model can learn.
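The structure described above (an instruction followed by demonstrations and an open slot for the model to fill) can be sketched in a few lines of Python. This is only an illustration: the function name `build_prompt` and the exact `Headline:` / `Text:` layout are assumptions modelled on the example in this post, not the team's actual tooling.

```python
from typing import Sequence, Tuple

# Instruction wording taken from the example prompt in this post.
INSTRUCTION = (
    "Generate a news article based on the headline and with the same "
    "style of writing as given in the example."
)


def build_prompt(
    examples: Sequence[Tuple[str, str]],
    new_headline: str,
    instruction: str = INSTRUCTION,
) -> str:
    """Assemble a few-shot prompt (hypothetical helper).

    `examples` holds (headline, article text) pairs that demonstrate the
    desired style; the prompt ends with an empty "Text:" slot so the model
    continues by writing the article for `new_headline`.
    """
    parts = [instruction, ""]
    for headline, text in examples:
        parts.append(f"Headline: {headline}")
        parts.append(f"Text: {text}")
        parts.append("")  # blank line between demonstrations
    parts.append(f"Headline: {new_headline}")
    parts.append("Text:")
    return "\n".join(parts)


if __name__ == "__main__":
    demo = build_prompt(
        examples=[("Sample headline", "Sample article body in the target style.")],
        new_headline="A new headline to write about",
    )
    print(demo)
```

Ending the prompt on an open `Text:` line is a common way to signal where the completion should begin; other layouts may work equally well and are worth experimenting with.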

An example of a prompt and the parameters used to generate fake news is shown below. The instruction and the headline form the prompt; the passage after “Text:” is the text generated by GPT-3.

Generate a news article based on the headline and with the same style of writing as given in the example.

Headline: Where do leftist extremists get the audacity to speak for the nation?

Text:

My fellow Czechs, we must shake off the shame that the USA, Brussels and other countries have been forced on us with the help of our own "experts" and "journalists". The same people who are now digging into our nation with the help of a genuine community of the USA and Brussels - the Pekarová and other forces...

Parameters:

Temperature: 0.7, Max tokens: 1000, Top p: 1, Frequency penalty: 0, Presence penalty: 0

Of these parameters, the most important are temperature and max tokens. Temperature controls the randomness of the generated text: lower values make the output more deterministic, and higher values make it more varied. A temperature of 0.7 was chosen to produce less deterministic results that still follow the structure and writing style of the example. The max tokens value was set to 1000 tokens (~4000 characters) because this is roughly the average length of a news article. It should be noted that, when working with GPT-3, finding the right parameters is a matter of experimentation.
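To make the effect of temperature more concrete, the sketch below applies the standard temperature-scaled softmax to a toy set of scores. It assumes that sampling temperature works in the usual way (scores are divided by the temperature before being turned into probabilities); the toy scores, the `GENERATION_PARAMS` dictionary and the function name are illustrative and are not taken from the GPT-3 API.

```python
import math
from typing import Dict, List

# Settings quoted in this post, collected in one place (the key names are an assumption).
GENERATION_PARAMS: Dict[str, float] = {
    "temperature": 0.7,
    "max_tokens": 1000,
    "top_p": 1,
    "frequency_penalty": 0,
    "presence_penalty": 0,
}


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
    for t in (0.2, GENERATION_PARAMS["temperature"], 1.5):
        probs = softmax_with_temperature(toy_logits, t)
        print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

At a low temperature almost all probability mass sits on the highest-scoring token, while higher temperatures spread it across the alternatives, which is why a mid-range value such as 0.7 gives varied text that still stays close to the example.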

Admittedly, there are still challenges to deal with. One of them is the need to manually inspect whether GPT-3 returns articles that are relevant and in the right credibility category. Because article credibility is a sensitive topic, data quality checks will need to be implemented. Another, more minor limitation is that while GPT-3 handles topics covered in its training data well, it has problems with newer topics. For example, it is unable to fully grasp the significance of COVID-19 and usually avoids writing about it, because it does not have enough knowledge about the global pandemic. Thus, it generates less realistic articles when faced with such a topic.
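Some of the quality checks mentioned above could be automated before a human reviewer sees the articles. The sketch below is one possible pre-filter, not the checks used in this project: the `Generated` record, the `prefilter` function and the 200-character threshold are all hypothetical. It only drops outputs that are too short or exact duplicates; relevance and credibility category still need manual inspection.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Generated:
    """One generated article (hypothetical record layout)."""
    headline: str
    text: str
    intended_label: str  # credibility category the prompt was aiming for


def prefilter(articles: Iterable[Generated], min_chars: int = 200) -> List[Generated]:
    """Drop obviously unusable outputs; everything kept still goes to a reviewer."""
    seen = set()
    kept: List[Generated] = []
    for article in articles:
        body = " ".join(article.text.split())  # normalise whitespace
        if len(body) < min_chars:
            continue  # truncated or empty generation
        key = body.lower()
        if key in seen:
            continue  # same text (ignoring case and spacing) was already kept
        seen.add(key)
        kept.append(article)
    return kept


if __name__ == "__main__":
    batch = [
        Generated("A", "too short", "fake"),
        Generated("B", "some sentence " * 30, "fake"),
        Generated("C", "Some  sentence " * 30, "fake"),  # duplicate of B
    ]
    print([a.headline for a in prefilter(batch)])
```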

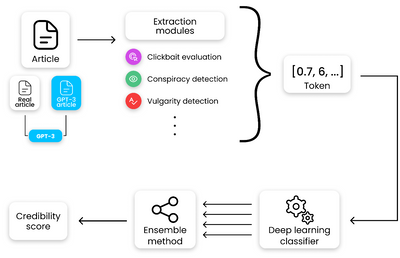

Nevertheless, if those obstacles are kept in mind, GPT-3 can help make dataset creation faster and more reliable. This is something that the O2 CZ team plans to utilize for their disinformation recognition AI model. “Our model extracts different features (aggressivity, clickbait etc.) from the article via smaller extraction modules. Those features are then evaluated by the deep learning classification module and subsequently transformed into one number by the ensemble method. For the system to work, we need as many articles as possible for training the classification module, which we hope to obtain with the help of GPT-3,” says Filip Trhlik, a Data Scientist at the Dataclair AI lab.

Disinformation recognition AI model diagram
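The flow described in the quote (feature extraction, classification, then an ensemble that yields one number) can be illustrated with a toy pipeline. Everything in this sketch is a stand-in chosen for illustration: the hand-written heuristics replace the real extraction modules, the two simple scoring functions replace the deep learning classification module, and plain averaging is only one possible ensemble method. It shows the shape of the data flow, not the O2 CZ team's implementation.

```python
from statistics import mean
from typing import Callable, Dict, List

FeatureExtractor = Callable[[str], float]
Classifier = Callable[[Dict[str, float]], float]


def clickbait_score(text: str) -> float:
    """Toy extractor: more '!' and '?' characters means a higher score (capped at 1)."""
    marks = sum(text.count(c) for c in "!?")
    return min(1.0, marks / 5)


def aggressivity_score(text: str) -> float:
    """Toy extractor: share of fully upper-case words, scaled and capped at 1."""
    words = text.split()
    if not words:
        return 0.0
    shouted = sum(1 for w in words if len(w) > 2 and w.isupper())
    return min(1.0, 5 * shouted / len(words))


EXTRACTORS: Dict[str, FeatureExtractor] = {
    "clickbait": clickbait_score,
    "aggressivity": aggressivity_score,
}

# Stand-ins for trained classifiers: each maps the feature dict to a score in [0, 1].
CLASSIFIERS: List[Classifier] = [
    lambda f: 0.5 * f["clickbait"] + 0.5 * f["aggressivity"],
    lambda f: max(f.values()),
]


def disinformation_score(text: str) -> float:
    """Extract features, score them with each classifier, and average into one number."""
    features = {name: extract(text) for name, extract in EXTRACTORS.items()}
    return mean(clf(features) for clf in CLASSIFIERS)


if __name__ == "__main__":
    print(disinformation_score("The city council approved the budget on Monday."))
    print(disinformation_score("SHOCKING! You WON'T believe THIS!!! Why?"))
```

In a real system, each stand-in would be replaced by a trained component, and the classification module would be the part that benefits from the additional training articles generated with GPT-3.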

In conclusion, artificially generating new data is a very exciting use case for language models. Even though the generated data requires a small amount of manual inspection, it is very beneficial for downstream modelling tasks. The ability to generate a large amount of synthetic data in a short time is very promising.