This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

The 1.4.2 release of MSTICPy includes three major features/updates:

- Support for Azure sovereign clouds for Azure Sentinel, Key Vault, Azure APIs, Azure Resource Graph and Azure Sentinel APIs

- A new visualization — the Matrix plot

- A significant update to the Process Tree visualization, allowing you to use process data from Microsoft Defender for Endpoint as well as generic process data from other sources.

We have also consolidated our visualizations into a single pandas accessor to make them easier to invoke from any DataFrame.

Important Note:

If you’ve installed release 1.4.0 or 1.4.1 of MSTICPy, please upgrade to v1.4.2 — a lot of the functionality described below didn’t make it into the 1.4.0 release due to a publisher (i.e. me) error!

Azure sovereign cloud support

What is an Azure sovereign cloud? Unless you are using one, you may not know. Most Azure customers use the Azure global cloud, which includes the public portal and Azure APIs typically located in the .azure.com domain namespace. However, there is a set of independent clouds with their own authentication, storage and other infrastructure. These may be used where there is a strict data residency requirement, such as Germany's. Currently supported Azure clouds are:

- global — the Azure public cloud

- cn — China

- de — Germany

- usgov — US Government

I wasn’t able to find a single document that describes Azure sovereign clouds but this overview of the US Government cloud will give you a reasonable understanding.

For systems accessing a sovereign cloud it’s critical that they use a consistent set of endpoints to authenticate and access resources belonging to that cloud.

The updates to MSTICPy allow you to specify the cloud that you’re using in your msticpyconfig.yaml file. Once this is done, all of the Azure components used by MSTICPy will select the correct endpoints for authentication, resource management and API use.
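To make the idea of a "consistent set of endpoints" concrete, here is a minimal sketch of a per-cloud endpoint lookup. It is not MSTICPy's implementation: the CLOUD_ENDPOINTS table and the endpoints_for helper are hypothetical names used only for illustration, and only the logon authority and Resource Manager hosts are shown.

```python
# Illustrative only: a hypothetical lookup table, not MSTICPy's internal code.
# Keys are the cloud names used in this article.
CLOUD_ENDPOINTS = {
    "global": {
        "authority": "https://login.microsoftonline.com",
        "resource_manager": "https://management.azure.com",
    },
    "cn": {
        "authority": "https://login.chinacloudapi.cn",
        "resource_manager": "https://management.chinacloudapi.cn",
    },
    "de": {
        "authority": "https://login.microsoftonline.de",
        "resource_manager": "https://management.microsoftazure.de",
    },
    "usgov": {
        "authority": "https://login.microsoftonline.us",
        "resource_manager": "https://management.usgovcloudapi.net",
    },
}


def endpoints_for(cloud: str = "global") -> dict:
    """Return the endpoint set for a cloud name, rejecting unknown names."""
    try:
        return CLOUD_ENDPOINTS[cloud]
    except KeyError:
        valid = ", ".join(sorted(CLOUD_ENDPOINTS))
        raise ValueError(f"Unknown cloud '{cloud}'; expected one of: {valid}") from None


# Every component asks the same table, so authentication and resource
# access always target the same cloud.
print(endpoints_for("usgov")["authority"])
```

The point of the sketch is that the cloud name is chosen once and every component derives its endpoints from it, rather than each component hard-coding a global-cloud URL.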

To set the correct cloud for your organization, run the MpConfigEdit configuration editor and select the Azure tab.

from msticpy import MpConfigEdit

mp_conf = MpConfigEdit()

mp_conf

Azure configuration in MSTICPy Configuration editor

The top half of the tab lets you select from the global, China (cn), Germany (de) and US government (usgov) clouds. The lower half lets you select default authentication methods for Azure authentication (see below).

After you save the settings (you need to hit the Save button to confirm your choices, then the Save Settings button to write the settings to your configuration file), you can reload the settings and start using them.

import msticpy

msticpy.settings.refresh_config()

Unfortunately, there isn’t anything very spectacular to see with this feature — other than for people using sovereign clouds (in which case, the MSTICPy Azure functions will begin working as they do for global cloud users).

The MSTICPy components affected by this are:

- Azure Sentinel data provider (and the underlying Kqlmagic library)

- Azure Key Vault for secret storage

- Azure Data provider

- Azure Sentinel APIs

- Azure Resource Graph provider

Azure default authentication methods

In the same Azure settings tab, you can also specify the default authentication methods that you want to use. MSTICPy uses ChainedCredential authentication, allowing a sequence of different authentication methods to be tried in turn. The available methods are:

- env: Use credentials set in environment variables

- cli: Use credentials available in a local Azure CLI logon

- msi: Use the Managed Service Identity (MSI) credentials of the machine you are running the notebook kernel on

- interactive: Use an interactive browser logon

You can select one or more of these. This gives you more flexibility when signing in. For example, if you have cli and interactive enabled, MSTICPy will first try to obtain an access token from an existing Azure CLI session and, if there is none, will fall back to an interactive browser logon.
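The fallback behaviour can be sketched in a few lines of plain Python. This is only an illustration of the "try each method in turn" idea, not the Azure SDK or MSTICPy code: the CredentialUnavailable exception, the get_token function and the two stand-in providers are all hypothetical.

```python
from typing import Callable, Dict, List


class CredentialUnavailable(Exception):
    """Raised by a method that cannot supply a token in this environment."""


def get_token(methods: List[str], providers: Dict[str, Callable[[], str]]) -> str:
    """Try each configured method in order and return the first token obtained."""
    errors = []
    for name in methods:
        try:
            return providers[name]()
        except CredentialUnavailable as err:
            # Remember why this method failed and move on to the next one.
            errors.append(f"{name}: {err}")
    raise CredentialUnavailable("; ".join(errors) or "no methods configured")


# Stand-in providers (hypothetical). Here no CLI session exists, so "cli" fails.
def cli_token() -> str:
    raise CredentialUnavailable("no Azure CLI session (run az login)")


def interactive_token() -> str:
    return "token-from-browser-logon"


providers = {"cli": cli_token, "interactive": interactive_token}

# "cli" is tried first, fails, and the chain falls back to "interactive".
print(get_token(["cli", "interactive"], providers))
```

The order of the list matters: putting cli first means the interactive prompt only appears when no cached CLI session is available.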

The first three methods refer to credentials available on the Jupyter server (e.g., an Azure ML Compute) and not necessarily on the machine on which your browser is running. For example, if you want to use Azure CLI credentials you must run az login on the Jupyter server, not on the machine you are browsing from.

Note: MSI authentication is not currently supported on AML compute.

Using Azure CLI as your default login method

As a side note to this, using an Azure CLI logon gives you many benefits, particularly when running multiple notebooks. Rather than requiring you to authenticate for each notebook, the ChainedCredential flow will try to obtain an access token via the CLI session, giving you an effective single sign-on mechanism.

Due to its ability to cache credentials, we strongly recommend using Azure CLI logon. This allows all MSTICPy Azure functions to try to obtain current credentials from Azure CLI rather than initiate a new interactive authentication. This is especially helpful when using multiple Azure components or when running multiple notebooks. We recommend selecting cli and interactive for most cases.

To log in using Azure CLI from a notebook enter the following in a cell and run it:

!az login

You can read more about the Azure cloud and Azure authentication settings in the MSTICPy documentation. If you have any requirements for cloud support not listed here please file an issue on our GitHub repo.

Matrix Plot

This is a new visualization for MSTICPy. It uses the Bokeh plotting library, which brings with it all of the interactivity common to our other visualizations.

We are indebted to Myriam and her CatScatter article on Towards Data Science for the inspiration for this visualization.

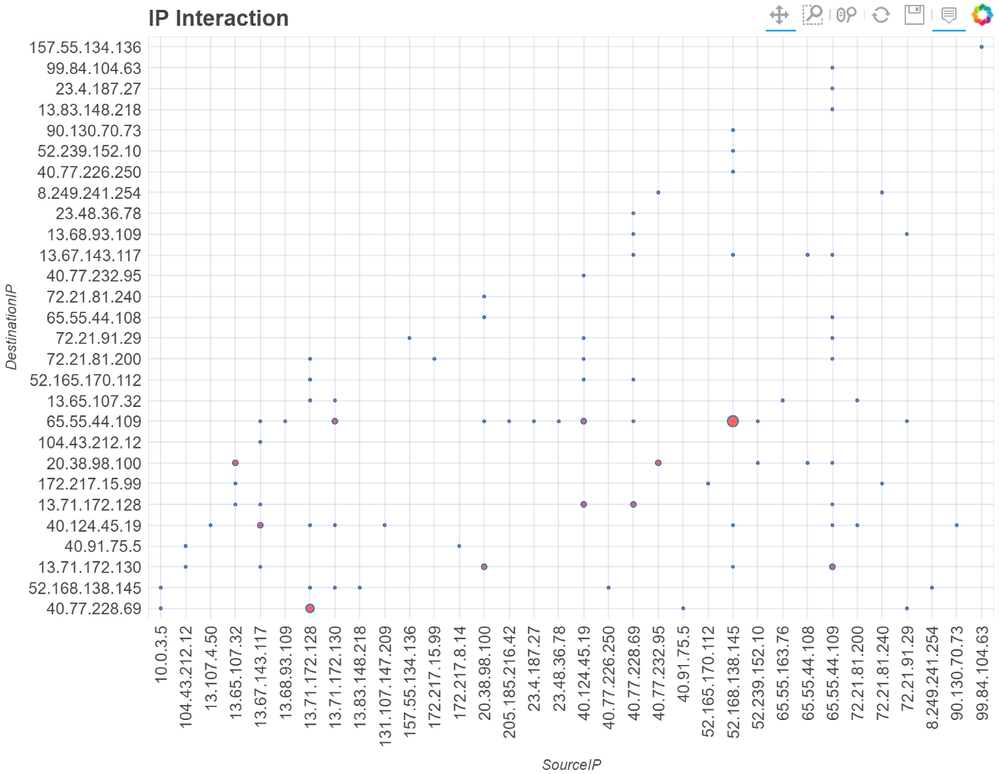

The Matrix plot is designed to be used where you want to see interactions between two sets of data — either to see whether there was any interaction at all, or get a sense of how much (or how little) interaction there was.

A canonical example would be to view connections between sets of IP addresses. This is shown in the following screenshot, where the size of each circle at the grid intersections is proportional to the number of connections recorded.

Matrix plot of communications between source and destination IP Addresses

The syntax for creating a matrix plot is straightforward. Once you’ve loaded MSTICPy you can plot directly from a pandas DataFrame. You need to specify the “x” parameter (the horizontal axis) and the “y” parameter (vertical axis).

net_df.mp_plot.matrix(

x="SourceIP",

y="DestinationIP",

title="IP Interaction"

)

By default, the circle size at the intersection of the x and y values is the number of interactions (i.e., the number of rows in the source data where the distinct x and y pairs appear).
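As a rough illustration of what "number of interactions" means, the following sketch counts rows per distinct (x, y) pair using only the standard library. The sample rows are made up and stand in for net_df; this is not the code MSTICPy uses internally.

```python
from collections import Counter

# Hypothetical sample rows standing in for net_df.
rows = [
    {"SourceIP": "10.0.0.1", "DestinationIP": "10.0.0.9"},
    {"SourceIP": "10.0.0.1", "DestinationIP": "10.0.0.9"},
    {"SourceIP": "10.0.0.1", "DestinationIP": "10.0.0.7"},
    {"SourceIP": "10.0.0.2", "DestinationIP": "10.0.0.9"},
]

# One counter entry per distinct (x, y) pair; the count is the number of rows
# in which that pair appears, which drives the default circle size.
pair_counts = Counter((row["SourceIP"], row["DestinationIP"]) for row in rows)

for (src, dst), count in sorted(pair_counts.items()):
    print(f"{src} -> {dst}: {count}")
```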

There are several variations of the basic plot:

- Use a column in the input DataFrame to size the intersection circle, rather than a raw count (using the value_col=column_name parameter). The column must be a numeric value (an integer or float), for example BytesTransmitted.

- Scale the sizing (using log_size=True). This is useful to “flatten” the variations between different count values where these values are skewed.

- Invert the sizing (using invert=True). This plots the inverse of the interaction point value. It is particularly useful to highlight rare, rather than common interactions.

- Plot circle size using a count of distinct values for a named column (using dist_count=column_name). The column_name column can be of any data type.

- Ignore sizing and plot a fixed-sized circle for any interaction between the x and y pairs. This is useful to be able to quickly see all interactions, because the default scaled circle size can make some interaction points very small and difficult to see.
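The sizing options listed above can be pictured as two steps: reduce each (x, y) group to a number, then optionally transform that number. The sketch below is one plausible reading, not MSTICPy's implementation. The intersection_values and size_for functions are hypothetical, and the exact formulas (summing value_col, using 1/value for invert and log(1 + value) for log_size) are assumptions.

```python
import math
from collections import defaultdict

# Hypothetical sample rows; Protocol is an invented column for the example.
rows = [
    {"SourceIP": "10.0.0.1", "DestinationIP": "10.0.0.9", "BytesTransmitted": 900, "Protocol": "tcp"},
    {"SourceIP": "10.0.0.1", "DestinationIP": "10.0.0.9", "BytesTransmitted": 100, "Protocol": "udp"},
    {"SourceIP": "10.0.0.2", "DestinationIP": "10.0.0.9", "BytesTransmitted": 10, "Protocol": "tcp"},
]


def intersection_values(rows, x, y, value_col=None, dist_count=None):
    """Group rows by (x, y) and reduce each group to one number."""
    groups = defaultdict(list)
    for row in rows:
        groups[(row[x], row[y])].append(row)
    if value_col:  # assumed: sum the numeric column per pair
        return {pair: sum(r[value_col] for r in grp) for pair, grp in groups.items()}
    if dist_count:  # count distinct values of the named column per pair
        return {pair: len({r[dist_count] for r in grp}) for pair, grp in groups.items()}
    return {pair: len(grp) for pair, grp in groups.items()}  # default: row count


def size_for(value, log_size=False, invert=False):
    """Turn an intersection value into a relative circle size (assumed formulas)."""
    if invert:
        value = 1 / value  # rare pairs get the largest circles
    if log_size:
        value = math.log1p(value)  # compress large differences between values
    return value


byte_totals = intersection_values(rows, "SourceIP", "DestinationIP", value_col="BytesTransmitted")
for pair, total in sorted(byte_totals.items()):
    print(pair, total, round(size_for(total, log_size=True), 2))
```

Note how log scaling narrows the gap between the two pairs, which is the "flattening" effect described in the list.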

The x and y columns don’t have to be interacting entities such as IP addresses or hosts. Either axis can be an arbitrary column from the source DataFrame. For example, you could plot Account on the y axis and ResourceIdentifier on the x axis to show how often a particular account accesses a resource.

There are a few more options controlling font sizes, title and axis sorting. You can read more in our online documentation describing the Matrix plot in detail.

Process Tree Visualization

The process tree visualization has been in MSTICPy for a while but it was closely bound to the Azure Sentinel data schema. We’ve reworked the process tree plotting and support libraries to be data source agnostic. We’ve also built specific support for MS Defender for Endpoint (MDE) process logs.

Making the process tree schema-agnostic

We’ve removed hard-coded references to columns such as TenantId and TimeGenerated. For your data source, you need to create a dictionary that maps the following generic property names (InternalName column) to the columns in your data (DataSourceName). You can also use the ProcSchema class to define your column mapping.

The example below shows the mapping for Linux auditd data read from Azure Sentinel.

| InternalName | Description | DataSourceName | Required |
|---|---|---|---|
| process_name | Name/path of the created process | exe | Yes |
| process_id | Process ID | pid | Yes |
| parent_id | Parent process ID | ppid | Yes |
| logon_id | Logon/session ID | ses | No |
| cmd_line | Process command line | cmdline | Yes |
| user_name | Process account name | acct | Yes |
| path_separator | "\\" or "/" | "/" | No |
| host_name_column | Host running process | Computer | Yes |
| time_stamp | Process create time | TimeGenerated | Yes |
| parent_name | Parent name/path | | No |
| target_logon_id | Effective logon/session ID | | No |
| user_id | ID/SID of account | uid | No |
| event_id_column | Column in input data that identifies the event type (only needed if mixed events in data) | EventType | No |
| event_id_identifier | The value of event_id_column to use to filter only required events | SYSCALL_EXECVE | No |

And here is an example of how to define a schema for your input data.

from msticpy.sectools.proc_tree_builder import ProcSchema

my_schema = ProcSchema(

time_stamp="TimeGenerated",

process_name="exe",

process_id="pid",

...

path_separator="/",

user_id="uid",

host_name_column="Computer",

)

lx_proc_df.mp_plot.process_tree(schema=my_schema)
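For comparison, here is the Linux auditd mapping from the table written out as a plain dictionary, together with a small check that the required properties point at columns that actually exist in your data. The REQUIRED set is taken from the "Required" column of the table; the missing_required helper is hypothetical and not part of MSTICPy.

```python
# Mapping from the table above (Linux auditd data read from Azure Sentinel).
auditd_schema = {
    "process_name": "exe",
    "process_id": "pid",
    "parent_id": "ppid",
    "logon_id": "ses",
    "cmd_line": "cmdline",
    "user_name": "acct",
    "path_separator": "/",
    "host_name_column": "Computer",
    "time_stamp": "TimeGenerated",
    "user_id": "uid",
    "event_id_column": "EventType",
    "event_id_identifier": "SYSCALL_EXECVE",
}

# Properties marked "Yes" in the Required column of the table.
REQUIRED = {
    "process_name",
    "process_id",
    "parent_id",
    "cmd_line",
    "user_name",
    "host_name_column",
    "time_stamp",
}


def missing_required(schema, columns):
    """Hypothetical helper: required properties that are unmapped or whose
    mapped column is absent from the data."""
    return sorted(name for name in REQUIRED if schema.get(name) not in columns)


# Column names as they might appear in the input data (illustrative).
data_columns = {"exe", "pid", "ppid", "cmdline", "acct", "Computer", "TimeGenerated"}
print(missing_required(auditd_schema, data_columns))
```

Running a check like this before plotting makes a mistyped column name show up as a clear list of missing properties rather than as an error deep inside the tree builder.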

Using MDE process data

MDE process data contains groups of data for each process row:

- CreatedProcess attributes

- InitiatingProcess attributes (the parent of the CreatedProcess)

- InitiatingProcessParent attributes (the grandparent of the CreatedProcess)

The MSTICPy process tree builder flattens this structure into a single process per row (with attributes) and adds a key that links the process to its parent process. In some cases, rows for the parent and grandparent processes are already in the input data set. Where these are missing, MSTICPy will infer these records from the InitiatingProcess and InitiatingProcessParent data in the child process row. Once this is done, the process tree is displayed. Some attributes are shown in the box for each process; more are available as “tooltips” as you hover over each process in the tree.

The process tree module will identify MDE data from the columns present: you do not need to define a schema.
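The flattening and inference step can be sketched as follows. This is a deliberately simplified illustration, not MSTICPy's algorithm: the flatten_process_rows function is hypothetical, it keys processes on process ID alone (real data would also need the creation time, since IDs are reused), and it carries only the file name as an attribute.

```python
def flatten_process_rows(rows):
    """Return {process_id: {"FileName", "ParentId", "Inferred"}} for all
    processes, adding parents and grandparents missing from the input."""
    # Start with the processes that have their own row in the data.
    tree = {
        row["ProcessId"]: {
            "FileName": row["FileName"],
            "ParentId": row["InitiatingProcessId"],
            "Inferred": False,
        }
        for row in rows
    }
    # Pass 1: infer missing parents from the InitiatingProcess fields.
    for row in rows:
        tree.setdefault(
            row["InitiatingProcessId"],
            {
                "FileName": row["InitiatingProcessFileName"],
                "ParentId": row["InitiatingProcessParentId"],
                "Inferred": True,
            },
        )
    # Pass 2: infer missing grandparents; their own parent is unknown.
    for row in rows:
        tree.setdefault(
            row["InitiatingProcessParentId"],
            {
                "FileName": row["InitiatingProcessParentFileName"],
                "ParentId": None,
                "Inferred": True,
            },
        )
    return tree


# Made-up sample: 300 and 200 have rows; 100 and 50 appear only as ancestors.
rows = [
    {"ProcessId": 300, "FileName": "powershell.exe",
     "InitiatingProcessId": 200, "InitiatingProcessFileName": "cmd.exe",
     "InitiatingProcessParentId": 100, "InitiatingProcessParentFileName": "explorer.exe"},
    {"ProcessId": 200, "FileName": "cmd.exe",
     "InitiatingProcessId": 100, "InitiatingProcessFileName": "explorer.exe",
     "InitiatingProcessParentId": 50, "InitiatingProcessParentFileName": "userinit.exe"},
]

for pid, info in sorted(flatten_process_rows(rows).items()):
    print(pid, info)
```

Parents are inferred before grandparents so that an ancestor described in more detail by one row (as a parent) is not overwritten by the thinner description another row gives of it (as a grandparent).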

Process tree for MS Defender for Endpoint data

Uniform access to plotting from pandas DataFrames

We’ve consolidated the various plotting functions into a single accessor named mp_plot. This lets you access any of the MSTICPy main visualization functions from a DataFrame. These are:

- Event Timeline: timeline

- Event Timeline values: timeline_values

- Process Tree: process_tree

- Event Duration: timeline_duration

- Matrix Plot: matrix

The syntax for all of these is similar. The accessor is loaded automatically if you run init_notebook. You can also load it manually using the following code.

from msticpy.vis import mp_pandas_plot

Here are some examples of usage:

df_mde.mp_plot.process_tree(legend_col="CreatedProcessAccountName")

net_df.mp_plot.matrix(

x="SourceIP",

y="DestinationIP",

title="IP Interaction"

)

net_df.mp_plot.timeline(

source_columns=["SourceIP", "DestinationIP"],

title="IP Timeline"

)

Conclusion

You can read more details on our documentation pages using the links provided in this article.

You can also read the release notes for details of all of the other fixes and minor changes in this release.

Please let us know about any issues or feature requests on our GitHub repo.

Enjoy!