This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

The Azure Stream Analytics Tools (ASA Tools) extension for Visual Studio Code (VS Code) has always allowed developers to run jobs locally, from live inputs or local files. Up until now, the outputs of such runs could only be local files. With the latest release of ASA Tools, local executions can now target live outputs for Event Hub, Blob Storage and SQL Database. It has never been easier to test and debug a job end-to-end.

Azure Stream Analytics Tools (ASA Tools)

Stream Analytics offers multiple experiences for developers. Small work such as demos and prototypes can be quickly addressed in the Azure Portal. But most users will find that VS Code delivers the most complete developer experience for Azure Stream Analytics via the ASA Tools extension. See the quickstart for how to get started.

In VS Code, it has long been possible to develop, test and run jobs locally, without even needing an Azure subscription. Stream Analytics jobs can be run locally on sample data files or using the live sources defined as inputs.

In both modes, the resulting datasets are output as local files in a generic JSON format that doesn’t honor the serialization format defined in the output configuration. This approach simplifies local development and enables unit testing via the asa cicd package.

But this was not enough. ASA developers need to understand a job output in its downstream context, and to troubleshoot a failing output more quickly, without having to deploy and run a job live. Release 1.1.0 of ASA Tools addresses this by adding a live output mode to local runs in VS Code.

From VS Code, it is now possible to start a job in four ways:

- Local run with local input and local output: offline development at no cost, unit testing…

- Local run with live input and local output: input configuration, deserialization, and partitioning debugging…

- Local run with live input and live output: output configuration, serialization, and type mismatch debugging…

- Deploy to Azure and run: integration testing, performance testing and production…

This overview goes into more detail about local runs.

Local run with live input and live output

The new local run mode only supports output to Event Hub, Blob Storage and SQL Database for now.

In VS Code, create a new ASA project or open an existing one. If necessary, create live inputs and outputs. Edit the query so it uses these live inputs and outputs.

Start a local run using either:

- the breadcrumb menu at the top of the script editor (“Run Locally”, as shown above)

- the context menu in the script editor (right click anywhere > “ASA Start Local Run”)

- the context menu in the explorer (right click on the .asaql file > “ASA Start Local Run”)

- the command palette (F1 > “ASA Start Local Run”)

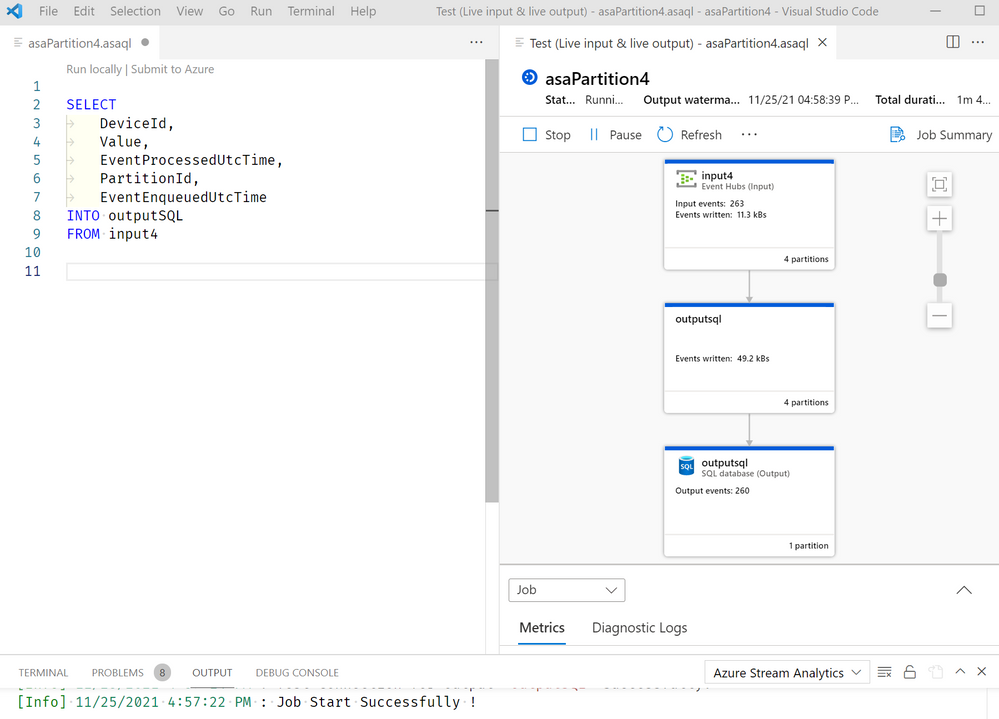

When prompted for the “input source and output for testing”, pick “Use Live Input and Live Output”. After a few seconds, ASA Tools should start ingesting records from the input, applying the query logic and pushing records to the live output (an Azure SQL database in the capture below):

Running a job this way doesn’t require provisioning a live job. All processing is done locally. No costs are incurred from the Azure Stream Analytics service.

Application: fast conversion error debugging for schema-on-write outputs (SQL Database)

In the example above, the input schema from Event Hub matches the output table definition in Azure SQL. But after a few tests we realize that the value field was mistyped as a string. Looking at live data in VS Code (central query node, result, open file), we see JSON records showing: "Value":"43.7532766227393". Because the number is between quotes, ASA interprets it as a string even though its content is numeric. When we initially created the destination table, we made the column an NVARCHAR(50) without realizing it should be a number instead.
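To illustrate why the quotes matter, here is a minimal sketch using Python's standard `json` module. It is only an analogy: ASA uses its own deserializer, and the two sample records below are hypothetical apart from the `Value` field quoted from the live data.

```python
import json

# Hypothetical sample records; only the "Value" field mirrors the article.
quoted = json.loads('{"Value": "43.7532766227393"}')
unquoted = json.loads('{"Value": 43.7532766227393}')


def json_type_name(record, field="Value"):
    """Return the name of the Python type a JSON field deserializes to."""
    return type(record[field]).__name__


print(json_type_name(quoted))    # the quoted number is a string
print(json_type_name(unquoted))  # the bare number is a float
```

The same payload yields two different types depending only on the quotes, which is why the field reaches the output as a string.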

To fix the situation, we stop the job, alter the table in SQL and start the job again:

We are immediately notified of conversion errors happening on the column we just changed. After a short investigation, we realize that we changed the column type in the SQL table to integer, which generates errors because the value is in fact a decimal number. Interestingly, the error message mentions a conversion from string to integer, since for ASA that field is still a string. ASA tries to implicitly cast the field to the expected output type, but in this case that’s not enough.
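The failure can be reproduced in spirit with a few lines of Python. This is a sketch under the assumption that a strict string-to-integer conversion behaves like Python's `int()`; it does not reproduce the actual ASA or SQL conversion code, and the helper name is hypothetical.

```python
def can_convert(text, target):
    """Report whether a strict conversion of `text` with `target` succeeds."""
    try:
        target(text)
        return True
    except ValueError:
        return False


value = "43.7532766227393"  # the string seen in the live data

# A decimal string cannot be converted directly to an integer...
print(can_convert(value, int))
# ...but it converts cleanly to a floating-point number.
print(can_convert(value, float))
```

The string holds a valid number, just not a valid integer, which matches the string-to-integer wording of the error message.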

To fix the situation, we can either alter the table a second time and use a valid numeric type (decimal or float), or round value in the query to make it an integer. Let’s not lose data here and update the table in Azure SQL.

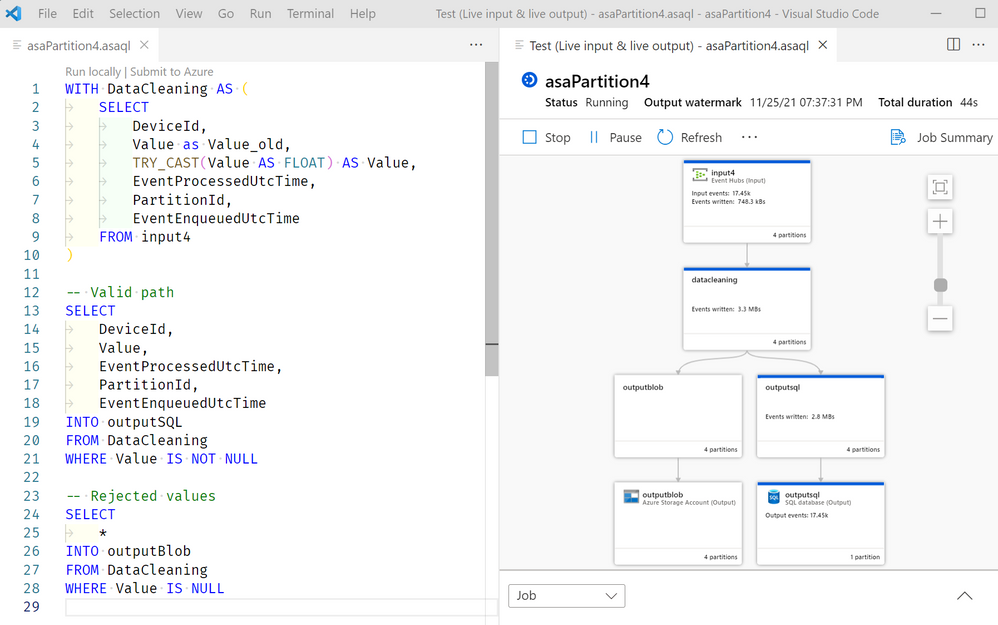

We could leave it at that, and the job would now run. ASA would implicitly cast the string to numeric and generate errors only when that wouldn’t work. But let’s be thorough and explicitly cast the field instead.

Here we extended the query to TRY_CAST value as float (the only non-integer numeric type currently supported in ASA). TRY_CAST returns NULL if the conversion fails. We then send valid rows to our SQL table and rejected rows to a new blob storage output that is less demanding in terms of typing. We can later set up an alert on that blob store to be notified when rows are rejected, without losing them.
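The routing logic can be sketched outside of ASA as follows. This Python emulation is not the ASA query itself: `try_cast_float` only mimics the "return NULL on failure" behavior of TRY_CAST, and the `DeviceId` field and sample records are hypothetical. It also assumes that a row whose value is already missing is treated as rejected.

```python
def try_cast_float(value):
    """Return `value` as a float, or None when the conversion fails."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None


def route(records, field="Value"):
    """Split records into (valid, rejected) based on the cast result."""
    valid, rejected = [], []
    for record in records:
        casted = try_cast_float(record.get(field))
        if casted is None:
            # Keep the original row untouched so nothing is lost.
            rejected.append(record)
        else:
            # Replace the string with its numeric value for the typed output.
            valid.append({**record, field: casted})
    return valid, rejected


# Hypothetical input: one convertible value, one that is not.
records = [
    {"DeviceId": "a", "Value": "43.7532766227393"},
    {"DeviceId": "b", "Value": "n/a"},
]
valid, rejected = route(records)
print(valid)     # rows for the strictly typed output (SQL table)
print(rejected)  # rows for the permissive output (blob storage)
```

Keeping the rejected rows in their original form is what makes the second output useful for later investigation.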

Get the most complete ASA developer experience with ASA Tools

To get started with Azure Stream Analytics, VS Code and ASA Tools, you can use one of our quickstarts on the documentation page.