This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Data security is an important consideration for organizations when complying with data protection regulations. There are different options to choose from to protect sensitive data - Tokenization is one of the options to preserve data privacy and protect sensitive data at rest.

Protegrity is a global leader in data security, has the serverless User Defined Function (UDF) that provides external data tokenization capabilities and a SQL Gateway is in the roadmap as another option by Protegrity.

In this post, we will describe how customers can use the Protegrity Protector UDF with Azure Synapse SQL pool to tokenize or detokenize data at scale.

Tokenization is a technique for de-identifying sensitive data at rest while retaining its usefulness. This is particularly vital to companies that deal with Personally Identifiable Information (PII), Payment Card Industry (PCI), and Protected Health Information (PHI).

Azure Synapse Analytics

Azure Synapse Analytics is a limitless analytics service that brings together data integration, enterprise data warehousing and big data analytics. It gives you the freedom to query data on your terms, using either serverless or dedicated resources—at scale. Azure Synapse brings these worlds together with a unified experience to ingest, explore, prepare, manage and serve data for immediate BI and machine learning needs.

One of the key use cases that most customers that are on their migration journey to Azure is data tokenization and masking. While there are multiple options possible , in this blob we will look at the option that addresses the below customer requirements –

- Multi Cloud scenario where uses cases are split between cloud providers and there is a need for a single centralized data control that can ensure data privacy and compliance regulations to deliver the right data to the right user

- In an on-prem scenario, the customer runs Microfocus agents on all of the Hortonworks cluster nodes (48 of them) which tokenize/detokenize the data. On Azure though we’ve agreed that the sensitive data (PII, financial etc) will be tokenised on-prem and will be ingested onto Azure Blob. The advanced analytics team need access to PII data which is not tokenised, to build their ML models. The data needs to be detokenized when its being served.

- However this presents its own challenges. Is it viable to ‘push’ the de-tokenised data to Azure Synapse SQL Pool and leverage the ‘Dynamic Data Masking’ feature?

About Tokenization and Different Acts

Tokenization is widely used and approved practice both in on-premise and cloud to protect sensitive data. If data is tokenized correctly , full benefit of data can be derived without exposing any sensitive data. One other challenge a number of customer might have is lack of data dictionary , metadata information on the PII , PCI and other sensitive information. In other words they don’t know what data to tokenize. For those situations full encryption might be the way to go and it does increase the risk and FSI and banks will be apprehensive.

- Tokenization is one of the best methods to easy to remove secure information and replace with non-sensitive data for analytics purpose and it works with structured data vs unstructured data , for example. Most of the time for Data science workloads you don’t need to touch the PII related information to run meaningful analysis.

- The idea behind Tokenization is a non-mathematical approach to protecting data while preserving its type and length. We replace sensitive data, regardless of nature (PII, PHI, PCI), type or format, with non-sensitive substitutes. This mitigates impact should a breach occur and offers more flexibility than FPE (Field preserving Encryption).Tokens, by definition, look like the original value in data type and length while de-identifying sensitive information, enabling it to travel throughout its lifecycle without modification to systems. Furthermore, tokens can keep specific data fully or partially visible for processing and analytics, overcoming many of the problems associated with encryption.

Tokens can be formatted to:

- Preserve the format (length and data type), and leading/trailing

- Preserve length but not data type, and leading/trailing

- Mask a portion of the token when a full value is not needed or desirable (can’t be subsequently translated back)

- Tokens generally maintain the length and format of the original data so that applications require little or no modification.

With the above definition , following are some of the sample of tokenization. As we can see its still possible to derive relevant information without sharing any specific data.

|

Data type |

Description |

Example Data |

Example Tokenization |

|

General any type of data |

This format returns an ASCII, length-preserving token retaining the original first six and last four digits of the input data |

1324-123-4845796

|

1324-1TFTF05796 |

|

PCI |

This format returns an alphanumeric, length-preserving token retaining the original first four and last four digits of the input data |

4242424242424242 |

4242ZYAV5124242 |

|

PII |

This format is used to tokenize a Social Security number. |

123-45-6789 |

958-47-5126 |

Disadvantage of Tokenization

- Data need to constantly pushed back on-premise (if you keep all your references on-premise) for all mapping and validation and it will add to informational latency. Tokens uses a large database table to create lookup pairs that associate a token with the encrypted sensitive information, such as credit card numbers, for which it is a substitute.

- The only way to get the original information or its token, is to reference the lookup table, taking data out of scope for audit. As the lookup table grows with each instance of tokenization, lookups slow down and as a result performance suffers. vault-based tokenization requires costly synchronization capabilities to maintain reliability, high availability, and to avoid collisions. Additionally, vault-based tokenization is too complex to tokenize anything more than credit card numbers without massive architectural problems.

- In certain circumstances it makes sense to use encryption rather than tokenization, typically when data is unstructured, such as binary files, images, biometrics and unstructured data, That’s one of the disadvantages of Tokenization.

Privacy mechanisms in big data - following are the potential approaches

|

Approach |

Protects from adversarial access |

Focus |

|

Data separation |

Cloud |

Only does partitioning as a measure to handle privacy , Does not deal with key generation in map phase , Does not deal with adversarial users. |

|

Pseudonymization Tokenization |

Cloud |

Structured data to obfuscates PII , PCI and General information. Its non-mathematical approach to protecting data at rest that replaces sensitive data with non-sensitive substitutes, referred to as tokens. While the tokens have no extrinsic or exploitable meaning or value, they allow for specific data to be fully or partially visible for processing and analytics while sensitive information is kept hidden. Tokenization does not alter the type or length of data, which means it can be processed by legacy systems such as databases that may be sensitive to data length and type. This also requires much fewer computational resources to process and less storage space in databases than traditionally-encrypted data. |

|

Homomorphic encryption |

Cloud |

User does not trust cloud infrastructure , Supports counting operation only , Purpose specific. A homomorphic encryption allows a user to manipulate without needing to decrypt it first. |

|

Secret sharing |

User and cloud |

Cost overhead |

|

Differential privacy |

User |

Full trust on cloud providers and Cannot guarantee privacy for calculations which output keys created by untrusted mappers. For example google and apple adopt this model to collect user analytics. See the apple use case of differential privacy . https://www.wired.com/2016/06/apples-differential-privacy-collecting-data/ |

A typical customer use case

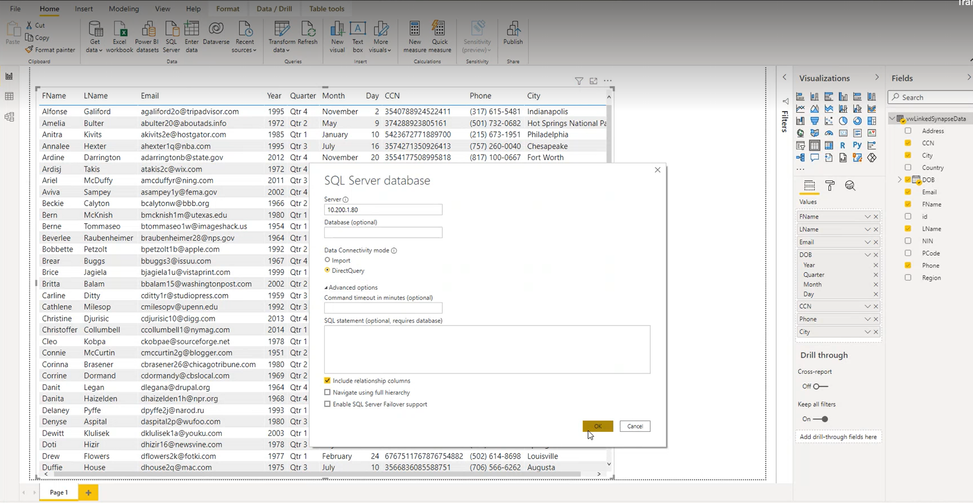

A typical customer requirement is to integrate Power BI with Azure Synapse SQL pool and control the data masking based on the User roles. As technical requirement no un-protected data can be stored at rest and needs to be protected prior to loading into Synapse SQL pool . Hence, the Dynamic Data masking in Azure Synapse is not an option which masks the data when it is retrieved vs masking prior to data load. Power BI Import mode is also not an option and requires the use of Direct Query in Power BI.

The key differences between selecting Import and DirectQuery:

- Import: The selected tables and columns are imported into Power BI Desktop. As you create or interact with a visualization, Power BI Desktop uses the imported data. To see underlying data changes since the initial import or the most recent refresh, you must refresh the data, which imports the full dataset again.

- DirectQuery: No data is imported or copied into Power BI Desktop. For relational sources, the selected tables and columns appear in the Fields list. For multi-dimensional sources like SAP Business Warehouse, the dimensions and measures of the selected cube appear in the Fields list. As you create or interact with a visualization, Power BI Desktop queries the underlying data source, so you’re always viewing current data.

A limitation to using DirectQuery is - if the Power Query Editor query is overly complex, an error occurs. To remedy the error, either delete the problematic step in Power Query Editor, or import the data instead of using DirectQuery. Hence the option of first fetching the protected data from Synapse and then un-protecting in the Power Query is not possible.

For other limitation of Direct Query refer - Use DirectQuery in Power BI Desktop - Power BI | Microsoft Docs

Integration with External Tokenization System

- Protegrity, a global leader in data security, provides data tokenization by employing a cloud-native, serverless architecture.

- Serverless tokenization with Protegrity delivers data security with the performance that organizations need for sensitive data protection and on-demand scale.

- Tokenization is a non-mathematical approach to protecting data while preserving its type, format, and length. Tokens appear similar to the original value and can keep sensitive data fully or partially visible for data processing and analytics.

- Historically, vault-based tokenization uses a database table to create lookup pairs that associate a token with encrypted sensitive information.

- Protegrity Vaultless Tokenization (PVT) uses innovative techniques to eliminate data management and scalability problems typically associated with vault-based tokenization. Using SQL MI with Protegrity, data can be tokenized or de-tokenized (re-identified) with SQL depending on the user’s role and the governing Protegrity security policy.

Setting up tokenization with Protegrity and Azure Synapse Analytics

Protegrity provides protection/unprotection via the ability to utilize the industry-standard database standard database platform capability of external functions . The external functions calls code that is executed outside the database platform. The remotely executed code is also known as a remote service. Information sent to a remote service is usually relayed through a proxy service. The database platform stores the security related external function information in an API integration

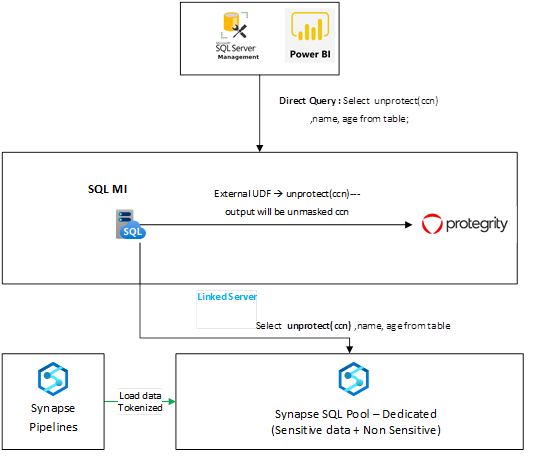

Protegrity currently supports protection/unprotection via external UDFs and Protegrity SQL Gateway is an in the roadmap. To support external UDFs, a SQL Managed Instance / SQL Server on Azure VM can be used as a gateway server that hosts the external UDFs to integrate with Protegrity Engine and the query is pushed down to Azure Synapse Analytics using the Linked Server created in SQL MI to Synapse SQL Pool.

The below approaches help with addressing the requirements of invoking an external UDF to detokenize the data in Synapse SQL Pool –

- Power M Query to invoke a Rest API

- SQL Server Gateway - Linked Server between SQL Server and Synapse SQL Pool

- SQL Server Gateway – Polybase

Approach 1 : Power M Query to invoke Rest API

Power M Query to invoke a rest api , post fetching results from Synapse. When integrated with Protegrity - the detokenize api will need to be invoked and the detokenized column will need to be added to the resultset.

The approach is not good for large datasets direct query and will lead to a performance issue – hence requires Import mode to work which doesn’t meet the customer requirement , considering all data will have to be stored on Power BI server in detokenized form if Import mode is used. Approach is to invoke a Rest api , and then adds the result into a new column into the result set of Synapse table ( previously fetched via direct query).

The code to achieve the same is as below –

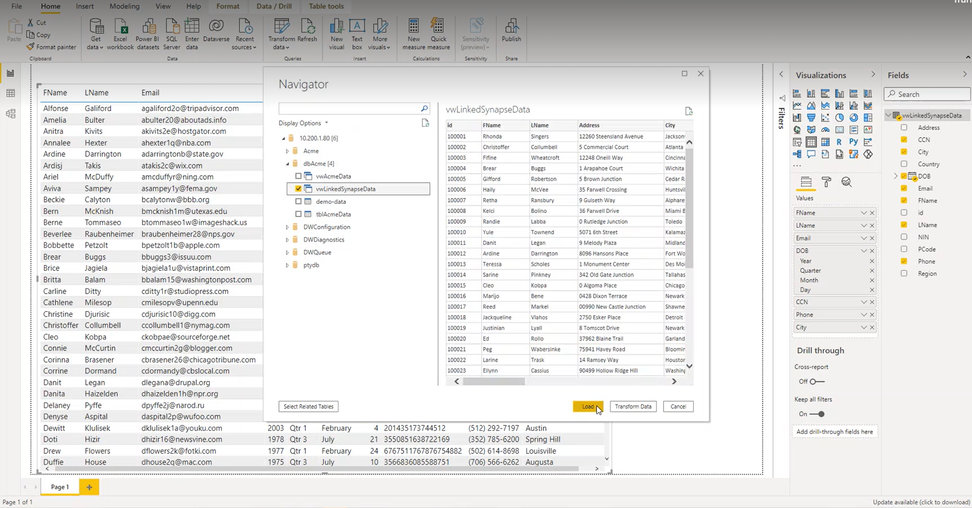

Approach 2 : Setting up linked servers – between SQL Server and Synapse ( SQL Server acts as a proxy)

The following diagram illustrates the sample flow of a query from Power BI ( Direct Query ) to Synapse SQL Pool

Linked servers in Managed Instance enable you to link remote SQL Server or Azure SQL endpoint and send remote queries or join remote tables with the local ones. Since the Dedicated SQL query endpoint in Azure Synapse Analytics is a T-SQL compatible query engine, you can reference it using a linked server:

You can also use UI in SSMS to create this linked server and just these parameters. Once you setup your linked server to Synapse SQL default query endpoint, you can start reading data from the Synapse dedicated pool.

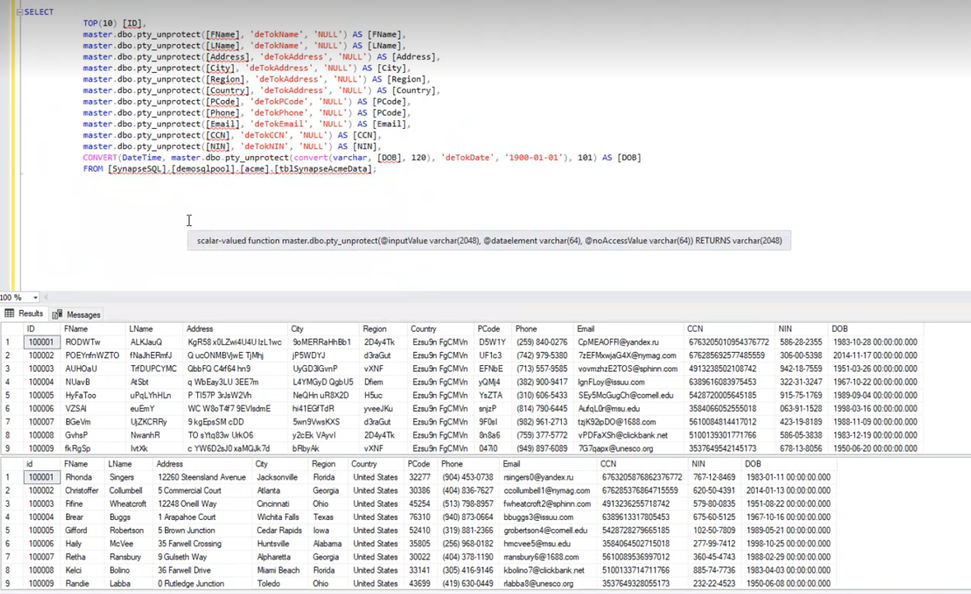

Select query with Protegrity unprotect scalar value function

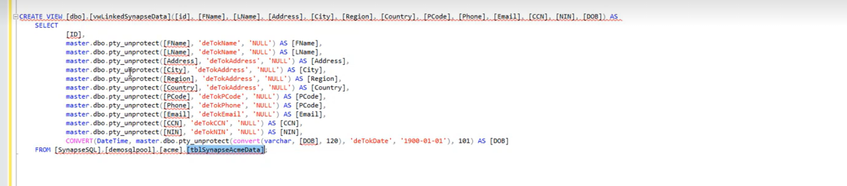

- Create view with Protegrity unprotect scalar value function

- Set up Power BI to connect to SQL MI in Direct Query Mode

- Select the view created with unprotect function call – the view runs the query on linked server to Synapse

- Approach 3 : Setting up external tables via Polybase

This approach requires deploying the UDF function provided by Protegrity in the SQL Server and creating external tables in SQL server for every table to be referred to in Synapse SQL pool. This approach is similar to the linker server approach , though requires the overhead of creating external tables and maintenance in the SQL Server