This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Authors: Yang Cui, Lei He, Sheng Zhao

Neural Text-to-Speech (Neural TTS), a powerful speech synthesis capability of Azure Cognitive Services, enables developers to convert text to lifelike speech. Neural TTS is used in voice assistants, content read-aloud features, accessibility tools, and more. In December 2021, Azure text to speech reached a milestone with new neural models that even more closely mirror natural speech. In addition to this technology innovation, over 120 languages are now supported in Azure TTS, including a wide variety of locales. Users can choose from a rich portfolio of ready-to-use voices, or record their own voice audio and use our Custom Neural Voice service to train new voices.

Advances in neural TTS technology, which make synthetic speech indistinguishable from human voices, bring a risk of harmful deepfakes. As part of Microsoft's commitment to responsible AI, we are designing and releasing AI products with the intention of protecting the rights of individuals and society, fostering transparent human-computer interaction, and counteracting the proliferation of harmful deepfakes and misleading content. We have limited access to Custom Neural Voice and actively advocate a Code of Conduct for Text-to-Speech integrations that defines the requirements all TTS implementations must adhere to in good faith.

Is there a way to provide technical protection against deepfakes when implementing neural TTS? In this blog, we introduce TTS watermark, a new technology to help developers identify synthetic voices and prevent TTS from being misused.

Need for watermark

Digital audio watermarking is a data-hiding technology that embeds specific messages in audio content in a way that human ears cannot hear. The embedded information can only be extracted by a corresponding watermark detector with the correct key. Digital audio watermarking has applications in fields such as copyright protection, content recognition, and multimedia data management.

Neural TTS-generated content is now widely used in media creation such as short videos. At times, it is difficult to tell whether a piece of audio is a recording or a synthetic voice, or which specific synthetic voice produced it. With this in mind, we investigated digital audio watermarking as a way to identify synthetic voices. By embedding a watermark in the synthetic voice, we can identify whether audio is synthesized and which voice it was synthesized from.

How watermark works

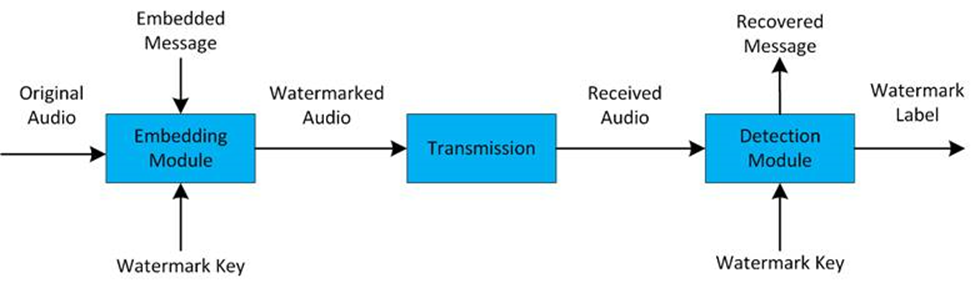

The overall framework is shown in the figure below. The embedding module embeds the watermark, carrying a message, into the audio. The watermarked audio then passes through a realistic channel before release. Finally, the detection module receives the released audio, detects whether the watermark is present, and decodes the embedded message.

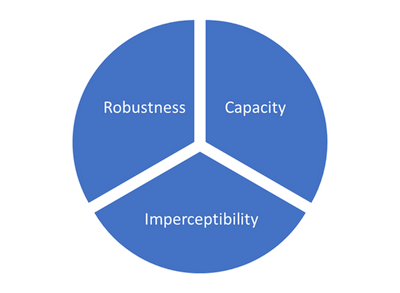

The performance of digital audio watermarking is measured in three dimensions: imperceptibility, capacity, and robustness.

Imperceptibility is the basic requirement of audio watermarking. It means the watermark can hardly be heard by human ears; otherwise, the perceived voice quality would degrade too much.

Capacity is the amount of information the watermark can carry in a given time, expressed as the number of characters per second. It is a critical index in scenarios where a large number of messages must be embedded in a short piece of audio.

Robustness is a performance indicator for the watermark against possible damage in practical applications. Audio content needs to pass through various channels in a realistic environment before the final sound is heard. These channels may include media codec, network transmission, speaker playback, microphone recording, room reverberation, and background noises or other interferences. These factors affect the audio as well as the watermark, thus bringing challenges to the identification. Furthermore, an attacker may deliberately destroy the watermark through any of the channels mentioned above. Therefore, the watermark must be robust enough to deal with such challenges.

However, these three indicators are inherently contradictory and cannot all be improved at the same time. Once the embedding method is determined, improving one indicator will always degrade the other two. The following chart is an illustration of the relationship among the three indicators.

Therefore, real applications need to strike a balance between these three indicators. Striking that balance is like building a castle. The wall should be built high to defend against enemies, but not so high that it becomes difficult to build and maintain.

Three classical types of watermarking methods have been developed: echo hiding, quantization index modulation, and spread spectrum. Echo hiding embeds the message in a delayed version of the audio. Quantization index modulation embeds the message in a quantized vector derived from a decomposed audio component. The spread spectrum method spreads a narrow-band message across the wide-band audio. Echo hiding supports high capacity but is not very robust. Quantization index modulation performs well in imperceptibility but not in robustness. The spread spectrum method performs well in robustness but not in imperceptibility.
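To make the quantization idea concrete, here is a minimal sketch of scalar quantization index modulation. It is a textbook simplification, not the method used in Azure TTS: it quantizes individual sample values rather than a decomposed audio component, and the function names `qim_embed` and `qim_decode`, the sample values, and the step size are all illustrative assumptions.

```python
def qim_embed(x: float, bit: int, step: float) -> float:
    """Snap x to the nearest point of the lattice that encodes `bit`.

    Bit 0 uses multiples of `step`; bit 1 uses the same lattice shifted by step/2.
    """
    offset = bit * step / 2.0
    return round((x - offset) / step) * step + offset


def qim_decode(y: float, step: float) -> int:
    """Return the bit whose lattice has a point closest to y."""
    dist0 = abs(y - qim_embed(y, 0, step))
    dist1 = abs(y - qim_embed(y, 1, step))
    return 0 if dist0 <= dist1 else 1


step = 0.02                                # hypothetical quantization step
samples = [0.113, -0.427, 0.305, 0.0]      # hypothetical sample values
bits = [1, 0, 1, 1]
marked = [qim_embed(x, b, step) for x, b in zip(samples, bits)]

# Perturb every sample by less than step/4, the largest error this scheme tolerates.
noisy = [y + 0.2 * step for y in marked]
print([qim_decode(y, step) for y in noisy])   # [1, 0, 1, 1]
```

The trade-off described above shows up directly in `step`: a small step changes each sample by at most half a step, which helps imperceptibility, but any disturbance larger than a quarter of a step flips the decoded bit, which hurts robustness.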

Each of the single methods above can easily perform well on one indicator, but it is difficult to meet all the requirements with a single method. According to Fourier analysis, an audio signal can be decomposed into orthogonal frequency components, like a symphony composed of many harmonics. This suggests that a compound audio watermarking method is feasible, applying different single watermarking methods in different frequency bands.

The compound audio watermarking method can make use of the advantages of different watermarking methods and perform better than a single method.

Specifically, an audio signal can be decomposed into high-, mid-, and low-frequency band components. According to the characteristics of each frequency band, a few watermarking methods are selected for the compound method. By combining several methods in different bands, each method contributes its strengths without interfering with the others. The spread spectrum method is used in one frequency band for its excellent robustness in challenging environments. An autocorrelation-based method is used in another frequency band. It outperforms the spread spectrum method in some scenarios and provides time synchronization information to adjust the detection module.
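The post does not describe the autocorrelation-based method in detail. As a loose illustration of how autocorrelation can expose an embedded timing pattern, the sketch below uses the echo hiding idea mentioned earlier: a bit selects one of two echo delays, and the detector picks the delay with the stronger autocorrelation peak. The white-noise host, the delays, and the deliberately strong echo gain are assumptions chosen to keep the example small; real speech is not white, and a practical system would work within one frequency band.

```python
import random


def add_echo(host: list[float], delay: int, gain: float) -> list[float]:
    """Return host plus a copy of itself delayed by `delay` samples."""
    return [
        x + (gain * host[n - delay] if n >= delay else 0.0)
        for n, x in enumerate(host)
    ]


def autocorr(signal: list[float], lag: int) -> float:
    """Autocorrelation of `signal` at `lag`, normalized by the signal energy."""
    energy = sum(x * x for x in signal)
    cross = sum(signal[n] * signal[n - lag] for n in range(lag, len(signal)))
    return cross / energy


def decode_bit(signal: list[float], delay0: int, delay1: int) -> int:
    """Pick the candidate delay with the stronger autocorrelation peak."""
    return 1 if autocorr(signal, delay1) > autocorr(signal, delay0) else 0


rng = random.Random(0)
host = [rng.gauss(0.0, 0.1) for _ in range(4000)]   # white-noise stand-in for audio
DELAY0, DELAY1 = 40, 60                             # hypothetical echo delays for bit 0 / bit 1

marked_one = add_echo(host, DELAY1, gain=0.3)       # embed bit 1
marked_zero = add_echo(host, DELAY0, gain=0.3)      # embed bit 0
print(decode_bit(marked_one, DELAY0, DELAY1), decode_bit(marked_zero, DELAY0, DELAY1))
```

Because the peak sits at a known lag, the same measurement also tells a detector where the embedded pattern lines up in time, which is the kind of synchronization cue the paragraph above refers to.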

In the embedding module, a 96-bit key is used to generate the parameters of each method. A series of messages is also embedded sequentially in the watermark. In the detection module, the same key is used to regenerate the watermark parameters. A detector then checks whether the watermark exists in the audio. If the watermark is detected, the decoding module decodes the messages and outputs them. If no watermark is detected, a non-watermark label is output.
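The key-driven flow above can be sketched with a simple spread spectrum scheme. This is an illustration under stated assumptions, not the production design: the SHA-256 key expansion, the frame length, the amplitude, the threshold, and every function name are hypothetical, and Python's `random` module is not a cryptographically secure generator.

```python
import hashlib
import random

KEY_BYTES = 12       # 96-bit key, as stated in the post
FRAME = 2000         # hypothetical number of samples per embedded bit
ALPHA = 0.05         # hypothetical watermark amplitude
THRESHOLD = 0.025    # hypothetical presence threshold (half of ALPHA)


def chips_from_key(key: bytes, n: int) -> list[float]:
    """Expand the key into a reproducible +/-1 sequence of length n."""
    if len(key) != KEY_BYTES:
        raise ValueError("expected a 96-bit key")
    seed = int.from_bytes(hashlib.sha256(key).digest(), "big")
    rng = random.Random(seed)   # illustration only, not cryptographically secure
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(host: list[float], bits: list[int], key: bytes) -> list[float]:
    """Embed the bits sequentially, one frame of samples per bit."""
    chips = chips_from_key(key, FRAME)
    out = list(host)
    for i, bit in enumerate(bits):
        sign = 1.0 if bit else -1.0
        for j in range(FRAME):
            out[i * FRAME + j] += sign * ALPHA * chips[j]
    return out


def detect(audio: list[float], n_bits: int, key: bytes):
    """Return the decoded bits, or a non-watermark label if nothing is found."""
    chips = chips_from_key(key, FRAME)
    corr = [
        sum(audio[i * FRAME + j] * chips[j] for j in range(FRAME)) / FRAME
        for i in range(n_bits)
    ]
    strength = sum(abs(c) for c in corr) / n_bits
    if strength < THRESHOLD:
        return "non-watermark"
    return [1 if c > 0 else 0 for c in corr]


rng = random.Random(1)
message = [1, 0, 1, 1, 0, 0, 1, 0]
host = [rng.gauss(0.0, 0.3) for _ in range(len(message) * FRAME)]  # noise stand-in for speech
key = bytes(range(KEY_BYTES))                                      # demo key only
marked = embed(host, message, key)

print(detect(marked, len(message), key))               # correct key: the message bits
print(detect(marked, len(message), bytes(KEY_BYTES)))  # wrong key
print(detect(host, len(message), key))                 # unmarked audio
```

With the correct key the detector regenerates the same sequence and recovers the message. With a different key, or with unmarked audio, the correlation stays near zero and the detector reports the non-watermark label.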

To enhance imperceptibility, a psychoacoustic model is used to control the strength of the watermark. Subjective tests (CMOS, Comparative Mean Opinion Score) confirm that the watermark is hard to perceive. In a CMOS test, each judge compares the watermarked and unwatermarked audio and scores their preference from -3 to 3. The average score difference between the unwatermarked and watermarked audio is less than 0.05, which shows that the two are indistinguishable to human ears.
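As a small worked example of the CMOS arithmetic, the sketch below averages a list of comparison scores. The scores are invented for illustration and are not data from the tests described above; the function name `cmos` is also an assumption.

```python
from statistics import mean


def cmos(scores: list[int]) -> float:
    """Average comparative score; every score must lie in [-3, 3]."""
    if any(not -3 <= s <= 3 for s in scores):
        raise ValueError("CMOS scores must be between -3 and 3")
    return float(mean(scores))


# Hypothetical judgments, NOT results from the study.
# 0 means no preference; the sign says which version the judge preferred.
scores = [0] * 37 + [1, 1, -1]
print(cmos(scores))   # 0.025
```

A value this close to zero means the judges' preferences cancel out, which is how a result below 0.05 is read as no audible difference.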

The watermark has also been tested in various environments, including realistic channels. For example, raw audio is a common format for network transmission, but processing such as reverberation, lossy coding, or added background music (BGM) may also be applied to it. The audio may also be replayed through a sound reproduction system, such as loudspeakers and microphones, which makes the watermark harder to detect. As shown in the table below, our detection tool achieves a detection rate above 99% on raw audio and above 95% in real scenarios.

| Scenario | Accuracy |
| --- | --- |
| Raw audio scene | > 99.9% |
| Reverberation scene | > 97.0% |
| Codec scene | > 97.8% |
| BGM scene | > 96.7% |
| Replay scene | > 95.3% |

For capacity, a 32-bit message can be embedded in one second of audio. The perfect transmission rate (transporting a 32-bit message without any error) is above 99%. These results indicate that the watermark method is robust and reliable enough for real applications.
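The two capacity figures can be made concrete with a short sketch. The 32 bits per second comes from the text above; the function names and the example messages are hypothetical. Note that the perfect transmission rate counts whole messages, so a single flipped bit makes the entire 32-bit message count as a failure.

```python
BITS_PER_SECOND = 32   # capacity stated in the post


def payload_bits(duration_s: float) -> int:
    """Whole bits that fit in an audio clip of the given length."""
    return int(duration_s * BITS_PER_SECOND)


def perfect_transmission_rate(sent: list[int], received: list[int]) -> float:
    """Fraction of messages recovered with zero bit errors."""
    exact = sum(1 for s, r in zip(sent, received) if s == r)
    return exact / len(sent)


print(payload_bits(2.5))          # 80 bits in a 2.5-second clip
print(payload_bits(2.5) // 8)     # 10 characters, assuming 8-bit characters

# Hypothetical messages: the middle one arrives with one flipped bit.
sent = [0xDEADBEEF, 0x12345678, 0x0BADF00D]
received = [0xDEADBEEF, 0x12345679, 0x0BADF00D]
print(perfect_transmission_rate(sent, received))
```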

Security is another indicator that must be ensured. The length of the secret key is critical: once attackers who intend to remove or corrupt the watermark know the method, the secret key is the only remaining protection. A 96-bit key gives about 80 octillion (2 to the 96th power) possible keys for a brute-force attack, which would take even a supercomputer a very long time to crack (about 2 quintillion years).
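The brute-force arithmetic can be checked directly. The post does not state the trial rate behind its estimate, so the rate below is an assumption: at roughly a thousand key trials per second, exhausting the key space takes on the order of the 2 quintillion years quoted above.

```python
KEY_BITS = 96
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # 31,557,600 seconds

keyspace = 2 ** KEY_BITS
print(f"{keyspace:,}")   # 79,228,162,514,264,337,593,543,950,336


def years_to_exhaust(trials_per_second: float) -> float:
    """Years needed to try every key at a constant trial rate."""
    return keyspace / trials_per_second / SECONDS_PER_YEAR


# Assumed rate of 1,000 trials per second (not stated in the post).
print(f"{years_to_exhaust(1_000):.2e}")   # 2.51e+18
```

On average an attacker would find the key after searching half the key space, so the expected time is half the figure printed here. Each additional key bit doubles both numbers.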

With the techniques above, we have developed a watermark for neural TTS that can be applied in practical environments. We will continue working to protect the privacy of customers and the security of products, and to design AI products responsibly.

How to use watermark

The watermark capability can be used to identify whether a voice is synthesized and which voice it was synthesized from. It can be applied to Custom Neural Voice upon request to protect the copyright of customers’ branded voices. Customers who want to identify their branded custom voices using the watermark can contact mstts [at] microsoft.com for details.

With the TTS watermark, customers’ synthetic voices and the audio generated with them will be marked. A detector tool can also be provided so that people can identify whether a piece of audio was created with Azure TTS or Custom Neural Voice.

Check out the samples below of TTS synthetic audios with and without a watermark embedded.

| Voice | Raw sample with Azure Neural TTS | Watermarked sample |
| --- | --- | --- |
| English (US), female | | |
| English (US), male | | |
| Chinese (Mandarin, simplified), female | | |
| Chinese (Mandarin, simplified), male | | |

One can hardly hear the difference between the samples with and without a watermark. This means that enabling the watermark does not noticeably degrade voice quality, while still allowing watermark detectors to identify the synthetic voice.

For more information

Neural TTS and Responsible AI

Our technology advancements are guided by Microsoft’s Responsible AI process, and our principles of fairness, inclusiveness, reliability & safety, transparency, privacy & security, and accountability. We put these ethical standards into practice through the Office of Responsible AI (ORA), which sets our rules and governance processes, the AI, Ethics, and Effects in Engineering and Research (Aether) Committee, which advises our leadership on the challenges and opportunities presented by AI innovations, and Responsible AI Strategy in Engineering (RAISE), a team that enables the implementation of Microsoft responsible AI rules across engineering groups.

As part of Microsoft's commitment to responsible AI, we are designing and releasing Neural TTS and Custom Neural Voice (CNV) with the intention of protecting the rights of individuals and society, fostering transparent human-computer interaction, and counteracting the proliferation of harmful deepfakes and misleading content. Check out more information regarding the responsible use of neural TTS and CNV here:

- Where can I find the transparency note and use cases for Azure Custom Neural Voice?

- Where can I find Microsoft’s general design guidelines for using synthetic voice technology?

- Where can I find information about disclosure for voice talent?

- Where can I find disclosure information on design guidelines?

- Where can I find disclosure information on design patterns?

- Where can I find Microsoft’s code of conduct for text-to-speech integrations?

- Where can I find information on data, privacy and security for Azure Custom Neural Voice?

- Where can I find information on limited access to Azure Custom Neural Voice?

- Where can I find licensing resources on Azure Custom Neural Voice?

Get started with Azure AI Neural TTS

Azure AI Neural TTS offers more than 330 neural voices across over 129 languages and locales. In addition, the Custom Neural Voice capability enables organizations to create a unique brand voice in multiple languages and styles.

For more information:

- Try the demo

- See our documentation

- Check out our sample code