This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

In the previous post, Migrate Amazon RDS for SQL Server to Azure SQL Managed Instance - Overview (microsoft.com), we have introduced an overview of the series and summarized different migration options to migrate from Amazon RDS for SQL Server to Azure SQL Managed Instance.

In this post, we shall demonstrate how to use the SQL Server native backup and restore features to migrate from Amazon RDS for SQL Server to Azure SQL Managed Instance.

This involves performing a full backup followed by differential backups on the source instance and restoring the same backups to the target SQL Managed Instance. With the help of differential backups, we significantly reduce application cutover time during the migration process.

Note that Amazon RDS for SQL Server currently does not support extraction of the transaction log backups and hence does not allow a PITR (Point in Time Restore) technique allowing to restore database to any particular moment in time.

On Azure SQL Managed Instance, the native backup and restore functionality is supported with the help of GUI, T-SQL commands, Powershell commands or REST API.

SQL Server Backup and restore

SQL Server has three database recovery models - Full, Bulk-Logged and Simple. A recovery model is a database property that controls how transactions are logged, whether the transaction log requires (and allows) backing up, and what kinds of restore operations are available.

- Full backup – Backs up the whole database, all extents from all data files, and backs up the transaction logs required in order to recover the database after a restore operation. This backup is supported in all recovery models.

- Differential backup – Backs up the extents modified since the last full backup. Like full backups, this method is supported in all recovery models.

You can use native backup restore method to migrate the databases from a SQL Server instance to an Azure SQL Managed Instance.

Many of our customers use native SQL Server backup and restore features to migrate to Azure SQL Managed Instance. When using the native SQL Server backup and restore functionality, you can simplify the database migration process by performing a full backup restore on the target SQL Managed Instance. In addition, with the help of differential and log backups, you can significantly reduce your application cutover time.

Note: RDS SQL Server doesn’t support taking log backups.

In this post, we shall walk you through a two-step process that enables you to migrate SQL Server databases to Azure SQL Managed Instance. The first step includes transferring a one-time full backup to Azure Blob Storage to create a replica of your SQL Server in SQL managed Instance. The second stage includes transfer of differential backups to keep the SQL Server in Azure SQL Managed Instance up to date until the cutover.

Amazon RDS for SQL Server supports native backup and restore for Microsoft SQL Server databases. It lets you backup individual databases from an SQL Server RDS instance. The native backup and restore functionality are supported with the help of stored procedures. These stored procedures let you create a differential or full backup of your Amazon RDS for SQL Server DB instances and store the backup files on Amazon Simple Storage Service (Amazon S3). You can restore these backups to on-premises instance that is running SQL Server or Azure SQL Managed Instance.

Prerequisites:

Before you get started, you must complete the following prerequisites:

- Set up and configure the Source RDS SQL Server instance backup option and Amazon S3 bucket by following Importing and exporting SQL Server databases.

- Prepare your target SQL Server Managed Instance running on Azure for migration.

- Ensure that the credentials used to connect to source SQL Server instance have db_owner or sysadmin permissions.

- Ensure that the credentials used to connect to target Azure SQL Database Instance have CREATE DATABASE and dbcreator permission on the target databases.

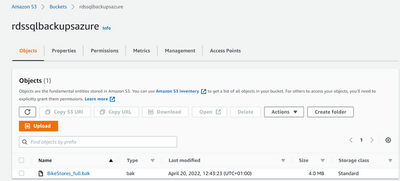

- Create a folder in S3 bucket to store backups in AWS, which is the source storage for your SQL Server backup files.

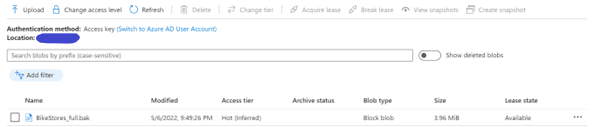

- Create a Blob storage container in Azure Storage account which is used as target storage for backup files.

- Create SAS tokens for blob in the Azure portal by following Microsoft doc

- Shared access signature (SAS) security token with read, write, and list permissions generated for the Blob Storage container

- Choose your Network connectivity for Azure blob storage. The two most common options are to communicate over the internet using the public service endpoints or over a private connection using site-to-site VPN or Express route. Follow the network requirements to configure your network environment.

- Create a Windows VM to run the AzCopy from the powershell or command prompt.

Migration walkthrough:

The migration solution consists of two stages.

In stage one, we use AZcopy for transferring one-time full backup to Azure blob storage and then restore backup to the SQL Server database on SQL Managed Instance:

- Create a full backup of SQL Server database from the RDS SQL Server Instance.

- Transfer the full backup files to Amazon S3 bucket and Azure Blob storage using AZcopy.

- After your data is loaded into a Blob storage, Restore the full database backup file system to the target SQL Server running on Azure SQL Managed Instance in a “Restoring” state.

In stage two, we use AzCopy for transferring differential backups for ongoing data changes:

- Create SQL Server differential backups to Amazon S3 bucket.

- Copy data from S3 to Blob storage using AzCopy and restore differential backups.

- When the application is ready for cutover, Restoring the final differential backup files to the target SQL Managed Instance.

Stage 1 – Full Backups

Stage 1.1: Backing up the SQL Server database from the on-premises source

Take a full backup of the RDS SQL Server database using the following code:

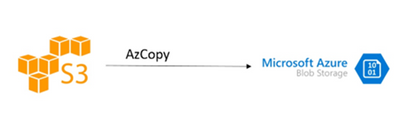

AzCopy is a command-line tool to manage and copy blobs or files to or from a storage account. It also allows you to sync storage accounts and move files from many different sources to a lot of different destinations. In our case we shall be moving files from Amazon S3 to Azure storage.

- AzCopyV10 is a fast-performing command-line tool that can be used to move data into Azure.

- It provides support for Blob, ADLS Gen2, and Azure Files with several parameters to configure the performance.

First, you will need to install AzCopy on your machine. After that, you will need to authorize AzCopy with Microsoft Azure and AWS.

AzCopy uses an access key, and secret to authenticate with AWS S3. For the destination Blob storage account, you can use any of the available authentication options (SAS token, or Azure Active Directory authentication).

Stage 1.2: Copy data from S3 to Blob storage using AzCopy.

AzCopy is a command-line tool to manage and copy blobs or files to or from a storage account. It also allows you to sync storage accounts and move files from many different sources to a lot of different destinations. In our case we shall be moving files from Amazon S3 to Azure storage.

- AzCopyV10 is a fast-performing command-line tool that can be used to move data into Azure.

- It provides support for Blob, ADLS Gen2, and Azure Files with several parameters to configure the performance.

First, you will need to install AzCopy on your machine. After that, you will need to authorize AzCopy with Microsoft Azure and AWS.

AzCopy uses an access key, and secret to authenticate with AWS S3. For the destination Blob storage account, you can use any of the available authentication options (SAS token, or Azure Active Directory authentication).

- Download AzCopy by following the documentation

- Authorize AzCopy to access your Azure Storage account and Amazon S3

|

Storage type |

Supported method |

|

Blob storage |

Azure AD and SAS |

|

Blob storage (hierarchical namespace) |

Azure AD |

|

File storage |

SAS only |

Step 1.3: Login to AzCopy using Azure AD

Login to a VM to run the AzCopy from the powershell. On Powershell, authenticate your identity by using the AzCopy login command. AzCopy uses your Azure AD account to authorize access to data in Blob storage. You will also need one of these permissions in Azure AD:

- Storage Blob Data Reader (downloads only)

- Storage Blob Data Contributor

- Storage Blob Data Owner

Run https://microsoft.com/devicelogin on your machine’s browser and enter the code provided to authenticate.

Stage 1.4: Login to AzCopy using SAS token & Get AWS access key and secret access key

To create a new key, got to IAM Management from your AWS Console, select the user, and click Create Access key button. Ensure you copy the secret key id and the secret access key as it would be required to authenticate to AWS.

You'll need to grab an Access Key ID and AWS Secret Access Key from Amazon Web Services. If you're not sure how to retrieve those, check out the AWS docs. From there, it's as easy as setting a few environment variables.

|

S3 bucket URL |

|

|

Source endpoint |

myrdsinstance.cmaee3eebtjd.us-east-1.rds.amazonaws.com |

|

Target endpoint |

mytargetsqlinstance.780f4aa8c17b.database.windows.net |

|

Azure Blob storage URL |

https://mystorageaccountname.blob.core.windows.net/mycontainer1 |

|

SAS Key |

sv=2019-02-02&ss=b&srt=sco&sp=rl&se=2023-12-02T00:09:14Z&st=2019-11-25T16:09:14Z&spr=https&sig=92kAe4QYmXaht%%3D |

The following example to Copy an object from AWS S3 bucket:

The following example to Copy a directory from AWS S3 bucket:

Now, Copy the backup file from AWS S3 bucket directory to Azure blob storage container. This example command recursively copies data from your s3 bucket to a blob container. A fictitious SAS token is appended to the end of the container URL.

Stage 1.5: Restoring the database backup file to the target SQL Managed Instance

After you transferred the file to Azure blob storage, restore a full database backup by executing the following T-SQL code.

Stage 2 – Differential Backups

In stage two, we copy differential backups for ongoing data changes or restore last differential backup before cutover:

Differential backups have all the changes since the last full backup. Because differential backups are cumulative, use the latest differential backup file to restore.

When the application is ready for cutover, perform the following steps:

- To minimize the cutover time, you may want to consider taking one final manual differential backup on source database.

- Restore the differential backup from URL on target SQL Server database using recovery option.

- When the application is ready for cutover to start using the target database endpoint on Azure SQL Managed Instance, simply point the application to the target database using the SQL Server database endpoint in Azure.

Stage 2.1 Create SQL Server differential backups to S3 bucket

Stage 2.2: Restoring the differential backup files to the target SQL Managed Instance.

Repeat the steps in the stage 1.2, 1.3, 1.4 to copy the differential backups to Azure Blob storage.

The NORECOVERY option leaves the database in a “Restoring” state after the restore has completed. This allows you to restore additional backups in the current recovery path.

Stage 2.3: Restoring the differential backup files to the target SQL Managed Instance

When the application is ready for cutover, perform the following steps:

- To minimize the cutover time, you may want to consider taking one final manual differential backup on source database.

- Restore the differential backup from URL on target SQL Server database using recovery option.

- When the application is ready for cutover to start using the target database endpoint on Azure SQL Managed Instance, simply point the application to the target database using the SQL Server database endpoint in Azure.

Summary

In this blog post, we have discussed SQL Server backup and restore option and AZCopy to migrate RDS SQL Server databases to Azure SQL Managed Instance.

In the next post, we will dive into using Azure Data Factory option for migration from Amazon RDS for SQL Server to Azure SQL Managed Instance.