This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Azure Synapse Analytics May 2022 Update

Welcome to the May 2022 update for Azure Synapse Analytics! This month, you’ll find sections on the new Azure Synapse Influencer program, Synapse Data Explorer live query in Excel, a Data Warehouse Migration guide for Dedicated SQL Pools in Azure Synapse, how to export pipeline monitoring as a CSV, and a new Azure Synapse Data Explorer connector for Microsoft Power Automate, Logic Apps, and PowerApps. Other new features are in SQL, Spark, Synapse Data Explorer, data integration, and Synapse Link.

Check out our companion video below!

Table of contents

- General

- SQL

- Apache Spark for Synapse

- Synapse Data Explorer

- Synapse Data Explorer live query in Excel

- Use Managed Identities for External SQL Server Tables

- New KQL Learn module (2 out of 3) is live!

- Azure Synapse Data Explorer connector for Microsoft Power Automate, Logic Apps, and Power Apps [Generally Available]

- Dynamic events routing from event hub to multiple databases

- Configure a database using a KQL inline script as part of JSON ARM deployment template

- Data Integration

- Synapse Link

General

Get connected with the Azure Synapse Influencer program

Join a community of Azure Synapse Influencers who are helping each other achieve more with cloud analytics! The Azure Synapse Influencer program recognizes Azure Synapse Analytics users and advocates who actively support the community by sharing Synapse-related content, announcements, and product news via social media.

Azure Synapse Influencers range in expertise and engagement level, but they all share one common goal—to help others get connected and expand their knowledge of Azure Synapse. In addition to recognizing users who regularly create and post content, this program encourages new users to get involved in community discussions.

If you’re passionate about Azure Synapse Analytics and helping your peers learn and do more with cloud analytics, this program is for you. The Azure Synapse Influencer Program brings together like-minded data professionals through social media to create a community of influencers who help each other solve challenges, get connected with a global network of users, and achieve more through Azure Synapse. Azure Synapse Influencer members will also have opportunities to collaborate directly with the Azure Synapse engineering team to create new content and highlight their expertise on specific topics.

Azure Synapse Influencers and advocates of all levels are welcome to join the program and becoming a member is easy! If you’re interested in helping to build an active community by engaging with and producing Synapse-related content, we encourage you to sign up for the Azure Synapse Influencer program today!

SQL

Data Warehouse Migration guide for Dedicated SQL Pools in Azure Synapse Analytics

With the benefits that cloud migration offers, we hear that you often look for steps, processes, or guidelines to follow for quick and easy migrations from existing data warehouse environments. We just released a set of Data Warehouse migration guides to make your transition to dedicated SQL Pools in Azure Synapse Analytics easier.

The Data Warehouse Migration Guide provides tips and best practices to make migrating workloads to dedicated SQL pools easier. It also provides guidance on the optimal approaches for migrations around Security.

The Synapse migration guide for Teradata and IBM Netezza contains the following:

- Design and Performance – Learn all about the design differences between on-premises data sources and Azure Synapse Analytics and performance tuning approaches to adopt.

- ETL and load Migration considerations – Read about the initial decisions to be considered for ETL and Data Migrations and best migration approaches to minimize your risk and impact.

- Security, access, and Operations for Migrations – Learn how to connect and migrate to Dedicated SQL Pools in Azure Synapse Analytics. Also, find workload management and automation considerations for housekeeping tasks here.

- Visualization and Reporting - This section provides guidance on migrating dashboards and reports.

- Minimize SQL Issues for Migrations – Read recommendations to simplify the task of migrating code (Stored procedures, DDL, DML, etc.).

- Microsoft and 3rd Party Tools – Learn which Microsoft and Microsoft Partner tools you can use to migrate your data.

- Implementing Modern Data Warehouse – Learn how to leverage other technologies within the Microsoft analytical eco-system to modernize your Data Warehouses.

Coming soon are guides for Oracle and SQL Server.

Check out the Data Warehouse Migration guides and embark on your journey into Azure Synapse Analytics.

Specify character column lengths... Not anymore!

Serverless SQL pools let you query files in the data lake without knowing the schema upfront. The best practice was to specify the lengths of character columns to get optimal performance. Not anymore!

Previously, you had to explicitly define the schema to get optimal query performance. In this case, the column countries_and_territories is defined as varchar(50):

With this new feature, you can get optimal query performance without having to define the schema. The serverless SQL pool will calculate the average column length for each inferred character column or character column defined as larger than 100 bytes. The schema will stay the same, while the serverless SQL pool will use the calculated average column lengths internally. It will also automatically calculate the cardinality estimation in case there was no previously created statistic. This feature works on sampled data before a user query is executed. Both average column lengths and cardinality estimations are stored and reused for other queries targeting the same source. In this case, the column countries_and_territories is inferred as varchar(8000) while calculated average column length will be used for resource estimations:

Character column lengths are used for query resource estimations as well as cardinality estimations when there are no previously created statistics. Both have a significant impact on query performance. With this recent improvement, you can stop worrying about schema and focus on delivering value to your customers.

The best part? It works for both OPENROWSET and external tables out of the box, as it will be triggered whenever you omit schema or specify character column length larger than 100 bytes.

Apache Spark for Synapse

Azure Synapse Dedicated SQL Pool Connector for Apache Spark Now Available in Python

Previously, the Azure Synapse Dedicated SQL Pool connector was only available using Scala. Now, it can be used with Python on Spark 3. The only difference between the Scala and Python implementations is the optional Scala callback handle, which allows you to receive post-write metrics.

The following are now supported in Python on Spark 3:

- Read using AAD Authentication or Basic Authentication

- Write to Internal Table using AAD Authentication or Basic Authentication

- Write to External Table using AAD Authentication or Basic Authentication

Below is an example of Read using AAD Authentication:

And Write using AAD Authentication (Internal Table):

To learn more about the connector in Python, read Azure Synapse Dedicated SQL Pool Connector for Apache Spark.

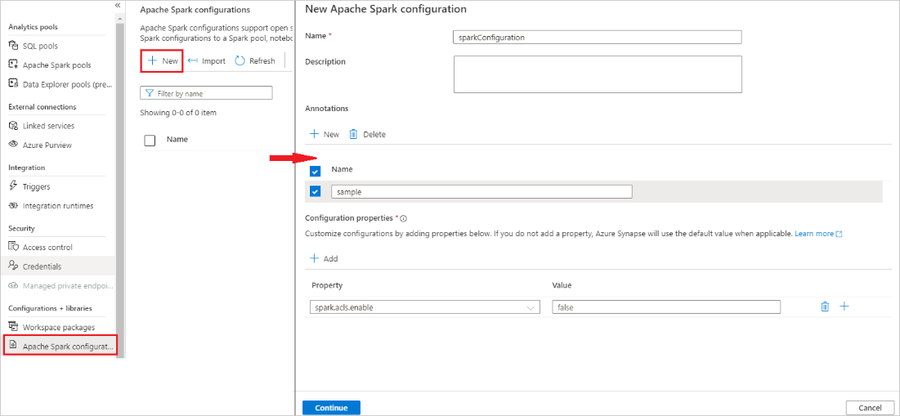

Manage Azure Synapse Apache Spark configuration

Apache Spark configuration management is always a challenging task because Spark has hundreds of properties. It is also challenging for you to know the optimal value for Spark configurations. With the new Spark configuration management feature, you can create a standalone Spark configuration artifact with auto-suggestions and built-in validation rules. The Spark configuration artifact allows you to share your Spark configuration within and across Azure Synapse workspaces. You can also easily associate your Spark configuration with a Spark pool, a Notebook, and a Spark job definition for reuse and minimize the need to copy the Spark configuration in multiple places.

To learn more about the new Spark configuration management feature, read Manage Apache Spark configuration

Synapse Data Explorer

Synapse Data Explorer live query in Excel

Using the new Data Explorer web experience Open in Excel feature, you can now provide access to live results of your query by sharing the connected Excel Workbook with colleagues and team members. You can open the live query in an Excel Workbook and refresh it directly from Excel to get the most up to date query results.

Using Open in Excel option In Synapse Data Explorer

To create an Excel Workbook connected to Synapse Data Explorer, start by running a query in the Web experience. Once you have a result set you are happy with, click the 'Open in Excel' button, located under the 'Share' menu:

The WebUI will create and download an Excel workbook that is connected to Data Explorer. Open the downloaded file and continue your work in Excel.

Excel integration

Once you’ve opened the exported file in Excel, the query results will be displayed. You may need to enter credentials to access the data.

The Excel workbook that was just created is connected to Synapse Data Explorer and is refreshable. Simply refresh your Excel workbook with new data from Data Explorer and all related Excel artifacts, like charts and pivot tables, will be updated with the latest data.

This new integration between Synapse Data Explorer and Excel is yet another way to help you be more productive using Azure data and the Office suite.

To learn more about Excel live query, read Open live query in Excel.

Use Managed Identities for External SQL Server Tables

One of the key benefits of Azure Synapse is the ability to bring together data integration, enterprise data warehousing, and big data analytics. With Managed Identity support, Synapse Data Explorer table definition is now simpler and more secure. You can now use managed identities instead of entering in your credentials.

An external SQL table is a schema entity that references data stored outside the Synapse Data Explorer database. Using the Create and alter SQL Server external tables command, External SQL tables can easily be added to the Synapse Data Explorer database schema.

The following option was added to the SqlServerConnectionString in the Create/Alter Table command:

- Authentication="Active Directory Managed Identity" for a system-assigned managed identity or

- Authentication="Active Directory Managed Identity";User Id={object_id} for a user-assigned managed identity with its object ID.

To learn more about managed identities, read Managed identities overview.

To learn more about external tables, read Create and alter SQL Server external tables.

New KQL Learn module (2 out of 3) is live!

The power of Kusto Query Language (KQL) is its simplicity to query structured, semi-structured, and unstructured data together. To make it easier for you to learn KQL, we are releasing Learn modules. Previously, we released Write your first query with Kusto Query Language. New this month is Gain insights from your data by using Kusto Query Language.

KQL is the query language used to query Synapse Data Explorer big data. KQL has a fast-growing user community, with hundreds of thousands of developers, data engineers, data analysts, and students.

Check out the newest KQL Learn Model and see for yourself how easy it is to become a KQL master.

To learn more about KQL, read Kusto Query Language (KQL) overview.

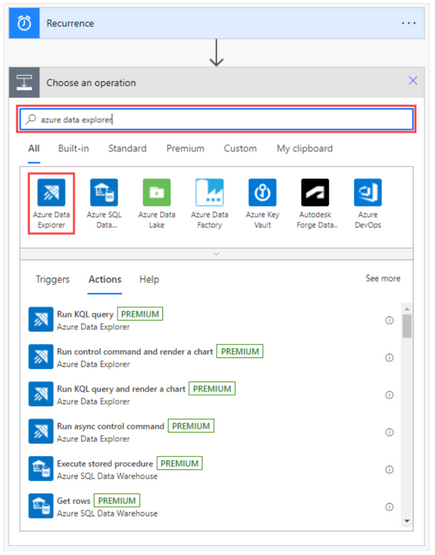

Azure Synapse Data Explorer connector for Microsoft Power Automate, Logic Apps, and Power Apps [Generally Available]

The Azure Data Explorer connector for Power Automate enables you to orchestrate and schedule flows, send notifications, and alerts, as part of a scheduled or triggered task.

In Power Automate, you can:

- Send notifications and alerts based on query results, such as when thresholds exceed certain limits.

- Send regular, such as daily or weekly, reports containing tables and charts.

- Schedule regular jobs using control commands on clusters. For example, copy data from one table to another using the .set-or-append command.

- Export and import data between Azure Data Explorer and other databases.

To create a new flow using the Azure Data Explorer connector:

- Sign-in to Power Automate

- Create a New scheduled Cloud Flow

- Add a Data Explorer operation by selecting one of the supported Azure Data Explorer actions:

The supported Azure Data Explorer actions are:

- Run KQL Query: This action sends a query to the specified cluster.

- Run KQL query and render a chart: Use this action to visualize a KQL query result as a table or chart.

- Run async control command: This action runs control command in async mode and returns its ID, state, and status on completion.

- Run control command and render a chart: Use this action to run a control command and get the result as a chart of your choice.

- Run show control command: This action runs the show control command and returns the result.

In addition, you can include a step in any flow to send reports by email, to any email address.

To learn more about the Azure Synapse Data Explorer connector for Microsoft Power Automate, Logic Apps, and Power Apps, read Azure Data Explorer connector for Microsoft Power Automate and Usage examples for Azure Data Explorer connector to Power Automate.

The following features are Standalone Azure Data Explorer features. Linked services can be used to connect the Azure Data Explorer (ADX) databases to Synapse.

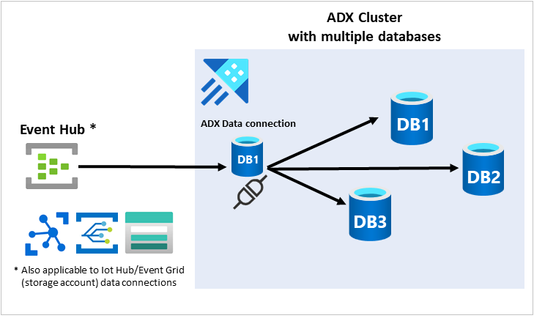

Dynamic events routing from event hub to multiple databases

Routing events from Event Hub/IOT Hub/Event Grid is an activity commonly performed by Azure Data Explorer (ADX) users. Previously, you could route events only to a single database per defined connection. If you wanted to route the events to multiple databases, you needed to create multiple ADX cluster connections.

To simplify the experience, we now support routing events data to multiple databases hosted in a single ADX cluster.

Routing data to an alternate database is off by default. To send the data to a different database, you must first set the connection as a multi-database connection. You can do this in the Azure portal Azure portal, C#, Python, or an ARM template.

For example, the configuration option in the Portal is:

To learn more about dynamic routing, read Ingest from event hub.

Configure a database using a KQL inline script as part of JSON ARM deployment template

Previously, Azure Data Explorer supported running a Kusto Query Language (KQL) script to configure your database during Azure Resource Management (ARM) template deployment. Now, this can be done using an inline script. The script is provided inline as a parameter to a JSON ARM template.

A script is a list of one or more control commands, each separated by one line break, and is created as a resource that will be accessed with the ARM template. The script can only run control commands that start with:

- .create

- .create-or-alter

- .create-merge

- .alter

- .alter-merge

The following is an example of how the inline parameter is defined in the JSON ARM template:

To learn more about using a KQL inline script, read Configure a database using a Kusto Query Language script

Data Integration

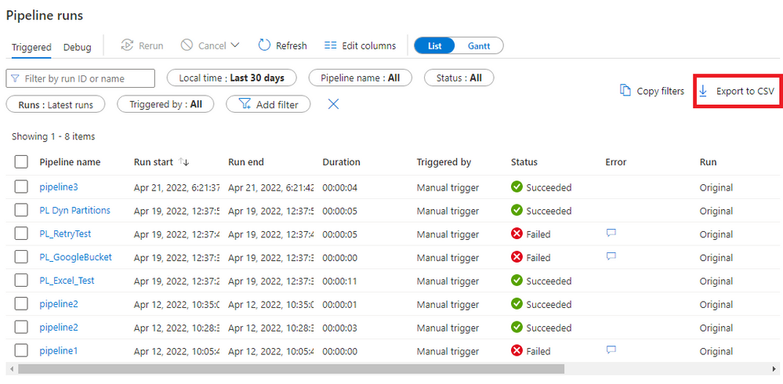

Export pipeline monitoring as a CSV

The ability to export pipeline monitoring to CSV has been added after receiving many community requests for the feature. Simply filter the Pipeline runs screen to the data you want and click ‘Export to CSV’.

To learn more about exporting pipeline monitoring and other monitoring improvements, read Azure Data Factory monitoring improvements.

Incremental data loading made easy for Synapse and Azure Database for PostgreSQL and MySQL

In a data integration solution, incrementally loading data after an initial full data load is a widely used scenario. Automatic incremental source data loading is now natively available for Synapse SQL and Azure Database for PostgreSQL and MySQL. With a simple click, users can “enable incremental extract” and only inserted or updated rows will be read by the pipeline.

To learn more about incremental data loading, read Incrementally copy data from a source data store to a destination data store.

User-Defined Functions for Mapping Data Flows [Public Preview]

We hear you that you can find yourself doing the same string manipulation, math calculations, or other complex logic several times. Now, with the new user-defined function feature, you can create customized expressions that can be reused across multiple mapping data flows. User-defined functions will be grouped in libraries to help developers group common sets of functions.

Once you’ve created a data flow library, you can add in your user-defined functions. You can even add in multiple arguments to make your function more reusable.

To learn more about user-defined functions, read User defined functions in mapping data flows

Assert Error Handling

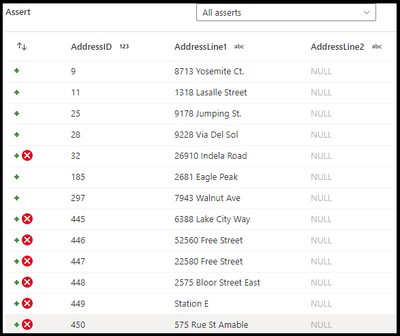

Error handling has now been added to sinks following an assert transformation. Assert transformations enable you to build custom rules for data quality and data validation. You can now choose whether to output the failed rows to the selected sink or to a separate file.

This example shows which rows failed your assertions:

To learn more about error handling, read Assert data transformation in mapping data flow

Mapping data flows projection editing

New UI updates have been made to source projection editing in mapping data flows. You can now update source projection column names and column types with the click of a button.

To learn more about source projection editing, read Source transformation in mapping data flow

Synapse Link

Azure Synapse Link for SQL [Public Preview]

At Microsoft Build 2022, we announced the Public Preview availability of Azure Synapse Link for SQL, for both SQL Server 2022 and Azure SQL Database. Data-driven, quality insights are critical for companies to stay competitive. The speed to achieve those insights can make all the difference. The costly and time-consuming nature of traditional ETL and ELT pipelines are no longer enough. With this release, you can now take advantage of low- and no-code, near real-time data replication from your SQL-based operational stores into Azure Synapse Analytics. This makes it easier to run BI reporting on operational data in near real-time, with minimal impact on your operational store.

To learn more, read Announcing the Public Preview of Azure Synapse Link for SQL and watch our YouTube video.